DavidGraham


What's the current triangle rate for Titan RTX? How is it even calculated nowadays?



Any luck, guys?

The setup rate and fragment output are the same as in Pascal. The geometry processing (culling/tessellation/projection) throughput is a bit higher, since Turing has many more multiprocessors to balance the workload within a GPC.
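As a rough sketch of how a peak triangle (setup) rate is usually estimated: multiply the number of setup/raster units by the triangles each handles per clock and by the clock speed. The inputs below are assumptions for illustration only (one triangle per clock per GPC, 6 GPCs on TU102, a 1770 MHz boost clock for Titan RTX); real sustained clocks vary, so treat the result as a theoretical peak, not a measurement.

```python
def peak_triangle_rate(gpcs: int, tris_per_clock_per_gpc: float, clock_mhz: float) -> float:
    """Theoretical peak setup rate in billions of triangles per second (Gtri/s)."""
    tris_per_clock = gpcs * tris_per_clock_per_gpc
    return tris_per_clock * clock_mhz * 1e6 / 1e9

# Assumed Titan RTX figures: 6 GPCs, 1 triangle/clock each, 1770 MHz boost clock.
titan_rtx = peak_triangle_rate(gpcs=6, tris_per_clock_per_gpc=1.0, clock_mhz=1770)
print(f"Titan RTX: {titan_rtx:.2f} Gtri/s")
```

Because the setup rate is said to be unchanged from Pascal, the same formula applies to both generations; only the GPC count and clock change.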

> How much of that is clock speed related?

I think most of it. Turing has 1 geometry unit for every 2 SMs, right? That gives the 2080 24 units against the 1080 Ti's 28. The higher clock speeds and maybe some slight efficiency improvements seem to line up with the tessellation benchmarks. I'm not aware of any reviews that tested geometry throughput with anything other than TessMark and the Microsoft tessellation benchmark used by PCGamesHardware. Other than mesh shading, Nvidia doesn't mention geometry improvements in any of its documents.
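A back-of-the-envelope version of the reasoning above, assuming one geometry (PolyMorph) unit per SM on Pascal and one per two SMs on Turing, and equal work per unit per clock. The SM counts (28 for the GTX 1080 Ti, 46 for the shipping RTX 2080, 48 for a full TU104) and reference boost clocks (1582 MHz and 1710 MHz) are assumptions added here for illustration.

```python
def geometry_units(sms: int, sms_per_unit: int) -> int:
    """Geometry units, assuming one unit per `sms_per_unit` SMs."""
    return sms // sms_per_unit

def relative_throughput(units_a: int, clock_a_mhz: float,
                        units_b: int, clock_b_mhz: float) -> float:
    """Ratio of A's to B's peak geometry throughput (units x clock)."""
    return (units_a * clock_a_mhz) / (units_b * clock_b_mhz)

gtx_1080_ti = geometry_units(28, sms_per_unit=1)  # Pascal: assumed one unit per SM
rtx_2080 = geometry_units(46, sms_per_unit=2)     # Turing: assumed one unit per 2 SMs
full_tu104 = geometry_units(48, sms_per_unit=2)   # fully enabled TU104

ratio = relative_throughput(rtx_2080, 1710, gtx_1080_ti, 1582)
print(gtx_1080_ti, rtx_2080, full_tu104)
print(f"RTX 2080 / GTX 1080 Ti: {ratio:.2f}x")
```

The ratio is only as good as the assumed unit counts, clocks and per-unit efficiency; it is a model to reason with, not a benchmark result.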

> I'm not aware of any reviews that tested geometry throughput with anything other than TessMark and the Microsoft tessellation benchmark used by PCGamesHardware.

There were a handful of reviews that used other tests ... Comptoir-hardware used PixMark Julia 32, GIMark and TessMark.


> Nvidia doesn't need to push geometry performance any higher. They can just stop lobbying for games to use effects that generate sub-pixel triangles, like HairWorks in The Witcher 3.

They will start to lobby for mesh shaders and replacing the geometry pipeline instead.

The geometry workload is distributed at the SM level; there is no shared hardware for it. Nvidia simply throttles how many cycles the SMs can spend on geometry, and since Turing has plenty of them, it would be overkill (and power-draining) to keep processing primitives at full tilt if the next pipeline stage can't consume that much data. This is evident from how the number of SMs per GPC has risen steadily since Kepler.

Even within a generation there is a difference: 3 cycles per polygon for each SM in GM200 and 2 cycles for GM204.
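Taking the per-SM figures above at face value, a chip's peak geometry rate is its SM count divided by the cycles each SM needs per polygon. The SM counts for a full GM200 (24) and a full GM204 (16) are assumptions added here; the cycles-per-polygon figures come from the post.

```python
from fractions import Fraction

def polys_per_clock(sms: int, cycles_per_poly_per_sm: int) -> Fraction:
    """Peak polygons per clock if every SM retires one polygon every N cycles."""
    return Fraction(sms, cycles_per_poly_per_sm)

gm200 = polys_per_clock(24, 3)  # 3 cycles per polygon per SM (from the post)
gm204 = polys_per_clock(16, 2)  # 2 cycles per polygon per SM (from the post)
print(gm200, gm204)
```

Under these assumptions both chips land on the same per-clock figure even though GM200 has 50% more SMs, which is consistent with capping per-SM geometry throughput rather than letting it scale with the SM count.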

> I wasn't aware they artificially throttled geometry rates. In one of PCGamesHardware's GPU reviews a while back, they had a special bench showing geometry rates for various levels of culling, and GeForce cards fell further behind AMD as culling percentages got lower. I think AMD cards were over twice as fast at 0% culled.

The test with 0% culling was hitting the setup pipelines full time, so in this case the limit was not in geometry processing. Older generations of Nvidia GPUs were still rasterizing at half-rate (sans tessellation) and AMD could have benchmarked better then.
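A simple two-stage model of why a 0% culling test stresses setup rather than geometry processing: if the front end processes G primitives per clock, setup accepts S triangles per clock, and a fraction c is culled before setup, input throughput is min(G, S / (1 - c)). The G and S values below are hypothetical and chosen only to show the shape of the curve.

```python
def primitive_throughput(geom_rate: float, setup_rate: float, cull_fraction: float) -> float:
    """Input primitives per clock under a simple two-stage model.

    Culled primitives never reach setup, so setup only limits the surviving share.
    """
    if not 0.0 <= cull_fraction <= 1.0:
        raise ValueError("cull_fraction must be in [0, 1]")
    if cull_fraction == 1.0:
        return geom_rate  # nothing survives to setup
    return min(geom_rate, setup_rate / (1.0 - cull_fraction))

# Hypothetical GPU: 12 primitives/clock of geometry processing, 6 triangles/clock of setup.
for c in (0.0, 0.25, 0.5, 0.75):
    print(f"{c:.0%} culled: {primitive_throughput(12, 6, c):.1f} prims/clk")
```

At low culling rates the setup stage is the ceiling; only once enough primitives are culled does the geometry-processing rate become the limit.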

> What's the rasterization rate for Pascal and Turing? Has it changed since Maxwell?

No performance changes, only feature updates like higher tier conservative rasterization support.

https://www.tomshardware.com/news/nvidia-geforce-rtx-t10-8-tu102,40166.html

AIDA64, a widely used system information, diagnostics, and benchmarking tool, has added information for a mysterious, unannounced Nvidia GeForce RTX T10-8 graphics card, which is apparently based on the TU102 die.

Currently, there are four Nvidia graphics cards that use the Turing TU102 silicon. The GeForce Titan RTX and GeForce RTX 2080 Ti hail from Nvidia's mainstream product line, while the Quadro RTX 8000 and Quadro RTX 6000 belong to the enterprise side. Thanks to AIDA64's latest changelog, we're almost certain that Nvidia is working on another TU102-based graphics card behind the scenes.

The GeForce RTX moniker implies that the unknown graphics card is most likely aimed at the gaming market. That's practically the only clue we have at the moment. So it could be something like a GeForce RTX 2080 Ti Super or GeForce Titan RTX Black. However, we're more inclined toward the former, since the GeForce Titan RTX already employs a maxed-out TU102 die.

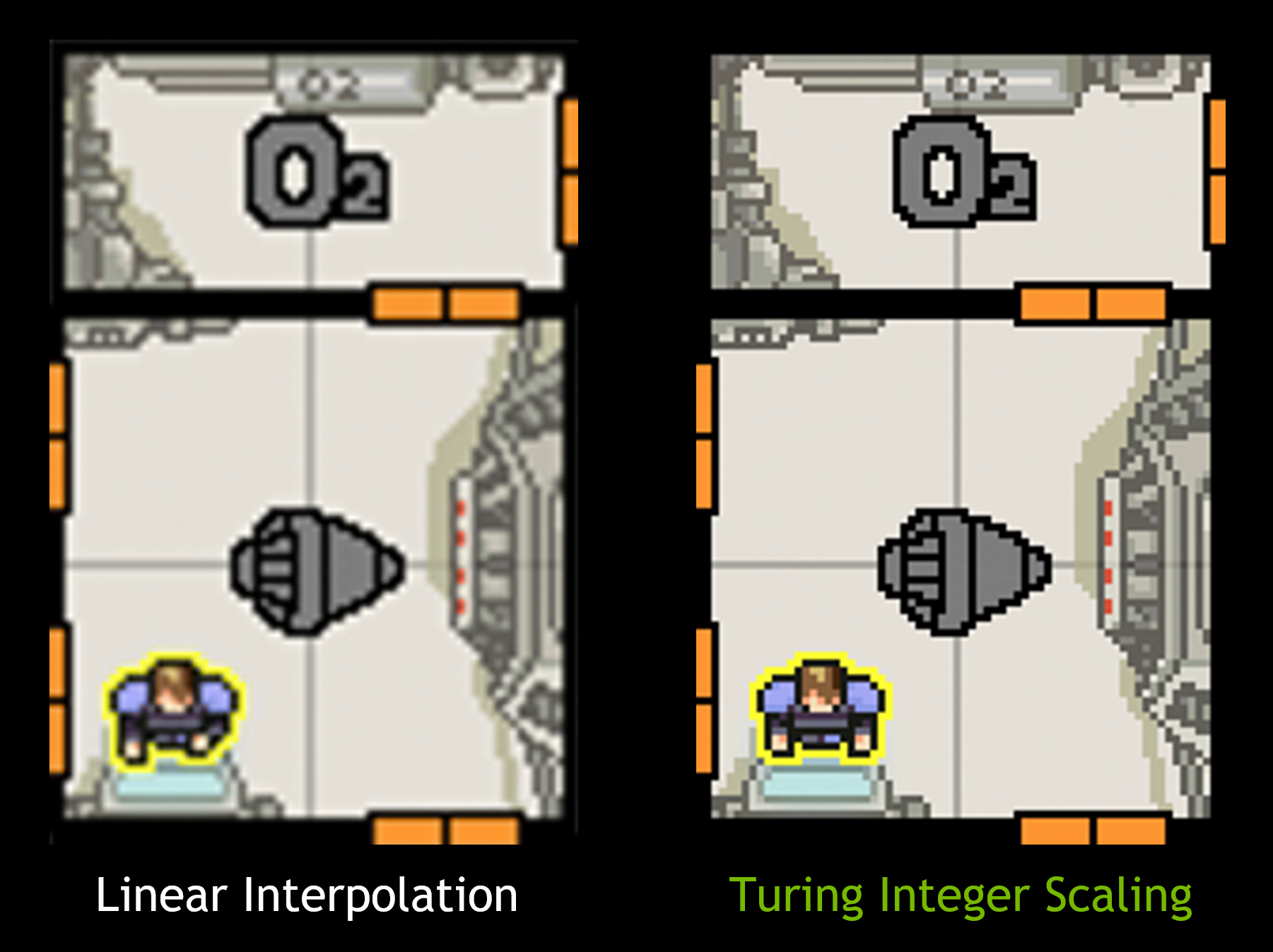

The GeForce 436.02 Gamescom-special drivers, among several new features and performance updates, introduced integer scaling: a resolution-upscaling algorithm that scales extremely low-resolution visuals up to more eye-pleasing, blocky pixelated lines by multiplying pixels in a "nearest-neighbor" pattern without changing their color. This is in contrast to bilinear upscaling, which blurs the image by attempting to add details where none exist, altering the colors of multiplied pixels.
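The difference described above can be sketched on a tiny grayscale image stored as nested lists. Integer scaling replicates each source pixel into a k x k block, so no new values appear; linear interpolation (shown in 1D for brevity, with a simplified left-aligned sample mapping) blends neighbors and produces intermediate values. This is a generic illustration, not NVIDIA's driver or hardware implementation.

```python
def integer_scale(img, k):
    """Nearest-neighbor upscale by integer factor k: each pixel becomes a k x k block."""
    return [[px for px in row for _ in range(k)] for row in img for _ in range(k)]

def linear_scale_1d(row, k):
    """Upscale one row by factor k with linear interpolation (for contrast)."""
    n = len(row)
    out = []
    for i in range(n * k):
        x = i / k                    # map output index back to a source coordinate
        x0 = min(int(x), n - 1)      # left neighbor, clamped at the edge
        x1 = min(x0 + 1, n - 1)      # right neighbor, clamped at the edge
        t = x - x0                   # blend weight toward the right neighbor
        out.append(row[x0] * (1 - t) + row[x1] * t)
    return out

src = [[0, 255],
       [255, 0]]
big = integer_scale(src, 2)
print(big)
print(linear_scale_1d([0, 255], 2))
```

The integer-scaled image contains only the original values 0 and 255, while the interpolated row introduces 127.5, a color that was never in the source: that intermediate value is the "blur" the excerpt refers to.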

....

NVIDIA's integer upscaling feature has been added only to its "Turing" architecture GPUs (both RTX 20-series and GTX 16-series), not to older generations. NVIDIA explains that this is thanks to a "hardware-accelerated programmable scaling filter" that was introduced with "Turing." Why is this a big deal? The gaming community has a newfound love for retro games from the '80s through the '90s, with the growing popularity of emulators and old games being played through DOSBox. Many small indie game studios are responding to this craze with hundreds of new titles taking a neo-retro pixelated aesthetic (e.g., "Fez").

....

Intel originally announced an integer upscaler this June that will be exclusive to the company's new Gen11 graphics architecture, since older generations of its iGPUs "lack the hardware requirements" to pull it off. Intel's driver updates that add integer scaling are set to arrive toward the end of this month, and even when they do, only a tiny fraction of Intel hardware will actually benefit from the feature (notebooks and tablets that use "Ice Lake" processors).

NAS boosts Wolfenstein: Youngblood performance by up to 15%

"Using VRS [Variable Rate Shading], we created NVIDIA Adaptive Shading (NAS), which combines two forms of VRS into one content aware option"

August 26, 2019

NAS Deep Dive

https://www.nvidia.com/en-us/geforce/news/nvidia-adaptive-shading-a-deep-dive/
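To make the NAS description above more concrete, here is a hypothetical per-tile shading-rate selector that combines two signals. It assumes the two forms of VRS are a content-based one (low on-screen detail) and a motion-based one (fast movement hides detail); the metrics, thresholds, and the rule of taking the coarser proposal are all illustrative assumptions, not NVIDIA's actual algorithm.

```python
from enum import IntEnum

class Rate(IntEnum):
    """Shading rate as pixels per shading sample along each axis (1x1, 2x2, 4x4)."""
    FULL = 1
    HALF = 2
    QUARTER = 4

def content_rate(contrast: float) -> Rate:
    # Hypothetical thresholds on a 0..1 per-tile contrast measure from the previous frame.
    if contrast < 0.02:
        return Rate.QUARTER
    if contrast < 0.08:
        return Rate.HALF
    return Rate.FULL

def motion_rate(pixels_per_frame: float) -> Rate:
    # Hypothetical thresholds: fast-moving tiles appear blurred, so detail is less visible.
    if pixels_per_frame > 16:
        return Rate.QUARTER
    if pixels_per_frame > 4:
        return Rate.HALF
    return Rate.FULL

def tile_rate(contrast: float, pixels_per_frame: float) -> Rate:
    # Assumption: either signal alone may justify coarser shading, so take the coarser one.
    return max(content_rate(contrast), motion_rate(pixels_per_frame))

print(tile_rate(0.20, 0.0).name)   # detailed, static tile
print(tile_rate(0.05, 0.0).name)   # low-contrast tile
print(tile_rate(0.20, 20.0).name)  # fast-moving tile
```

A more conservative variant would take the finer of the two proposals (`min` instead of `max`), trading less performance gain for lower risk of visible quality loss.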