DmitryKo

Introduction to DirectX Raytracing: Overview of Ray Tracing

Peter Shirley, NVIDIA

http://intro-to-dxr.cwyman.org/presentations/IntroDXR_RayTracingOverview.pdf

7

Ray Tracing Versus Rasterization for primary visibility

Rasterization: stream triangles to pixel buffer to see what pixels they cover

Ray Tracing: stream pixels to triangle buffer to see what triangles cover them
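
The two loop orders above can be sketched as the same coverage test wrapped in swapped nested loops. This is a toy 2D illustration, not code from the slides: `edge`, `covers`, `rasterize`, and `ray_trace` are hypothetical names, and a real ray tracer would intersect 3D rays with triangles rather than test 2D points.

```python
def edge(a, b, p):
    """Signed area term: positive when p lies to the left of the directed edge a->b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def covers(tri, p):
    """True when point p is inside (or on the border of) triangle tri, for either winding."""
    a, b, c = tri
    w0, w1, w2 = edge(a, b, p), edge(b, c, p), edge(c, a, p)
    return (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0)


def pixel_centers(width, height):
    """Sample each pixel at its centre."""
    return [(x + 0.5, y + 0.5) for y in range(height) for x in range(width)]


def rasterize(triangles, width, height):
    """Outer loop over triangles: each triangle tests the pixels."""
    hits = set()
    for tri_id, tri in enumerate(triangles):
        for p in pixel_centers(width, height):
            if covers(tri, p):
                hits.add((p, tri_id))
    return hits


def ray_trace(triangles, width, height):
    """Outer loop over pixels: each pixel (standing in for a ray) tests the triangles."""
    hits = set()
    for p in pixel_centers(width, height):
        for tri_id, tri in enumerate(triangles):
            if covers(tri, p):
                hits.add((p, tri_id))
    return hits


# Assumed example scene: two overlapping triangles on an 8x8 pixel grid.
TRIANGLES = [((0, 0), (8, 0), (0, 8)), ((2, 2), (7, 3), (4, 7))]
```

Both functions return the same set of (pixel, triangle) pairs; only the traversal order differs, and with it the memory access pattern.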

8

Introduction to DirectX Raytracing: Overview and Introduction to Ray Tracing Shaders

Chris Wyman, NVIDIA

http://intro-to-dxr.cwyman.org/presentations/IntroDXR_RaytracingShaders.pdf

15-21

DirectX Rasterization Pipeline

What do shaders do in today’s widely-used rasterization pipeline?

• Run a shader, the vertex shader, on each vertex sent to the graphics card

– This usually transforms it to the right location relative to the camera

• Group vertices into triangles, then run tessellation shaders to allow GPU subdivision of geometry

– Includes 3 shaders with different goals, the hull shader, tessellator shader, and domain shader

• Run a shader, the geometry shader, on each tessellated triangle

– Allows computations that need to occur on a complete triangle, e.g., finding the geometric surface normal

• Rasterize our triangles (i.e., determine the pixels they cover)

– Done by special-purpose hardware rather than user-software

– Only a few developer controllable settings

• Run a shader, the pixel shader (or fragment shader), on each pixel generated by rasterization

– This usually computes the surface’s color

• Merge each pixel into the final output image (e.g., doing blending)

– Usually done with special-purpose hardware

– Hides optimizations like memory compression and converting image formats

22

Squint a bit, and that pipeline looks like:

Input: Set of Triangles

Shader(s) to transform vertices into displayable triangles → Rasterizer → Shader to compute color for each rasterized pixel → Output (ROP)

Output: Final Image

23-25

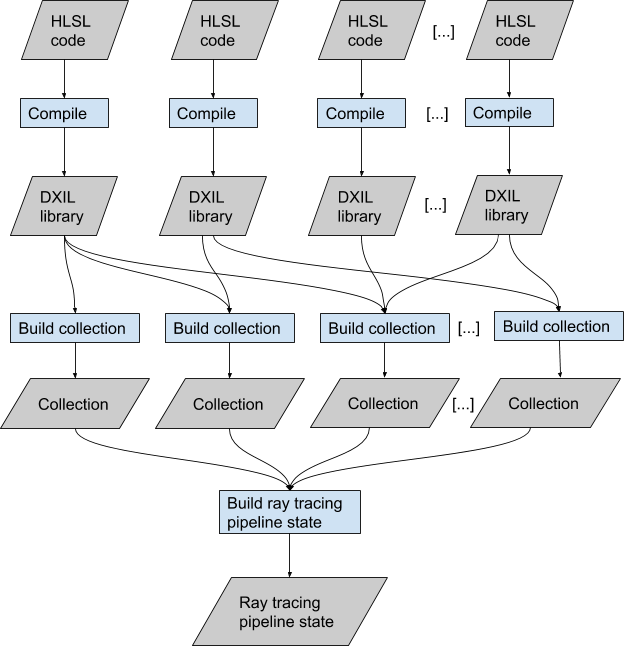

DirectX Ray Tracing Pipeline

So what might a simplified ray tracing pipeline look like?

Input: Set of Pixels

Take input pixel position, generate ray(s) → Intersect Rays With Scene → Shade hit points; (Optional) generate recursive ray(s) → Output

Output: Final Image

One advantage of ray tracing:

– Algorithmically, much easier to add recursion

26-33

Pipeline is split into five new shaders:

– A ray generation shader defines how to start ray tracing - Runs once per algorithm (or per pass)

– Intersection shader(s) define how rays intersect geometry - Defines geometric shapes, widely reusable

– Miss shader(s) define behavior when rays miss geometry }

– Closest-hit shader(s) run once per ray (e.g., to shade the final hit) } – Defines behavior of ray(s) – Different between shadow, primary, indirect rays

– Any-hit shader(s) run once per hit (e.g., to determine transparency) }

An new, unrelated sixth shader:

– A callable shader can be launched from another shader stage - Abstraction allows this; explicitly expose it

| Key Concept | Rasterization | Ray Tracing |
| --- | --- | --- |
| Fundamental question | What pixels does geometry cover? | What is visible along this ray? |
| Key operation | Test if pixels are inside a triangle | Ray-triangle intersection |
| How streaming works | Stream triangles (each triangle tests pixels) | Stream rays (each ray tests intersections) |
| Inefficiencies | Shade many triangles per pixel (overdraw) | Test many intersections per ray |
| Acceleration structure | (Hierarchical) Z-buffering | Bounding volume hierarchies |
| Drawbacks | Incoherent queries difficult to make | Traverses memory incoherently |

Introduction to DirectX Raytracing: Overview and Introduction to Ray Tracing Shaders

Chris Wyman, NVIDIA

http://intro-to-dxr.cwyman.org/presentations/IntroDXR_RaytracingShaders.pdf

15-21

DirectX Rasterization Pipeline

What do shaders do in today’s widely-used rasterization pipeline?

• Run a shader, the vertex shader, on each vertex sent to the graphics card

– This usually transforms it to the right location relative to the camera

• Group vertices into triangles, then run tessellation shaders to allow GPU subdivision of geometry

– Includes 3 shaders with different goals, the hull shader, tessellator shader, and domain shader

• Run a shader, the geometry shader, on each tessellated triangle

– Allows computations that need to occur on a complete triangle, e.g., finding the geometric surface normal

• Rasterize our triangles (i.e., determine the pixels they cover)

– Done by special-purpose hardware rather than user software

– Only a few developer-controllable settings

• Run a shader, the pixel shader (or fragment shader), on each pixel generated by rasterization

– This usually computes the surface’s color

• Merge each pixel into the final output image (e.g., doing blending)

– Usually done with special-purpose hardware

– Hides optimizations like memory compression and converting image formats

22

Squint a bit, and that pipeline looks like:

Input: Set of Triangles

Shader(s) to transform vertices into displayable triangles → Rasterizer → Shader to compute color for each rasterized pixel → Output (ROP)

Output: Final Image
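
As a rough illustration of that squinted view, the stages can be written as plain functions chained together. This is a minimal software sketch under stated assumptions, not how the GPU implements it: all function names are hypothetical, the "vertex shader" only applies an offset instead of camera matrices, and the "pixel shader" simply returns a flat color.

```python
def edge(a, b, p):
    """Signed area term used for the inside test and barycentric weights."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def vertex_shader(vertex, offset):
    """Transform one vertex (assumption: a simple 2D shift)."""
    x, y, z = vertex
    return (x + offset[0], y + offset[1], z)


def rasterizer(tri, width, height):
    """Yield (x, y, depth) for every pixel centre the triangle covers."""
    a, b, c = tri
    area = edge(a, b, c)
    if area == 0:
        return  # degenerate triangle covers nothing
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)
            # Barycentric weights; dividing by the signed area handles both windings.
            w0 = edge(b, c, p) / area
            w1 = edge(c, a, p) / area
            w2 = edge(a, b, p) / area
            if w0 >= 0 and w1 >= 0 and w2 >= 0:
                yield x, y, w0 * a[2] + w1 * b[2] + w2 * c[2]


def pixel_shader(material):
    """Compute the color of one rasterized pixel (assumption: flat color)."""
    return material


def output_merger(color_buf, depth_buf, x, y, depth, color):
    """Merge a shaded pixel into the image; here only a depth test."""
    if depth < depth_buf[y][x]:
        depth_buf[y][x] = depth
        color_buf[y][x] = color


def draw(triangles, width, height, offset=(0.0, 0.0)):
    """Input: set of triangles. Output: final image as rows of colors."""
    color_buf = [[None] * width for _ in range(height)]
    depth_buf = [[float("inf")] * width for _ in range(height)]
    for vertices, material in triangles:
        tri = [vertex_shader(v, offset) for v in vertices]
        for x, y, depth in rasterizer(tri, width, height):
            output_merger(color_buf, depth_buf, x, y, depth, pixel_shader(material))
    return color_buf


# Assumed example: a near blue triangle submitted before a far red one.
SCENE = [
    (((0, 0, 1), (2, 0, 1), (0, 2, 1)), "blue"),
    (((0, 0, 5), (4, 0, 5), (0, 4, 5)), "red"),
]
IMAGE = draw(SCENE, 4, 4)
```

The red triangle is shaded where it overlaps the blue one and then rejected by the depth test, which is the overdraw cost of streaming triangles.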

23-25

DirectX Ray Tracing Pipeline

So what might a simplified ray tracing pipeline look like?

Input: Set of Pixels

Take input pixel position, generate ray(s) → Intersect Rays With Scene → Shade hit points; (Optional) generate recursive ray(s) → Output

Output: Final Image

One advantage of ray tracing:

– Algorithmically, much easier to add recursion
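
The recursion point can be illustrated with a small sketch of the generate, intersect, shade loop, where shading may spawn another ray. Everything here is an assumption made for illustration: the scene is a list of spheres, `trace`, `render`, and `hit_sphere` are hypothetical names, and the only recursive effect is a mirror reflection capped at `max_depth`.

```python
import math

BACKGROUND = (0.2, 0.4, 0.8)  # assumed color returned when a ray hits nothing


def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def hit_sphere(origin, direction, center, radius):
    """Nearest ray parameter t > epsilon for a unit-length direction, or None."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-4:
            return t
    return None


def trace(origin, direction, scene, depth=0, max_depth=3):
    """Intersect the ray with the scene, shade the hit, optionally recurse."""
    nearest = None
    for center, radius, color, reflectivity in scene:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, center, color, reflectivity)
    if nearest is None:
        return BACKGROUND
    t, center, color, reflectivity = nearest
    if reflectivity == 0 or depth >= max_depth:
        return color
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, center))
    reflected = sub(direction, scale(normal, 2 * dot(direction, normal)))
    bounce = trace(point, reflected, scene, depth + 1, max_depth)  # recursive ray
    return add(scale(color, 1 - reflectivity), scale(bounce, reflectivity))


def render(width, height, scene):
    """Input: set of pixels. One primary ray per pixel through a plane at z = -1."""
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            u = (x + 0.5) / width * 2 - 1
            v = 1 - (y + 0.5) / height * 2
            row.append(trace((0.0, 0.0, 0.0), normalize((u, v, -1.0)), scene))
        image.append(row)
    return image
```

Adding the reflection took one extra call to `trace` inside the shading step; the equivalent effect in a rasterizer generally needs extra passes or approximations.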

26-33

Pipeline is split into five new shaders:

– A ray generation shader defines how to start ray tracing (runs once per algorithm, or per pass)

– Intersection shader(s) define how rays intersect geometry (defines geometric shapes, widely reusable)

– Miss shader(s) define behavior when rays miss geometry

– Closest-hit shader(s) run once per ray (e.g., to shade the final hit)

– Any-hit shader(s) run once per hit (e.g., to determine transparency)

Note: Read spec for more advanced usage, since meaning of “any” may not match your expectations

The miss, closest-hit, and any-hit shaders together define the behavior of ray(s), and differ between shadow, primary, and indirect rays.

A new, unrelated sixth shader:

– A callable shader can be launched from another shader stage - Abstraction allows this; explicitly expose it
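
How the stages relate to each other can be sketched as ordinary control flow. This is a simplified CPU model in Python, not the DXR API or HLSL: `trace_ray`, the `shaders` dictionary, and the sphere primitives are all hypothetical, there is no acceleration structure, and rays are orthographic along +z. The any-hit stage here is only invoked for candidates closer than the current closest hit, which is one reason it may not fire for every intersection along the ray.

```python
import math


def sphere_intersection(origin, direction, prim):
    """Intersection shader: nearest t > 0 where the unit-direction ray hits a sphere."""
    oc = tuple(o - c for o, c in zip(origin, prim["center"]))
    b = sum(o * d for o, d in zip(oc, direction))
    disc = b * b - (sum(o * o for o in oc) - prim["radius"] ** 2)
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 0 else None


def any_hit(prim, t):
    """Any-hit shader: return False to ignore this hit (e.g., transparency)."""
    return prim["opaque"]


def closest_hit(prim, t):
    """Closest-hit shader: shade the final accepted hit."""
    return prim["color"]


def miss():
    """Miss shader: result for rays that accept no hit."""
    return "sky"


def trace_ray(origin, direction, scene, shaders):
    """Traversal loop that dispatches to the per-ray shader stages."""
    closest = None
    for prim in scene:
        t = shaders["intersection"](origin, direction, prim)
        if t is None or (closest is not None and t >= closest[0]):
            continue  # no hit, or farther than the current closest hit
        if not shaders["any_hit"](prim, t):
            continue  # hit ignored by the any-hit stage
        closest = (t, prim)
    if closest is None:
        return shaders["miss"]()
    return shaders["closest_hit"](closest[1], closest[0])


def ray_generation(width, height, scene, shaders):
    """Ray generation shader: runs once per launch, one ray per pixel."""
    return [
        [trace_ray((x + 0.5, y + 0.5, 0.0), (0.0, 0.0, 1.0), scene, shaders)
         for x in range(width)]
        for y in range(height)
    ]


SHADERS = {
    "intersection": sphere_intersection,
    "any_hit": any_hit,
    "closest_hit": closest_hit,
    "miss": miss,
}
# Assumed scene: a non-opaque sphere in front of an opaque red one.
SCENE = [
    {"center": (1.5, 1.5, 2.0), "radius": 1.0, "color": "glass", "opaque": False},
    {"center": (1.5, 1.5, 5.0), "radius": 1.0, "color": "red", "opaque": True},
]
IMAGE = ray_generation(3, 3, SCENE, SHADERS)
```

Swapping entries in the `shaders` dictionary changes ray behavior without touching the traversal loop, which loosely mirrors why shadow, primary, and indirect rays can use different miss, closest-hit, and any-hit shaders.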
