
Xbox One (Durango) Technical hardware investigation

- Thread starter: Love_In_Rio

- Status: Not open for further replies.

Couple questions:

1. What would substantially more ACEs (whatever that may mean) actually equate to, or be useful for, given what we believe we know about the XB1 architecture?

2. Same question but about Volcanic Islands?

If you think I'm being too lazy to look everything up, just point me in the direction of where to read.

I've read the VI thread before, but that and the other GCN architectures are all just mush in my brain now.


Betanumerical

Veteran

All signs point to the GPU being a VI part. The NDA supposedly expires at the same time AMD debuts VI. MS closes the show at Hot Chips, so unless you think that the Kinect sensor is worthy of that timeslot ... etc.

Pretty much this is what that Mister guy has been saying all along. Anyway, can't wait to see actual details of the silicon for the XB1 (at Hot Chips)!

Except, you know, for the fact that the XBONE GPU is identical to GCN 1.0/1.1 in practically every way sans the queues (like the PS4), and that VI is a new architecture which has differences from GCN 1.0/1.1.

So no, he was not right.

Better compute efficiency.

Which doesn't seem as big a plus on this architecture as on the "ALU heavy" competitor, IMHO. It's likely they'll need to dedicate almost everything to graphics to keep up.

But more versatility is good, and compute during dead nanoseconds can help keep GPU utilization high.
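To make the "dead nanoseconds" point concrete, here is a toy Python model. It is an illustration only, not how GCN's ACEs or command processor actually schedule work: each cycle has a hypothetical fixed number of issue slots, graphics occupies some of them, and queued asynchronous compute fills whatever graphics leaves idle. The slot count and the per-cycle graphics occupancy are made-up numbers.

```python
def utilization(graphics_busy: list[int], compute_backlog: int, slots_per_cycle: int = 4) -> float:
    """Fraction of issue slots used over a run of cycles (toy model, hypothetical numbers).

    graphics_busy[i] -- slots graphics work occupies in cycle i (0..slots_per_cycle)
    compute_backlog  -- queued compute work items, each needing one slot for one cycle
    """
    used = 0
    for busy in graphics_busy:
        idle = slots_per_cycle - busy
        # Asynchronous compute only takes slots that graphics left idle this cycle.
        filled = min(idle, compute_backlog)
        compute_backlog -= filled
        used += busy + filled
    return used / (slots_per_cycle * len(graphics_busy))


# Hypothetical graphics occupancy per cycle, with "bubbles" (e.g. waiting on memory).
frame = [4, 4, 1, 0, 2, 4, 3, 0]

print(f"graphics only:       {utilization(frame, 0):.0%}")
print(f"+ 5 compute items:   {utilization(frame, 5):.0%}")
print(f"+ plenty of compute: {utilization(frame, 100):.0%}")
```

In this model the gain depends entirely on how many bubbles the graphics load leaves and on whether enough compute work is queued to fill them; it says nothing about real XB1 or PS4 numbers.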

Ceger, welcome to B3D. No, MS tech will not have the advantage, because the rumor is XB1 will have a MEC which is 4 ACEs, while PS4 will have 8 ACEs (as Gipsel noted just up the page). And don't bring "fear of another console" nonsense into this, please.

liquidboy and astrograd, what's so amazing or mysterious about claiming XB1 has more bandwidth/flop than PS4? Simplistic addition gives us eDRAM + DDR3 bandwidth roughly equal to PS4's GDDR5, no? And that's for a third fewer ALUs/TMUs and half the ROPs, hence beta's claim. We can debate just how additive eDRAM bandwidth is to DDR3, but the whole point of eDRAM is to obviate the need for super-high-bandwidth main memory, so there is some additive effect.

If the sticking point is "ALU heavy" (which Rangers put in quotes on purpose), PS4 has the same ALU:TMU ratio as XB1, right? And it's not ALU heavy in relation to ROPs. So what part of beta's claim are you all up in arms against? The weird theory going around is that PS4 is disproportionately GPU heavy, not ALU heavy.

Edit: BRiT, feel free to delete this, too, if you think it's OT.
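The "simplistic addition" above can be written out as a small Python sketch. All figures are assumptions (commonly reported mid-2013 numbers, not taken from this thread): 12 CUs for XB1 versus 18 for PS4, 64 ALU lanes and 4 TMUs per GCN CU, 16 versus 32 ROPs, an 800 MHz clock for both, and 68.3 GB/s DDR3 plus 102.4 GB/s for the on-die pool (eSRAM rather than eDRAM, but the addition works the same way) versus 176 GB/s GDDR5. Naively summing the two XB1 pools is exactly the caveat raised in the post, so treat XB1's bytes-per-FLOP as an optimistic upper bound.

```python
from dataclasses import dataclass

# ASSUMED figures: commonly reported mid-2013 specs, not confirmed in this thread.
LANES_PER_CU = 64         # GCN: 4 x SIMD16 per compute unit
TMUS_PER_CU = 4           # GCN: 4 texture units per compute unit
FLOPS_PER_LANE_CYCLE = 2  # one fused multiply-add counted as 2 FLOPs


@dataclass
class Gpu:
    name: str
    cus: int
    clock_ghz: float
    rops: int
    bandwidth_gbs: float  # naive sum of all memory pools, in GB/s

    @property
    def alus(self) -> int:
        return self.cus * LANES_PER_CU

    @property
    def tmus(self) -> int:
        return self.cus * TMUS_PER_CU

    @property
    def gflops(self) -> float:
        # Peak single-precision throughput: lanes * FLOPs per lane per cycle * GHz.
        return self.alus * FLOPS_PER_LANE_CYCLE * self.clock_ghz

    @property
    def bytes_per_flop(self) -> float:
        # GB/s divided by GFLOPS gives bytes of bandwidth per FLOP.
        return self.bandwidth_gbs / self.gflops


# XB1: DDR3 + on-die eSRAM simply added together (the "simplistic addition").
xb1 = Gpu("XB1", cus=12, clock_ghz=0.8, rops=16, bandwidth_gbs=68.3 + 102.4)
# PS4: single GDDR5 pool.
ps4 = Gpu("PS4", cus=18, clock_ghz=0.8, rops=32, bandwidth_gbs=176.0)

for g in (xb1, ps4):
    print(f"{g.name}: {g.alus} ALUs, {g.tmus} TMUs, {g.rops} ROPs, "
          f"{g.gflops:.1f} GFLOPS, {g.bytes_per_flop:.3f} B/FLOP, "
          f"ALU:TMU {g.alus // g.tmus}:1")
```

Under these assumptions the ALU:TMU ratio comes out identical for both parts, while XB1's naive bytes-per-FLOP is higher simply because its FLOP count is lower.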


Xbox One does not have eDRAM.

> All signs point to the GPU being a VI part. The NDA supposedly expires at the same time AMD debuts VI. MS closes the show at Hot Chips, so unless you think that the Kinect sensor is worthy of that timeslot ... etc.

Wow, that's pretty huge if true. Wouldn't that mean the 768 SPs quoted by Microsoft can't be compared to current GCN offerings, since Volcanic Islands might be more efficient or even different?

Betanumerical

Veteran


This is true, if it is VI (which I really doubt).

If it was VI the diagrams wouldn't be functionally identical to GCN1.0/1.1 cards since VI is meant to bring about a lot of differences.


VI is still GCN, and all the possible differences to previous GCN IP levels are pure speculation at this point.

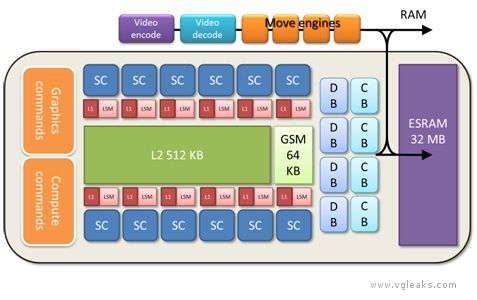

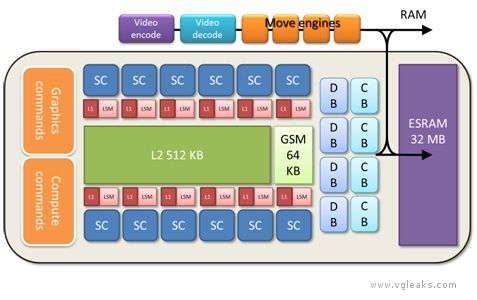

Also, this is the only "legit graph" of the Durango GPU as far as I know, and it doesn't really tell anything detailed about it (we even know for sure it has more than one ACE).

> Xbox One does not have eDRAM.

Well, I guess that moots my whole post, then.

Betanumerical

Veteran

> VI is still GCN, and all the possible differences to previous GCN IP levels are pure speculation at this point.
>
> Also, this is the only "legit graph" of Durango GPU as far as I know, and it doesn't really tell anything detailed about it (we even know for sure it has more than one ACE)

What about all the diagrams that follow that exact picture in the same article? They all describe GCN 1.1/1.0 down to a T. Nothing in any of them suggests VI.

Pretty much, it would seem to me that if the XBONE ends up being VI, both will be VI, as the diagrams for both GPUs are rather similar; they really only differ in queues.

> Well, I guess that moots my whole post, then.

They do have eSRAM though. :smile:

> What about all the diagrams that follow that exact picture in the same article? They all describe GCN 1.1/1.0 down to a T. Nothing in any of them suggests VI.
>
> Pretty much, it would seem to me that if the XBONE ends up being VI, both will be VI, as the diagrams for both GPUs are rather similar; they really only differ in queues.

The diagrams that follow wouldn't show any differences unless they made major, major changes to GCN. And major would be something like the difference between R600/VLIW5 and Cayman/VLIW4.

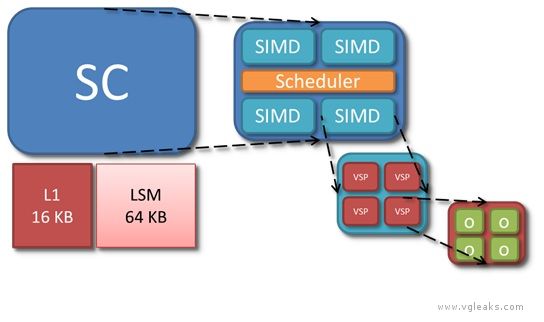

They're oversimplified graphs. Compare this Durango "SC", aka CU,

to a bit more detailed GCN CU:


> What about all the diagrams that follow that exact picture in the same article? They all describe GCN 1.1/1.0 down to a T. Nothing in any of them suggests VI.
>
> Pretty much, it would seem to me that if the XBONE ends up being VI, both will be VI, as the diagrams for both GPUs are rather similar; they really only differ in queues.

I get you arguing against VI; I agree it seems unlikely, and we don't even know how much of a performance increase VI will bring.

But you do suddenly have to wonder why MS didn't, if they didn't. It appears it will time very well with the launch.

I suppose there could be a risk if it suffered any delay, and 2 years ago, in the Xbone planning stages, they probably wouldn't have wanted to take the risk that VI would be precisely ready in late 2013.

> All signs point to the GPU being a VI part. The NDA supposedly expires at the same time AMD debuts VI. MS closes the show at Hot Chips, so unless you think that the Kinect sensor is worthy of that timeslot ... etc.

Kinect 2 has the world's first consumer-level time-of-flight camera. This is a huge technological advance. Kinect got a showing at Hot Chips in 2011, and Kinect 2 is way more deserving, as it uses a novel chip technology.

> But you do suddenly have to wonder why MS didn't, if they didn't. It appears it will time very well with the launch.

Your hardware needs to be ready a year in advance of release to develop the software for it. It's not feasible to design a console around a technology that'll debut around your release date, unless you're releasing something like a PC that already abstracts that hardware.

french toast

Veteran

Xenos? (the GPU)

> Kinect 2 has the world's first consumer-level time-of-flight camera. This is a huge technological advance. Kinect got a showing at Hot Chips in 2011, and Kinect 2 is way more deserving, as it uses a novel chip technology.

Agree regarding Hot Chips and Kinect. So there's no telling what they plan to discuss.

> Your hardware needs to be ready a year in advance of release to develop the software for it. It's not feasible to design a console around a technology that'll debut around your release date, unless you're releasing something like a PC that already abstracts that hardware.

Well, XB1 and PC probably aren't that far apart in terms of abstraction: DX11 on top of a Windows-derived kernel of some sort.

Come to think of it, didn't MS say that they had a full DX11 driver, and the mono driver is where they started to strip it down and streamline it? Sounds like a Windows driver, maybe...

So if anything, that would've been in their favour.

This post in the PS4 thread links to this interview with an AMD PR dude saying only PS4 has hUMA and XB1 doesn't. I think that confirms previous thinking regarding which flavour of AMD hardware is in XB1.
