> Depending on the evolution (or lack thereof) of hardware ray tracing, AAA games may or may not have choice anymore in the future on whether or not they can implement feature ...

I think software RT looks bad in comparison. It is the economy solution for lower-end hardware, and I will never use it voluntarily if I can use a hardware version. Entitlements are just different...

UE5 software Lumen made fixed-function hardware obsolete? Then everyone would just do it in software. CIG is also developing both a software and a hardware GI for SC, and they said that the hardware version is much more detailed.

Immortals of Aveum looks more realistic than Alan Wake 2? The color grading too?

Currently no released UE game can compete with Alan Wake 2 or Cyberpunk 2077.


Unreal Engine 5, [UE5 Developer Availability 2022-04-05]

- Thread starter mpg1

- Start date

> Alan Wake 2 and Cyberpunk still look like a game, whereas RoboCop looks like a photograph. It's easy to see.

This just reminds me how overcast lighting and camera work do an excellent job at hiding gaminess.

View attachment 10146

I'm sorry, but Robocop looks a lot better to me and more realistic. Alan Wake looks video-gamey even with path tracing.

One example of a bad implementation won't change how much more realistic Lumen looks.

for example, that infamous video of the motorcycle game Ride 4

That's because your brain has been trained to notice these things more so than the average gamer. That's how the human brain works. I have to say this as I feel this forum needs a reality check sometimes. We are in a tiny tech enthusiast bubble after all here.

Yes average gamers tend to have average standards. Clearly most of the discussions on this forum aren’t focused on IQ expectations of the average gamer.

I disagree with the notion that SSR artifacts aren’t noticeable in gameplay. It depends on the game of course as running and gunning tends to ignore those things. In slower paced narrative experiences they are very noticeable.

DegustatoR

Legend

> That's because your brain has been trained to notice these things more so than the average gamer.

My brain has been "trained" to notice the disappearance of reflections or noise/ghosting/boiling by the reality around me in which these don't occur. Hence...

> Let me assure you, even if your friends and family members would compare both games running on your PC, they will still think Robocop is the more realistic game.

This is just false. Any random person will immediately tell you that the game which is more stable in motion is the "more realistic" one.

DavidGraham

Veteran

> Alan Wake 2 and Cyberpunk still look like a game, whereas RoboCop looks like a photograph. It's easy to see.

Once again, Lumen looks good in certain outdoor scenarios, but it falls apart in most indoor scenes. Software Lumen also has lots of screen-space elements, especially around emissive lights and ambient occlusion, which break apart as you move the camera. It also has light leaks and light boiling in areas with secondary bounces or emissive lights. Many indoor sections lack indirect lighting entirely. All of this comes on top of the lackluster reflections.

This is shown here in the DF analysis of UE5 games.

You get none of that with proper hardware RT/PT.

> This is just false. Any random person will immediately tell you that the game which is more stable in motion is the "more realistic" one.

I think everyone should refrain from making bold claims about the general populace without hard evidence. I think people are so diverse that there'll be a lot of different responses.

Modhat: Rather than making arguments in support of 'the greater good', everyone should just talk about their own preference and acknowledge it's their preference, shared by a currently unknown proportion of everyone else (until such time as someone can present the research into how the general populace responds to gaming visuals).

Fortnite now has motion-matching, procedural layering and physics based animation. Some vids in the link. Looks a lot better.

DegustatoR

Legend

> I think everyone should refrain from making bold claims about the general populace without hard evidence. I think people are so diverse that there'll be a lot of different responses.

Let me rephrase that: more realistic graphics would be the ones closer to reality, in which there are no disappearing reflections / noise / boiling.

All else is just someone's personal preference of one art style over the other.

The examples we have now of UE5 games which do make use of RT h/w show that you get even better graphics with the same art style, which makes the whole "RT cores existential crisis" angle completely pointless to peddle.

The new season of Fortnite has launched and it looks great. Some good Nanite on display here.

The game now lets you toggle Lumen GI and reflections independently, although from trying it out, it seems like HW RT needs to be enabled to actually get reflections with Lumen GI off. The reflections with Lumen GI off can also appear sharper in some instances. Here is a comparison: https://imgsli.com/MjIzODEy

Wonder what is going on there. Is it just falling back to traditional RT reflections?

Another cool thing is the option to test graphical settings. Instead of having to constantly apply settings, back out to in-game and then go back through the settings, you can just press a single button that shows you your new settings in game. Upon pressing ESC you're returned to where you left off to make further tweaks. It's really neat.


Now that is very curious. I would guess it is no longer using the surface cache, but instead using something else for shading hardware RT... AKA hit lighting. It is so bizarre that you need to turn off Lumen GI for that to happen; realistically, that should be a separate option WITH Lumen GI. You can also see how Lumen GI is used for the rougher edges of the pool of water, which is nice.

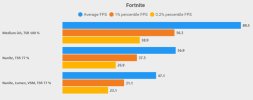

Okay, some initial benchmarks of a forest scene. The screenshot was taken with everything maxed out, including effects and post processing, but for benchmarking I set effects and post processing to low. I just wanted to make it easier to see the impact of Nanite, Lumen and RT. I went into a replay with Nanite, Lumen and VSM set to epic (RT off) and flew around with the camera until I found a scene that seemed to have lower fps. There could be other scenes where Lumen, Nanite and VSMs have different impacts. This scene is likely very hard on Nanite/VSMs because of the foliage. Maybe not as much on Lumen.

A bit of math:

with nanite, lumen, vsms off the frame time is about 4.3 ms as a baseline.

nanite on - 6.6 ms (costs 2.3 ms ... 6.6 - 4.3)

vsm high - 9.3 ms (costs 2.7 ms ... 9.3 - 6.6)

lumen high - 10.1 ms (costs 3.5 ms ... 10.1 - 6.6)

vsm high, lumen high - 12.2 ms (costs 5.6 ms ... 12.2 - 6.6 ... seem to be a little cheaper together)

Add HW RT - 13 ms (costs 0.8 ms ... 13 - 12.2 ... very cheap. Must be the HW RT performance improvements coming to UE 5.4? The exe details have version 5.4)
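For anyone who wants to redo the subtraction with their own captures, here is a minimal Python sketch of the same arithmetic. The frame times are the ones quoted above; the dictionary keys and the `cost` helper are just names made up for illustration, and the results are rounded to 0.1 ms because that is the precision of the posted numbers.

```python
# Frame times in ms, copied from the post above.
# Nanite is on in every configuration except the baseline.
frame_ms = {
    "baseline": 4.3,
    "nanite": 6.6,
    "nanite+vsm": 9.3,
    "nanite+lumen": 10.1,
    "nanite+vsm+lumen": 12.2,
    "nanite+vsm+lumen+hwrt": 13.0,
}


def cost(config, reference):
    """Marginal cost in ms of `config` relative to `reference` (hypothetical helper)."""
    return round(frame_ms[config] - frame_ms[reference], 1)


nanite_cost = cost("nanite", "baseline")
vsm_cost = cost("nanite+vsm", "nanite")
lumen_cost = cost("nanite+lumen", "nanite")
combined_cost = cost("nanite+vsm+lumen", "nanite")
hwrt_cost = cost("nanite+vsm+lumen+hwrt", "nanite+vsm+lumen")

# How much cheaper VSM and Lumen are together than the sum of their separate costs.
overlap_saving = round(vsm_cost + lumen_cost - combined_cost, 1)

for name, value in [
    ("nanite", nanite_cost),
    ("vsm", vsm_cost),
    ("lumen", lumen_cost),
    ("vsm+lumen", combined_cost),
    ("hw rt", hwrt_cost),
    ("saved by running vsm and lumen together", overlap_saving),
]:
    print(f"{name}: {value} ms")
```

This only covers the one forest scene measured here, so the per-feature costs should not be read as general figures.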


atpkinesin

Newcomer

here are non-nanite, nanite, nanite+VSM+Lumen benchmark results for the new Fortnite map with the AMD FX-8320. these are all cpu-limited results, but it's a bit red delicious to granny smith compared to my prior post. in this case, i benchmarked a 9 minute, 10 elim*, zero build replay that was my 3rd match on the new map. there were 2 large stutters in each of the nanite/vsm/lumen and nanite-only replays, with none in the non-nanite pass.

in general the results for the new map were roughly the same (and i used the same graphics settings) as the OG map even though there are obvious increases in visual quality and geometry, which is an impressive result for nanite and lumen wrt CPU demands. i played the match live with the most intensive settings using nanite/vsm/lumen and qualitatively it was a tolerable experience from a performance POV, but the visuals were absolutely fantastic. the new map looks great.

FPS Summary:

Naive Frametimes (top) and GPU-Busy Frametimes (bottom):

baseline ~ 11.2 ms

nanite on ~ 17.6 ms (+6.4 ms)

N/V/L on ~ 21.2 ms (V/L +3.6 ms, N/V/L + 10 ms)

*im not a great fps-er but there was literally a million people playing the zero build mode when i played the match, so i think the average skill level was pretty low. probably a good time to play if you're looking for a BR style win.
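As a rough aid for reading the CPU-limited numbers above, the sketch below converts them to frames per second and recomputes the deltas. It assumes the "~" values are average frame times for each pass, which the post does not state explicitly; the variable and function names are made up for illustration.

```python
# CPU-limited frame times in ms from the post above (assumed to be averages).
cpu_frame_ms = {
    "baseline": 11.2,
    "nanite": 17.6,
    "nanite+vsm+lumen": 21.2,
}


def to_fps(frame_time_ms):
    """Convert a frame time in milliseconds to frames per second."""
    return 1000.0 / frame_time_ms


fps = {name: round(to_fps(ms), 1) for name, ms in cpu_frame_ms.items()}

# Deltas in ms, rounded to the precision of the posted numbers.
nanite_delta = round(cpu_frame_ms["nanite"] - cpu_frame_ms["baseline"], 1)
vl_delta = round(cpu_frame_ms["nanite+vsm+lumen"] - cpu_frame_ms["nanite"], 1)
total_delta = round(cpu_frame_ms["nanite+vsm+lumen"] - cpu_frame_ms["baseline"], 1)

for name in cpu_frame_ms:
    print(f"{name}: {cpu_frame_ms[name]} ms = {fps[name]} fps")
print(f"nanite: +{nanite_delta} ms, vsm/lumen: +{vl_delta} ms, total: +{total_delta} ms")
```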


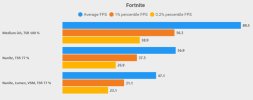

Was trying to figure out if there was a way for me to maintain 120 fps with hardware ray tracing on.

1440p DLSS performance

Post process: low (don't want it)

Effects: medium (low disables some lights, epic adds performance killing volumetric clouds, high does ... maybe particle differences?)

nanite: on

Lumen gi/reflections: high

VSMs: medium (no shadows on grass, maybe other things)

draw distance: epic

textures: epic

Hardware Ray Tracing: On

I used an almost 20 minute replay. Did one run without benchmarking to make sure there would be minimal to no shader comp then repeated the run and benchmarked. Repeated this same process with HW RT off.

Those numbers look to be fairly correct. The performance difference between hardware RT on and off is not huge anymore. The thing is, I was getting noticeable stutters during the replay and I haven't figured out why yet. I've never seen them during actual gameplay, so I'm not sure if it's capframex causing it during a long recording, something with my pc, or something with replays. I'd see my cpu spike up and then a stutter would occur. It's hard to do a good analysis from the graphs. I know with HW RT on the forest areas were in the high 120s and some other areas were in the 140s. Dropping from the bus had some noticeable drops, but I wasn't worried too much about maintaining 120 fps while gliding.

In terms of 1440p DLSS performance, it doesn't look as bad as other games. I think having detailed geometry that can be per-pixel or near per-pixel really helps upscaling, but there is more noise in shadows and lights. Overall, even with ray tracing the game seems to have "boiling" in indirect lighting from Lumen High, regardless of what DLSS quality I select.

Here's Michael Myers with a sour candy gun wrap frolicking in the snow.

DLSS performance 1440p. Definitely a bit softer, a little harder to see things at long distances, but overall way better than I expected.

1440p DLSS quality

1440p TAA native


Frenetic Pony

Veteran

It's interesting to see GI and VSM have little overlap in resource usage here, barely budging the frame times from one or the other to both being on.

I mean one is sending out to hardware RT and the other is compute RT through a shadow map. The former does a sparse crawl through cache and into memory, while the latter hits cache pretty regularly. Apparently it's a good overlap of resources, at least on Nvidia 3XXX, and it makes me wonder if ReSTIR shadows are going to be a viable replacement performance-wise. Then again, there's probably less parallelism to be gained between VSM and GI on AMD 6XXX with its more compute-dependent hardware RT.


> The performance difference between hardware RT on and off is not huge anymore.

I don't think the UE5.4 performance improvements for HW-RT are implemented yet. The performance difference between SW-RT and HW-RT has always been very small.

I need to verify, but I believe the stuttering in my benchmarks yesterday was caused by the latest nvidia driver which can cause intermittent stuttering with vsync enabled. I have vsync enabled in the nvidia control panel for fortnite. I'll install the hotfix driver and see if benchmarks look the same. I'd installed the driver just before benchmarking the replay.

Edit: Okay with the hotfix driver things are looking a lot better. No more 250-300ms frame spikes. Without hardware RT I could probably get away with DLSS balanced and get 120 fps minimum.

Same settings, same replay.

1440p DLSS performance

Post process: low (don't want it)

Effects: medium (low disables some lights, epic adds performance killing volumetric clouds, high does ... maybe particle differences?)

nanite: on

Lumen gi/reflections: high

VSMs: medium (no shadows on grass, maybe other things)

draw distance: epic

textures: epic

Hardware Ray Tracing: On
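If you want to check a capture for the kind of 250-300 ms spikes mentioned in the edit above, a minimal Python sketch could look like this. The sample frame times are invented for illustration and are not from the actual benchmark, and the helper names are hypothetical; a real run would load per-frame times exported from whatever capture tool you use.

```python
def find_spikes(frame_times_ms, threshold_ms=250.0):
    """Return (frame index, frame time) for every frame at or above the threshold."""
    return [(i, t) for i, t in enumerate(frame_times_ms) if t >= threshold_ms]


def min_fps(frame_times_ms):
    """Lowest instantaneous fps in the capture, i.e. 1000 / the slowest frame."""
    return 1000.0 / max(frame_times_ms)


# Hypothetical per-frame times in ms; NOT data from the benchmark above.
sample = [7.9, 8.1, 8.0, 272.0, 8.2, 7.8]

spikes = find_spikes(sample)
print("spikes:", spikes)

# With the spike frames excluded, check whether the rest holds a 120 fps minimum.
clean = [t for t in sample if t < 250.0]
holds_120 = min_fps(clean) >= 120.0
print("120 fps minimum without spikes:", holds_120)
```

A single slow frame dominates the minimum fps, which is why it helps to look at spikes separately from the steady-state frame time.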
