This press release? The one that says RSX at 550 MHz? The one that says 6 USB ports, 4 on the front and 2 at the back? The one that says 3 Gigabit Ethernet ports? The one that says 2 HDMI ports? Has your PS3 got all that stuff as well? Or, shock horror, is this original press release not accurate with regard to the final hardware?


RSX Secrets

- Thread starter: edepot

- Status: Not open for further replies.

Going from 6 USB ports to 4, 2 HDMI ports to 1 and 3 Gigabit Ethernet ports to 1 doesn't affect your PS3 games' code or how they perform on your screen, does it?

However, going from a 550/700 MHz GPU to a 500/650 MHz GPU will cost at least a 10-15% performance hit in your PS3 games' code.

I have no idea how on earth a 50 MHz clock downgrade makes sense as a way to save SCEI anything.

This may cause PS3 games to perform below the competing console, and gamers may call SONY or SCEI the biggest LIAR in the world again, like with "PS2 can do PIXAR graphics".

I don't think they would dare to do something as crazy as downclocking the GPU and killing themselves.


I think anything that isn't official can be speculated about, but can't be trusted?

Nothing happens if we say "PS3 has a downclocked GPU". That claim doesn't harm any of you, because you're gamers and consumers. However, if SCEI said something like that, it would affect their stock price immediately. All of their business partners would kick them into the graveyard as soon as they could.

So even with claims like "RSX is 500/650 MHz" floating around the net like smoke, I think we'd better trust SCEI rather than private sources in the shadows.


No, but the point is that the specs in the official press release, which you cite as telling you what hardware you have, show that the press release is WRONG! It's not a valid reference document. Sony changed the specifications of the machine. They removed a number of ports, and they downclocked the graphics system and RAM.

IIRC Xbox got a down-spec. GC got one too. I can't remember about PS2 (finding out about spec changes on these old machines has proven beyond my web-searching skills!). Changing specs is normal, for consoles and CE goods. It's not going to kill PS3, because people aren't buying PS3 for a paper specification. They're buying it because it does what they want it to do. And as pointed out earlier, the results we see now, with less than 100% hardware utilisation, are plenty good enough. Yes, a 10% drop in performance from the original spec is a disappointment, in theory, but the end product is good enough that no-one cares. No-one's boycotting PS3 because the RSX clockspeed got dropped, any more than other products have been avoided for the same reason. If it really annoys you, contact Sony, tell them you were informed by their press release that you were buying a console with a 550 MHz GPU, and now that you've learnt it's not 550 MHz, you want your money back. Kick up a stink and then you can happily clear out that shelf space and not have to waste your time with PS3 any more, and not have to play any of those games that aren't running on a 550 MHz GPU, and you won't have to put up with BRD playback on a system that only has a 500 MHz GPU. Whatever reason you bought a PS3 for, obviously the fact it hasn't got a 550 MHz GPU makes it totally unsuitable for the job, or at least poor value, and you'd be better off with your money back, right? Who wants LBP or MGS4 or Uncharted or RFoM 2 or GT5 if the only way to get to play them is to buy a console with a lower clock speed than originally announced?


My friend, this board is full of developers including some high profile PS3 developers. If the PS3 really did run at 550/700 we would certainly know about it.

I think it's time to accept the harsh reality.


I seem to remember the PS2 getting a spec upgrade, actually: the CPU went from 250 to 300 MHz at some point between unveiling and release.

Anyway, the PS3's 50 MHz drops aren't such a big deal IMO. Perhaps they were an issue for developers who were trying to stabilise frame rates on early titles (I'm thinking of the issues that have been raised on B3D about polygon throughput etc.), but in terms of the visual quality the consumer sees, I'd guess a 9% drop in GPU power would have only a small impact.
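As a rough check on the figures traded in this thread (the 9% here versus the "at least 10-15%" claimed earlier), the snippet below just computes the size of the two clock reductions. It is a sketch under one stated assumption: throughput scales at most linearly with clock, so the percentage clock drop is an upper bound on raw throughput loss. Real games may lose less if they are limited by something other than the clock.

```python
# Relative clock reductions discussed in the thread (E3 2005 spec -> shipping spec).
# Assumption: throughput scales at most linearly with clock, so the clock drop
# is an upper bound on the raw throughput loss (bottlenecks elsewhere ignored).

def percent_drop(old_mhz: float, new_mhz: float) -> float:
    """Return the reduction from old_mhz to new_mhz as a percentage of old_mhz."""
    return (old_mhz - new_mhz) / old_mhz * 100.0

core_drop = percent_drop(550, 500)    # RSX core clock
memory_drop = percent_drop(700, 650)  # GDDR3 memory clock

print(f"Core clock drop:   {core_drop:.1f}%")    # 9.1%
print(f"Memory clock drop: {memory_drop:.1f}%")  # 7.1%
```

Under that linear-scaling assumption, neither reduction reaches 10%.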

That sounds very surprising to me. I've never heard from SCEI that the EE clock was 250 MHz; only 292.3458 MHz, as announced.

If there are any PS3 developers here, can you state whether RSX is 550/700 MHz or 500/650 MHz?

The USB, HDMI and Ethernet ports that you claim were reduced from the E3 2005 press release were edited on SCE's official pages around the world as the presented specification, weren't they?

However, SCEI never announced that RSX dropped 50 MHz from 550/700 MHz.

So RSX must be 550/700 MHz, as in the E3 2005 spec.


Very, very good information in this link (and topic), but I have some questions:

1 - If G71/GeForce 7900GT/GTX, with 64KB cache per quad, gets something like a 10-15% overall performance increase over G70/GeForce 7800GTX at the same clock etc., how much performance impact does RSX's 96KB have (10% over G71?)?

2 - Is the fast vector normalize the same extra 7 flops in the pixel shader pipe (e.g. pixel shader, at best: each has 27 flops/5 instructions = 16 flops/4* vec4 + vec4 "free" + 7 flops normalize), and has it been increased, or is it also present in the vertex shader pipe?

3 - With 63 max verts, is that a 36KB post-transform/lighting cache?

Thanks in advance to all for your answers.

Can anyone answer my questions?


DuckThor Evil

Legend


Stop spamming dude! You don't need to say the same thing over and over again. If you refuse to believe people that know this issue very well, then ok you can have your opinion, but you said it already!

archangelmorph

Veteran


PS3MAN...

You'd do well to drop your silly argument & accept the reality that's being presented to you..

I really don't mean to come off as harsh but really... What you think is true regarding whatever out-dated spec sheet you have access to really didn't turn out to be the truth you were hoping for..

I'm not going to say anymore..

Search the forum. They already have, as you've been told about a dozen times now...

Sony announced a spec sheet prior to launch. They changed the machine from that spec. The fact they don't record the changes to clockspeeds doesn't mean the clockspeeds remained unchanged. Indeed, if the clockspeeds are the same as in the E3 05 announcement, why isn't Sony publicly exhibiting them?


Anyway, donning my mod hat, this aspect of RSX discussion is deemed closed. If anyone wants to believe RSX runs at 550 MHz, that's their prerogative, but repeating the point is counterproductive to the board's discussion. From this point on, any posts where RSX is presented as being 550 MHz will get chopped unless someone is presenting categoric confirmation of that fact. All discussion surrounding PS3's architecture will remain on the assumption of a 500/650 MHz RSX clock/GDDR clock, with the associated processing rates and bandwidth, as indicated by prior discussion. Attempts to argue otherwise without categoric proof (a current, relevant spec sheet from Sony, or a signed affidavit from a Sony engineer who worked on PS3's hardware, or a suitably positioned Sony employee or developer, with full disclosure of credentials) will be regarded as topic derailment.
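To make "associated bandwidth" concrete, here is a minimal sketch of the usual peak-bandwidth arithmetic for the 650 MHz GDDR3 clock versus the originally announced 700 MHz. Two inputs are assumptions not stated in this thread: a 128-bit GDDR3 bus and two transfers per clock (double data rate). GB/s here means 10^9 bytes per second.

```python
# Peak memory bandwidth = clock x transfers per clock x bus width in bytes.
# Assumptions (not from this thread): 128-bit GDDR3 bus, double data rate.

BUS_WIDTH_BITS = 128      # assumed RSX GDDR3 interface width
TRANSFERS_PER_CLOCK = 2   # GDDR3 transfers data on both clock edges

def gddr3_bandwidth_gb_s(memory_clock_mhz: float,
                         bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Peak bandwidth in GB/s (10**9 bytes per second)."""
    bytes_per_transfer = bus_width_bits / 8
    return memory_clock_mhz * 1e6 * TRANSFERS_PER_CLOCK * bytes_per_transfer / 1e9

for label, clock_mhz in [("E3 2005 spec", 700), ("Shipping spec", 650)]:
    print(f"{label}: {gddr3_bandwidth_gb_s(clock_mhz):.1f} GB/s")
# E3 2005 spec: 22.4 GB/s
# Shipping spec: 20.8 GB/s
```

These are theoretical peaks; sustained bandwidth in real workloads is lower.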

Lucid_Dreamer

Veteran

Actually, the Pixar/Toy Story graphics claim was just a rumor. You will not find any direct quotes from Sony saying that about the PS2 (just other people saying it). However, you can find a similar statement quoted from an MS executive about the original Xbox. Somehow, it became widely accepted as fact that Sony said that about the PS2.


I would love to know the answer to his questions as well.

Thanks for the reminder.

So... I repeat:

Very, very good information in this link (and topic), but I have some questions:

1 - If G71/GeForce 7900GT/GTX, with 64KB cache per quad, gets something like a 10-15% overall performance increase over G70/GeForce 7800GTX (48KB) at the same clock etc., how much performance impact does RSX's 96KB have (10% over G71?)?

2 - Is the fast vector normalize the same extra 7 flops in the pixel shader pipe (e.g. pixel shader, at best: each has 27 flops/5 instructions = 16 flops/4* vec4 + vec4 "free" + 7 flops normalize), and has it been increased (something like from a 7-flop to a 14-flop normalize, with some increase in the mini-ALU?), or is it also present in the vertex shader pipe?

3 - With 63 max verts, is that a 36KB post-transform/lighting cache?

Thanks in advance to all for your answers.
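The flop count in question 2 can be written out as a small calculation. This is a sketch of the arithmetic only: the 16 + 4 ("free" vec4) + 7 (normalize) split is taken from the question, the 14-flop normalize is the question's hypothetical, and the 24 pixel pipes are an assumption (six quads, inferred from the 576KB total at 96KB per quad mentioned in a reply). Peak GFLOPS is simply flops per pipe x pipes x clock.

```python
# Per-pipe, per-clock pixel shader flops as decomposed in question 2,
# scaled to a peak figure. PIXEL_PIPES is an assumption, not a Sony number.

PIXEL_PIPES = 24       # assumed: 6 quads x 4 pipes (576 KB / 96 KB per quad)
ALU_FLOPS = 16         # the "16 flops/4* vec4" term from the question
FREE_VEC4_FLOPS = 4    # the vec4 "free" term from the question

def flops_per_pipe(normalize_flops: int = 7) -> int:
    """Best-case flops one pixel pipe issues per clock."""
    return ALU_FLOPS + FREE_VEC4_FLOPS + normalize_flops

def pixel_shader_gflops(clock_mhz: float, normalize_flops: int = 7,
                        pipes: int = PIXEL_PIPES) -> float:
    """Peak pixel shader GFLOPS: flops per pipe x pipes x clock (MHz -> GHz)."""
    return flops_per_pipe(normalize_flops) * pipes * clock_mhz / 1000.0

print(flops_per_pipe())          # 27 with the 7-flop normalize
print(flops_per_pipe(14))        # 34 with a hypothetical 14-flop normalize
print(pixel_shader_gflops(500))  # 324.0 at the 500 MHz shipping clock
print(pixel_shader_gflops(550))  # 356.4 at the E3 2005 clock
```

These are paper peaks of the kind the thread is arguing about, not measured throughput.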


96KB cache per quad, 576KB cache in total. A bigger cache may increase the cache hit rate and reduce latencies. It's like a CPU with 256KB L2 vs. one with 512KB L2, both running at the same clock speed: the one with the bigger L2 always performs better.
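The capacity side of this comparison is easy to tabulate. The sketch below multiplies the per-quad figures quoted in the thread (G70 48KB, G71 64KB, RSX 96KB) by an assumed six quads, which is how 96KB per quad becomes the 576KB total above. It compares capacity only; it says nothing on its own about how much faster a GPU runs.

```python
# Total cache capacity from the per-quad figures quoted in the thread.
# Assumption: 6 quads per GPU (576 KB / 96 KB per quad for RSX).

QUADS = 6  # assumed quad count

cache_per_quad_kb = {"G70": 48, "G71": 64, "RSX": 96}

def total_cache_kb(per_quad_kb: int, quads: int = QUADS) -> int:
    """Total cache in KB across all quads."""
    return per_quad_kb * quads

for gpu, per_quad in cache_per_quad_kb.items():
    print(f"{gpu}: {per_quad} KB/quad x {QUADS} quads = {total_cache_kb(per_quad)} KB")

# Capacity growth of RSX over G71, per quad (not a performance figure).
growth = (cache_per_quad_kb["RSX"] / cache_per_quad_kb["G71"] - 1) * 100
print(f"RSX vs G71 capacity per quad: +{growth:.0f}%")  # +50%
```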


Agreed, and thanks for the answer.

My guess is that RSX, under the same conditions/environment/clock, is something like 10% faster than G71 with its 64KB cache per quad.

In the PC CPU world, I remember from the Celeron, Pentium, Pentium Pro, PII and PIII days that halving the L2 cache (512KB -> 256KB) lowered performance by at least 10% at the same clock/environment.


I don't think that's the case at all. GPUs work very differently from CPUs, so that analogy doesn't really work. Further, I don't think there is any evidence of G71 being faster than G70 at the same clock speed, and certainly not by 10-15%.

The larger cache on RSX is most likely there to reduce latency when using the XDR for graphics work. It probably has no bearing on performance compared with the PC version of the GPU, which doesn't need to go out to a separate memory pool across an external bus.

Certainly, if adding a few extra KB of cache could boost performance by even a couple of percent, it would most likely already have been done. Odds are that the cache sizes in G70/G71 are optimal for their architecture.


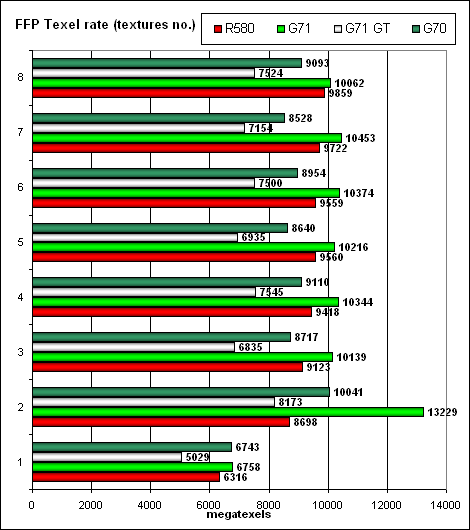

Maybe yes, maybe not. If we talk about pixel shader and texel rate performance overall, we do get some performance increase, as we see in these benchmarks:

more here: http://www.digit-life.com/articles2/video/g71-part2.html#p5

http://www.hexus.net/content/item.php?item=4872&page=9


-> Is this extra cache used for that (reducing latency when using the XDR for graphics work)? Or does RSX have some other cache dedicated to hiding the extra latency of accessing XDR DRAM (textures etc.)?

But when I say 10% more, I'm basing it on the G70 vs G71 performance gain in the links above; maybe RSX gets a similar gain, even though a console is a closed box etc.


-> I still think RSX is something like a G70/G71 GPU "adapted" for the console at the last hour/moment (NVIDIA and Sony only officially announced it on 12/7/2004), and if that's really what happened, maybe we'll see some parallels with the PC counterpart, like G70's 48KB per quad cache going to G71's 64KB for some extra performance.


Whoa that's an interesting set of comparison shots. Way to go IBM B)
