(((interference)))

Veteran

Yes, but there is no hope in hell anyone has ever gotten anywhere near that on a real load.

The Jaguar cores are absurdly more efficient. When there was a lot of talk about Jaguar cores possibly being in the next console, I went and bought myself a Bobcat mini-laptop to practise on the CPU. The more code I write for it, the more impressed I am by it.

In throughput, it's somewhere pretty close to a modern Intel dual-core.

Thanks for explaining the relative efficiencies of Xenon and Jaguar.

I never posted that. Sheesh people, I'm now getting ripped for stuff I didn't even post.

Me too, but the 7770 @ 1 GHz might actually be feasible. It's 80 watts TDP. The CPUs shouldn't add up to much, maybe 30 watts. You can probably then fit the whole console in 150 watts, 50 less than the 360, which sounds about what I think MS would aim for (gotta be green and all that baloney ya know).
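For anyone who wants to sanity-check that power budget, here's a rough sketch using only the numbers from the post above. The function name and the idea of a "headroom" figure for everything else (RAM, drives, PSU losses and so on) are my own framing, not anything from a spec sheet.

```python
# Back-of-envelope console power budget, using the figures quoted in the post.
GPU_TDP_W = 80           # 7770 @ 1 GHz, as stated in the post
CPU_W = 30               # the post's guess for all CPU cores combined
CONSOLE_BUDGET_W = 150   # the post's guess for the whole console
XBOX360_W = CONSOLE_BUDGET_W + 50  # the post says the budget is "50 less than the 360"


def remaining_headroom(budget_w, *components_w):
    """Return the watts left in the budget after the listed components."""
    return budget_w - sum(components_w)


headroom = remaining_headroom(CONSOLE_BUDGET_W, GPU_TDP_W, CPU_W)
print(f"Left for everything else: {headroom} W of {CONSOLE_BUDGET_W} W")
```

So on these guesses, the GPU and CPU together leave roughly a quarter of the budget for the rest of the box.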

There are even passively cooled 7770s, granted with one giant heatsink: http://www.tomshardware.com/news/sapphire-radeon-gpu-7770-heatsink,15927.html

I still think more CUs at a lower clock is more likely, but 1 GHz is probably doable as is.

Ok, well it could be a 7770 then, it's in the TDP and flops ballpark (and has 10 CUs @ 1 GHz like this new rumour). What's the provenance of the guy who posted that anyway?
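To put a number on the "flops ballpark", here's a quick sketch of the usual peak-throughput arithmetic for a GCN part. It assumes 64 ALU lanes per CU and counts a fused multiply-add as 2 FLOPs per lane per clock; those two figures and the function name are my additions, not from the rumour itself.

```python
def peak_gflops(compute_units, clock_ghz, lanes_per_cu=64, flops_per_lane_per_clock=2):
    """Theoretical peak single-precision GFLOPS for a GCN-style GPU.

    lanes_per_cu=64 and flops_per_lane_per_clock=2 (one FMA) are assumptions
    about the architecture, not values taken from the rumour.
    """
    return compute_units * lanes_per_cu * flops_per_lane_per_clock * clock_ghz


# 10 CUs @ 1 GHz, as in the rumour
peak = peak_gflops(10, 1.0)
print(f"{peak:.0f} GFLOPS, i.e. {peak / 1000:.2f} TFLOPS")
```

That is a theoretical peak only; as noted earlier in the thread, real loads don't get anywhere near paper numbers.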

Does anyone know if there were any previous rumours of note that mentioned the 7770?

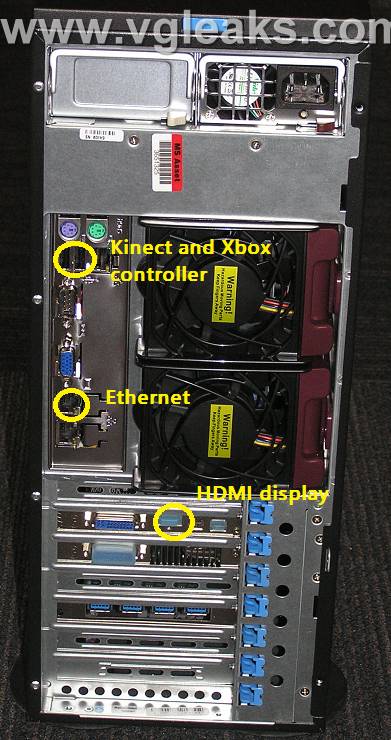

Also, going back to the leaked devkit shot, could it be possible that the GPU is some other card besides the HD6870/6950 we originally thought? Possibly around the 1 to 1.6 TF mark? What does the backside of a 7770 look like?