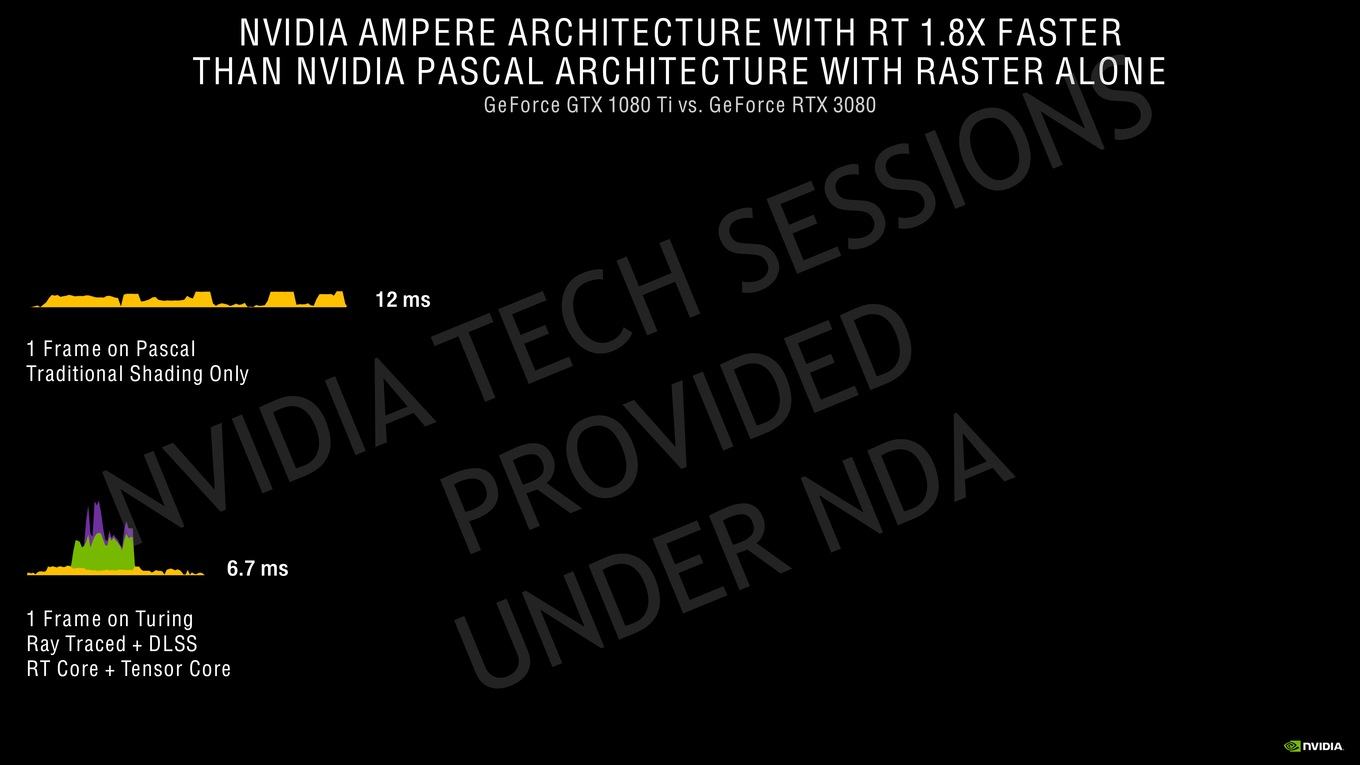

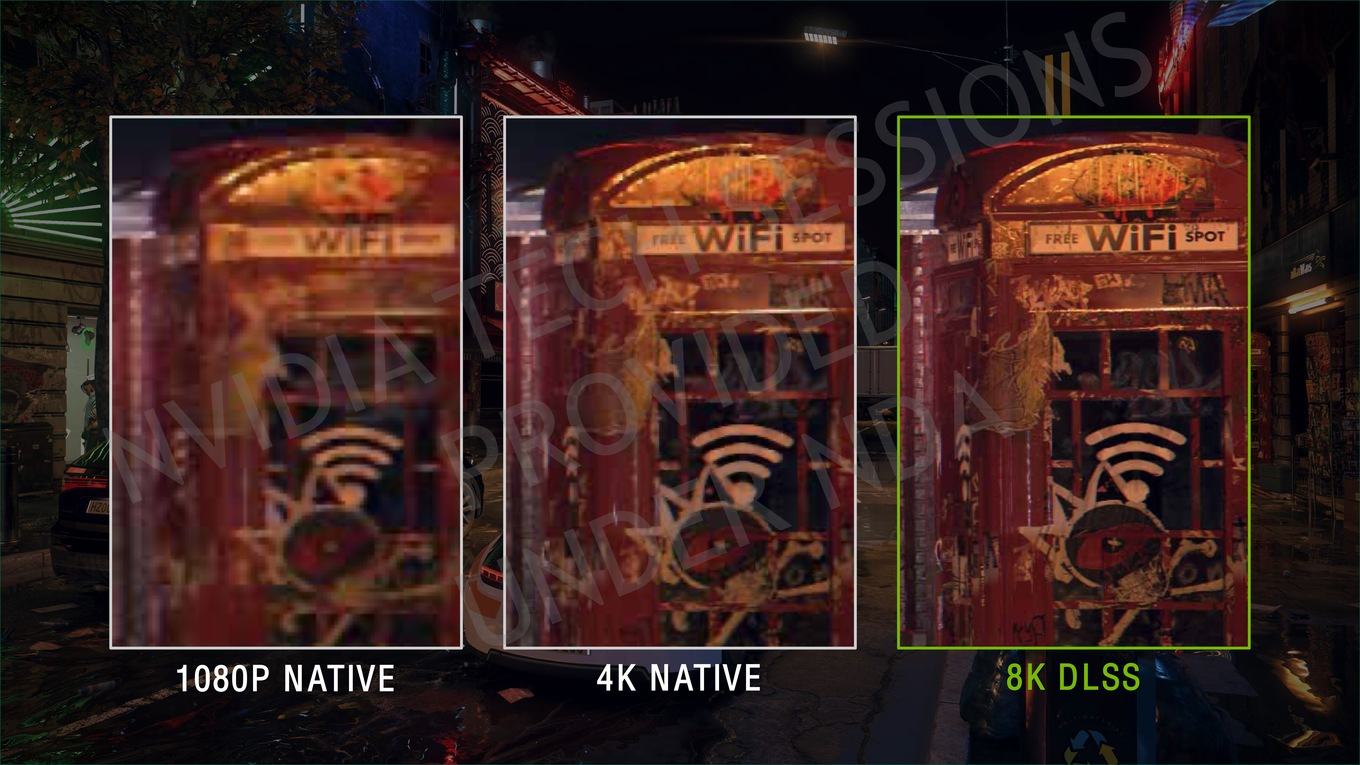

Seems like the frametime cost of DLSS will be significantly lower because of the new concurrency. This should be good news for high-framerate situations, where the relative cost of DLSS becomes more significant.

I'm pretty clueless about all things rendering, but I'm surprised DLSS processing can be started before the frame is fully rendered.

Aren't the tensor cores used for RT denoising? If so, this could be referring to that rather than DLSS. Note that it happens at exactly the same time as the RT work (with a small lag at the start).