Is this native vs. DLSS 3?

I don't know, but I do not remember seeing such artifacts with DLSS 2, even though I always turn it on.

It introduces slight ghosting and certain artifacts with overlapping geometry. Performance mode uses information from 4 previous frames, so it can't always hide the temporal aspect.

I think it has to do with optical flow frame generation.

Artifacts appear on exactly one frame: the frame that was generated by DLSS 3.

You can see for yourself. On YouTube, while the video is paused, you can step it one frame backward or forward with the , and . keys on your keyboard. Timecode 1:33.

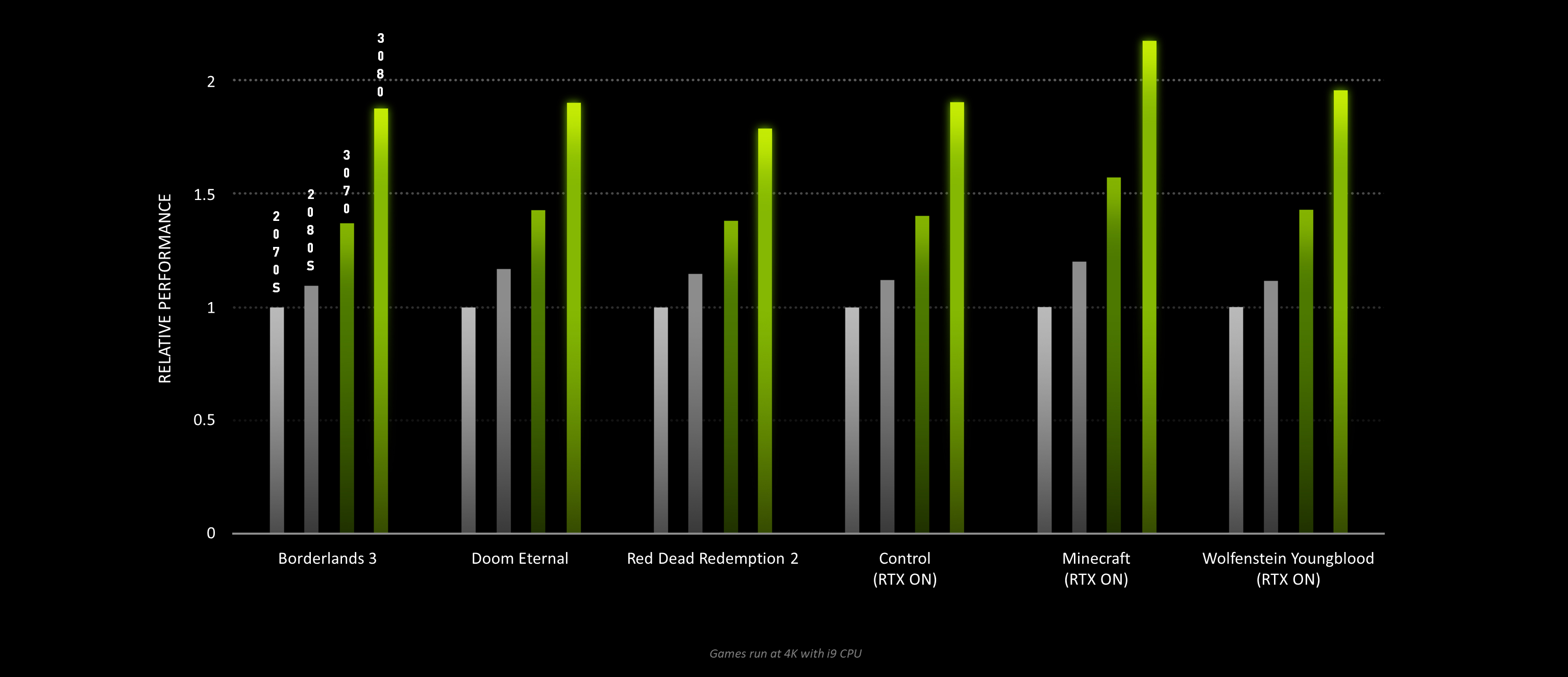

We did in Quake and Minecraft, which I think is what they were referring to when they said up to twice the speed in RT.

I think there will be outliers like Q2RTX, but even CP2077 at 1440p shows the 3080 Ti is 62% faster than a 2080 Ti. That is still a huge performance uplift in its own right, but it is still not 2x.
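"X% faster" and "Nx as fast" are easy to mix up: 2x as fast is a 100% uplift, so a 62% uplift is a 1.62x multiplier, well short of 2x. A minimal Python sketch of the conversion (the function names are illustrative only, not from any benchmarking tool):

```python
def multiplier_from_percent(percent_faster: float) -> float:
    """Convert 'N% faster' into a speed multiplier (62 -> 1.62x)."""
    return 1.0 + percent_faster / 100.0


def percent_from_multiplier(multiplier: float) -> float:
    """Convert a speed multiplier into 'N% faster' (2.0x -> 100%)."""
    return (multiplier - 1.0) * 100.0


# The 62% figure quoted above, versus the "up to 2x" and 1.7x RT core claims.
print(round(multiplier_from_percent(62), 2))   # 1.62
print(round(percent_from_multiplier(2.0), 1))  # 100.0
print(round(percent_from_multiplier(1.7), 1))  # 70.0
```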


I just watched the old RTX 3000 reveal, and even back then they stated a 2x RT performance improvement over the RTX 2000 series.

I don't think we ever saw a 2x jump in the real world between the RTX 2000 and 3000 series in gaming with RT enabled, did we?

The 2x jump was really in benchmarks and games where rasterization is not as heavy, for example Q2RTX, Minecraft RTX and Serious Sam/Doom with RT.

I'm pretty sure the claim was that the 3080 would be 2x as fast as the 2080 (non-Ti). The Ampere RT core was claimed to be 1.7x faster than Turing's.

But please do link with a timestamp if they claimed 2x performance over the 2080 Ti.

Even worse than the highlighted part, look at the grate in the lower right corner: the 2nd beam from the bottom decided to fall apart.

Interesting...

It was very specifically the jump from the 2080 to the 3080 that was claimed to be up to 2x, but that was only in fully path-traced games. Otherwise it was 60% faster or so.

They were specific.

The Ampere launch video was never specific about which actual products they were comparing, or even whether they were comparing products at all as opposed to some more specific, abstract comparison (e.g. SM to SM).

Instead, the product page at launch used this more specific product comparison:

Well, I took relative performance to include ray tracing performance, since they said "up to" 2x faster.

Oh, we're referring to different charts.

I was referring to the one with the actual individual breakdowns much earlier in the presentation (since these are much more verifiable):

Do you have a timestamp for 2080 vs. 3080?

It's in my post above.