You asked my reasons and I explained them. Did you not understand? If you can't understand my posts then perhaps it's best if we end correspondence here.

Because there are no 40 series cards announced at prices of 30 series below 3080 12GB.

And yet you are comparing them to 3070 for some reason.


- Status

- Not open for further replies.

DegustatoR

Legend

I can't understand something which has no meaning. Nvidia can't "drive prices up" if they are still selling cards at those prices <$700 which you're seemingly okay with.

You asked my reasons and I explained them. Did you not understand? If you can't understand my posts then perhaps it's best if we end correspondence here.

4090 isn't more expensive than the top end cards in 30 series either.

neckthrough

Regular

Don’t need all that. Just look at performance.

Exactly. Focus on perf/power/features at iso-price or price at iso-perf vis-a-vis what else is available in the market and judge value for yourselves. No need to segfault your heads trying to deconstruct whatever 10-dimensional chess game the branding guys were playing.

Or you could compare at actual similar prices instead of randomly picked 30 series cards.

Some other thoughts:

DLSS3 is going to be an interesting factor in the value equation. Reconstruction has gone mainstream now, so if quality holds up for frame generation then it definitely adds to Ada's value. To what extent, we can only judge after independent analysis from DF et al.

In general I've been warning folks that rising silicon costs (way beyond inflation) are going to limit raw perf or perf/$ gains. This has been happening for the past couple of generations, but has been partially mitigated by fixed-function side-steps (RT) or clever hardware/software work-saving (reconstruction). We've seen some other one-time lifelines such as the cheaper SEC8 node. Those lifelines are running out.

Given those challenges I've been very pessimistic about pricing for this gen, especially for Nvidia because GeForce is still 50% of their bread and butter and they can't afford to take a big haircut on margins, unlike AMD or Intel who likely have more flexibility because dGPUs are effectively noise in their earnings charts. And so I wasn't too surprised by the 4080 prices but I was a little surprised by the 4090 pricing -- I was expecting it to come in higher. It's a big die on a premium node and it's actually cheaper than the inflation-adjusted launch price of the 3090 (price went up 6.7% while inflation was 14.4% from 2020 to 2022) while providing, what, +70% performance? That's *before* DLSS3. It's probably a small market segment, but for folks in that tier it seems to be a big upgrade all around.
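The inflation comparison in the post above can be checked with a few lines of arithmetic. This is a minimal sketch, not the poster's own calculation: the $1,499 and $1,599 launch MSRPs are assumed here (the post only gives the 6.7% and 14.4% figures), and the function name is made up for illustration.

```python
def price_change(old_price, new_price, inflation):
    """Return (nominal, real) price change as fractions.

    The real change compares the new price against the old price
    scaled up by cumulative inflation over the period.
    """
    nominal = new_price / old_price - 1.0
    real = new_price / (old_price * (1.0 + inflation)) - 1.0
    return nominal, real


# Assumed launch MSRPs (not stated in the post): 3090 = $1,499, 4090 = $1,599.
# The 14.4% cumulative inflation figure is taken from the post as-is.
nominal, real = price_change(1499.0, 1599.0, 0.144)
print(f"nominal change: {nominal:+.1%}")
print(f"real change:    {real:+.1%}")
```

Under those assumptions the nominal increase matches the 6.7% quoted, and the real change comes out negative, which is the point being made about the 4090 being cheaper in inflation-adjusted terms.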

So you still don't understand that people compare top end cards with top end cards. The 3070/3080/3090 were the high end Ampere cards at launch. The 4080/real 4080 and 4090 are the high end cards now. Nvidia has no 40x0 card in the $700 price range. So I am comparing what they announced.

I can't understand something which has no meaning. Nvidia can't "drive prices up" if they are still selling cards at those prices <$700 which you're seemingly okay with.

4090 isn't more expensive than the top end cards in 30 series either.

Since you can't understand it or just don't want to understand it, and quite frankly you're extremely condescending, I am just going to stop corresponding with you. Goodbye.

Flappy Pannus

Veteran

I disagree and I think Nvidia is investing the transistor budget in the right area. I don't care about raster. AT ALL. It's already enough.

I think it's not that people necessarily need 2x raster performance for the 3090 class cards, it's more that they want to see something closer to 3080/3090 class raster performance be delivered to the eventual 3070/3060ti/3060 class cards that Ada will address at some point - and delivering a 2X raster performance in the halo product would make something like that at least more likely. The significant disparity between the 4090 and 4080 in raster, and especially the 4080(cough) 12GB, indicates that this wish may not necessarily be fulfilled when those mainstream cards arrive in 2023.

Perhaps it was naivete to think a raster jump of 50%+ is possible for the mainstream segment with the slowing of process advancements, sure - but that class of cards is certainly bottlenecked by raster in many games still, and come mid 2023 we'll be approaching 3 years since Ampere. I sure as hell still see a need for faster raster in the $400-$500 segment, and the early (yes, early) indications are the improvements in that area might be very minor.

It would be one thing if the 4090 was like the 3090 of past - more of an 'extreme' 3080 and with a ton of added vram, but this time its specs are vastly superior to the 4080 all around, and the 4080 12GB in turn is vastly inferior as well to the point where it really shouldn't have 8 in that name at all. Those gargantuan specs of the 4090 are what's needed to get, so far, the top-end results of 70% raster - improvements rapidly drop off in the tiers below that.

DegustatoR

Legend

"Top end cards" is a couple of top dogs launched during the generation. In case of 30 series these are 3090 (original) and 3090Ti (late refresh). Neither 3080 nor 3070 are "top end cards".

So you still don't understand that people compare top end cards with top end cards.

You could compare 3080 12GB with 4080 12GB because they are launching at the same price. As for the rest - these comparisons are absolutely random.

I'm actually starting to feel like 4080 12GB was a conscious pun on those who compare cards by numbers in their names instead of prices and performance...

So you're comparing whatever you want basically for no other reason than to feel offended and be with these people on social media then who are also offended. Gotcha.

Nvidia has no 40x0 card in the $700 price range. So I am comparing what they announced.

Flappy Pannus

Veteran

I'm actually starting to feel like 4080 12GB was a conscious pun on those who compare cards by numbers in their names instead of prices and performance...

Or, you know, you could actually name your cards something sensical that actually reflects the difference in the product stack. I actually think it's perfectly fine to point out when a company purposefully obfuscates the differences in products in the name of marketing and criticize that.

Nvidia increased performance at the high end by driving prices of the cards up. The runt of the litter 12gig 4080 is $900. The 3080 launched at $700, the 3070 launched at $500. We have to see benchmarks and see how much faster the 4080 is over the 3080 and the 3070. We may end up in a situation where the $500-$700 cards offer almost no performance uplift over the previous generation. Couple that with what will surely be smaller vram pools and they could have issues when games switch over to ps5/xbox series titles.

I think it's not that people necessarily need 2x raster performance for the 3090 class cards, it's more that they want to see something closer to 3080/3090 class raster performance be delivered to the eventual 3070/3060ti/3060 class cards that Ada will address at some point - and delivering a 2X raster performance in the halo product would make something like that at least more likely. The significant disparity between the 4090 and 4080 in raster, and especially the 4080(cough) 12GB, indicates that this wish may not necessarily be fulfilled when those mainstream cards arrive in 2023.

Perhaps it was naivete to think a raster jump of 50%+ is possible for the mainstream segment with the slowing of process advancements, sure - but that class of cards is certainly bottlenecked by raster in many games still, and come mid 2023 we'll be approaching 3 years since Ampere. I sure as hell still see a need for faster raster in the $400-$500 segment, and the early (yes, early) indications are the improvements in that area might be very minor.

It would be one thing if the 4090 was like the 3090 of past - more of an 'extreme' 3080 and with a ton of added vram, but this time its specs are vastly superior to the 4080 all around, and the 4080 12GB in turn is vastly inferior as well to the point where it really shouldn't have 8 in that name at all. Those gargantuan specs of the 4090 are what's needed to get, so far, the top-end results of 70% raster - improvements rapidly drop off in the tiers below that.

DegustatoR

Legend

Seems like "12GB" is there for that. Or are we talking about those mythical GPU buyers who base their buying decisions solely on GPU name on the box?

Or, you know, you could actually name your cards something sensical that actually reflects the difference in the product stack.

Sure, me too. Not really seeing it here though. Would prefer for 16GB model to be called 4080Ti but whatever, doesn't change anything.

I actually think it's perfectly fine to point out when a company purposefully obfuscates the differences in products in the name of marketing and criticize that.

Flappy Pannus

Veteran

Nvidia increased performance at the high end by driving prices of the cards up. The runt of the litter 12gig 4080 is $900. The 3080 launched at $700, the 3070 launched at $500. We have to see benchmarks and see how much faster the 4080 is over the 3080 and the 3070. We may end up in a situation where the $500-$700 cards offer almost no performance uplift over the previous generation. Couple that with what will surely be smaller vram pools and they could have issues when games switch over to ps5/xbox series titles.

That is my concern, and that's what I'm focusing on - the price bracket, I don't care what naming scheme Nvidia eventually calls them. There is a lot riding on DLSS 3 it seems.

The 4080 having only 12GB at $900 is also a worry for the vram amount of cheaper cards. I can't see purchasing any GPU at even $400 in 2023 that doesn't have at least 12GB.

Flappy Pannus

Veteran

Seems like "12GB" is there for that. Or are we talking about those mythical GPU buyers who base their buying decisions solely on GPU name on the box?

Seems like 12GB is there to say the card has 12GB. If specs don't matter then why change the numbering scheme of any card and not just go by vram amounts? People would rightfully think 'wtf?' if the 3070 was named the 3080 8GB, and the gap in performance between those two cards is far less than the gap between the 4080 16GB and the 4080 12GB.

Sure, me too. Not really seeing it here though.

Yeah I figured.


It's odd to me. When Nvidia launched Ampere the high end cards were the 3090/3080/3070. They were all announced the same day. Nvidia has now launched Ada and put out a 4090/4080/4080 runt edition. I am comparing apples to apples here. The issue should be taken with Nvidia for almost doubling the price of the last gen cards with this new generation of cards.

Or, you know, you could actually name your cards something sensical that actually reflects the difference in the product stack. I actually think it's perfectly fine to point out when a company purposefully obfuscates the differences in products in the name of marketing and criticize that.

If I go to Chevy in 2018 and they have a Trax, Equinox and Traverse, and I buy an Equinox, and then 3 years later I go back and they have doubled the price of the Equinox, then taken the Trax, renamed it Equinox Little and priced it above what I paid for the Equinox, of course I am going to question wtf is going on. But apparently it's okay and normal to do in the graphics market.

I'm just a customer. I bought a 3080. I would assume that the 4080 would be its successor but the price has now doubled. What's more, instead of a 3070 and 3080 there are now two 4080s, and they have different specs, which confuses the situation even further, while the only change in the name is the amount of RAM, which further misleads consumers. Nvidia has had suffixes on cards before denoting decreased or increased performance over the stock brand, for instance Super or Ti. So why doesn't that exist now?

If people take offence to consumers pointing out the anti-consumer practices of a company then they should really take a good look at why they are upset and reevaluate some stuff.

That is my concern, and that's what I'm focusing on - the price bracket, I don't care what naming scheme Nvidia eventually calls them. There is a lot riding on DLSS 3 it seems.

The 4080 having only 12GB at $900 is also a worry for the vram amount of cheaper cards. I can't see purchasing any GPU at even $400 in 2023 that doesn't have at least 12GB.

DLSS 3 seems to be their only play. The reason for me to buy a new card would be to try and get the best performance out of upcoming games that I really want like Starfield. If the only way to get a decent upgrade in performance is to spend double what I spent on my current card then there isn't really too much to say. I would rather play games without DLSS as I want as pure of an image as I can get with no additional latency or artifacting going on.

I have a feeling that the lower end cards could end up with sub 10gig vram allocations. To be perfectly honest I think a $700 card from Nvidia will be within 10-15% of the 3080 and Nvidia will try and advertise DLSS 3 numbers to sway people over, which to me is really slimy.

gamervivek

Regular

The 4080 12GB naming debate is even funnier considering the 192-bit chip used to be used in xx60 cards for the past few gens, got promoted to 104 chip designation in this gen and finally got a double promotion with the xx80 card designation.

arandomguy

Veteran

I was under the impression Micron didn't make ECC GDDR6X, which would make it a non-starter for professional use anyway.

EDIT: The protocol has error correction for transmission, but nothing to detect or correct a bit flip on the memory chip

It looks like that is the case and nothing has changed. Ada workstation cards look like they will be using GDDR6.

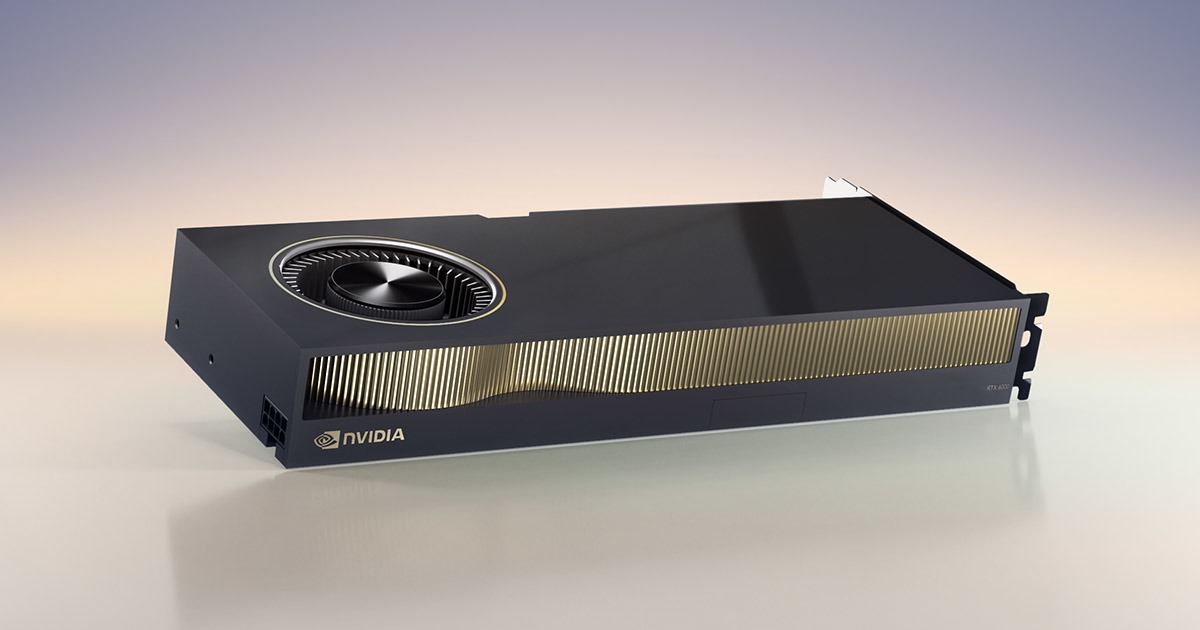

NVIDIA RTX 6000 Ada Generation Graphics Card

Powered by the NVIDIA Ada Lovelace Architecture.

www.nvidia.com

48GB GDDR6 with error-correcting code (ECC)

Personally I still find these unilateral memory standard pushes away from conventional GDDR interesting. Regardless if it's by Nvidia or AMD the results always seem at best "mixed" in terms of what they were trying to achieve.

Flappy Pannus

Veteran

DLSS 3 seems to be their only play. The reason for me to buy a new card would be to try and get the best performance out of upcoming games that I really want like Starfield. If the only way to get a decent upgrade in performance is to spend double what I spent on my current card then there isn't really too much to say. I would rather play games without DLSS as I want as pure of an image as I can get with no additional latency or artifacting going on.

I have a feeling that the lower end cards could end up with sub 10gig vram allocations. To be perfectly honest I think a $700 card from Nvidia will be within 10-15% of the 3080 and Nvidia will try and advertise DLSS 3 numbers to sway people over, which to me is really slimy.

Maybe. To counter some of my cynicism somewhat, I've seen some in other forums start claiming this is the signifier that PC gaming is dead, which I think is a bit hysterical, largely because I just don't think Ada's current pricing is necessarily an indicator of where Nvidia truly feels the mainstream is headed (they did refer to the $329 3060 as their 'mainstream card' in this very keynote after all). Maybe Jensen is this far up his own ass but I don't quite think so.

It's a perfect storm of factors leading to this imo: TSMC having everyone by the short and curlies, advanced process tech just being very difficult and restricted in capacity, inflation, and most importantly a massive backlog of Ampere cards due largely to crypto being crypto. It's priced precisely to not touch Ampere except with the slightest graze at the top of the head.

I think the one bright spot right now is the fact that the 3080/3090 class cards keep seeing price drops. Being primarily a PC gaming enthusiast I want PC hardware to be flying off the shelves naturally, but at these prices I think it's a good thing that they're having some trouble moving them. I have just never seen the market for this class of cards be that large without crypto pumping it up.

I am not foolish enough to believe we'll soon see the return of the $300 console-destroying segment (albeit that really hasn't been the case since the PS4 Pro/One X anyway), but I think this shows at least the pure insanity of the last 2 years is finally abating. So regardless of what Nvidia would like the 3070/3060 replacements to be in terms of price/performance, I don't really know if they can just jack up the price and expect it will sell. It's not just a case of AMD not bringing enough heat, there just isn't the disposable income out there like there has been.

Speaking of AMD btw, $559 ($20 rebate) 16GB 6800 XTs are now being sold. Yeah yeah, RT performance is bleh. But it does indicate the amount of pressure the channel is feeling atm. And hey, if you really value raster performance...

Your platform of choice will go first I'm sure.

Slow death of dGPUs confirmed.

Price-wise it certainly looks like it. Then again, x80 Ti has always been on the top gaming chip though IIRC.

I actually feel it's like this:

4080 12GB

4080 Ti 16GB

4090 24GB

Flappy Pannus

Veteran

Somewhat of an interesting take from another angle that focuses on the massive increase in tensor capacity and what that can bring.

Outside of the 4090 though, I'm not sure I accept the thesis that the target of these Ada models so far is really "Professional developer, occasional gamer", and I'm very skeptical of the last point in particular. I'm not sure the 4070 will actually be 'far, far different' than the lower tier 4080, especially if it actually has more graphics/compute cores - I think that would really upset new 4080 owners if in current games they are actually running at a deficit to the cheaper, lower tier card, just can't see that happening. I think these cards are designed how they are because that's where Nvidia has focused its talent and it's only natural they would leverage that into their gaming line, and the market now just means that's how they're going to price them for the time being.

Explanation: The 40XX series cards just announced are for professionals with gaming-related workloads, not actual gamers (yet)


Reddit User Idolhunter2 said: Heard lots of people yelling at NVIDIA for everything from the pricing, to the two 4080's with one being a re-marketed 4070 to slap on a higher MSRP, and sure, this entirely may be true, but here's my take as an actual software developer that creates the AI models & software that companies and apps use millions of times a day to make your lives easier.

GeForce is undoubtedly a gaming brand, which makes sense, it always has been. The thing is it has always just been a consumer gaming brand. The new 40XX series cards announced last night are not for consumer gamers, rather they are for professionals that have gaming somewhere in their professional workflow, whether it is actual gaming, or gaming technology for different applications.

Lots of things between the AI industry and gaming have been merged and combined over the years where it's all sort of mixed on why gaming cards are being used for deep learning and vice versa. Thing is, gaming uses the same calculations that deep learning does, but the difference here is that deep learning does it in parallel over multiple systems to train models.

Reddit User Idolhunter2 said: The true power of the 40XX series cards that were announced were the AI/Tensor cores. The 3090 Ti has a computing capacity for deep learning of 40 TFLOP/s for single precision, assuming we compare that to the FP8 we saw in the livestream for the 40XX series, that's 80 TFLOP/s for FP8 performance.

The base 4090, non overclocked, reference card has ~17.5x more AI compute than the best 3090 Ti on the market. Yes, upwards of 17.5x more AI compute, it has a total of ~1.4 PFLOP/s (1,400 TFLOP/s) FP8 performance.

Reddit User Idolhunter2 said: On top of that, they are releasing an entire database of pre-made & already verified ground truth data in NVIDIA Omniverse, which will make it far easier for your average Joe to make datasets in the size of terabytes of synthetic, high quality data to train new cutting edge models on, or fine tune already made models for specific tasks. I don't know the exact number of ground truth model data in NVIDIA Omniverse, but the show last night mentioned ~300 million open source pieces of data already in the Omniverse marketplace/library, with premade apps that are free for public use and ready to download and use.

And I can confirm, they are high quality models + data, I checked out the app last night during the event and honestly was blown away by some of the template apps they give you as tutorials, with one of them being a fully synthetic automatically re-made area of New York City from over 1 billion lidar points, with all materials being automatically created, generated & applied from open source street views, google maps, etc. It's actually insane, the models you can train using this tool are honestly going to be light years ahead of cutting edge research right now. Oh and yeah, the RacerX demo is available on there too, and it makes the 3090 cry tears that evaporate by its own heat while it barely is able to keep up with FPS like it's walking through quicksand. So the fact that a single 40XX card can run that simulation in real time, with a recording taking place, and also possible overheads for OS, other apps, etc, is more than amazing, it marks an entirely new age of technological advancements.

Reddit User Idolhunter2 said: I'm sure we will be getting more gaming-focused, and more price-friendly GPUs meant for the wider community sometime in November, and this includes the 3070, which is going to be far, far different than the lower tier 4080 we saw in the presentation (Less enabled AI Cores + Lower AI Core frequency, but more activated graphics/compute cores for gaming performance and increased frequency on those cores, with less of a demand on memory bandwidth, but rather a focus on memory latency).
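The "~17.5x" figure in the quoted Reddit post follows directly from the two FP8 numbers it gives. A quick sanity check, using only the figures as stated in that post (they are not independently verified here, and peak throughput numbers like these may not be measured under identical conditions); the variable names are illustrative:

```python
# Figures as stated in the quoted post, in TFLOP/s (not verified here).
fp8_3090ti = 80.0    # the post's FP8-equivalent estimate for the 3090 Ti
fp8_4090 = 1400.0    # the ~1.4 PFLOP/s the post attributes to the 4090

ratio = fp8_4090 / fp8_3090ti
print(f"claimed AI compute ratio: {ratio:.1f}x")
```

So the multiplier is internally consistent with the post's own inputs; whether those inputs are a fair like-for-like comparison is a separate question.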

DavidGraham

Veteran

DLSS 3 seems to be adding about 50%~60% more performance. However, in Cyberpunk, NVIDIA showed the 4090 to be 4 times faster than the 3090Ti; here the 4090 is running DLSS3, while the 3090Ti is running DLSS Performance (effectively @1080p). Raw performance must be very high here.

Then the raw performance increase is also around 50%

Digital Foundry also shows the 4090 DLSS3 250% faster than 3090Ti DLSS Performance.

So where is the massive difference coming from? The 4090 should be at least twice as fast as the 3090Ti in this game, raw performance to raw performance.
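One way to frame the question is to divide the headline speedup by the gain attributed to frame generation. The sketch below assumes the gains combine multiplicatively and reads "250% faster" as 3.5x; both are assumptions, as is the helper name. Whatever remains still bundles raw performance with any difference in upscaling settings.

```python
def implied_speedup_without_frame_gen(total_speedup, frame_gen_gain):
    """Divide out the frame generation gain from a headline speedup."""
    return total_speedup / (1.0 + frame_gen_gain)


claims = {
    "NVIDIA slide (4x)": 4.0,
    "Digital Foundry (250% faster, read as 3.5x)": 3.5,
}

results = {}
for label, total in claims.items():
    # The post estimates DLSS 3 adds roughly 50% to 60%.
    low = implied_speedup_without_frame_gen(total, 0.60)
    high = implied_speedup_without_frame_gen(total, 0.50)
    results[label] = (low, high)
    print(f"{label}: {low:.2f}x to {high:.2f}x before frame generation")
```

Under these assumptions both claims leave a residual speedup above 2x, which lines up with the "at least twice as fast" estimate in the post.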
