Subtlesnake

Regular

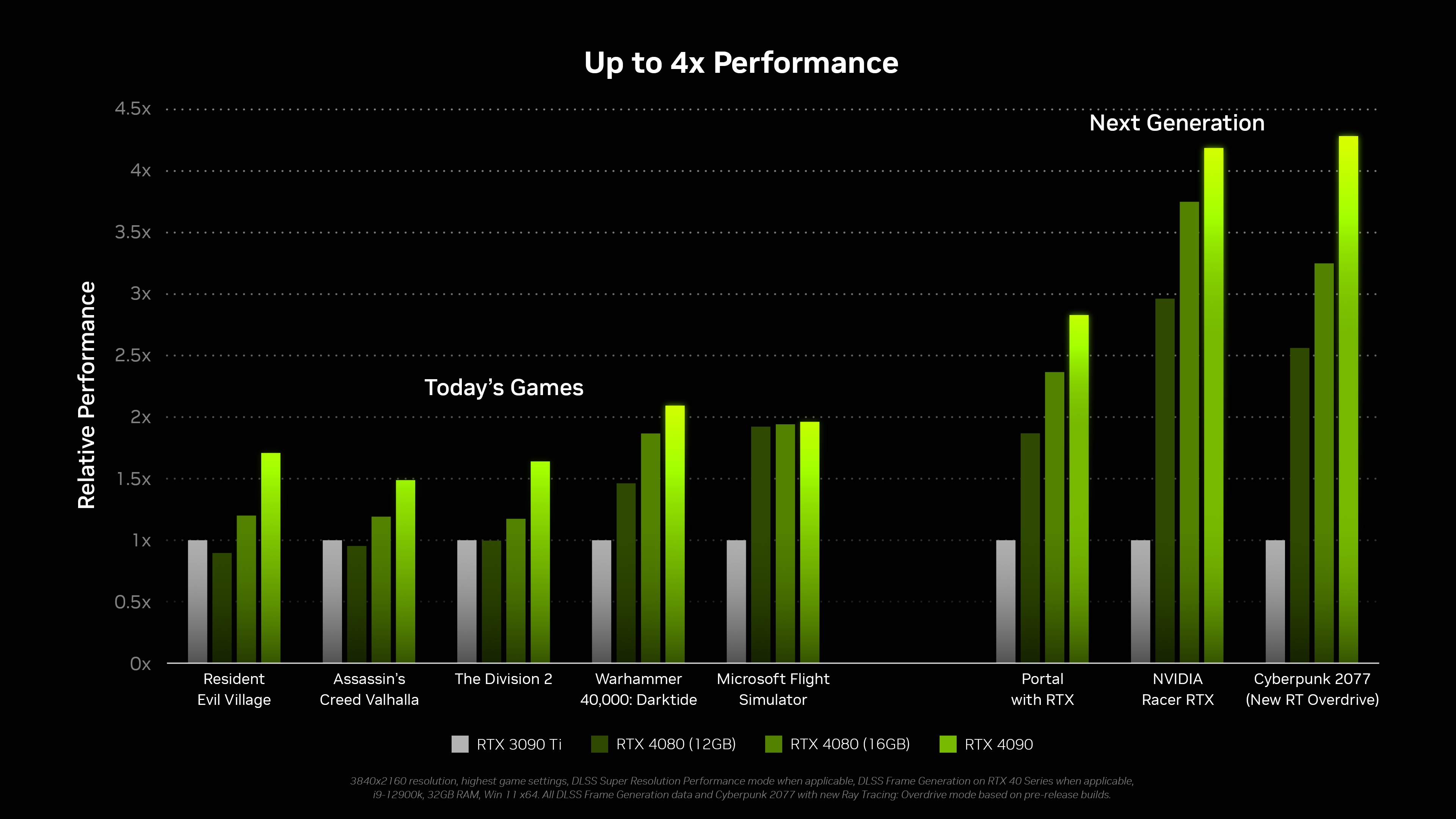

If the 3080 were more than 2X faster than the 2080 in ray tracing workloads, its relative performance would have been advertised as "up to" more than 2X faster. The fact that 2X is the upper limit implies that it is also the limit for ray tracing performance. And that's what testing confirmed.

That's not what we're talking about, though. We're discussing the performance uplift in pure ray tracing loads, not in games in general.

And for RT performance, they didn't specifically compare the two cards.