Wiizard


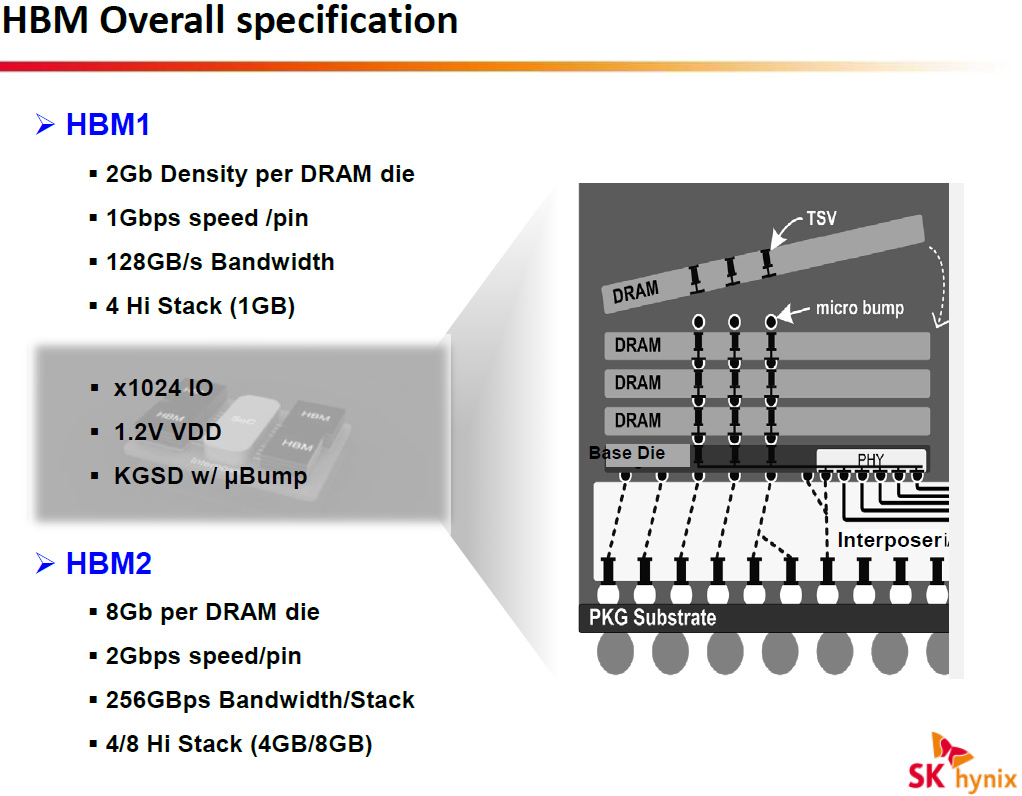


HBM2 isn't much of a worry either. Since the interposer would be small, you could get away with a single stack (256 GB/s, max 8 GB) and avoid driving any off-interposer DRAM at all.
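For reference, the 256 GB/s figure falls out of the stack's interface width times its per-pin data rate. The sketch below assumes the 2.0 Gb/s per-pin rate of top-bin HBM2 parts; the function name is just for illustration.

```python
# Peak bandwidth of one HBM stack:
# interface width (bits) x per-pin data rate (Gb/s) / 8 bits per byte.
# Assumes HBM2 at 2.0 Gb/s per pin by default.
def hbm_stack_bandwidth_gbs(bus_width_bits: int = 1024, pin_rate_gbps: float = 2.0) -> float:
    """Peak bandwidth in GB/s for a single HBM stack."""
    return bus_width_bits * pin_rate_gbps / 8

single = hbm_stack_bandwidth_gbs()
print(f"1 stack:  {single:.0f} GB/s")      # 1024 * 2.0 / 8 = 256 GB/s
print(f"2 stacks: {2 * single:.0f} GB/s")  # two stacks double the peak
```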

Hm... actually, I wonder if it'd be a better use of the interposer area to have two stacks, e.g. take the Fiji turtle layout and chop the sorry ninja in half from head to butt, so you have an arm and a leg on one side of the chip.

----

I suppose HBM is designed around a 1024-bit interface per stack, but the stacks can also be downclocked significantly according to what the APU demands.
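A rough way to see the downclocking point: with the width fixed at 1024 bits, bandwidth scales with the per-pin rate, so you only need to clock the stack as fast as the APU's demand requires. This is an idealized sketch assuming linear scaling and a 2.0 Gb/s cap; real parts run at discrete clock steps, and the demand figures below are hypothetical.

```python
# Idealized sketch: a 1024-bit HBM stack's bandwidth scales linearly with
# the per-pin data rate, so it can be clocked down to what the APU needs.
BUS_WIDTH_BITS = 1024  # HBM interface width per stack

def stack_bandwidth_gbs(pin_rate_gbps: float) -> float:
    """Bandwidth in GB/s at a given per-pin rate in Gb/s."""
    return BUS_WIDTH_BITS * pin_rate_gbps / 8

def pin_rate_for_demand(demand_gbs: float, max_pin_rate_gbps: float = 2.0) -> float:
    """Lowest per-pin rate (Gb/s) that meets demand_gbs (assumed 2.0 Gb/s cap)."""
    needed = demand_gbs * 8 / BUS_WIDTH_BITS
    if needed > max_pin_rate_gbps:
        raise ValueError("demand exceeds one stack's peak bandwidth")
    return needed

for demand in (64, 128, 256):  # hypothetical APU bandwidth demands in GB/s
    rate = pin_rate_for_demand(demand)
    print(f"{demand:>3} GB/s -> {rate:.2f} Gb/s per pin")
```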

-----

By 2017, HBM would probably be inexpensive enough for the likes of Nintendo.