Or neither.

This might be the SuborZ follow-up with double the specs + HDD.

I still believe Gonzalo = PS5.

Or neither.

My opinion: a Gonzalo revision. The Scarlett video clearly showed a 320-bit bus and 10 GDDR6 chips, while this one features 16.

With the reduction in L3 cache, I am pretty sure we have a clear picture of the PS5 and its design philosophy.

IMO we can expect 16GB of very fast RAM (downclocked 18Gbps chips, as the PCB leak suggested) on a 256-bit bus, plus a cut-down desktop Zen 2 and Navi XT with RT hardware.
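As a rough sanity check on the numbers being thrown around, peak GDDR6 bandwidth is just bus width times per-pin data rate, divided by 8. A minimal sketch: the 16 Gbps value for the "downclocked" case is purely an illustrative assumption, since the leak gives no actual clock, and `peak_bandwidth_gbs` is a made-up helper name.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak theoretical bandwidth in GB/s (bits per second across the bus / 8)."""
    return bus_width_bits * data_rate_gbps / 8


# 256-bit bus with 18 Gbps chips running at full rated speed
full_speed = peak_bandwidth_gbs(256, 18)

# Same bus, chips downclocked to a hypothetical 16 Gbps (assumption, not from the leak)
downclocked = peak_bandwidth_gbs(256, 16)

# 320-bit bus with 14 Gbps chips, the combination discussed for the Scarlett video
scarlett_like = peak_bandwidth_gbs(320, 14)

print(full_speed, downclocked, scarlett_like)
```

These are theoretical peaks only; real-world throughput on a shared CPU/GPU pool would be lower.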

Gonzalo is probably over 9 TF, and why do you expect performance above the RX 5700 XT in next-gen consoles? If the RX 5700 XT were rated at 14 TF (it is 1.14x faster than the 12.58 TF Vega 64) and had the same performance, would you be happy?
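For reference, the 14 TF comparison is simply the Vega 64 figure scaled by the claimed performance ratio. A tiny sketch using only the two numbers quoted in the post (both are the poster's figures, not verified here):

```python
VEGA64_TFLOPS = 12.58   # Vega 64 figure as quoted in the post
RELATIVE_PERF = 1.14    # claimed RX 5700 XT speedup over Vega 64

# TFLOPS a Vega-style GPU would need to match the RX 5700 XT at that ratio
vega_equivalent_tflops = VEGA64_TFLOPS * RELATIVE_PERF

print(f"{vega_equivalent_tflops:.2f} Vega-equivalent TF")
```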

Gonzalo's 8 TFLOPS is not sufficient for 4K gaming at 60 fps with raytracing effects, and a 256-bit bus with a UMA design would be the same bottleneck design as on the current PS4. So clearly no real generational leap and no innovative tech; I cannot believe this. 16 GB of RAM is not enough.

The Subor team was disbanded earlier this year.

I definitely feel that 16GB ram, a Zen 2 CPU and the SSD/NVMe solutions are more than a generational leap, IMHO.

You can't realistically have more than a generational leap as leaps are exponential. You can have a small leap, or a large leap, or no leap, but more than one generation would be 1.5x or 2x leap, meaning instead of a 10x increase in console power, something like a 50x or 100x increase in power. At the same price-point in the same time-frame, that's effectively impossible.
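To put numbers on the "leaps are exponential" point: if one generation is taken as roughly a 10x increase (the figure used above), then fractional generations compound as a power of 10. A minimal sketch under that assumption, with `leap_multiplier` as a made-up helper name:

```python
PER_GENERATION = 10.0  # assumed ~10x power increase per generation (figure from the post)


def leap_multiplier(generations: float) -> float:
    """Compounded power increase after the given number of generations."""
    return PER_GENERATION ** generations


one_gen = leap_multiplier(1)
one_and_a_half_gen = leap_multiplier(1.5)
two_gen = leap_multiplier(2)

print(one_gen, round(one_and_a_half_gen, 1), two_gen)
```

Strict compounding puts 1.5 generations at roughly 32x rather than 50x, but the conclusion is the same: anything beyond a single generation means a multiplier far outside what one price point and time frame can deliver.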

The listed specs are very much generational in scope. Plenty of folk will argue that they are pretty anemic as far as generational advances go!

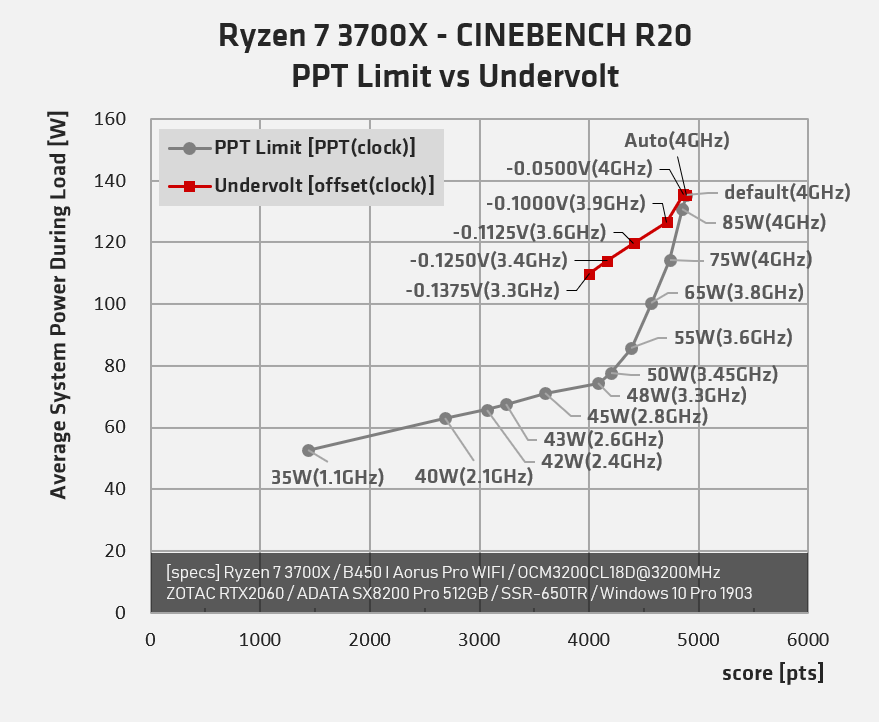

Should shave a few watts off if cache is indeed only 8MB L3 and not needing to drive I/O to an I/O die.

Another "framework for discussion" kind of post. I came across an interesting graph of Zen 2 frequency vs. power draw. While I think you should dismiss the average system power under load number, the frequency at which you hit the (too pronounced?) knee is probably pretty valid assuming the same process being used. Which in turn would imply at least a 3 GHz CPU clock speed in an upcoming console. Even a Zen 2 core, and the consoles may use an improved version, is pretty damn performant at those frequencies. If you were a PC gamer that wanted twice the frame rate of a 30Hz console game that is CPU limited, you might have to fiddle around a bit with settings since just buying a twice as performant CPU might be impossible for a good long time to come (as such a limitation is unlikely to be when running all cores full out, so throwing more cores at the problem won’t help).

At the end points there are several possibilities:

I've been thinking about the Xbox Scarlett teaser video and in particular the Samsung 14Gb/s GDDR6 memory chip part numbers.

It seems there is a mix between 1GB and 2GB chips, although what I'm struggling to understand is how such a setup can work?

Can such a configuration be used to provide a unified memory pool (assuming that's their objective) in a way that is transparent to the application/developer?

2 x 8Gb + 8 x 16Gb = 14GB Game + 4GB OS.

Memory controller headaches.

Maybe in a console environment the intent might be to sneak the usual OS reservation onto the chips with higher capacity, while the GameOS only sees the baseline memory capacity per chip?

e.g.

10 chips

8 x 8Gb + 2 x 16Gb = 10GB Game + 2GB OS

6 x 8Gb + 4 x 16Gb = 10GB Game + 4GB OS

4 x 8Gb + 6 x 16Gb = 10GB Game + 6GB OS

the game addresses 1GB per chip, the OS reserves the extra GB on each higher capacity chip.

----------------

^^ insanity++++
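The per-chip split in the 10-chip examples above can be sketched as a small calculation. This only models the bookkeeping (game sees 1GB on every chip, OS takes the extra 1GB on each 16Gb chip); it says nothing about how a real memory controller would interleave addresses, and `split_memory` is a made-up helper name.

```python
from typing import NamedTuple

GB_PER_8GBIT_CHIP = 1   # an 8Gb chip holds 1GB
GB_PER_16GBIT_CHIP = 2  # a 16Gb chip holds 2GB


class MemorySplit(NamedTuple):
    game_gb: int
    os_gb: int


def split_memory(small_chips: int, large_chips: int) -> MemorySplit:
    """Game addresses the baseline 1GB on every chip; OS reserves the
    extra capacity on each higher-capacity chip."""
    total_chips = small_chips + large_chips
    game_gb = total_chips * GB_PER_8GBIT_CHIP
    os_gb = large_chips * (GB_PER_16GBIT_CHIP - GB_PER_8GBIT_CHIP)
    return MemorySplit(game_gb, os_gb)


for small, large in [(8, 2), (6, 4), (4, 6)]:
    s = split_memory(small, large)
    print(f"{small} x 8Gb + {large} x 16Gb = {s.game_gb}GB Game + {s.os_gb}GB OS")
```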

A forgery on behalf of MS to throw us off what it could be.

While unlikely, IMO, a possibility is that they saw how much information people derived from the Project Scorpio reveal and didn't want people to derive the same amount of information from the Project Scarlett reveal. Plus, final specs and configuration may still be somewhat fluid.

Regards,

SB

I agree. They went to extra lengths to hide the mobo in subsequent real-life shots. Why do it if it's 1:1 with the render?