upnorthsox

Veteran

Woah, we're halfway there

oh you're an optimist.

Woah, livin' on a prayer

Take my hand, we'll make it I swear

Woah, livin' on a prayer

That's exactly what's happening

You think PS5 could be RDNA 1, or some hybrid still?

How different could they be, though? Seriously. AMD are, by the most obvious parsing of the quote since it is part of a larger statement on AMD's support for raytracing, providing the solution for both. Why would they come up with two different solutions to the same problem?

Now what if Sony, not having the same infrastructure, used an ASIC, a bit Nvidia style, to complement RDNA 2,

not the real answer but:

Wouldn't that require obscene amounts of bandwidth to ship the ray tracing data between GPU and ASIC, especially at 120 Hz?

MS's RT was crap and failed and they needed AMD's to work, whereas Sony's is super awesome and better than everyone else's... obvs.

To counterpoint that, Microsoft has also been doing RT R&D since that time and has patents too, so why would they opt for AMD's solution instead? To make it easier for developers and be able to support it on the PC, is that maybe why?

Does it though? An expansive outdoor scene is largely indifferent to the effects of your locality, especially with regards to lighting. The success or failure will be in the ability/quality of blending your local sphere (and others) with the greater scene.

Wouldn't that require obscene amounts of bandwidth to ship the ray tracing data between GPU and ASIC, especially at 120 Hz?

That might be taking it too far.

MS's RT was crap and failed and they needed AMD's to work, whereas Sony's is super awesome and better than everyone else's... obvs.

Unfortunately Nvidia is only a single data point in an entirely new rendering method. We've never had an RT performance shootout, so I'm not sure if those numbers even apply.

Nvidia, with RT, takes a performance hit of 40%, staying at max at 1440p 60 Hz... Why are you assuming these consoles' RT can go to 4K 120 Hz?

And will 120 Hz be used besides VR?

How big is the market for 4K 120 Hz TVs? In single digits, 0% should not be far off!

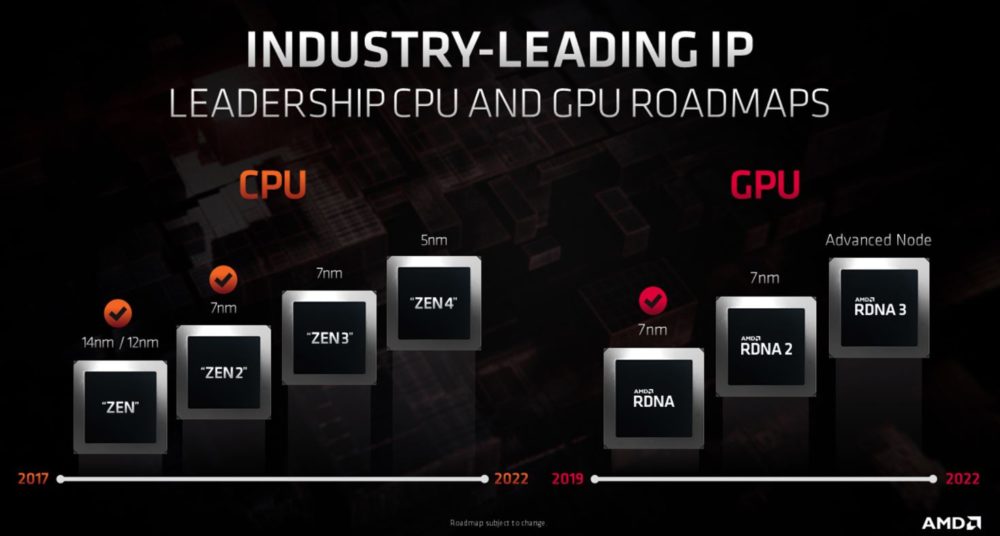

We won't know until they are ready to reveal/announce RDNA 2.0 cards.

Good point. But AMD's roadmap doesn't seem to show high-performance RT when it says "Selected effects" for RDNA 2 and leaves full-scene RT for the cloud.

Chris, did Sony have two patents for SSD or one? I saw one with DRAM and another with SRAM.

I'm not sure if it is a full departure or not, people will probably debate it. But it definitely has to be a big improvement, if only on the power/heat/frequency side.

RDNA 2.0 is a full departure from GCN.

This is the reason why I was riding the whole 1.9 thing so badly.

How is that a counterpoint? MS came up with DXR without AMD's solution. Everything points to AMD being late to the party here; it wouldn't be surprising for no one to be following AMD's lead, as they are not leading anything. Why are they using AMD's solution? Cost would be my most likely reason. That may be the determining reason for Sony too. We'll see, maybe as soon as today.