mckmas8808

Legend

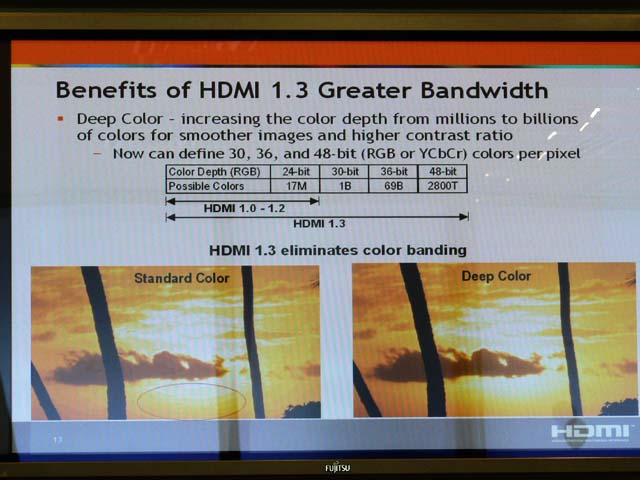

> Um, nope. The physical screen in an LCD is 8 bits per channel, meaning the liquid crystals themselves are only capable of that much. That's as of the last time I checked, of course. It might have gone up a bit; I heard it would eventually reach 12 bits, but I'm not sure that has happened yet.
>
> Often, to get response times down (to reduce the motion blur LCDs have problems with), manufacturers use 6-bit panels. That's silly, because those sets still have motion blur, only now with even fewer colours displayed.
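The colour counts in the quote follow directly from the bit depth. Each channel has 2^bits levels, and an RGB pixel has that number cubed. Below is a small Python sketch of the arithmetic for the 6-, 8- and 12-bit panels mentioned. The function names are only illustrative.

```python
def levels_per_channel(bits: int) -> int:
    """Distinct intensity levels a single channel with `bits` bits can show."""
    return 2 ** bits


def total_colours(bits_per_channel: int, channels: int = 3) -> int:
    """Distinct colours when each of `channels` channels has `bits_per_channel` bits."""
    return levels_per_channel(bits_per_channel) ** channels


if __name__ == "__main__":
    for bits in (6, 8, 12):
        print(f"{bits}-bit panel: {levels_per_channel(bits)} levels/channel, "
              f"{total_colours(bits):,} colours")
```

On a 6-bit panel each channel has 64 levels instead of 256, so the RGB total falls from about 16.7 million colours to 262,144.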

So you're saying that people playing a game with FP10 HDR (10 bits per colour channel) on a 360 are only getting 8 bits of that HDR? Confused I am.
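Here is one way to picture the question. The sketch below takes high-dynamic-range values and squeezes them onto a panel that shows 8 bits per channel. It assumes a simple Reinhard tone-map (x / (1 + x)) followed by rounding to an 8-bit code. That operator is an illustrative choice, not a claim about what any 360 game actually does, and the helper names are hypothetical.

```python
def reinhard(x: float) -> float:
    """Simple Reinhard tone-map (illustrative assumption): maps [0, inf) into [0, 1)."""
    return x / (1.0 + x)


def to_8bit(v: float) -> int:
    """Clamp a value to [0, 1] and quantise it to an 8-bit code (0..255)."""
    v = min(max(v, 0.0), 1.0)
    return round(v * 255)


if __name__ == "__main__":
    # Hypothetical scene brightnesses, from dim to very bright.
    hdr_values = [0.05, 0.5, 1.0, 4.0, 16.0, 200.0, 250.0]
    codes = [to_8bit(reinhard(x)) for x in hdr_values]
    print(codes)
```

Under these assumptions, very bright values such as 200.0 and 250.0 land on the same 8-bit code. The extra range is compressed into the 256 levels per channel the panel can show.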