DegustatoR

Legend

I don't see anything in particular being "better" there. Top performing cards in 1080p are likely completely CPU limited there.

> I don't see anything in particular being "better" there. Top performing cards in 1080p are likely completely CPU limited there.

If it was a CPU bottleneck then performance should keep scaling beyond the i3 10100 since there are far stronger CPUs out there but that is not the behaviour that we're seeing with the CPU results ...

> If it was a CPU bottleneck then performance should keep scaling beyond the i3 10100 since there are far stronger CPUs out there but that is not the behaviour that we're seeing with the CPU results ...

Their CPU results should be taken with a huge grain of salt (the GPU results too, but to a lesser degree). But even if they are accurate, they don't tell us anything about how CPU limited the 7900XTX is, and if there is a CPU limit, AMD h/w tends to do better with it in D3D12, which explains the results you see at low resolutions.
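As a rough illustration of the bottleneck argument in the two posts above, here is a minimal sketch assuming the simplest possible model: the delivered frame rate is whichever of the CPU-side and GPU-side caps is lower. The function names and all numbers are hypothetical and are not taken from any review.

```python
def delivered_fps(cpu_fps: float, gpu_fps: float) -> float:
    """Frame rate under a simple model: the slower stage sets the pace."""
    return min(cpu_fps, gpu_fps)


def is_cpu_limited(cpu_fps: float, gpu_fps: float) -> bool:
    """True when the CPU is the stage holding the frame rate back."""
    return cpu_fps < gpu_fps


# Hypothetical caps, not measurements from any benchmark.
GPU_CAP_FPS = 150.0
cpu_caps = {"slow CPU": 90.0, "mid CPU": 120.0, "fast CPU": 200.0}

for name, cpu_fps in cpu_caps.items():
    fps = delivered_fps(cpu_fps, GPU_CAP_FPS)
    limit = "CPU" if is_cpu_limited(cpu_fps, GPU_CAP_FPS) else "GPU"
    print(f"{name}: {fps:.0f} fps ({limit} limited)")
```

Under this model, results that stop improving with faster CPUs point to a GPU-side limit, while results that keep improving point to a CPU-side limit. Real games are messier (driver overhead differs per vendor and API), so treat it as a reading aid only.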

> The common denominator in performance behaviour between the graphics vendors of various UE5 titles appears to be virtual shadow mapping ...

Or a million of other things which the developers using the engine can optimize or change differently depending on their target platforms, sponsorship agreements and general knowledge.

> A more solid winning formula is for them to push UE5 developers into using virtual shadow maps ...

I think one of the Remnant 2 updates added an option to disable VSM; you could test your theory there.

> It seems like the relationship between Remnant 2/Immortals and why Lords of the Fallen has a somewhat mediocre showing on AMD might be down to the latter not using virtual shadow mapping. A more solid winning formula is for them to push UE5 developers into using virtual shadow maps ...

Or you are basing your theory on a sample of two: Remnant 2 (which lacks Lumen) and Immortals (which is funded by AMD), against a sample of 6 (The Lords of the Fallen, The Talos Principle 2, Fort Solis, Fortnite, Desordre and Jusant). All of these games use Virtual Shadow Maps except for The Lords of The Fallen. So your theory doesn't hold up against hard data.

> Or you are basing your theory on a sample of two: Remnant 2 (which lacks Lumen) and Immortals (which is funded by AMD), against a sample of 6 (The Lords of the Fallen, The Talos Principle 2, Fort Solis, Fortnite, Desordre and Jusant). All of these games use Virtual Shadow Maps except for The Lords of The Fallen. So your theory doesn't hold up against hard data.

Nobody cares about junk like Fort Solis and it'll be forgotten into oblivion just like that recent Lord of the Rings game you brought up before. Also the developers haven't explicitly disclosed whether they use VSM in Jusant. Desordre is a single person using an RTX fork of UE5, which sure as hell isn't at all representative of how most UE5 content is developed ...

Additional data points include the game Satisfactory, in which you can disable and enable Lumen at will, with Lumen disabled the 7900XTX has the upper hand over the 4080, but once Lumen is enabled, the table turns. Even the gap between the 4090 and 7900XTX grows larger, from 28% with Lumen off to 57% with Lumen on.

Layers of Fear is another data point as well, but with Hardware Lumen.
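For readers checking figures like the 28% and 57% gaps quoted above, this is a minimal sketch of how such a percentage lead is usually derived from two average frame rates. The helper name and the sample frame rates are hypothetical, chosen only to reproduce the quoted percentages; they are not the measured Satisfactory results.

```python
def lead_percent(faster_fps: float, slower_fps: float) -> float:
    """Percentage by which faster_fps exceeds slower_fps."""
    if slower_fps <= 0:
        raise ValueError("slower_fps must be positive")
    return (faster_fps / slower_fps - 1.0) * 100.0


# Hypothetical frame rates picked to match the quoted gaps.
lumen_off = lead_percent(128.0, 100.0)  # a 28% lead
lumen_on = lead_percent(157.0, 100.0)   # a 57% lead

print(f"Lumen off: {lumen_off:.0f}% lead")
print(f"Lumen on:  {lumen_on:.0f}% lead")
```

The same helper relates "Nx faster" statements to percentages: a card that is 2.2x as fast has a 120% lead.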

> Nobody cares about junk like Fort Solis and it'll be forgotten into oblivion just like that recent Lord of the Rings game you brought up before

You were the one who brought Lords of the Fallen into this thread, no one else did.

> I suspect more good things to come in the future and sweeter too as well since none of the bigger UE5 projects (not even Lords of the Fallen) has used HW ray tracing so far

So far, none of your predictions have come true, so I wouldn't bet on it. Half of the released Lumen UE5 games so far are using HW Lumen: Fortnite, Layers of Fear, Desordre, Immortals are planning a HW Lumen update, there is also The Fabled Woods and Ant Ausventure.

> You were the one who brought Lords of the Fallen in this thread, no one else did.

You brought up Gollum just to put the vendor you seethe in a really bad light. Nothing more to be said ...

> By your logic, Immortals of Aveum is a shitty game as well, a low effort AA title that no one plays or cares for. Remnant 2 isn't using an important UE5 feature (Lumen), so should be discarded as well. So what are you arguing about again?

Immortals didn't sell well but it was a decent AAA game. Meanwhile Fort Solis is about as good as Redfall from this year so why is the former more worthy than the latter for testing purposes ?

> So far, none of your predictions have come true, so I wouldn't bet on it. Half of the released Lumen UE5 games so far are using HW Lumen: Fortnite, Layers of Fear, Desordre, Immortals are planning a HW Lumen update, there is also The Fabled Woods and Ant Ausventure.

Neither Layers of Fear nor Desordre are AAA projects. The developers behind Immortals did not specifically promise a HW Lumen update ...

> Epic is extensively optimizing HW Lumen to work with Nanite for RT shadows and GI, they also want to increase performance on more capable hardware. So I expect RT capable GPUs to increase their performance tremendously once that happens.

How can Epic even further optimize HW Lumen if they refuse to use vendor specific extensions like SER, OMM, DMM while there's no sign of Microsoft standardizing more DXR functionality ?

> You brought up Gollum just to put the vendor you seethe in a really bad light. Nothing more to be said

It's a game just like any other. I bring Gollum in the context of RT enabled titles. We are not reviewers here, we evaluate games based on their tech, not review scores. You brought Lords of the Fallen to the discussion thinking the 7900XTX will have a large lead over the 4080 in this game, you were wrong, just like when you were wrong when you brought Assassin's Creed Mirage to the discussion for that very same purpose. Now you are discarding them because they no longer fit your criteria. Be consistent with your own methodology.

> Meanwhile Fort Solis is about as good as Redfall from this year so why is the former more worthy than the latter for testing purposes ?

Again, this is your subjective opinion.

> At least Remnant 2 is a new project and managed to include at least one more major graphical feature (Nanite & VSM) while you keep harping on about a lighting system update for last generation assets as a realistic showcase of the engine. It's almost as bad as legitimizing those RTX remix remasters as real world content ...

This is a tech forum, we harp about visual and technical upgrades to our games, not about checklist features or review scores.

> The developers behind Immortals did not specifically promise a HW lumen update ...

Yes they did, they even detailed the process.

The biggest change from software to hardware Lumen is that it goes from 200m to 1000m. There's a surprising secondary problem when you start adding light sources from five times the distance away, which is you're now getting reflections of lights from five times the distance away. Lumen really, really likes to handle reflections on everything, and we have a lot of lights, so now you start playing with other parameters to stop having too many reflections on all your shiny materials. Julia's team loves shiny materials, we have a lot, so it's a constant balance between having too few and too many reflections. If you optimise in the wrong direction, it either becomes muddled or it becomes like a glitter sparkle fest, neither of which are awesome. So we're threading the needle really tightly to [balance frame-rate and visual fidelity]. That requires a lot of testing, and you can't break other things while doing that.

Our current min spec is a RX 5700 XT and RTX 2080. I assume the updated specs are somewhere in people's hands; we've actually been able to lower minimum specs a little. We've been pushing really hard to drop that down well outside of anything that supports RTX. The side effect of that, obviously, is we need to make sure that software [Lumen] on PC does not go bad as we begin to optimise for hardware [Lumen].

> How can Epic even further optimize HW Lumen if they refuse to use vendor specific extensions like SER, OMM

That's their plan, they announced it here. They specifically talk about enabling 60fps for Hardware Lumen on consoles, and enabling using Ray Traced shadows with Nanite.

> How can Epic even further optimize HW Lumen if they refuse to use vendor specific extensions like SER, OMM, DMM while there's no sign of Microsoft standardizing more DXR functionality ?

Maybe they should just use modern features. But then a console developer cares only about consoles. And when the graphics IP is 10 years old we only get outdated solutions for modern problems.

> It's a game just like any other. I bring Gollum in the context of RT enabled titles. We are not reviewers here, we evaluate games based on their tech, not review scores. You brought Lords of the Fallen to the discussion thinking the 7900XTX will have a large lead over the 4080 in this game, you were wrong, just like when you were wrong when you brought Assassin's Creed Mirage to the discussion for that very same purpose. Now you are discarding them because they no longer fit your criteria. Be consistent with your own methodology.

@Bold It really isn't and even technical media tries to stay relevant by covering more pertinent material. Most of them aren't going to go out of their way to consistently benchmark purely ray traced content or the games you desire included in the set ...

> Again, this is your subjective opinion.

You can dream on as much as possible all you want for Fort Solis to be a part of the main benchmark set but we all know most of the press aren't even going to bother again ...

> This is a tech forum, we harp about visual and technical upgrades to our games, not about checklist features or review scores.

Some software is more worthwhile than other software for incorporating as regular coverage, so just deal with the likely reality that Fort Solis, Desordre, and Layers of Fear will be tossed aside by the wider community ...

You excluded games without the complete UE5 features: you excluded Lords of the Fallen because it lacks Virtual Shadows, and you excluded Jusant because it might lack them too. By your logic you should also exclude Remnant 2 because it lacks Lumen entirely. Be consistent with your own logic.

> Yes they did, they even detailed the process.

You can only hope that they won't come back empty handed as is the case now this year with Atomic Heart, Redfall, or Diablo IV ...

> That's their plan, they announced it here. They specifically talk about enabling 60fps for Hardware Lumen on consoles, and enabling using Ray Traced shadows with Nanite.

Epic Games does not intend to make RT Shadows compatible with Nanite. They intend for RT shadows to complement VSM. VSM is always going to be used for Nanite meshes since acceleration structure builds will never be fast enough and I'd like to see Nvidia try to spend dark silicon for accelerated BVH construction. RT Shadows are meant to handle other cases such as multiple shadow casting lights and area shadows for simpler meshes ...

> Maybe they should just use modern features. But then a console developer cares only about consoles. And when the graphics IP is 10 years old we only get outdated solutions for modern problems.

Nvidia should just go make their own game engine instead of constantly baiting or mooching off Epic Games to support all of their proprietary technologies. I sincerely wish them all the best of luck in trying to court developers to their dumpster fire that is the "RTX branch" since there's no one loony enough out there to use it for any serious work ...

> Most of them aren't going to go out of their way to consistently benchmark purely ray traced content

Nobody needs to nowadays, the verdict has been cast already, for any serious RT or PT work AMD is a no go, they are so far behind it's not even funny. Benchmarking is just a curiosity at this point to see whether AMD is 2x or 3x behind.

> Desordre, and Layers of Fear will be tossed aside by the wider community

Oh those games have been benchmarked already, Desordre in particular is gathering good interest for its technical prowess with its Path Tracing rendering.

> Everyone should welcome the days where no vendor is 3+ performance tiers behind its competitor anymore ...

Yeah, anytime now, whenever that vendor steps up their hardware/software and produces working tech that isn't generations behind, instead of constantly producing the cheaper, less effective solutions, or even the buggy solution that bans players from playing their games.

> I never excluded Lords of the Fallen

You excluded Jusant because it lacks Virtual Shadows, so you inadvertently excluded Lords of the Fallen, because it lacks Virtual Shadows as well. Once more stay consistent.

> Epic Games does not intend to make RT Shadows compatible with Nanite

Since you are not a representative of Epic, and since your speculation contradicts their clear statement, I will regard this quote as another piece of personal opinion/prediction that will probably fail the test of time like the rest of the previous predictions.

> You can dream on as much as possible all you want for Fort Solis to be a part of the main benchmark set

We are not interested in forming a benchmark suite, we are individuals collecting data points, which you seem to ignore while insisting on speculation and personal opinions.

> It's almost as bad as legitimizing those RTX remix remasters as real world content ...

Is this a court of law or something? They are real content whether you like it or not. They are visual upgrades pushing the limits of technology and achieving unprecedented visual heights that are only achievable on PC, as it should be.

> Nobody needs to nowadays, the verdict has been cast already, for any serious RT or PT work AMD is a no go, they are so far behind it's not even funny. Benchmarking is just a curiosity at this point to see whether AMD is 2x or 3x behind.

Will the verdict matter if most AAA game studios are moving on to UE5 of which thus far has largely failed to produce new higher budget projects with hardware ray tracing ?

> Oh those games have been benchmarked already, Desordre in particular is gathering good interest for its technical prowess with its Path Tracing rendering.

It'll be a one-off event either way since AAA games are still the standard to measure against ...

> You excluded Jusant because it lacks Virtual Shadows, so you inadvertently excluded Lords of the Fallen, because it lacks Virtual Shadows as well. Once more stay consistent.

Keep grasping for some more straw men. I didn't mention Jusant because it's simply not a AAA game ...

> Since you are not a representative of Epic, and since your speculation contradicts their clear statement, I will regard this quote as another piece of personal opinion/prediction that will probably fail the test of time like the rest of the previous predictions.

It must've gone over your head but here's what Kevin Ortegren (lead rendering programmer) said in the presentation itself ...

> The last point here is an overall goal we've had for a while and that is to reduce the number of bespoke shadow techniques and integrate them into more of a coherent stack. We have something like 20+ shadow techniques and concepts in the engine. Anything from capsule shadows, per-object instance shadows, or distance field shadows. Virtual shadow maps cover many of them but not all so we'd like to have a more comprehensible shadow tech stack and where techniques complement each other and share data and caching structures where applicable.

Virtual shadow maps are clearly here to stay based off of his statements and RT shadows is not a true replacement for them as you misinterpreted ...

> We are not interested in forming a benchmark suite, we are individuals collecting data points, which you seem to ignore while insisting on speculation and personal opinions.

Well I AM interested in what will actually go into the benchmark suite because that's what everyone will look at ...

> Is this a court of law or something? They are real content whether you like it or not. They are visual upgrades pushing the limits of technology and achieving unprecedented visual heights that are only achievable on PC, as it should be.

Why not go and insist on your drivel to the reviewers and see if they'll even give a damn about entertaining a bunch of irrelevant tech demos that aren't even AAA games ? Nearly everyone knows they're not going to seriously take some indie puzzle game built off a fork of UE5 or a port of an iOS game that uses some translation layer to implement a bunch of RT effects as a rubric to compare against between the different hardware vendors ...

If your favorite vendor is incapable of producing acceptable performance or image quality in these contents then it's the fault of the vendor, not the content.

> Will the verdict matter if most AAA game studios are moving on to UE5 of which thus far has largely failed to produce new higher budget projects with hardware ray tracing ?

Just don't say most, so far we have had several developers indeed, and all of them are work in progress, most developers have yet to show anything though. Thus you can't claim they failed to support hardware ray tracing. Most didn't say much yet. And just like AA UE5 games, some will do and some won't. STALKER 2 already announced support for hardware RT, so did ARK: Survival Ascended, Legend of Ymir and Black Myth Wukong just to name a few.

> It'll be a one-off event either way

Why would it be a one-off event? It's the work of a single guy, it's impressive as it is, and much more can be done with more personnel.

> Well I AM interested in what will actually go into the benchmark suite because that's what everyone will look at ...

Yet you continue to discard games left and right, you are only interested in benchmarking the games that show AMD hardware isn't weaker than NVIDIA. And you are borderline despising and talking down on games for any technical achievements that lie outside your console view! This is very strange indeed.

> Virtual shadow maps are clearly here to stay based off of his statements and RT shadows is not a true replacement for them as you misinterpreted ...

I understood well. Epic is heavily invested in their RT architecture, they are looking to make Nanite ray traceable, which covers shadows, lighting and reflections. They want Nanite to be feature complete. They want 60fps for HW Lumen, so they will optimize BVH updates further. Hardware RT shadows for Nanite is a crucial feature for them, as RT shadows work with all content, while VSMs don't work well with non-Nanite content (which covers lots and lots of dynamic objects). RT shadows also allow for lots of shadow casting lights, and will benefit from the mentioned improvements to BVH optimizations. They also want higher quality reflections, and scaling up Lumen for higher end use cases.

> entertaining a bunch of irrelevant tech demos that aren't even AAA games

Cyberpunk, Alan Wake 2, Minecraft RTX (and the likes) are not techdemos, they are AAA games. They are already used in benchmarks.

> Nearly everyone knows they're not going to seriously take some indie puzzle game built off a fork of UE5 or a port of an iOS game that uses some translation layer to implement a bunch of RT effects as a rubric to compare against between the different hardware vendors ...

Well, PCGamesHardware already used Lego Builder's Journey, as well as Cyberpunk and Dying Light 2 in their 7900XTX review. Comptoir-Hardware used Portal RTX, Quake 2 RTX, and other household RT titles. They used Minecraft RTX in the past too. Tom's Hardware uses Minecraft RTX and Bright Memory Infinite regularly. Digital Foundry uses Control, Cyberpunk and Dying Light 2. In fact all of these sites do test these last 3 games on a regular basis.

> Nvidia should just go make their own game engine instead of constantly baiting or mooching off Epic Games to support all of their proprietary technologies. I sincerely wish them all the best of luck in trying to court developers to their dumpster fire that is the "RTX branch" since there's no one loony enough out there to use it for any serious work ...

Realtime Pathtracing exists and will be heavily used in the professional market. UE5 has absolutely no chance to survive here anymore. Their lighting system is outdated and inaccurate.

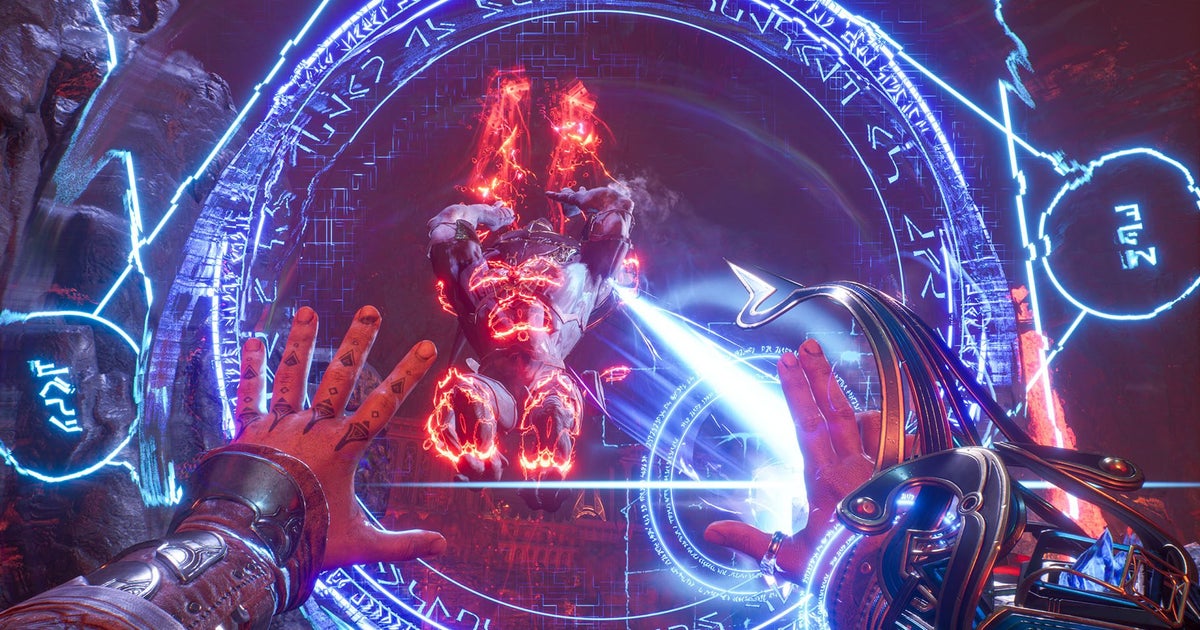

> I'm interested why nVidia performs so much better here than in other UE5 games. The 4090 is more than 2x faster than the 6900XT...

In Robocop, the 4090 is 2.2x faster than the 6900XT.

www.dsogaming.com

They didn't actually backtrack anything, at least not now. At Intel Innovation last fall they said "High-NA EUV technology in development with 18A, production with "Intel Next"" (which we now know is 14A) so it wasn't even meant to be used in 18A production

Intel adds 14A to the roadmap.

They've also silently backtracked on 18A being High NA, with this being pushed to the 14A node, which they're saying is 'late 2026 for early risk production', so realistically probably late 2027 before it's put in any products.

> They didn't actually backtrack anything, at least not now. At Intel Innovation last fall they said "High-NA EUV technology in development with 18A, production with "Intel Next"" (which we now know is 14A) so it wasn't even meant to be used in 18A production

Ah well they backtracked on it earlier then. lol It was originally slated to be a High NA node I think back in 2021 when it was first announced.