Gears of War Ultimate Edition for Windows 10

- Thread starter eastmen

Another fun modern AAA PC development wreck caused by who-knows-what internal corporate agendas and stuff. Was it MS who didn't test it worth a damn? Was it AMD's inability to adequately support all of their cards? Was it NV for wanting to get some special Gameworks attention? Let the crazy uninformed sensational speculation begin!

Looks like you were right:

http://wccftech.com/nvidia-gameworks-visual-corruption-gears-war-ultimate-edition/

"In BaseEngine.ini we spotted a very peculiar entry. “bDisablePhysXHardwareSupport=False”. More peculiar is the fact that this file cannot be edited in anyway. ... The entry means that hardware accelerated PhysX is enabled by default in the game"

Nope.

I'm a definite proponent of fairness in gaming, but that's not Nvidia's fault imo. That's on Microsoft for labelling the proprietary HBAO+ method as simply "Ambient Occlusion" and allowing it to be used on AMD cards. If it's indeed HBAO+, as all indications suggest, it's simply not available on AMD cards. Just another dumb moment for MS in this shambles of a release.

I am with you on this, and it seems WCCF jumped the gun as usual. Also, that WCCF journalist is, er, a bit biased towards AMD, to say the least.

Even on Steam there are those who are able to use AMD cards with HBAO+ active but have problems with SSAO *shrug*.

There is now an update to that article saying AMD reports they were not given a copy or involved in QA on their hardware, but this reminds me of the racing game situation where AMD originally blamed NVIDIA GameWorks and then blamed the developer for not being proactive with them.

I just get the feeling that in these scenarios where the ball is dropped, AMD expects the developers to be proactive and come to them, rather than AMD spending time and resources engaging first.

I can see AMD's point, and in an ideal world it would work, but developers have seriously tight budgets and, critically, tight project time frames and milestones. So AMD need to work more like NVIDIA's business development model: aggressively engage developers and make sure they have a seat at the table at some point on key games. (I appreciate they do not have the same budget allocation as NVIDIA, so they need to be more selective about games, but this does not stop them working with all the major developers to agree which games to work on and when.)

But AMD do like to blame everything on either NVIDIA or the developer.

I remember that in Rise of the Tomb Raider, PureHair showed a large visual improvement over that of NVIDIA, and yet both had exactly the same percentage performance loss (one review showed a comparison of the snow and hair; the snow was really nice). NVIDIA's response was to work on getting a future update out, with no comments at the time.

Edit:

I also remember reading a review that showed some strange GPU dynamic memory behaviour for AMD with Gears of War Ultimate; that might be a factor as well, or not.

NOTE: The 2nd line above left out the name of the game where HBAO+ worked for some but SSAO did not.

I meant to say Rise of the Tomb Raider as the game in my 2nd line regarding the Steam reports of HBAO+ being active while SSAO has issues; too late to change that 2nd line now, doh.

Edit:

Quick example (read the comments further down):

https://steamcommunity.com/app/391220/discussions/0/405693392918148093/

Cheers

Silent_Buddha

"In BaseEngine.ini we spotted a very peculiar entry. “bDisablePhysXHardwareSupport=False”. More peculiar is the fact that this file cannot be edited in anyway. ... The entry means that hardware accelerated PhysX is enabled by default in the game"

Nope.

Yeah, I like that. There's no way to disable hardware PhysX in the game, which means incredibly slow software emulation and potentially huge performance implications for non-Nvidia hardware, unlike games where you can disable hardware PhysX.

This is like Nvidia's wet dream. All these wonderful Nvidia-sponsored things to cripple the competition, without end users having an option to disable them.

And I find it shameful that Microsoft participated, either willingly (unlikely) or inadvertently by naively trusting Nvidia to implement good consumer-centric practices. Anyone who trusts Nvidia's GameWorks to work correctly on the competition's hardware is just a naive tool. Hell, half the time they can't even get GameWorks to work correctly or well on their own hardware (see Batman: Arkham Knight and many other GameWorks titles).

Regards,

SB

No, no, no....

GoW Ultimate does not use hardware-accelerated PhysX effects, so you cannot disable something that is not used. It's a standard entry in almost all UE3 games.

Guys, is it that simple when a 390X runs Gears of War 2x faster than the Fury and Nano cards, and also without stuttering, hitching or artifacts (the 370 runs better as well), according to Forbes?

They said:

AMD’s Radeon Fury X and Radeon 380 also choked when switching quality to High and running at 1440p or higher.

Surely the performance gets even worse as you make your way down the Radeon product stack, right? Oddly enough, no. I tested an Asus Strix R7 370 under the same demanding 4K benchmark, and it turned in only a 13% lower average framerate. Crucially, no stuttering or artifacting was present.

The Radeon 390x is just fine, achieving double the framerate at High Quality/4K as the more expensive Fury and Nano cards.

The trend here seems to point to the GCN version *shrug*, maybe compounded by the AMD dynamic memory driver solution that also behaves strangely in Gears, as I mentioned before. Sorry, I cannot find the site that analysed that.

Cheers

Exactly. The game absolutely does not use hardware acceleration for PhysX. That config entry does nothing.

And that ties in with the fact that the game runs much better and without artifacts on the older GCN versions, according to Forbes. Bizarrely, WCCFTech mentions this in their article and still concluded it must be GameWorks, while ignoring that Forbes said the 390X and 370 run fine, and that WCCF's own earlier article (the Keith May video) noted the same behaviour, with the game working fine on a 290X.

This does not sound like an NVIDIA-related issue to me, and, as I mentioned, AMD admitted they have not been involved in any QA development testing.

Deleted member 13524

Exactly. The game absolutely does not use hardware acceleration for PhysX. That config entry does nothing.

Why wouldn't it use hardware acceleration when an Nvidia card is detected?

Is there some special coding that needs to be done to get hardware acceleration for PhysX?

Hi guys,

I found this thread on the forum. I made a video about Gears of War Ultimate Edition when it was released for Windows 10, and I thought it would be OK to post it here to share my opinion with you.

Well, it looks like the 16.3 drivers actually improved the situation, so it comes back to AMD apparently not being involved in the initial QA development testing. To be honest, this is not something I blame on the developers; AMD needs to be more proactive in their meetings with the various gaming companies and in scheduling engagement in the QA process. I would expect this game to be on their list even if they need to prioritise due to a different spending structure from NVIDIA's.

In the same way, NVIDIA has dropped the ball a couple of times recently as well.

Cheers

I can't play the game. It was fine for a while, but now it just drops to a slideshow.

I hope Quantum Break is better than this.

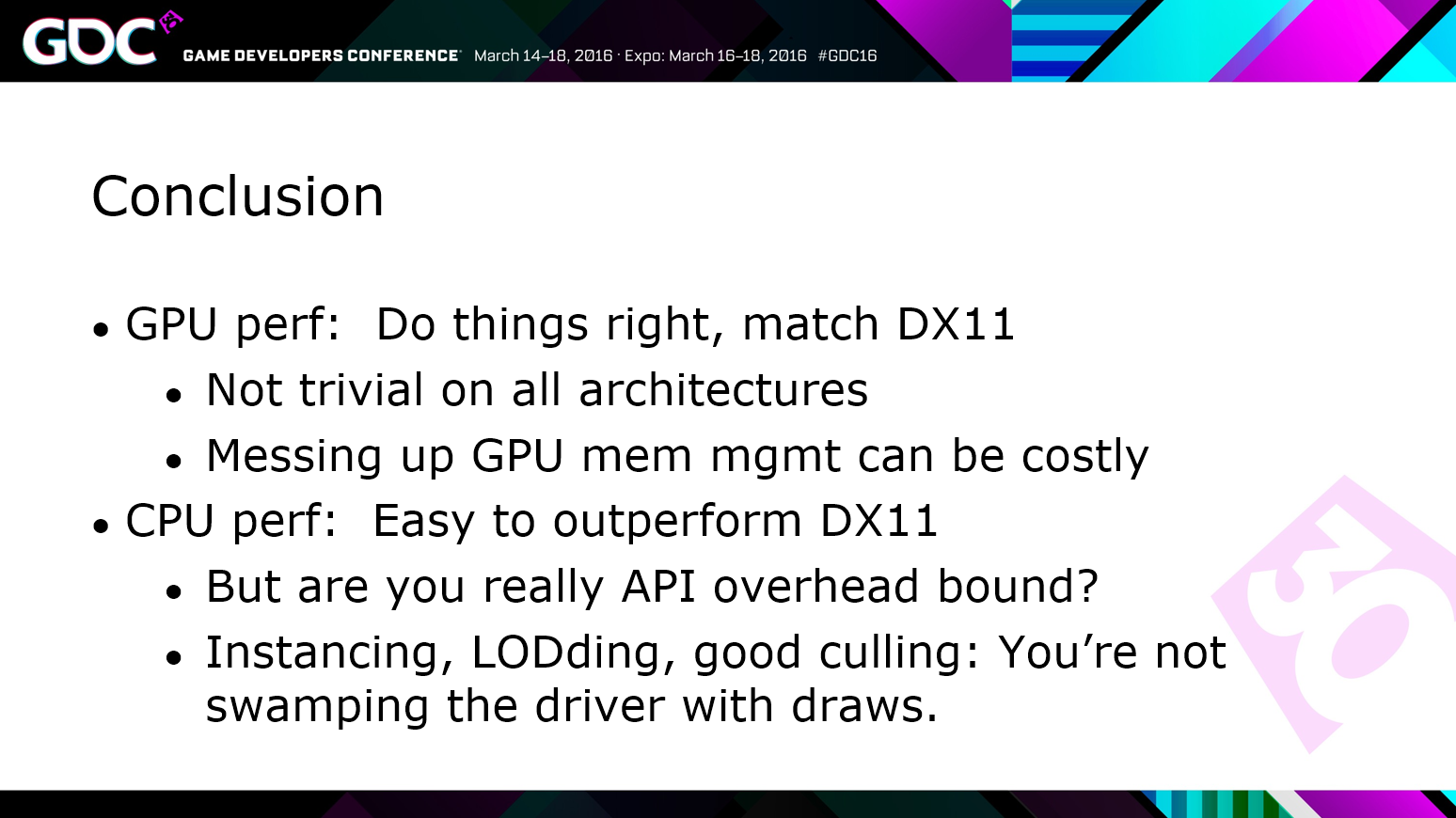

Looks like that'll be the case, judging from the GDC talk "Developing The Northlight Engine: Lessons Learned":

DX11 drivers are able to circumvent HW pitfalls. We’re matching DX11 GPU perf on Maxwell + AMD.

CPU perf: Sure DX12 can be much faster, but if your engine design is such that you don’t swamp the API with draw calls, the actual API overhead might not be significant in your overall CPU cost. We saved ~10% overall renderer time
