These are somewhat different things we're talking about here...

What the eye does with the incoming signal is a separate issue. We perceive something like blurring, but in reality it is continuous movement, and that continuous movement is what realistic graphics should aim to reproduce.

Old studies found that at around 24 discrete frames per second we start to accept the illusion of movement. But if the individual images are completely sharp (that is, shot with a fast shutter speed), we still get a sense of strobing. So, to help sell the illusion, cinema uses slower shutter speeds and lets the longer exposure time blur the individual still images themselves.
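To put rough numbers on that trade-off, here is a back-of-the-envelope sketch. It assumes the common rotary-shutter convention where exposure time = (shutter angle / 360) / fps, so a 180 degree shutter at 24 fps exposes each frame for 1/48 s. The object speed (960 px/s, i.e. crossing a 1920-pixel-wide screen in two seconds) and the helper names are hypothetical, chosen only for illustration.

```python
def exposure_time(fps: float, shutter_angle_deg: float) -> float:
    """Seconds each frame is exposed under a rotary-shutter model (hypothetical helper)."""
    return (shutter_angle_deg / 360.0) / fps


def frame_step_px(speed_px_per_s: float, fps: float) -> float:
    """How far the object jumps between consecutive frames."""
    return speed_px_per_s / fps


def blur_length_px(speed_px_per_s: float, exposure_s: float) -> float:
    """Length of the smear the object leaves within a single frame."""
    return speed_px_per_s * exposure_s


speed = 960.0  # assumed: crosses a 1920-px-wide screen in 2 s
fps = 24.0

print(frame_step_px(speed, fps))                         # 40.0 px jump per frame
print(blur_length_px(speed, exposure_time(fps, 180.0)))  # 20.0 px smear (1/48 s shutter)
print(blur_length_px(speed, 1 / 1000))                   # about 0.96 px, i.e. nearly sharp
```

With the fast shutter the object is almost perfectly sharp but jumps 40 px between frames, which reads as strobing; with the 180 degree shutter the smear fills half of every jump, which is the blur cinema relies on.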

This gives 24 fps cinema a certain look and feel, but it also destroys information. Even with the strobing, our eyes could usually still catch details or movement and track an object as it moves across the screen, but the motion blur covers that up completely.

On top of all that, no current video game can deliver realistic motion blur, only more or less effective fakes. In most cases objects keep their normal, static silhouettes, and only the pixels within those silhouettes get blurred. True 3D motion blur is still quite resource-intensive even for CGI, but it is absolutely necessary for realistic movie VFX work.
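The difference between the two approaches can be illustrated with a toy 1-D model. This is a hypothetical sketch, not how any particular engine or renderer works: `true_blur` approximates real motion blur by averaging many sharp sub-frame images across the open shutter (temporal supersampling), while `fake_blur` mimics the shortcut described above by smearing only the pixels inside the object's mid-exposure silhouette. Scene size, speed and sample counts are arbitrary.

```python
def coverage(n_px, left, obj_w):
    """Sharp 1-D frame: 1.0 where a pixel centre lies inside [left, left + obj_w)."""
    return [1.0 if left <= i + 0.5 < left + obj_w else 0.0 for i in range(n_px)]


def true_blur(n_px, x0, obj_w, speed, exposure, samples=64):
    """Average sharp frames sampled across the open shutter (temporal supersampling)."""
    acc = [0.0] * n_px
    for k in range(samples):
        t = (k + 0.5) / samples * exposure  # time within the frame, in frame units
        frame = coverage(n_px, x0 + speed * t, obj_w)
        acc = [a + f / samples for a, f in zip(acc, frame)]
    return acc


def fake_blur(n_px, x0, obj_w, speed, exposure, taps=16):
    """Post-process style: blur only pixels inside the mid-exposure silhouette."""
    sharp = coverage(n_px, x0 + speed * exposure / 2, obj_w)
    streak = speed * exposure  # blur length in pixels
    out = sharp[:]
    for i in range(n_px):
        if sharp[i] == 0.0:
            continue  # outside the silhouette: left untouched
        total = 0.0
        for k in range(taps):
            offset = ((k + 0.5) / taps - 0.5) * streak
            j = round(i + offset)  # gather along the motion direction
            total += sharp[j] if 0 <= j < n_px else 0.0
        out[i] = total / taps
    return out


def lit_range(frame):
    """First and last pixel with any coverage."""
    lit = [i for i, v in enumerate(frame) if v > 0.0]
    return lit[0], lit[-1]


# Hypothetical scene: 40-px line, 8-px object starting at x = 10,
# moving 12 px per frame, shutter open for half the frame (180 degrees).
n, x0, w, v, exposure = 40, 10.0, 8.0, 12.0, 0.5
print(lit_range(true_blur(n, x0, w, v, exposure)))  # (10, 23)
print(lit_range(fake_blur(n, x0, w, v, exposure)))  # (13, 20)
```

With these numbers the supersampled object smears across pixels 10 to 23, while the post-process version stays confined to its original 8-pixel silhouette (pixels 13 to 20): the outline itself never stretches along the motion.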

Good game engines are pretty selective with their motion blur and tone it down significantly compared to what cinema's usual shutter speeds would produce. They mostly use it to compensate for the lack of a higher frame rate.

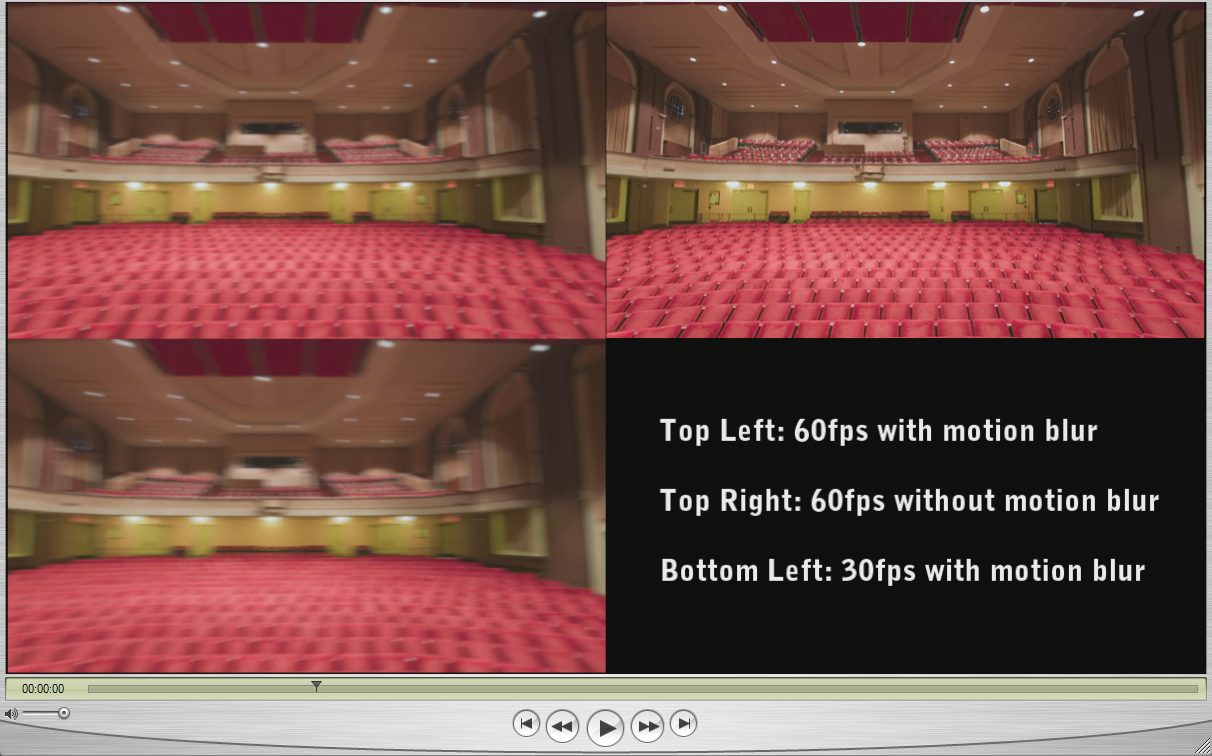

So I still believe that 60 fps with no motion blur is a better way to deliver visual information in an interactive environment like a video game than either 30 fps or 60 fps with motion blur. The best, of course, would be 100-120 fps with no blur, where our own eyesight would "add" the "motion blur" naturally, as it does with real-life movement. Gameplay shouldn't look and feel like cinema; that isn't good for control, reaction times and such. Stereoscopic 3D is going to require smoother frame rates as well.

James Cameron is heavily pushing for at least 48 fps, because it's evident that 24 fps is absolutely not enough even for cinema once you add stereoscopy. For filmmakers it's a more complex question because of the visual language of movies that we've gotten used to, but it's still more likely than not that they'll abandon 24 fps and motion blur in favor of a smoother viewing experience, even though the associated costs will be significant: 48 fps for each of two eyes means 4 times the rendering and storage capacity of a mono 24 fps movie.
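The 4x cost figure for stereoscopic 48 fps is simple arithmetic: doubling the frame rate doubles the number of images, and stereoscopy doubles it again because each eye needs its own image. A quick sketch (the helper name and the two-hour runtime are assumed examples, not taken from any specific film):

```python
def images_per_second(fps: int, views: int) -> int:
    """Distinct images to render and store per second of footage."""
    return fps * views


mono_24 = images_per_second(24, 1)    # classic 2D cinema
stereo_48 = images_per_second(48, 2)  # 48 fps, one image per eye

print(stereo_48 / mono_24)            # 4.0

runtime_s = 2 * 60 * 60               # assumed two-hour film
print(mono_24 * runtime_s, stereo_48 * runtime_s)  # 172800 691200
```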

So again, in short: motion blur exists only to compensate for a low frame rate, even in the cinema. Games shouldn't try to replicate it and should instead leave it to human vision to interpret and blur the images when necessary, IMHO.