I don't know about the Gears implementation, but I guess in that case ignorance is bliss: people can't actually try VRS off on their console and compare, to see whether the performance improvements, which result in a very small resolution increase (almost completely hidden by DRS + TAA), are worth it.

This is what I also noticed in all comparisons with hardware VRS on on Xbox and off on PlayStation. The image looks noticeably better to me on PS, even when Tier 2 is used. Granted, I like the textures being sharp, but still. Even John thinks the textures are visibly worse in Doom Eternal on Xbox compared to PS (and the game actually outputs at a lower average resolution on Pro vs X1X!). And he is finally wondering if the whole technique is actually worth it.

Doom overall is significantly higher resolution on Xbox and manages to maintain much more consistency in resolution vs PlayStation. We have this in the thread where I look at spectrum analysis.

Ultimately, we don’t play games in screenshots. And during the developer interview John does agree that without 3x zoom it’s nigh impossible to notice. We just don’t play games like that. Our implementations of AA and upscaling algorithms are just the same: trade-offs in which we accept temporary artifacts and edge artifacts in exchange for higher overall resolution, or slightly worse AA.

Native 4K with no temporal component will always look the same as 4K TAAU in a standstill screenshot, but motion is a completely different animal. In many videos, Alex covers a lot of ghosting artifacts caused by occlusion or just by looking left and right.

VRS will look worse at a standstill to any trained eye, but in motion the overall higher resolution is assuredly going to be more noticeable.

The challenge is that as we continue to mix all sorts of technologies together, one has to ask if it continues to make sense. Compounding techniques could be an issue that requires additional navigation.

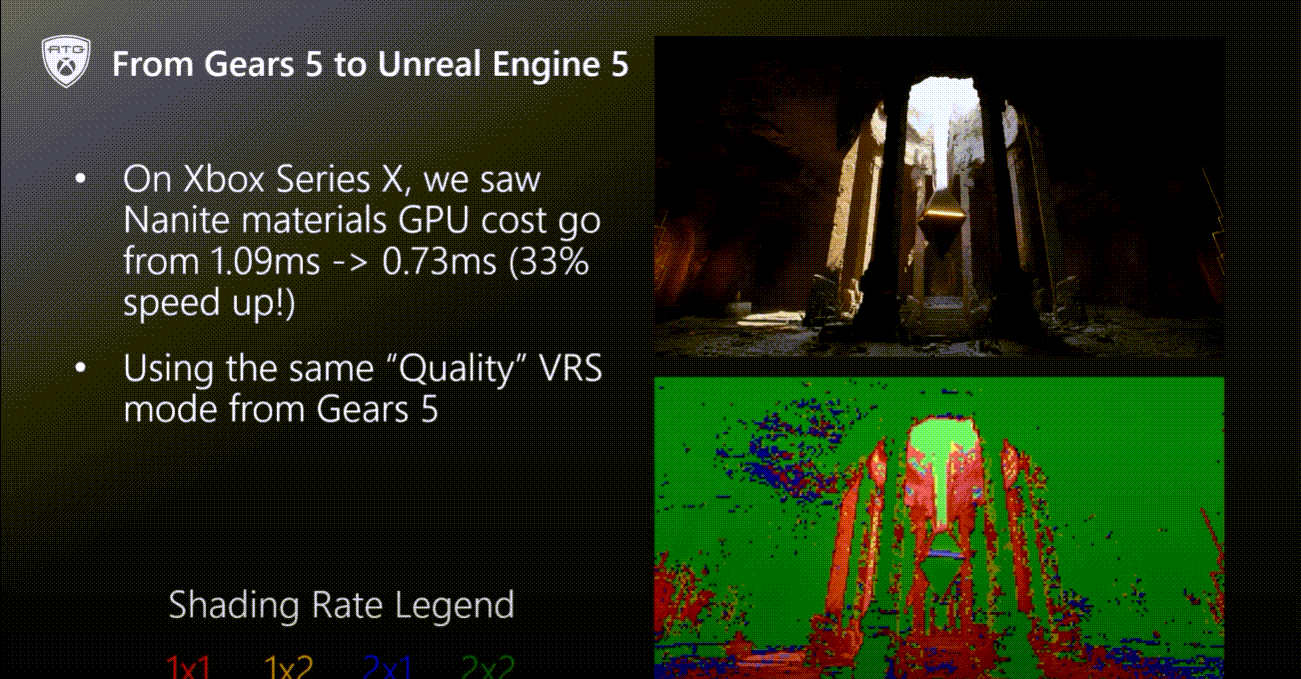

The reality is, this is still one of few (less than 10ish?) VRS titles ever released, versus the hundreds of games that are using DRS, temporal AA and upscaling. There are more games using FSR and RT than VRS. So the idea that we’ve seen peak VRS implementation is unlikely to hold. People aren’t going to engage with it until they need it, and apparently it’s not needed yet, as there are so many other areas in the game engine that need upgrading before freeing up more compute. Wave 3/4 games may require it though, when the limits of these consoles are pushed to the edge: you will want additional tools to smooth out issues.

It’s important to note that VRS can also be dynamic, and no developer has implemented such a system yet. Right now it’s a constant application, but it doesn’t have to be. VRS, if it ever becomes mainstream, will have better implementations as time goes on.