> How does it differ on AMD and Intel then? Also, what's performance in this game like in comparison to other UE5 titles? How important is DLSS 3 Frame Generation in hitting framerates?

PCGH has numbers: https://www.pcgameshardware.de/Unre...I-Raytracing-DLSS-Frame-Generation-1426790/3/


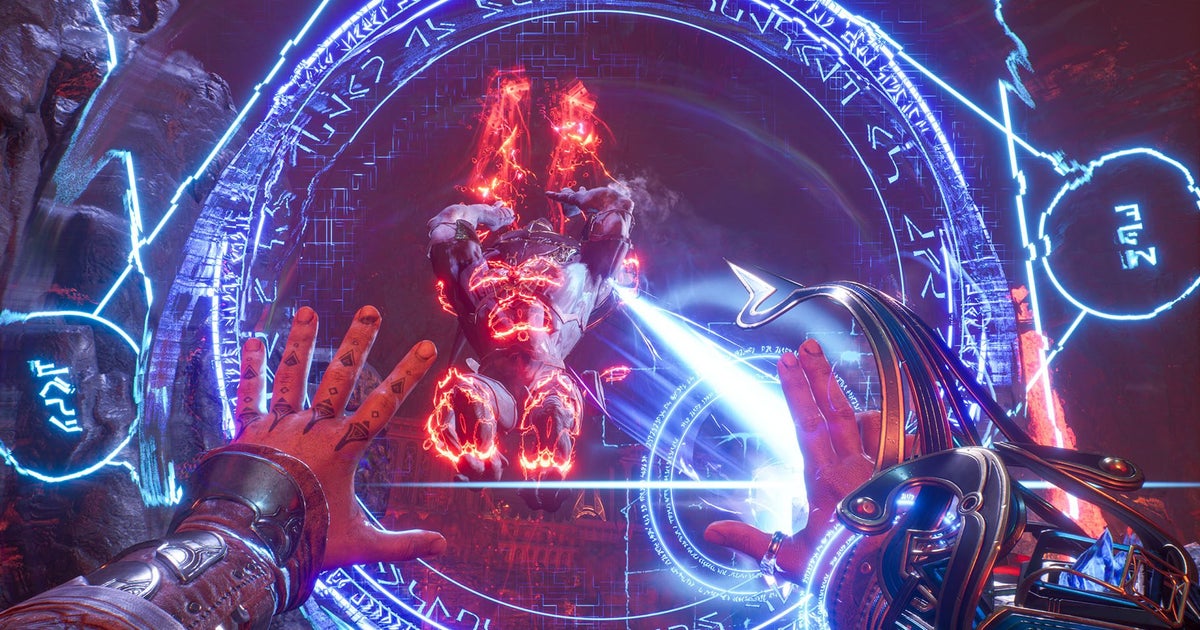

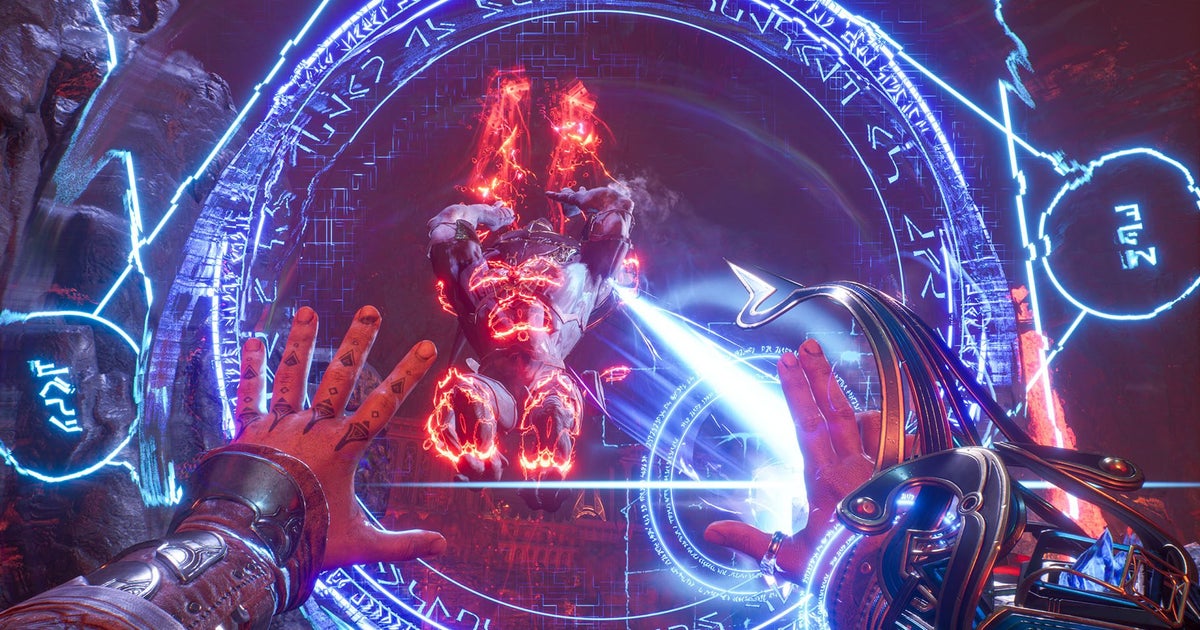

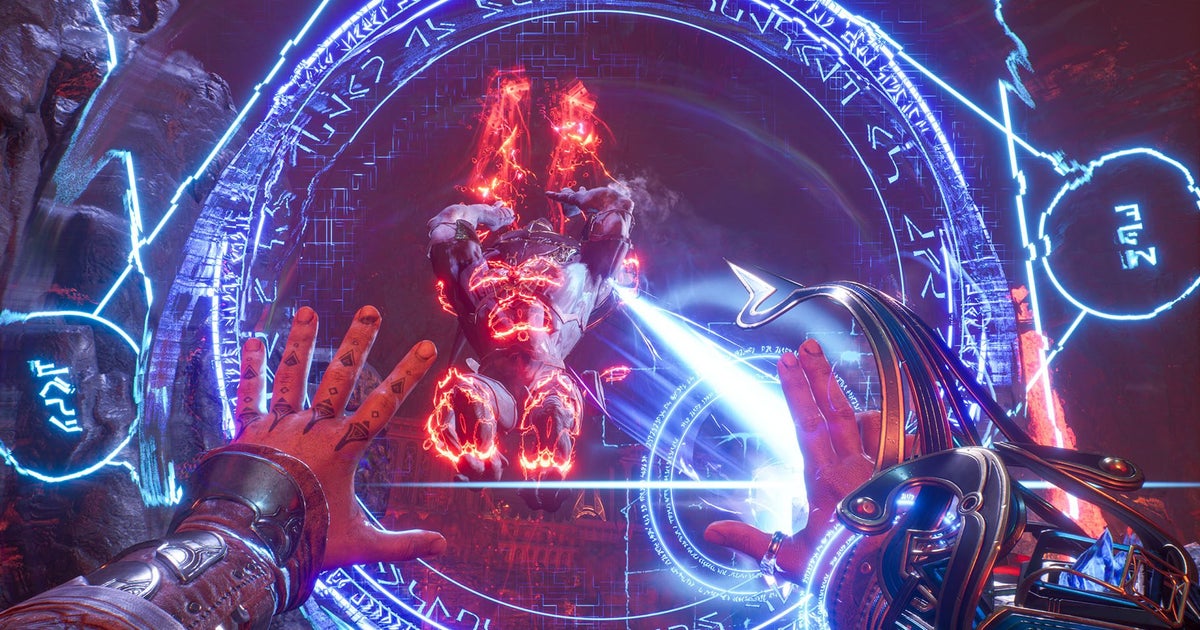

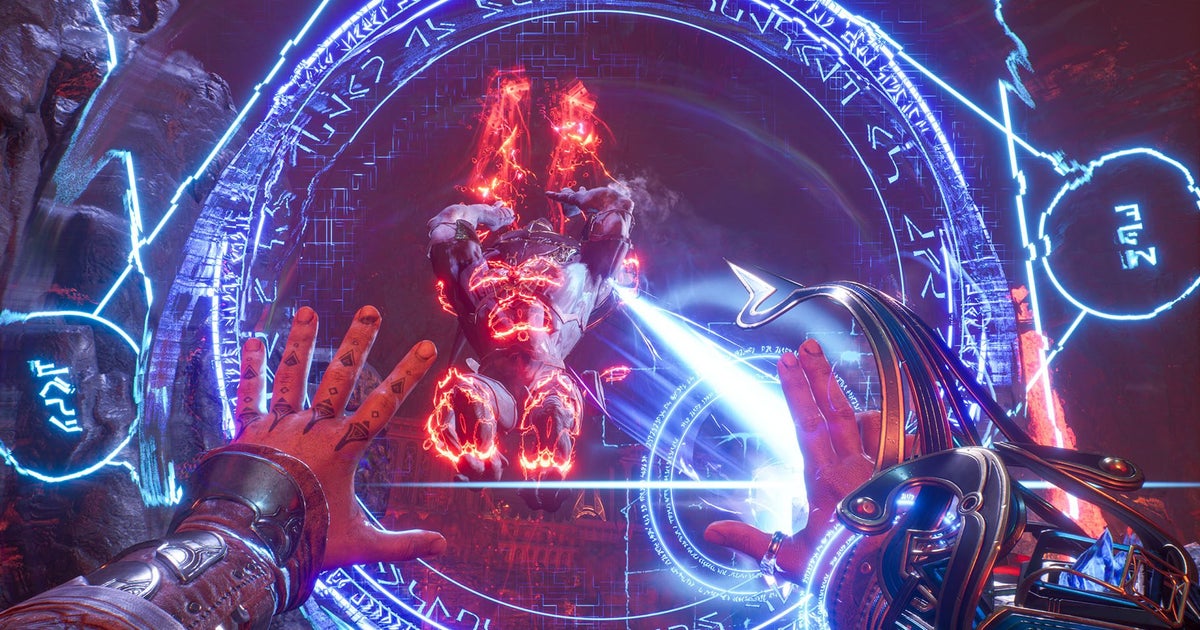

Current Generation Games Analysis Technical Discussion [2023] [XBSX|S, PS5, PC]

- Thread starter BRiT

- Start date

- Status

- Not open for further replies.

Ah, so even that's below 60 fps without upscaling. Full-featured UE5 is basically just very demanding then, with more titles needed to evaluate optimisation. We'll need other titles on other engines to get an idea of how much is down to limitations of the engine versus limitations of the hardware and modern quality features. The take-home at this point is that unified, RT-like lighting at high framerates seems a long way off, further off than it seemed 10 years ago with promising experiments. It seems the various problems are just too computationally taxing.

DavidGraham

Veteran

> so even that's below 60 fps without upscaling

On the 4090 it's 60fps at native 4K + DLAA. Keep in mind this is using the maximum HW Lumen quality possible and includes experimental stuff. It vastly increases the GI resolution, disables screen tracing entirely, and activates Hit Lighting for maximum reflections resolution. All of which are significantly more taxing than the regular HW Lumen.

DavidGraham

Veteran

The developer of Immortals of Aveum just shed an important light on the difference between Series X and PS5 in terms of performance.

"Despite [having performance] parity, Series X and PS5 handle things differently. Async compute works really well on one but not as well on the other, which changes the GPU burden"

They state that PS5 and Series X have similar performance but one can't do async compute as well as the other. I am guessing Series X is the one that can't do async well, which really explains why they often have fps parity or why the PS5 sometimes punches above its weight.

www.eurogamer.net


Inside Immortals of Aveum: the Digital Foundry tech interview

Ahead of the launch of Immortals of Aveum, Alex Battaglia and Tom Morgan spoke with Ascendant Studios' Mark Maratea, Julia Lichtblau and Joe Hall about the game

> On the 4090 it's 60fps at native 4K + DLAA. Keep in mind this is using the maximum HW Lumen quality possible and includes experimental stuff. It vastly increases the GI resolution, disables screen tracing entirely, and activates Hit Lighting for maximum reflections resolution. All of which are significantly more taxing than the regular HW Lumen.

It's 33.3 fps at 4K DLAA native, 57.6 with Frame Generation. Your point on extra quality does count though, so what's performance like for just quality UE5 features without cranking everything up to 11?

DavidGraham

Veteran

> It's 33.3 fps 4k DLAA native

This is with RTXDI, not Lumen. RTXDI adds vastly more indirect GI and reflections to the scene. Max HW Lumen is 60fps at native 4K. It's in the graph.

> so what's performance like for just quality UE5 features without cranking everything up to 11?

My personal guess is at least 50% more performance, which should make this title run at 90fps at 4K.
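For anyone who wants to sanity-check the figures in this exchange, here is a minimal Python sketch of the arithmetic. It takes the quoted numbers at face value (33.3 fps native with RTXDI, 57.6 fps with Frame Generation, 60 fps for max HW Lumen, and the guessed 50% uplift). The function and variable names are made up for illustration. Note that the Frame Generation figure counts generated frames too, so it describes displayed frames rather than rendered ones.

```python
def frame_time_ms(fps: float) -> float:
    """Average time per displayed frame, in milliseconds."""
    return 1000.0 / fps


# Figures as quoted in the posts above.
native_fps = 33.3         # 4K DLAA native (RTXDI path, per the reply)
frame_gen_fps = 57.6      # same settings with Frame Generation enabled
max_hw_lumen_fps = 60.0   # max HW Lumen at native 4K, per the reply

# Displayed-frame uplift from Frame Generation.
fg_uplift = frame_gen_fps / native_fps

# "At least 50% more performance" applied to the 60 fps figure.
guess_fps = max_hw_lumen_fps * 1.5

print(f"Frame Generation uplift: {fg_uplift:.2f}x")
print(f"Frame time native:       {frame_time_ms(native_fps):.1f} ms")
print(f"Frame time with FG:      {frame_time_ms(frame_gen_fps):.1f} ms")
print(f"Guessed regular HW Lumen: {guess_fps:.0f} fps")
```

So Frame Generation accounts for roughly a 1.7x increase in displayed frames in that test, and the 90fps figure is simply 60 fps scaled by the guessed 1.5x.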

> The developer of Immortals of Aveum just shed an important light on the difference between Series X and PS5 in terms of performance.

But the Series X runs this game significantly better than the PS5. Side by side, it's sometimes more than 10fps faster.

> The developer of Immortals of Aveum just shed an important light on the difference between Series X and PS5 in terms of performance.

Doesn't this game run better on Series X, though? They also mention how they leverage async compute on PC, so that would suggest this isn't a DirectX limitation.

> But the Series X runs this game significantly better than the PS5. Side by side, it's sometimes more than 10fps faster.

Yup, doesn't make sense.

> The developer of Immortals of Aveum just shed an important light on the difference between Series X and PS5 in terms of performance.

I am honestly not sure which one they meant there actually lol

One would think the fatter GPU with more compute units would be slower at raster stuff, and thus have a larger bubble for async compute (for example in the shadow maps pass), while the thinner GPU, which perhaps does raster faster in its allotted time, would have less of a bubble for compute.
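A toy Python sketch of that intuition, purely illustrative: it assumes a pass that is bound by fixed-function work, so its duration scales with clock speed and only a fixed number of compute units stay busy regardless of GPU width. The CU counts and clocks are the publicly stated Series X and PS5 specs (not from this thread); `BUSY_CUS` and `PASS_MS_AT_1GHZ` are hypothetical values, and the model says nothing about how well either platform actually schedules work into the gap.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    name: str
    compute_units: int
    clock_ghz: float


def idle_cu_ms(gpu: Gpu, busy_cus: int, pass_ms_at_1ghz: float) -> float:
    """Idle CU-milliseconds during one fixed-function-bound pass (toy model)."""
    # Assumption: pass duration is inversely proportional to clock speed.
    pass_ms = pass_ms_at_1ghz / gpu.clock_ghz
    # Assumption: the pass keeps the same number of CUs busy on either GPU.
    idle_cus = max(gpu.compute_units - busy_cus, 0)
    return idle_cus * pass_ms


# Public hardware specs (PS5 clock is its stated peak).
wide = Gpu("Series X", compute_units=52, clock_ghz=1.825)
narrow = Gpu("PS5", compute_units=36, clock_ghz=2.23)

BUSY_CUS = 20          # hypothetical: CUs the raster pass can keep fed
PASS_MS_AT_1GHZ = 1.0  # hypothetical: pass duration normalised to 1 GHz

for gpu in (wide, narrow):
    bubble = idle_cu_ms(gpu, BUSY_CUS, PASS_MS_AT_1GHZ)
    print(f"{gpu.name}: {bubble:.1f} idle CU-ms available for async compute")
```

Under these assumptions the wider, slower-clocked GPU leaves more idle CU time in the pass, which is the "larger bubble" being described. Whether a given title can fill that bubble is a separate question.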

> I am honestly not sure which one they meant there actually lol

He says in the interview:

> We also leverage async compute, which is a wonderful speed boost when coupled with DirectStorage as it allows us to load compute shaders directly on the GPU and then do magical math and make the game look better and be more awesome.

Makes it sound like the async compute on PS5 isn’t working as well. Well, you did the interview so you should know lol.

> I am honestly not sure which one they meant there actually lol
>
> One would think the fatter GPU with more compute units would be slower at raster stuff, thus have a larger bubble for async compute (for example in the shadow maps pass). While the one with the thinner GPU that does raster perhaps faster in its allotted time would have less of a bubble for compute.

This is my take also. Any time PS5 and Series X are performing similarly, Series X will be leaving a lot of compute unused. Presumably this means there can be a bigger performance uplift from async on Xbox.

They also talk about async and DirectStorage working really well together, which may be another indication they mean Xbox.

Edit: I think the Series X was designed with compute and async compute in mind. I think that (and RT) is why they could justify going so wide.

Now we're past cross gen I think Xbox hardware will start to be more fully utilised.


I bought Desordre 2 hours ago and tested it for one and a half hours. The game is only about 2 GB in size.

I find RTXDI already prettier than HW Lumen. It's more accurate and the range is wider.

The shadows in this game could be softer, the reflections could reach further and the edge smoothing could be a bit better. From a certain distance the reflections are colorless and that starts quite close. I am still undecided whether to activate SSR to compensate for that or not.

Apart from that it looks very nice. It's just a little too bright for me.


DavidGraham

Veteran

> But the Series X runs this game significantly better than the PS5. Side by side, it's sometimes more than 10fps faster.

> Doesn't this game run better on Series X, though? They also mention how they leverage async compute on PC, so that would suggest this isn't a DirectX limitation.

> I am honestly not sure which one they meant there actually lol
>
> One would think the fatter GPU with more compute units would be slower at raster stuff, thus have a larger bubble for async compute (for example in the shadow maps pass). While the one with the thinner GPU that does raster perhaps faster in its allotted time would have less of a bubble for compute.

In the DF analysis of the game, the PS5 version has higher AO and SSR resolution, which explains why it's slower than Series X in certain scenes.

The developer also mentions that both systems have performance parity. I think they would have stated Series X is faster if it were indeed faster?

> In the DF analysis of the game, the PS5 version has higher AO and SSR resolution, which explains why it's slower than Series X in certain scenes.
>
> The developer also mentions that both systems have performance parity. I think they would have stated Series X is faster if it were indeed faster?

"There is one exception on the menu screen". It's only there that PS5 has better settings. They're identical otherwise.

Performance parity just means that they're both targeting the same frame rate and resolution. There's almost no chance the dev ran them side by side and if they did, they obviously would have seen that performance isn't the same. The Series X outperforms the PS5 by a significant margin but also looks noticeably worse in terms of IQ.

> "There is one exception on the menu screen". It's only there that PS5 has better settings. They're identical otherwise.
>
> Performance parity just means that they're both targeting the same frame rate and resolution. There's almost no chance the dev ran them side by side and if they did, they obviously would have seen that performance isn't the same. The Series X outperforms the PS5 by a significant margin but also looks noticeably worse in terms of IQ.

The IQ difference is probably higher sharpening on the PS5. And regarding the SSR difference on the menu, the XSX and XSS look identical here, so I'd hazard a guess that it is probably one of the side effects of having one SDK for Series X and S.

I'mDudditz!

Newcomer

> One would think the fatter GPU with more compute units would be slower at raster stuff, thus have a larger bubble for async compute (for example in the shadow maps pass). While the one with the thinner GPU that does raster perhaps faster in its allotted time would have less of a bubble for compute.

> Makes it sound like the async compute on PS5 isn’t working as well. Well, you did the interview so you should know lol.

PS5 performs better in async compute workloads for this game.

Implementations for async compute are obviously platform dependent (i.e. we are calling console OS libraries) and that's generally been faster on PS5.

I'mDudditz!

Newcomer

More commentary from developer. I really appreciate his transparency and I hope it doesn't result in online harassment later on. We don't often get this level of platform comparison

@Dictator what he's saying below seems to correspond with your previous twitter discussion with Matt Hargett.


Huh, I really would have thought that the XSX would have the superior async compute.

Being (much) wider and a bit slower, I would imagine that is the perfect case for async on the GPU.

However, in the comments above I can see evidence for it being better on the Xbox, i.e. the quote coupling it with DirectStorage,

AND evidence for it being better on the PS5, i.e. where he directly calls out the async on the PS5.

Is leather-Tommorow the same dev who gave the initial interview with Eurogamer/DF?

I'd easily believe it's 2 different comments from 2 different devs, and that they are both correct in their statements.

( Game dev is hard, multi-platform optimization is even harder )

live and learn, or live and just have more Q.s!


Riddlewire

Regular

> Huh, I really would have thought that the XSX would have the superior async compute.

Maybe don't draw any conclusions based on this one debut game from a new studio using UE5 for the first time, regardless of what the developers say.

A few years ago, this forum never had a problem with that.

Something changed in the last year or so.
