Current Generation Games Analysis Technical Discussion [2023] [XBSX|S, PS5, PC]

- Thread starter: BRiT

- Status: Not open for further replies.

arandomguy

Veteran

It doesn't explain why it might be better overall, but doesn't the PS5 have more resources (internal cache and bandwidth, for instance) per CU/TFLOP than the XSX? In theory you could see it extracting more performance per CU/TFLOP.
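To put rough numbers on the per-CU/TFLOP question, here is a minimal sketch using the publicly quoted paper specs for both consoles. None of these figures come from this thread; the PS5 clock is an "up to" value, and the XSX bandwidth figure applies to its fast 10 GB pool only. The names `SPECS`, `tflops` and `summarize` are made up for illustration.

```python
# Paper specs (assumed from public spec sheets, not from this thread):
# CU count, peak GPU clock in GHz, peak memory bandwidth in GB/s.
SPECS = {
    "PS5": {"cus": 36, "clock_ghz": 2.23, "bw_gbs": 448.0},   # variable clock, "up to" 2.23 GHz
    "XSX": {"cus": 52, "clock_ghz": 1.825, "bw_gbs": 560.0},  # 560 GB/s is the fast 10 GB pool only
}

LANES_PER_CU = 64    # FP32 lanes per compute unit
FLOPS_PER_LANE = 2   # a fused multiply-add counts as two operations


def tflops(cus, clock_ghz):
    """Peak FP32 throughput in TFLOPS for a given CU count and clock."""
    return cus * LANES_PER_CU * FLOPS_PER_LANE * clock_ghz / 1000.0


def summarize(name):
    """Return peak TFLOPS, DRAM bandwidth per TFLOP, and clock for one console."""
    s = SPECS[name]
    tf = tflops(s["cus"], s["clock_ghz"])
    return {
        "tflops": tf,
        "bw_per_tflop": s["bw_gbs"] / tf,
        "clock_ghz": s["clock_ghz"],
    }


ps5 = summarize("PS5")
xsx = summarize("XSX")
clock_ratio = ps5["clock_ghz"] / xsx["clock_ghz"]

for name, info in (("PS5", ps5), ("XSX", xsx)):
    print(f"{name}: {info['tflops']:.2f} TFLOPS, {info['bw_per_tflop']:.1f} GB/s per TFLOP")
print(f"PS5 clock advantage per CU: {clock_ratio:.2f}x")
```

On these paper numbers, DRAM bandwidth per TFLOP is roughly similar between the two, so any per-CU advantage would more plausibly come from resources whose throughput scales with clock speed, which is about 22% higher on PS5 at peak. That is only a paper comparison and says nothing about sustained clocks or real utilisation.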

"So if it has better tools and APIs and, according to someone who claims to be a dev on this game, better hardware, why does it not perform better than the console with weaker hardware and worse APIs and tools? What a bizarre world we live in."

What? Xbox is the more powerful hardware. According to this alleged developer, PS5 is using some higher settings. Perhaps those, plus a better software environment for developers, are keeping the performance differences from being larger.

It's a weird situation: DF says one thing, while somebody who claims to be a developer on this game says there could be a difference in settings. Some users take it as a declaration of platform superiority while performance is shaky. Not really sure what to make of it.

I think it's important to make clear, for me at least (and I hope others feel the same), that this is just one more data point. Since we currently have a somewhat limited set of data points for UE5 performance on PS5 vs Xbox (and also on PC, but PC is a way more complicated issue), we are probably overestimating the influence of this data point and the information we can gain from it.

But we can't just extrapolate from the performance of the Matrix demo or Fortnite forever.

We need to start including as many data points as possible.

Together with a solid understanding of the hardware and software involved, we can theorize as to why we get the results we do.

Lastly, I think we also have a real lack of understanding of the software involved.

It's a terribly made game and as such shouldn't be used as a vehicle for anything other than some interesting discussion.

Here is the first UE4 title. Does anyone care? No. Is it representative of the engine in general? No.

I don’t know how many versions of UE4 there were. I think 4.27 is the last. Did they skip versions or were there 27?

Overall I’d take the wait and see approach. At some point we’ll get more info on best practices and more devs sharing info.

If they chose to balance it differently. We've seen this before, especially in the PS360 era, where different consoles had different targets. Let's say for the sake of argument PS5 is 10% faster than XBSS in the current version of this game. Let's say XBSS is hitting 55fps at 900p. With an extra 10% in the tank, the devs could choose to run PS5 at 1000p at 55fps, or 60fps at 900p. Or they could pick a completely different sweet spot. Maybe they feel IQ is more important than framerate so choose to run 1080p at 50fps. Particularly if they are profiling and find better hardware utilisation for IQ over framerate, that sweet spot may max out the hardware better.

Until you have the XBSS running the same settings as PS5 to compare, you don't have a direct comparison. You could try calculating pixels-per-second for the two versions, but as not all pixels are drawn equal, I don't think you can get any metric that'll comfortably prove the described delta.
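For what it's worth, the pixels-per-second calculation mentioned above can be sketched as follows. It takes the hypothetical resolution and framerate targets from the example (which were "for the sake of argument", not measurements) and assumes a 16:9 frame and that cost scales linearly with pixel count, which is exactly the simplification the post warns about. The function name and the `options` table are made up for illustration.

```python
def pixels_per_second(height, fps, aspect=16 / 9):
    """Raw pixel throughput for a given vertical resolution and framerate."""
    width = round(height * aspect)  # assumes a 16:9 frame
    return width * height * fps


# Hypothetical baseline from the example: 900p at 55 fps.
baseline = pixels_per_second(900, 55)

# Hypothetical alternative targets from the same example.
options = {
    "900p @ 60 fps": (900, 60),
    "1000p @ 55 fps": (1000, 55),
    "1080p @ 50 fps": (1080, 50),
}

# Ratio of each option's pixel throughput to the baseline.
ratios = {
    label: pixels_per_second(height, fps) / baseline
    for label, (height, fps) in options.items()
}

for label, ratio in ratios.items():
    print(f"{label}: {ratio:.2f}x the baseline pixel rate")
```

By this crude measure the three options are not equivalent: 60fps at 900p is about 9% more pixels per second than the baseline, 1000p at 55fps about 23% more, and 1080p at 50fps about 31% more. Since per-pixel cost varies with settings and scene content, these ratios only bound the discussion and can't prove a hardware delta.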

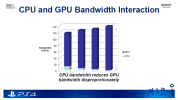

On the matter of faster RAM, if they are squeezed on XBSX, they will be needing to access the slower RAM for things, right? And if they are doing that, BW goes down from peak. Plus we don't know if there are 'gotchas' for either machine like the eye-opening PS4 RAM contention crashing bandwidth...
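The point about slower RAM pulling bandwidth below peak can be illustrated with a toy model. It assumes the publicly quoted XSX figures of 560 GB/s for the fast 10 GB pool and 336 GB/s for the remaining 6 GB (these numbers are not from this thread), and it assumes accesses to the two pools are simply serialized, which ignores contention, CPU traffic and any real scheduling behaviour. `effective_bandwidth` is a made-up helper name.

```python
FAST_GBS = 560.0  # assumed peak bandwidth of the fast pool, GB/s
SLOW_GBS = 336.0  # assumed peak bandwidth of the slow pool, GB/s


def effective_bandwidth(slow_fraction, fast=FAST_GBS, slow=SLOW_GBS):
    """Blended bandwidth when `slow_fraction` of all bytes come from the slow pool.

    Toy model: the time to move one GB is the traffic-weighted sum of the
    per-pool times, so the result is a weighted harmonic mean of the two rates.
    """
    if not 0.0 <= slow_fraction <= 1.0:
        raise ValueError("slow_fraction must be between 0 and 1")
    time_per_gb = (1.0 - slow_fraction) / fast + slow_fraction / slow
    return 1.0 / time_per_gb


for fraction in (0.0, 0.1, 0.25, 0.5, 1.0):
    print(f"{fraction:.0%} of traffic in slow pool: {effective_bandwidth(fraction):.0f} GB/s")
```

Even in this simplified model, sending a quarter of the traffic to the slow pool drops the blended figure from 560 to about 480 GB/s, so a game that spills into the slower memory would see noticeably less than the headline number.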

It's possible for Reasons these devs are seeing lower available BW on XBSX. The response to that is, "hmmm, interesting," and then we wait and see if other devs speak openly about their experiences and provide another piece of the puzzle.

Which is what this tech analysis and comparison, and indeed the whole point of this forum, is. We've a generation to learn and understand the true nature of these hardwares and how they relate to their paper specs before the next one drops. The exciting part of consoles is it's never as simple as the raw numbers would suggest, and the curve-balls and unexpected twists are what make it interesting. "Who'd have thought that XXX would be able to outperform YYY given ZZZ?!" We go in with expectations like 'Cell has teraflops!!' and come out with 'utilisation gimped it but deferred rendering was a better fit on PS3 than XB360'. No winners or losers, except for the people who enjoyed the 10 years fascination of the evolving understanding of hardware.

"I would imagine the difference comes down to Sony having a better API and tools. Hardware-wise, I can't imagine what differences would make the PS5 superior at async compute. The GPU tech in each console is so similar."

I don't know if it changed with newer versions of DirectX, or if anything was customized for Xbox consoles, or if there were advancements made with newer RDNA designs, but I believe RDNA1 had more async compute features available in Vulkan than it did in DirectX. I remember seeing a slide about it, and it had something like 1 thing supported on DX and 3 or 4 on Vulkan. I thought it was on the AMD website, but even the Wayback Machine didn't help me find it. The RDNA page has been updated with RDNA3 information and makes no mention of async compute.

arandomguy

Veteran

Something interesting with UE4: isn't it the case that the initial, graphics-defining GI solution showcased in the tech demos never actually ended up in any shipping games?

Did anyone ever do a retrospective of how games ended up comparing to Elemental and Infiltrator? It would be an interesting look back.

How does the Xbox OS have a 'higher than expected memory footprint' when it has a fixed amount of memory dedicated to it? Are they implying that the OS actually needs more than this dedicated amount and eats into the memory available to the game? :/

This doesn't really sound believable to me.

If the social media posts are to be believed, they state that on PS5 you can claw back some of the memory that is reserved for OS stuff. Perhaps they expected a similar exception to be made on Xbox, but it isn't. The thing with broken expectations is that there is a variable in the form of the expectation. If you expect linguine for dinner but get spaghetti, you will get thinner-than-expected noodles.

I guess that could explain it. Very weird to have such expectations, though.

A better way of saying it would simply be that Sony allows for slightly higher 'game available' RAM, and leave it at that.

No game yet looks as good as Infiltrator. It still looks quite a bit better than our games.

arandomguy

Veteran

It might be worth thinking back over UE4's timeline. What would people consider the first visually impressive UE4 game? And how long did it take to come out? Also, at this point, what do we consider the best-looking UE4 game, and how many are on the level of the Infiltrator tech demo?

I'm not sure if it's a good or bad sign (and for whom) that I can't immediately think of answers to the above.

Gears 5 and Days Gone were the first and, I’d argue, the only visually impressive UE4 titles in terms of AAA games. The Ascent qualifies as a lower-budget title that impresses. I didn’t find Returnal impressive at all.

arandomguy

Veteran

If that timeline translates I guess we'd be looking at what? 2026/27? for UE5 equivalents?

I strongly agree with this. Frankly, I just don’t rate Unreal Engine at all. It’s all so mid. Yes, it has Nanite and Lumen, but with performance this bad it’s just hard to justify. I’m more interested to see what the likes of id Tech and co come up with.

UE4 used to perform better on Nvidia GPUs than on comparable AMD ones in the early days. I haven't really followed it, but I've always assumed it was held back a bit by the console GPUs being AMD-based.

I never knew if there was any truth to it though. One theory was that it preferred strong pixel shader performance over compute shaders for post-effects (due to mobile GPUs) so that it benefited Maxwell more than GCN. It was probably nonsense but I've always wondered.
