DavidGraham

Veteran

Forza Motorsport (a pure Xbox title) is performing considerably worse on RDNA GPUs in raster mode. In RT mode, the 4090 is 80% faster than the 7900 XTX.

> Some general weird performance all around - why is the 3060ti blowing away the 2070 super?

That is extra strange. Might it be one of the few edge cases where Ampere's doubled FP32 rate vs Turing actually matters?

View attachment 9756

Performance of 8GB GPUs seems to be highly unstable at the moment, suffering from severe run-to-run variance.

Like 1440p:

How on earth is an RX 590 beating the 2070 Super?

Yep. Clearly we didn't make a big enough issue about this before..

Also, The Talos Principle 2 has shader stuttering, and as mentioned before, the Ghostrunner 2 demo has it too.

View attachment 9755

> Quite unexpected and does somewhat weaken the argument that Starfield's superior AMD performance is born from it being an Xbox exclusive alone.

That was never the messaging that should have been received, and if people read that Starfield and UE5 were the turning points of AMD toppling Nvidia, that was never the case. What both Starfield and UE5 have in common is that they attempt full lighting and reflection systems entirely in software, compute-based shaders, and no hardware RT is used at all.

Also looks like Epic is done with it as they don't mention anything about continuing to improve the situation on their roadmap.

Nvidia is much faster with software-based ray tracing. Look at every compute solution, like OpenCL.

The newest FM does not attempt to do this, and if it does, it does it through RT-based hardware. The non-RT lighting and GI system is nowhere close to the fidelity of Starfield or Lumen, and without RTAO it would look painfully last generation.

By all expectations, this is our run-of-the-mill typical title that Nvidia cards are designed to completely smash through.

It was never about AMD performing better than Nvidia; it was about people being upset that Nvidia wasn't performing well enough in certain titles (claiming that said titles are poorly optimized), and yet those select titles had some very specific things in common. The new Forza Motorsport, while looking better than FM7, honestly just doesn't represent a leap in lighting and reflections.

So the Robocop developers released a patch and it's fixed the shader compilation stuttering issue.

The Talos Principle 2 developers have communicated with players and are fixing the compilation stuttering in that game too.

The only developers who have yet to even acknowledge the shader compilation issues in their game are the Ghostrunner 2 developers (that I know of).

> Yep, about 90% are fixed. Still got a handful at the start and there's the occasional traversal stutter, but just a massive improvement overall. The price to pay is about 10 seconds worth of compiling at the title screen.

I actually didn't experience any shader stuttering, and today with the updated demo was my very first time playing the game. I did experience some very slight traversal stuttering, but it was in corridor rooms with nothing else happening, between the big fighting sequences. And it was indeed very slight, not nearly as intrusive as it has been in other games.

Yeah, it's not a good look, albeit GR2 is still UE4, so perhaps that's part of the reason. In UE5 it seems to be far quicker and more obvious to implement a precompiling option, or at least one that actually captures more of the PSOs.

The devs definitely should implement a shader compilation screen so that it doesn't stutter during the intro logo videos lol. UE should really have something built in to easily allow that for developers.

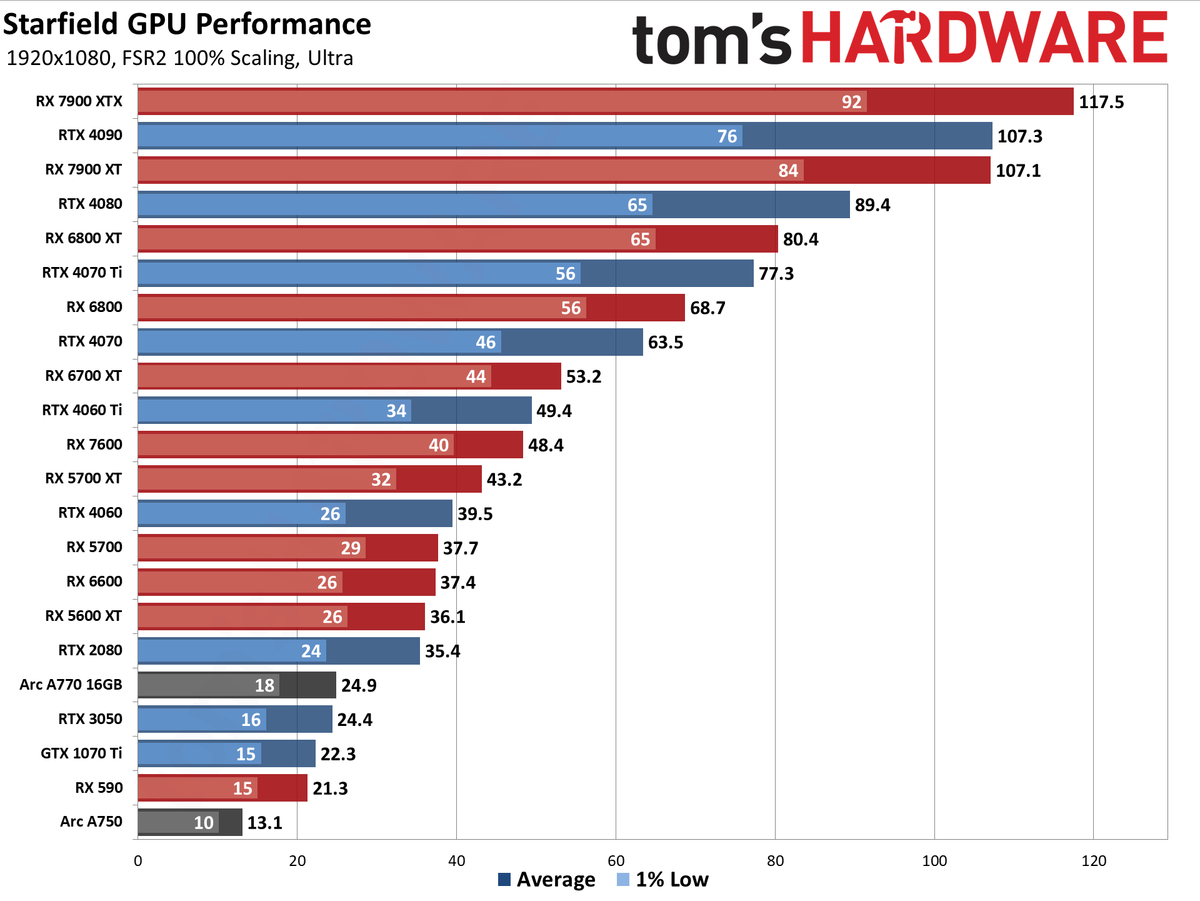

> That’s the point, they aren’t going to rewrite all the shaders of a game to benefit nvidia, and no drivers will address it either

Pardon me for quoting a slightly old post, but Intel just announced a driver that boosted their Starfield performance by huge margins. So driver improvements are indeed happening in this title.

Game performance improvements versus Intel® 31.0.101.4885 software driver for:

- Starfield (DX12)
  - Up to 117% uplift at 1080p with Ultra settings
  - Up to 149% uplift at 1440p with High settings
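For anyone turning those "up to X% uplift" figures into frame rates: an uplift of X% means the new result is (1 + X/100) times the old one, so 149% works out to roughly 2.49x, not 1.49x. A quick sketch in Python; the function names and the 20 fps baseline are made up purely for illustration and are not measured numbers from any review.

```python
def uplift_to_multiplier(uplift_percent: float) -> float:
    """Convert an 'X% uplift' figure into a frame-rate multiplier."""
    return 1.0 + uplift_percent / 100.0


def projected_fps(baseline_fps: float, uplift_percent: float) -> float:
    """Best-case frame rate after an 'up to X%' uplift is applied."""
    return baseline_fps * uplift_to_multiplier(uplift_percent)


if __name__ == "__main__":
    # Hypothetical baseline, NOT a benchmark result.
    baseline = 20.0
    for label, uplift in [("1080p Ultra", 117.0), ("1440p High", 149.0)]:
        mult = uplift_to_multiplier(uplift)
        fps = projected_fps(baseline, uplift)
        print(f"{label}: up to {mult:.2f}x, {baseline:.0f} fps -> {fps:.1f} fps")
```

Since the driver notes say "up to", these multipliers are a ceiling for the best case, not an average.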

It was never working with Intel to begin with.

Intel's new driver boost Starfield performance by up to 149%, adds Arc A580 support - VideoCardz.com

At 1080p Ultra, Arc GPUs were working, just not optimally. An A770 was underperforming so badly that it was basically equal to an RTX 3050. With the new driver, I am sure it's now up to a 3060/4060.

> I think it’s fair intel would see significant improvements. I still don’t know what you are expecting with nvidia performance.

NVIDIA GPUs are severely underperforming in this title as well (just like Intel GPUs); their power consumption is significantly lower than normal. If Intel can do it, NVIDIA could do it as well.

> Intel didn't discover any magic involving supposed shader 'rewrites', they're just continuing their long process to mature their DX11 drivers.

Starfield is pure DX12 though, not DX11.