This is hyperbole, by the way. Some people keep repeating over and over how Spider-Man is special and how it uses the CPU to build BVHs and so on. Almost ALL ray tracing games so far use the CPU heavily, most likely for BVH creation. There are two factions here:

1) NVIDIA faction: "The game is not utilizing RTX GPUs properly!!!" Not really: almost all ray tracing games so far have used the CPU heavily, just like Spider-Man does, and most likely for similar reasons.

2) PS5 faction: "PS5 builds them with special hardware, while on PC you have to do it on the CPU, etc. etc." It is quite possible the PS5 has some special trick up its sleeve. I can neither confirm nor deny this.

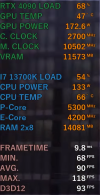

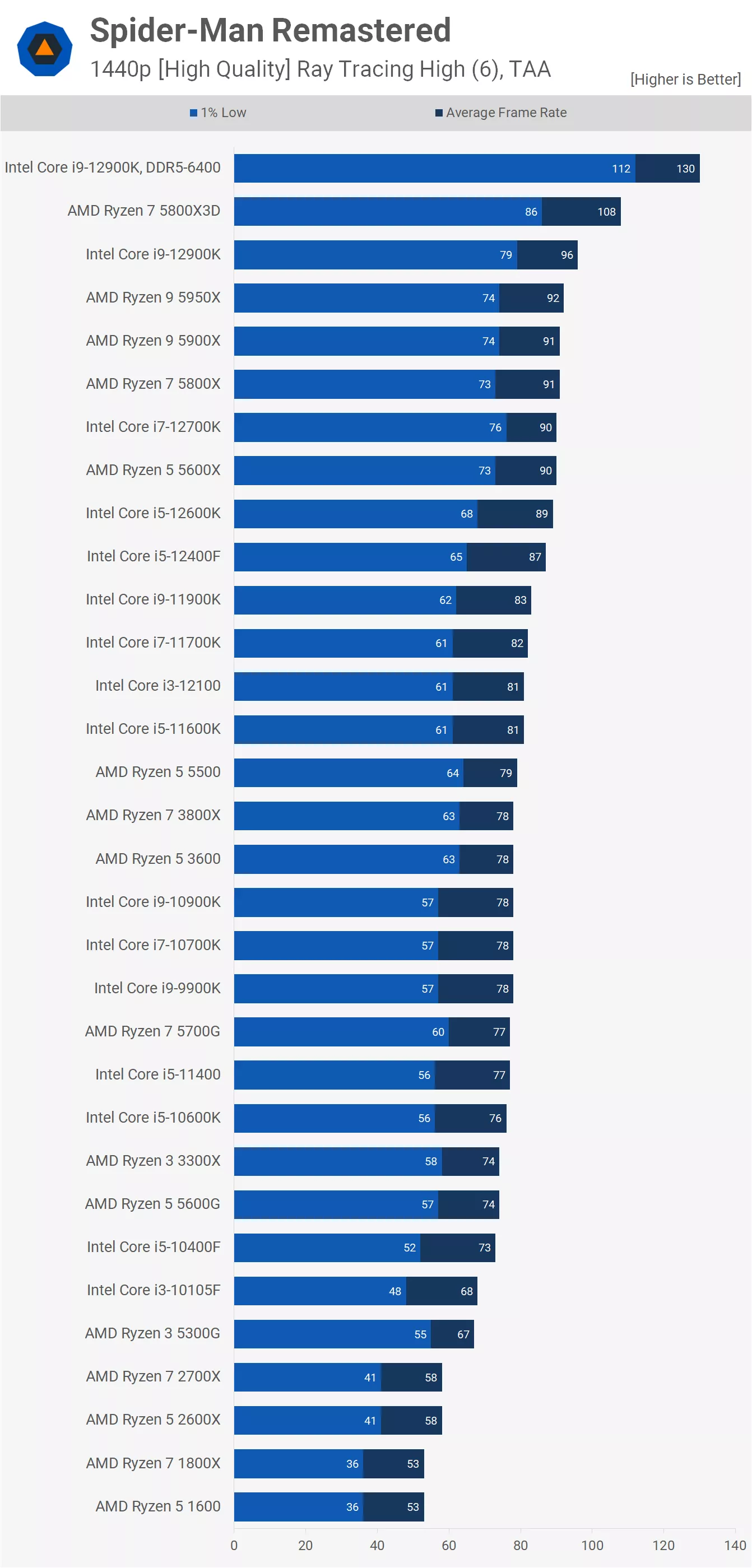

The first argument is used by NVIDIA fans to speak badly of the game, without realizing that ever since ray tracing arrived in PC games it has been very CPU heavy, almost always adding an extra 15% to 30% of CPU load. Spider-Man is no different: ray tracing simply takes 25-30% more CPU power, just as it does in Cyberpunk, GOTG and many other ray tracing titles whose CPU impact I have PERSONALLY tested.

Some people see how CPU bound Spider-Man is and naturally assume it is an evil caused by ray tracing, with Sony/Insomniac purposefully gimping PCs by not utilizing RTX hardware. I really don't think that is the case. I'm sure hardware-accelerated ray tracing still plays a part in BVH structures, but it is quite evident that almost all ray tracing games so far have increased CPU demands. On top of the BVH work, Alex also stated that the extra objects drawn/rendered in reflections pile up on the CPU as well.

Ray tracing and the CPU: what does ray tracing cost when CPU limited?

Ray tracing is well known to place high demands on the graphics card. But how does the technology affect processor performance? (www.pcgameshardware.de)

Again, this is not some special trick; it has been known since an Xbox Series presentation in 2020. Read the Digital Foundry presentation or the latest UE5 presentation: on consoles it is possible to build the BVH for static geometry offline and simply stream it from the SSD.
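To illustrate why prebuilding the static part matters, here is a toy sketch of a top-down BVH build (median split along the longest axis) over axis-aligned boxes. It is not how any engine, driver or console actually does it; the names (`build_bvh`, `scene_roots`, the box layout) are hypothetical. The point is only the split: the static BVH is built once and reused (on a console, possibly offline and streamed from the SSD), while the dynamic objects' BVH has to be rebuilt every frame.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class AABB:
    lo: Vec3
    hi: Vec3

    def union(self, other: "AABB") -> "AABB":
        return AABB(tuple(min(a, b) for a, b in zip(self.lo, other.lo)),
                    tuple(max(a, b) for a, b in zip(self.hi, other.hi)))

    def centroid(self, axis: int) -> float:
        return 0.5 * (self.lo[axis] + self.hi[axis])


@dataclass
class Node:
    bounds: AABB
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    items: List[int] = field(default_factory=list)  # primitive indices, leaves only


def build_bvh(boxes: List[AABB], indices: Optional[List[int]] = None,
              leaf_size: int = 2) -> Node:
    """Top-down BVH build: median split along the longest axis of the node bounds."""
    if indices is None:
        indices = list(range(len(boxes)))
    bounds = boxes[indices[0]]
    for i in indices[1:]:
        bounds = bounds.union(boxes[i])
    if len(indices) <= leaf_size:
        return Node(bounds, items=list(indices))
    extent = [h - l for l, h in zip(bounds.lo, bounds.hi)]
    axis = extent.index(max(extent))
    ordered = sorted(indices, key=lambda i: boxes[i].centroid(axis))
    mid = len(ordered) // 2
    return Node(bounds, build_bvh(boxes, ordered[:mid], leaf_size),
                build_bvh(boxes, ordered[mid:], leaf_size))


def count_items(node: Node) -> int:
    """Number of primitives referenced by the leaves under this node."""
    if node.left is None or node.right is None:
        return len(node.items)
    return count_items(node.left) + count_items(node.right)


def unit_box(x: float, y: float = 0.0, z: float = 0.0) -> AABB:
    return AABB((x, y, z), (x + 1.0, y + 1.0, z + 1.0))


# Static geometry: built ONCE (the "offline, stream from SSD" case).
static_boxes = [unit_box(float(x)) for x in range(1000)]
static_bvh = build_bvh(static_boxes)


def scene_roots(frame: int) -> Tuple[Node, Node]:
    """Per frame: reuse the static BVH, rebuild only the moving objects' BVH."""
    dynamic_boxes = [unit_box(float(i * 3 + frame), y=float(frame)) for i in range(50)]
    return static_bvh, build_bvh(dynamic_boxes)
```

In this toy, each frame only pays for sorting and splitting 50 moving boxes instead of all 1,050; without the prebuilt static BVH, the 1,000 static boxes would have to be processed again whenever the structure was rebuilt.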