A 5700XT is around 9-12% faster than a non-XT.

Around 15% generally, but close enough.

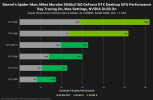

As an example, here is DF's analysis of Death Stranding.

In Death Stranding, yes. The discussion was about A Plague Tale, and the numbers will be different for other games, ports in particular. As I have mentioned before, there's a reason reviewers generally go by multiplatform titles rather than ports: it keeps things more accurate.

The 5700 has 75% of the PS5's performance there, which is close to A Plague Tale Requiem, where the same GPU has 78% of the PS5's performance,

while the 5700XT has 83% of the PS5's performance,

so I am expecting the same gap in performance in this title as well.
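For anyone who wants to check the percentages being thrown around, here is a rough sketch of the arithmetic. It only uses the Death Stranding figures quoted above (5700 at 75% and 5700XT at 83% of the PS5); the helper name `relative_gap` is made up for illustration, and none of this is new benchmark data.

```python
def relative_gap(slower_pct: float, faster_pct: float) -> float:
    """How much faster (in %) the second part is than the first,
    when both are given as a percentage of the same baseline."""
    return (faster_pct / slower_pct - 1.0) * 100.0


# Figures quoted in the thread for Death Stranding, with PS5 = 100%.
rx5700 = 75.0
rx5700xt = 83.0
ps5 = 100.0

# 5700XT relative to the non-XT 5700
xt_over_non_xt = relative_gap(rx5700, rx5700xt)

# PS5 relative to the 5700XT
ps5_over_xt = relative_gap(rx5700xt, ps5)

print(f"5700XT over 5700: {xt_over_non_xt:.1f}%")
print(f"PS5 over 5700XT:  {ps5_over_xt:.1f}%")
```

Note that "83% of the PS5" works out to the PS5 being roughly 20% ahead of the 5700XT in that title, not 17%, since the baseline flips when you turn the ratio around.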

The PS5 is performing somewhat below a 6600XT (or slightly below a 2070S), which puts it around 10% above an RX 5700XT. That is in line with the specs, as the 5700XT is a 9.7TF GPU on a slightly older architecture.

As Alex mentioned in the video, AMD GPUs don't perform that well in A Plague Tale Requiem compared to Nvidia: the 2070 Super slightly outperforms the PS5 in this title, while it underperforms in Death Stranding, despite the PS5 having the same performance advantage over its RDNA1 brethren. "Driver perhaps?"

That does seem to be true for the high-end AMD/NV range to some small extent. The 3080 should be faster, maybe not that much faster, but it's close enough to what you'd expect. Lower down the range, AMD and NV GPUs seem to perform closer to where they should, i.e. the playing field of the consoles (10TF GPUs).

Death Stranding is a port, not a multiplatform game. Games natively designed for a specific platform that later get ported usually favor the native platform's design (in this case, consoles). The 2070S is indeed underperforming there. It isn't in this benchmark though, and neither are the PS5, 5700XT, etc.