But HOW is that possible? Wasn't it already proven by several other sites that, at least on HD5870, you're limited by the core clocks, not memory clocks? And considering how much they cut the rest of the core, losing some 30GB/s of bandwidth shouldn't become that limiting a factor, should it?


AMD: R8xx Speculation

- Thread starter Shtal


Because, as was discussed before, the rops can't actually use the theoretical bandwidth. There seems to be some limitation in how they are tied to the memory channels, hence disabling half of them also effectively disabled half the bandwidth (for the rops, that is; the additional bandwidth should still be useful for other consumers like texture lookups, but I think it's no great secret that rops are typically the major bandwidth consumers).

If that were the case then you'd have a lovely chequerboard of rendered and black tiles!

Well, that's all speculation. Clearly the rops can access all memory required, somehow, but all evidence shows they don't have the required (and theoretically provided) bandwidth to do so.

Would be nice if you could elaborate on WHY this card, in just about any test (be it actual games with memory overclocked, or synthetic color fill rate tests), acts (for the rops) like it only had a 128-bit bus...
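For reference, the bandwidth figures being argued over can be reproduced with a few lines of arithmetic. This is only a sketch: the memory clocks (1200MHz for HD5870, 1000MHz for HD5830), the 256-bit bus and the four transfers per quoted GDDR5 clock are the commonly published specs rather than numbers stated in this thread, and `peak_bandwidth_gb_s` is a made-up helper name.

```python
def peak_bandwidth_gb_s(bus_width_bits: int,
                        memory_clock_mhz: float,
                        transfers_per_clock: int = 4) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes).

    transfers_per_clock=4 assumes GDDR5, where the quoted memory clock
    moves four data words per pin per cycle.
    """
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = memory_clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


# Assumed published specs, not taken from the thread itself.
hd5870 = peak_bandwidth_gb_s(256, 1200)      # about 153.6 GB/s
hd5830 = peak_bandwidth_gb_s(256, 1000)      # about 128 GB/s
hd5830_as_128bit = peak_bandwidth_gb_s(128, 1000)  # about 64 GB/s

print(f"HD5870 theoretical:        {hd5870:.1f} GB/s")
print(f"HD5830 theoretical:        {hd5830:.1f} GB/s")
print(f"Paper loss vs HD5870:      {hd5870 - hd5830:.1f} GB/s")
print(f"HD5830 if rops see 128bit: {hd5830_as_128bit:.1f} GB/s")
```

On paper the card only gives up roughly 25GB/s against HD5870, which is why the results look odd; if the rops really behave as though they sit on a 128-bit bus, they would see about half of the theoretical figure.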

mczak: If I understand it well, each of the RBE blocks has its own bus connected to the memory controller. If you disable half of the RBE blocks, you'll lose half of the bandwidth between the RBE blocks and memory controller(s). Maybe I don't understand it well... :smile:

Another possibility would be disabling half of the RBEs in each block. But that would probably be a bit more complicated...

(I hope Jawed will read this thread and share a nice theory)


Jawed

Legend

Honestly? HD50fucking830 is awful junk that should be ignored. HD5770 with the wrong trousers.

I like the sound of that theory (each RBE block having its own bus to the memory controller, so that disabling half the blocks loses half the bandwidth).

HD4890's 16 RBEs are, presumably, over-provisioned in comparison, e.g. with more bandwidth, or bigger caches - or the memory controller channels are different (64-bit channels instead of 32-bit channels like in Evergreen?). etc.

Anyway, HD4890 is running in its designed configuration, whereas this Cy-depressed should just be put out of its misery.

The idea of disabling half of the RBEs in each block came up before. I couldn't see a way for it to be plausible, in the end...

Jawed

Yeah well, there are several possibilities. So the bus (or whatever connection that is) between the RBEs and MCs runs at memory clock? Otherwise it wouldn't make sense that it scales with memory frequency.


We need someone to snap some faster memory on it. Didn't the benches somewhere show that memory OC'ing had near-linear, if not linear, scaling?

Paired with 1200MHz memory it would fit its price quite well, assuming near 20% scaling.

Yes, but that's just crazy. The card really should already have more bandwidth than it needs; it just can't use it. From a technical point of view, disabling half the rops that way is a fail. Looks to me like that was more of an afterthought than something properly engineered. Nvidia's method (disabling 1/4 of the rops along with 1/4 of the memory channels, for instance) seems to make way more sense to me, though it'll give you "odd" memory sizes.


Errr, that would result in nothing getting any benefit, and performance would be even lower. You won't save on memory costs if you want 1GB designs (who doesn't?) because you still need 8 devices, and the savings at the board level don't translate exactly, as you need to go to a x16 design.
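The device-count argument can be sketched with some quick arithmetic. This is one reading of the post, not a confirmed board design: it assumes 1Gbit GDDR5 devices that can run in either x32 or x16 mode, and `devices_needed` and `capacity_mb` are hypothetical helper names.

```python
def devices_needed(bus_width_bits: int, device_width_bits: int) -> int:
    """Number of memory devices required to populate the whole bus."""
    if bus_width_bits % device_width_bits != 0:
        raise ValueError("bus width must be a multiple of the device width")
    return bus_width_bits // device_width_bits


def capacity_mb(device_count: int, device_density_mbit: int = 1024) -> int:
    """Total capacity in MB, assuming 1Gbit (128MB) devices by default."""
    return device_count * device_density_mbit // 8


# (bus width in bits, per-device interface width in bits); all assumed.
configs = {
    "full 256-bit bus, x32 devices": (256, 32),
    "3/4 bus (192-bit), x32 devices": (192, 32),
    "half bus (128-bit), x32 devices": (128, 32),
    "half bus (128-bit), x16 devices": (128, 16),
}

for label, (bus_bits, device_bits) in configs.items():
    count = devices_needed(bus_bits, device_bits)
    print(f"{label}: {count} devices, {capacity_mb(count)} MB")
```

Under these assumptions, cutting a quarter of the channels gives the "odd" 768MB size, and a 128-bit board only reaches 1GB by going back up to 8 devices in x16 mode, which is where the memory cost saving disappears.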

PSU-failure

Newcomer

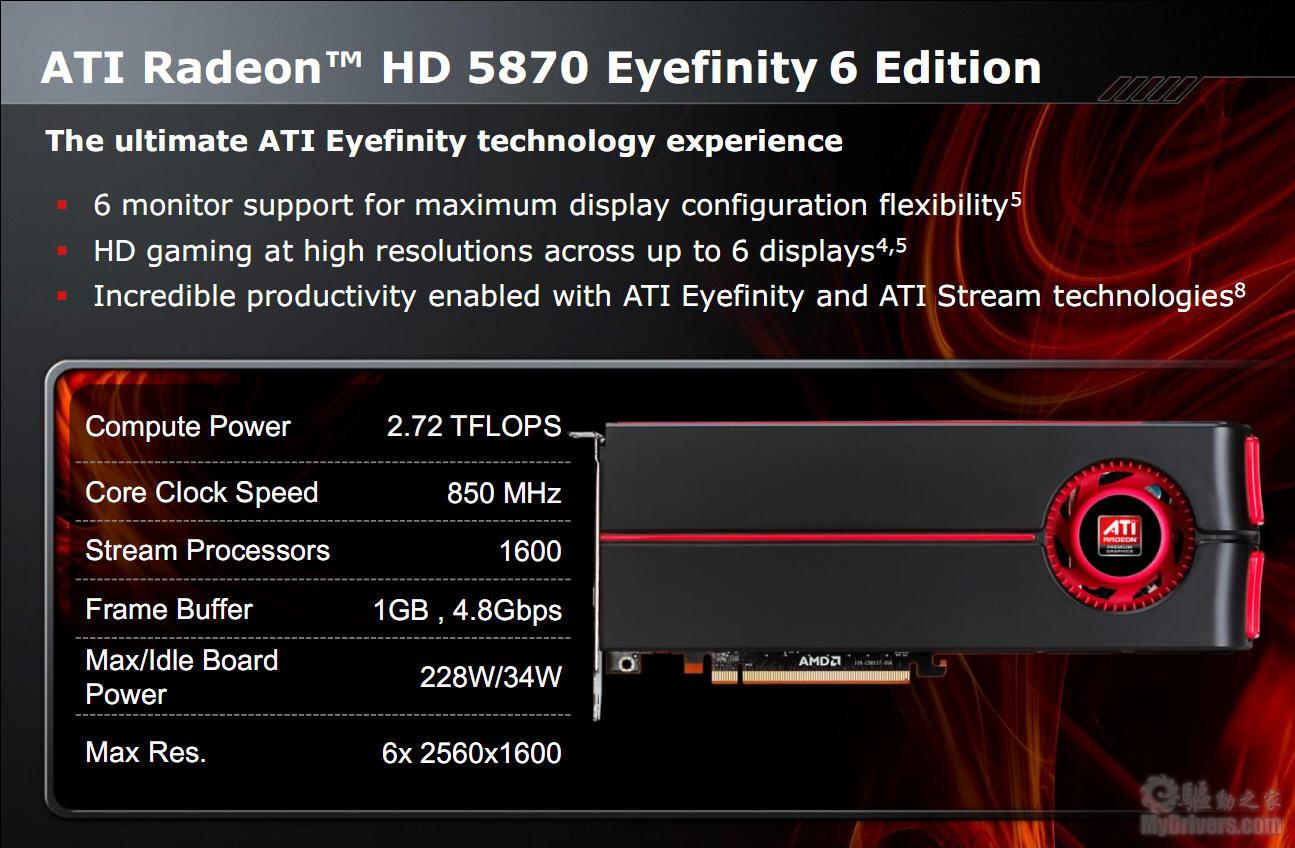

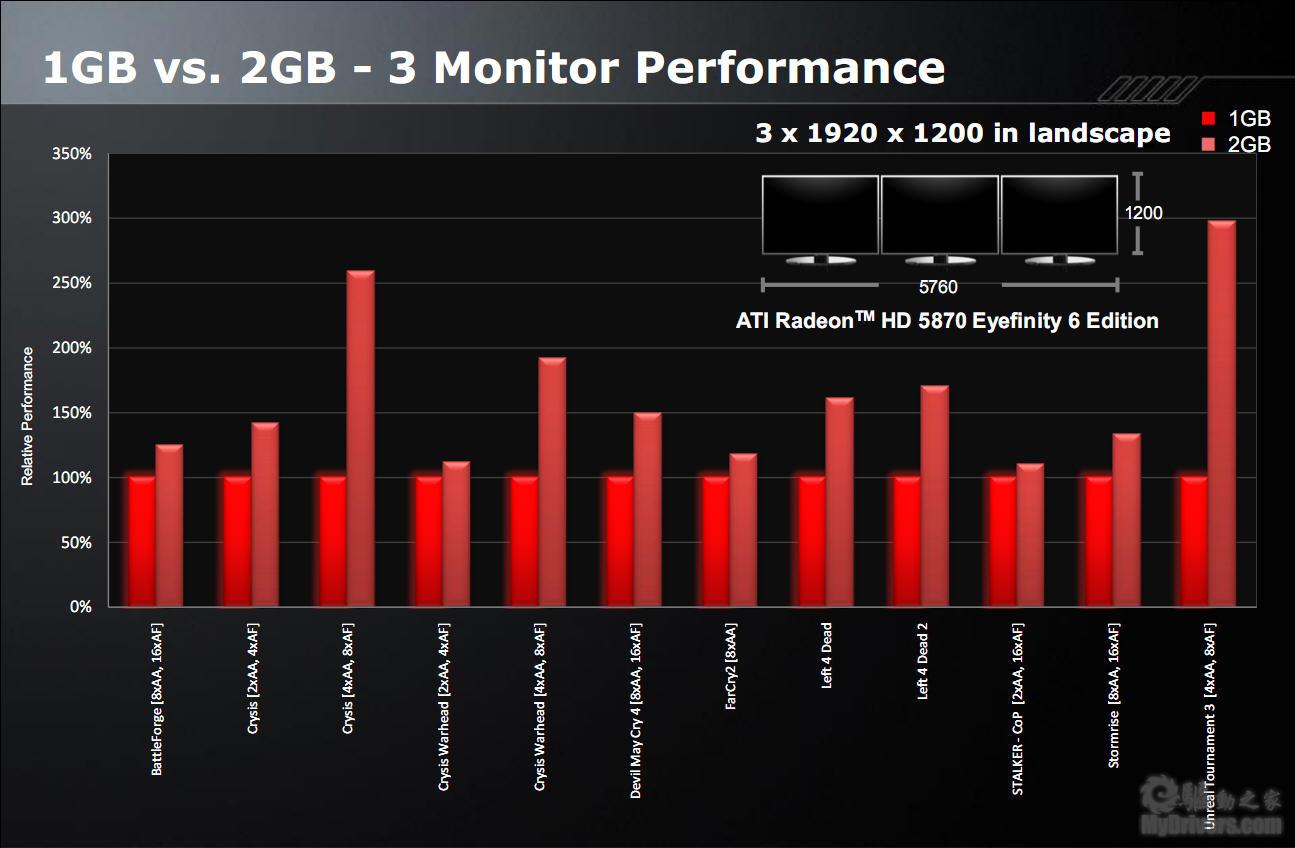

I hope these slides are not the real final launch ones...

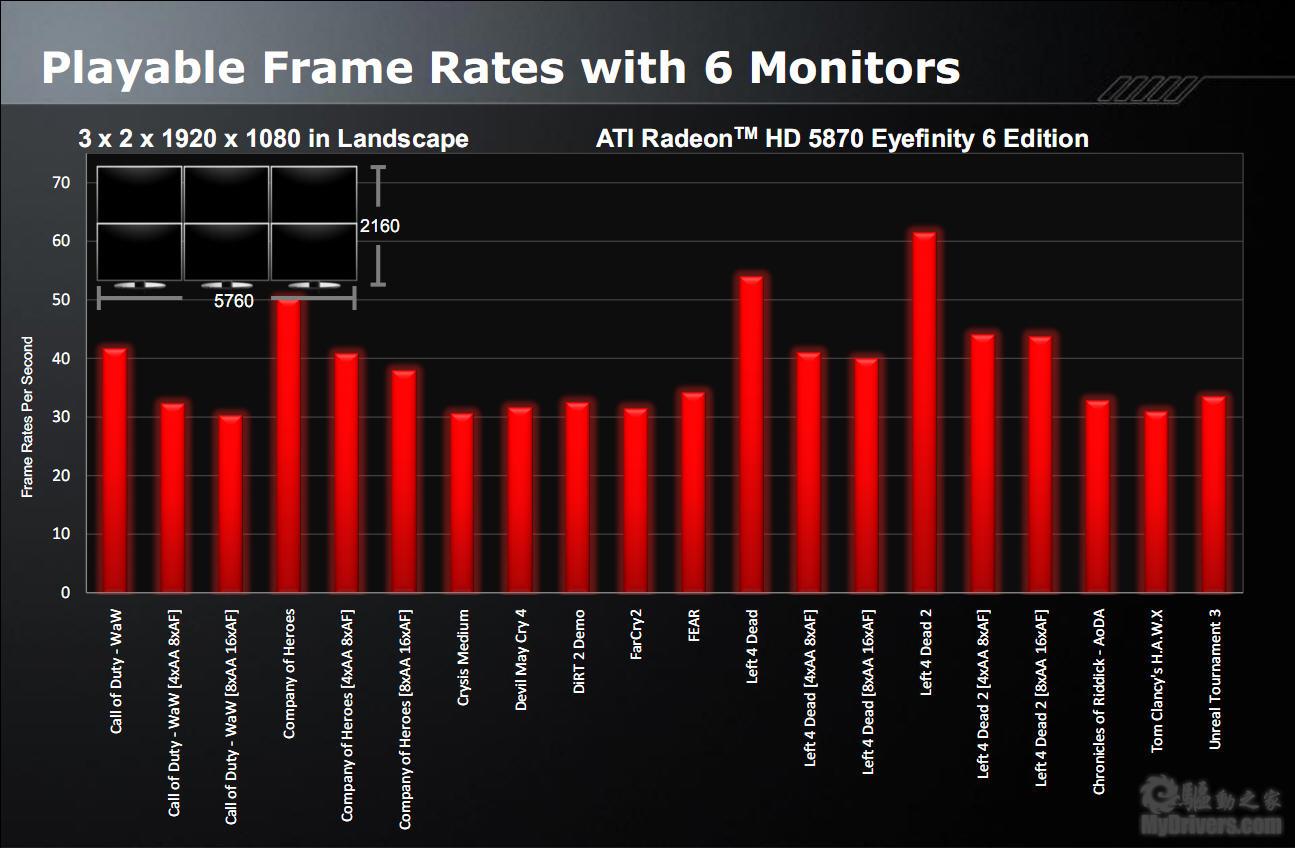

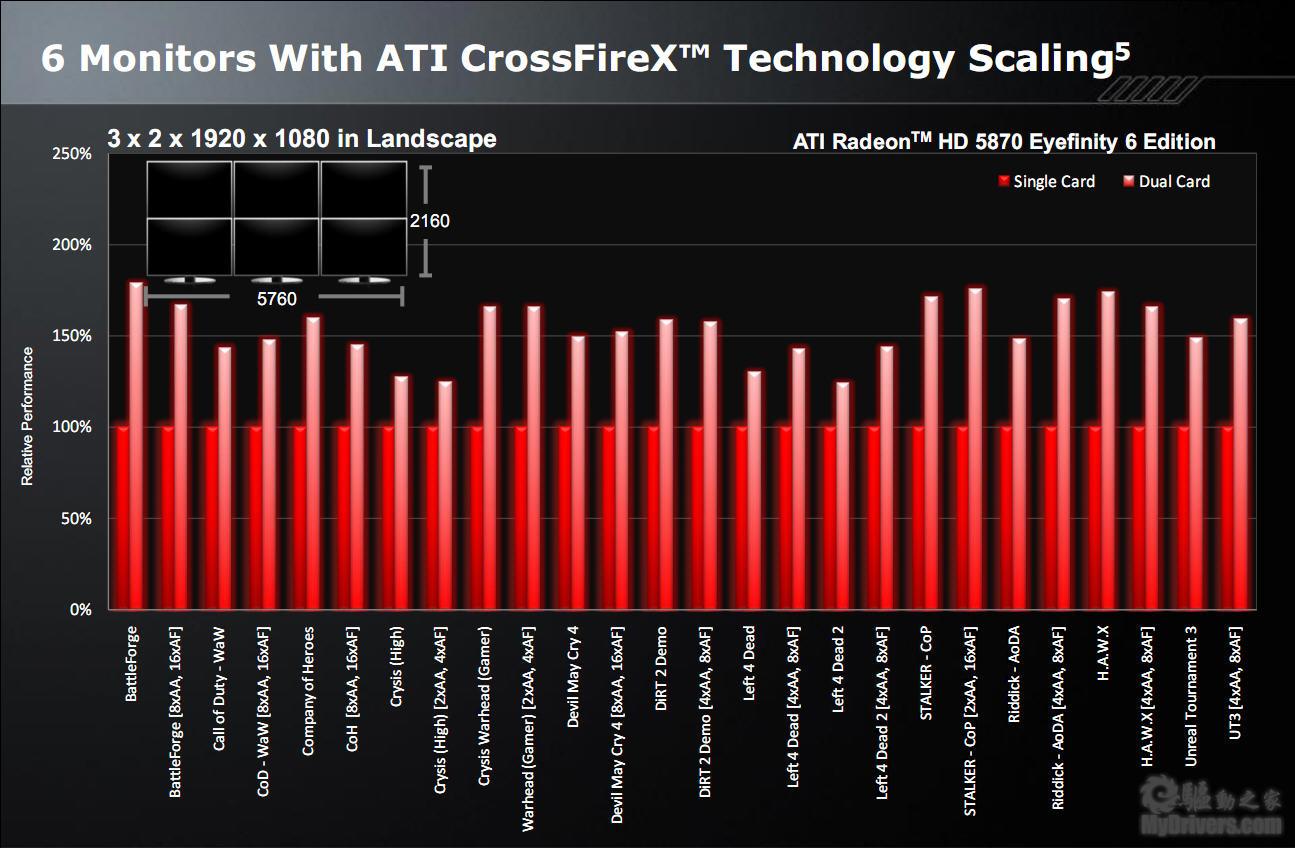

"Frame Rates per Second" along with relative performance numbers without details on the test conditions (although they're mentioned in other graphs, so it's not a lack of useable space), that's not quite "pro".

Apart from that, it's at the same time positive for the Eyefinity6 version (quite good framerates in 6x2) and for the regular 5870 (not that much gain in 3x1).


Silent_Buddha

Legend

Nice. Although whenever I get around to doing Eyefinity, I'm not going for 6 monitors. The main benefit for me would be ultra-widescreen for expanded FOV. 6 monitors will still have that to an extent, but less so than a 3 monitor setup. Additionally, having the horizontal bezel right in the middle might be a problem for FPS games and crosshairs.

That said 6 monitor would rock for MMORPGs and RTS games. Starcraft 2 on 6 monitors. Ooooh.

Regards,

SB


Someone alert the system administrator, it appears one of the video cards is spitting out superheated gas


I hear the PM is a big fan of all sorts of hot sauces...maybe that's the aftermath. What goes in must come out, one would assume

1GB framebuffer? I thought the Eyefinity6 edition was being released as 2GB, as the later slide suggests given the 1GB vs 2GB comparison.

AFAIK Blizzard stated they won't be supporting eyefinity due to advantage in player vs player.


The 1GB framebuffer figure is just a typo.

Is this not a Blizzard game?


I was referring to Starcraft 2 in particular; yes, WoW supports Eyefinity already.
