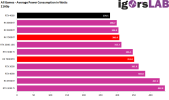

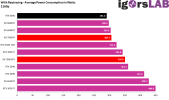

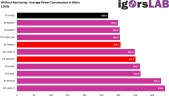

Why does the 7900 XTX show 0.5-2.5 GB more "VRAM used" than the 4080, especially with RT? It also clocks much lower in some RT tests than in the rasterized test.

Joker Productions made a nice comparison between the 7900 XTX and the 4080 using an overlay:

I have no idea which clock rate this overlay reads, but in F1 22 the 7900 XTX can clock below 2000 MHz. That explains its low performance in a few games:

Guardians of the Galaxy and F1 22 show the biggest VRAM deltas. The 7900 XTX clocks quite high in both Guardians tests but is much slower than the 4080 in both. It clocks quite low in both F1 tests, yet it is only slower with RT.
