"AMD needs at least a 3x increase (which is rumored) to not be obliterated again this fall."

3x would put them about on par with Ampere. I think they'll need more than that to remain on par with Lovelace.

GPU Ray Tracing Performance Comparisons [2021-2022]

- Thread starter DavidGraham

DavidGraham

Veteran

"No need to spam the forums with every single game performance test you come across"

If you didn't notice, this thread was created specifically for that purpose.

Hardware-based techniques applicable for ray tracing for efficiently representing and processing an arbitrary bounding volume - NVIDIA Corporation (freepatentsonline.com)

Nvidia seems to be considering both hardware SBVH and OBB (sort of) for better traversal performance.

12 box intersections per clock. I think earlier patents mentioned 8. I wonder if Turing and Ampere already support these sub-box intersections as it looks like a big efficiency win for arbitrarily shaped geometry.

As far as I know, Turing and Ampere share basically the same RT cores, with some small tweaks on Ampere (triangle position interpolation and motion blur).

Lovelace is really the next big evolution. Without marketing blurb, it should be:

Turing RT core v1

Ampere v1.1

Lovelace v2

Hopefully it is. Sadly, rumors suggest it's just another Ampere, with small improvements and most of the extra performance coming from higher power draw. So that would make it v1.2.

Sadly rumors suggest it's just another Ampere

Not that that would be bad, if it turns out to be the case.

Just like Ampere is "just another Volta"?

The fact that the basic architecture of the multiprocessors will remain the same means next to nothing for either the chip's capabilities or its ray tracing performance (or even its performance in general).

RDNA2 multiprocessors are essentially the same as those of RDNA1, for example.

At this point, 22 years after the first GPU, one can argue that every new gen is only an evolution. Fundamental blocks like ALUs and the 3D pipeline are well known, leaving little room for improvement. But the truth is that Lovelace (and RDNA3) will bring performance increases that will remind us of the early days of GPUs.

Regarding the new features that this board will deep dive into for weeks and weeks after the public announcement, it suffices to look at the A100 to H100 evolution to get an idea of what to expect from one generation jump. Some think that H100 is just a refined A100. Others think it's a major new architecture. So I guess the final answer on Lovelace will depend on each person's sensitivity.

One thing is sure, with next gen from both AMD and Nvidia coming at nearly same time, winter will be hot in this forum

The next battleground is "RT On", so I expect much of the innovation in APIs and hardware to occur in that space. Thankfully we seem to have moved on from the "does RT matter" debate, and AAA games without RT will be the exception going forward. There is still room for big leaps in RT performance, so that should hopefully be more exciting than the usual incremental bumps in flops and bandwidth.

"AAA games without RT"

There aren't many AAA games without RT; in the future there will probably be none.

DavidGraham

Veteran

A new ray tracing benchmark from BaseMark, called Relic Of Life, has been released. At 4K, the 3090Ti is almost 3 times faster than the 6900XT.

https://www.comptoir-hardware.com/a...-test-nvidia-geforce-rtx-3090-ti.html?start=5

Some other game tests from the same site at 4K resolution, focusing on the 3090Ti vs 6900XT.

Minecraft: 3x faster than the 6900XT

Quake 2 RTX: 2.5x faster

Cyberpunk 2077: 2x faster

Dying Light 2: 2x faster

Doom Eternal: 65% faster

Watch Dogs Legion: 62% faster

Ghostwire Tokyo: 60% faster

F1 2021: 58% faster

Metro Exodus Enhanced: 54% faster

Control: 51% faster

Deathloop: 48% faster

Resident Evil Village: 15% faster

https://www.comptoir-hardware.com/a...-test-nvidia-geforce-rtx-3090-ti.html?start=4
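To compare the figures above on one scale, here is a minimal Python sketch that converts both notations into a plain speed ratio and summarizes them with a geometric mean. It rests on an assumption about how the post uses the wording: "Nx faster" is read as a ratio of N, and "N% faster" as a ratio of 1 + N/100. The helper name `to_ratio` is made up for illustration, and the inputs are only the numbers quoted in this post, not re-measured data.

```python
from math import prod

# Figures as quoted in the post above (3090Ti vs 6900XT at 4K).
# Assumption: "Nx" means a speed ratio of N, "N%" means a ratio of 1 + N/100.
results = {
    "Minecraft": "3x",
    "Quake 2 RTX": "2.5x",
    "Cyberpunk 2077": "2x",
    "Dying Light 2": "2x",
    "Doom Eternal": "65%",
    "Watch Dogs Legion": "62%",
    "Ghostwire Tokyo": "60%",
    "F1 2021": "58%",
    "Metro Exodus Enhanced": "54%",
    "Control": "51%",
    "Deathloop": "48%",
    "Resident Evil Village": "15%",
}


def to_ratio(text):
    """Convert '2.5x' or '65%' into a speed ratio (hypothetical helper)."""
    text = text.strip().lower()
    if text.endswith("%"):
        return 1 + float(text[:-1]) / 100
    if text.endswith("x"):
        return float(text[:-1])
    raise ValueError(f"unrecognized speedup notation: {text!r}")


ratios = {game: to_ratio(value) for game, value in results.items()}

# The geometric mean is the usual way to summarize ratios.
geomean = prod(ratios.values()) ** (1 / len(ratios))

for game, ratio in sorted(ratios.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{game:<24}{ratio:.2f}x")
print(f"{'geometric mean':<24}{geomean:.2f}x")
```

Keeping the per-game ratios next to the summary matters here, because the spread between Minecraft and Resident Evil Village is much larger than any single average suggests.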

arandomguy

Regular

Yeah, that’s been my experience in GPU-limited scenarios. Current cards are really good at holding at the power limit. So if they’re reading lower, it’s due to a CPU limitation or some other fps cap. A 3090 power limited to 300 W would probably still wipe the floor with the 6900XT.

An alternative that might be interesting is to compare clock speeds in RT vs non-RT workloads. I wonder if clock rates end up lower under RT workloads.

Something I posed when the "power creep" was first rumored for the then-upcoming Ampere generation was whether a factor in that decision was accounting for what happens when all the different, more specialized hardware units end up being used concurrently. I'm at least guessing that the efficiency curve in terms of clock speed vs power usage might be different with, say, just raster resources in use vs, hypothetically, raster, RT and even tensor units simultaneously. So if you set the clock table more for the latter, it would inherently be less efficient in theory for raster alone, since it would end up further into the diminishing-returns end of the frequency/power curve.

Hopefully it is. Sadly rumors suggest it's just another Ampere, having small improvements but having much more performance accomplished by higher power draw. So that would make it v1.2.

In terms of that rumor, I thought it might be specifically referring to no changes in the SM configuration. The last few generations have made changes focused in that area: Pascal->Turing with separate FPU and ALU, Turing->Ampere with x2 FPU. If we count Pascal as having changed too, in that it halved the FPUs per SM from Maxwell, then I believe they've made SM configuration changes every generation.

Nebuchadnezzar

Legend

"A new ray tracing benchmark from BaseMark, called Relic Of Life, has been released. At 4K, the 3090Ti is almost 3 times faster than the 6900XT."

In my experience over the years you should not trust BaseMark with any kind of accurate performance data. They do not have the resources to validate and optimise their benchmarks.

It’s also really ugly…

DavidGraham

Veteran

Overclock3D's latest review shows the 2080Ti being 10% faster than the 6900XT in Metro Exodus Enhanced Edition, Control and Cyberpunk @4K.

As for the situation with the 3090Ti, it showed the same huge gaps vs the 6900XT in games with multiple RT effects at 4K resolution.

Metro Exodus Enhanced: 3090Ti is 2.2x faster

Control: 3090Ti is 2x faster

Cyberpunk 2077: 3090Ti is 2x faster

Watch Dogs Legion: 3090Ti is 60% faster

https://www.overclock3d.net/reviews/gpu_displays/gigabyte_rtx_3090_ti_oc_review/6

PurePC reached almost the same conclusion as well: the 2080Ti is faster than the 6900XT in Control and Cyberpunk, and ties it in Metro Exodus Enhanced Edition @4K.

As for the situation with the 3090Ti, it continues the same story vs the 6900XT @4K.

Control: 3090Ti is 2x faster

Cyberpunk 2077: 3090Ti is 2x faster

Metro Exodus Enhanced: 3090Ti is 80% faster

https://www.purepc.pl/test-karty-graficznej-nvidia-geforce-rtx-3090-ti?page=0,19

No apparent reason not to expect some changes here in Lovelace either. No idea what that rumor is referring to.

GPU Benchmarks Hierarchy 2022 - Graphics Card Rankings | Tom's Hardware (tomshardware.com)

April 8, 2022

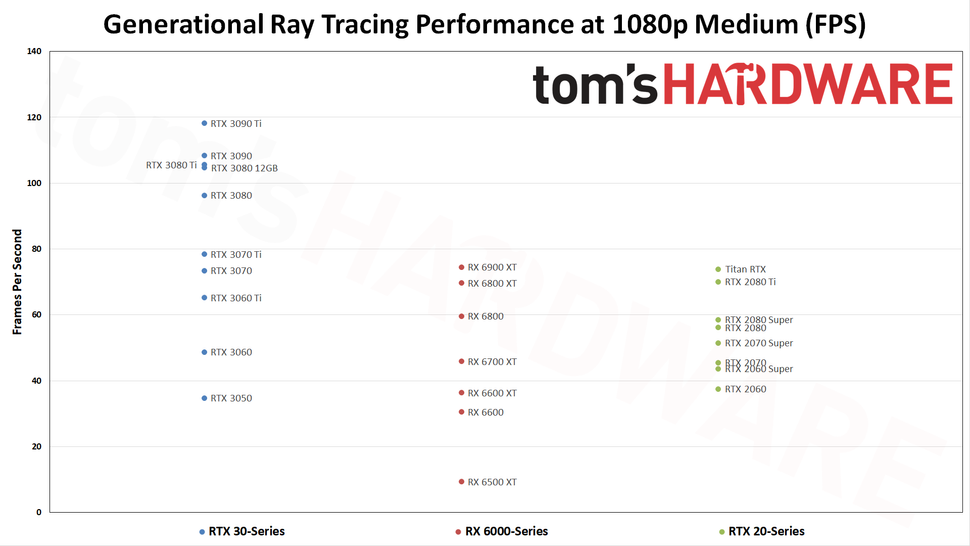

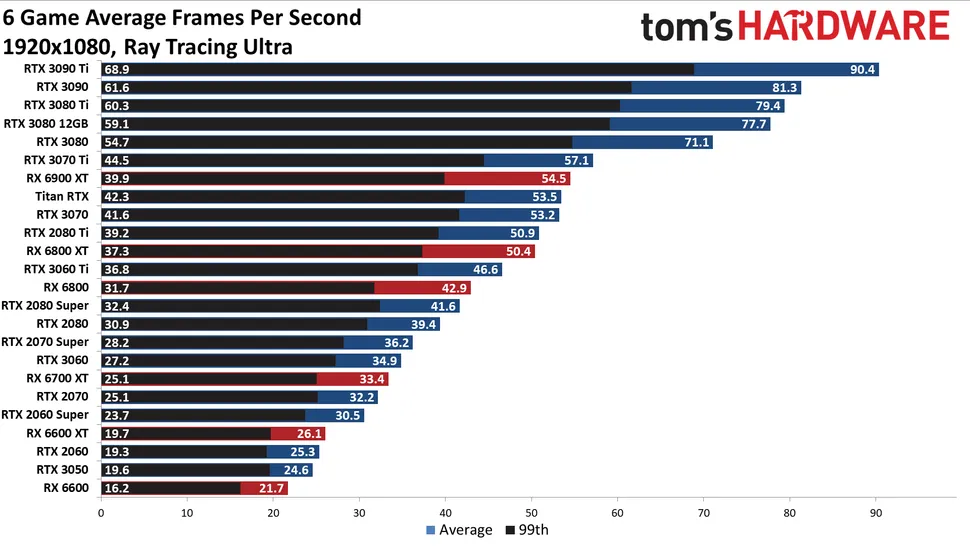

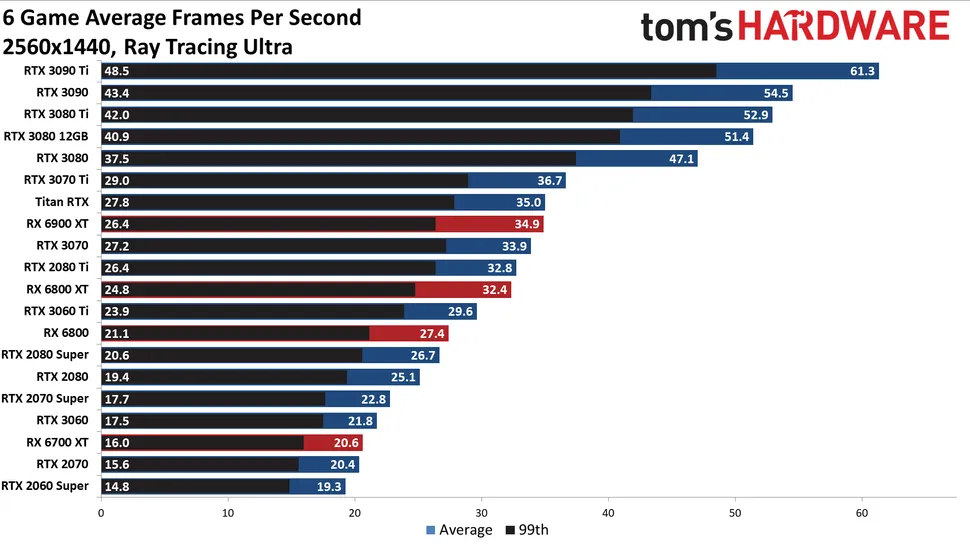

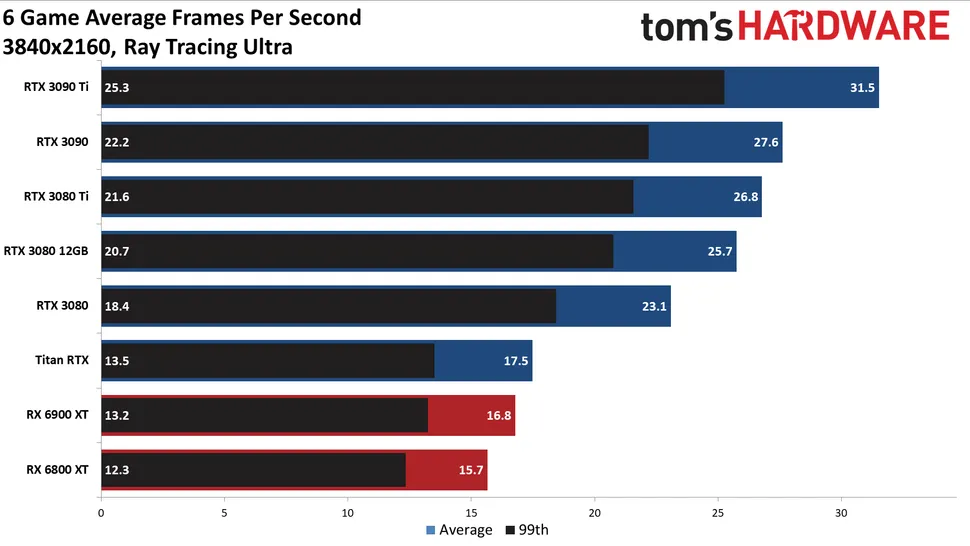

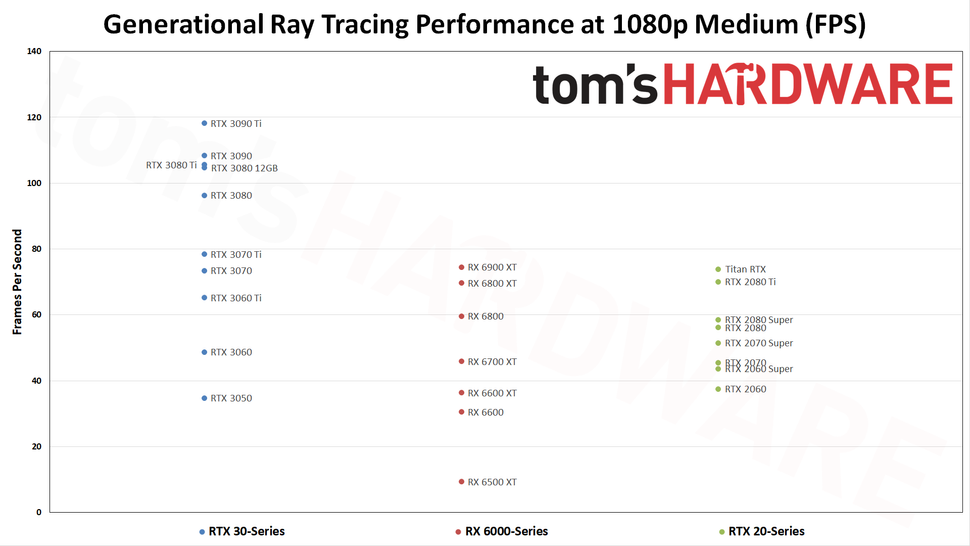

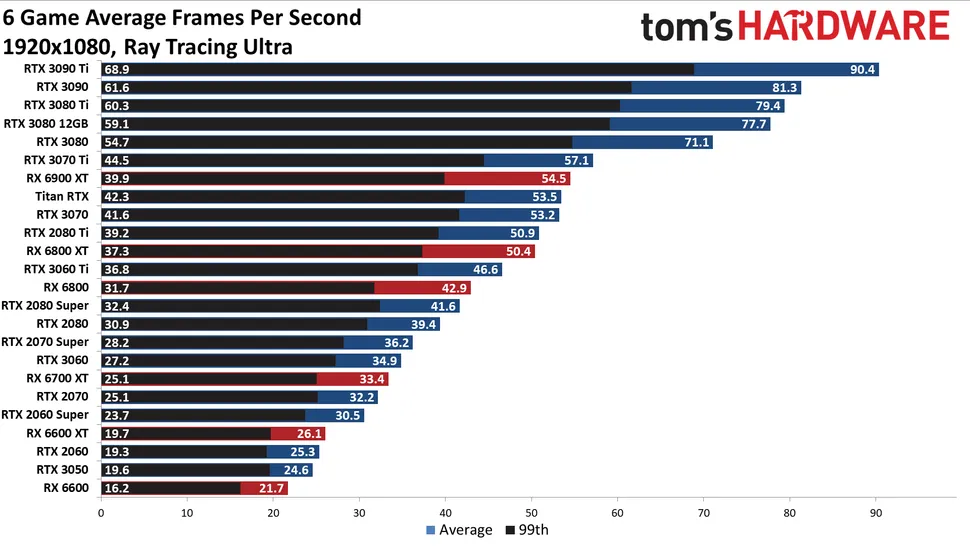

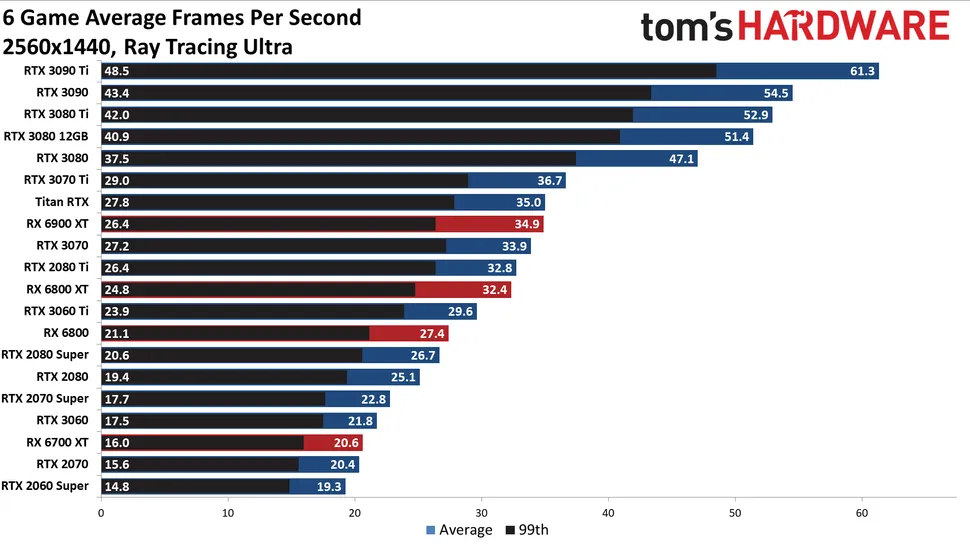

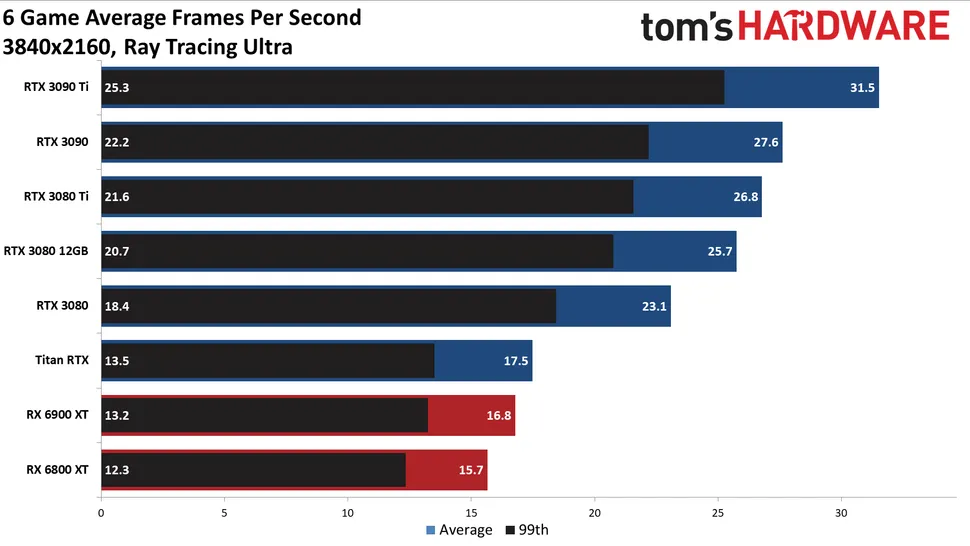

We've revamped our GPU testbed and updated all of our benchmarks for 2022, and are nearly finished retesting every graphics card from the past several generations. We have completed testing of the current generation AMD RDNA 2 and Nvidia Ampere GPUs, the Turing and RDNA series, and many of the other generations as well. Most of what remains are either budget offerings or extreme Titan cards, or cards that are no longer supported with the current drivers. We'll continue to flesh out the remaining holes with more GPUs as we complete testing.

DavidGraham

Veteran

They tested six ray tracing games: Bright Memory Infinite, Control Ultimate Edition, Cyberpunk 2077, Fortnite, Metro Exodus Enhanced, and Minecraft. The 3090Ti is essentially 2 times faster than the 6900XT in these games.

Why can't anyone be bothered to normalize their fps*? It's not really rocket science, you can google it into Excel in five minutes max and have much more valuable data.

*Yes, I realize they have tables normalized to a geo mean of fps, but that's like so...

Edit: I feel I need to spell it out once. With plain averages (the worst), you potentially (numbers very much exaggerated) do this:

Game A

Card n0 120 fps

Card n1 240 fps

Card n2 390 fps

Game B

Card n0 60 fps

Card n1 30 fps

Card n2 10 fps (for example too little VRAM)

Your averaged results (plain arithmetic mean of the fps):

Card n0 avg. 90 fps

Card n1 avg. 135 fps

Card n2 avg. 200 fps

Your (flawed) conclusion: Card n0 is slowest, card n1 is mediocre and card n2 is uber!!! You not only completely miss the fact that you can barely play Game B on card n1 and almost not at all on card n2, your conclusion points in the opposite direction!

With normalized numbers (fastest card in each game = 100%), you arrive at

Game A

Card n0 120 fps = 30.8%

Card n1 240 fps = 61.5%

Card n2 390 fps = 100%

Game B

Card n0 60 fps = 100%

Card n1 30 fps = 50%

Card n2 10 fps = 16.7%

You could just average the normalized values:

Card n0 avg. of normalized values 65.4%

Card n1 avg. of normalized values 55.8%

Card n2 avg. of normalized values 58.4%

Or, if you want to give a fixed reference point, you could normalize those percentages again, so people can readily see how good 58.4% really is without an external anchor.

Card n0 normalized index 100%

Card n1 normalized index 85.3%

Card n2 normalized index 89.3%

At least you're not presenting a false winner. And still, you don't see that there are games which just don't run well on cards n1 and n2 with the chosen settings. So please provide your raw fps/ms, whatever it is, so people can verify your conclusion (and point out any error you may have made!) and weigh it differently to arrive at their individual conclusion (Game F does not interest me / I don't have a 4K display, so I'll disregard this).
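For anyone who wants to redo the arithmetic, here is a minimal Python sketch of the example above. It uses only the exaggerated fps figures from this post. The function names are made up for illustration, and the geometric mean of raw fps is an extra column added for comparison, not something the post itself works through. Because the sketch does not round intermediate values, the last digit can differ slightly from the hand-rounded numbers above.

```python
from math import prod
from statistics import mean

# Raw fps from the (deliberately exaggerated) example in the post above.
fps = {
    "Game A": {"n0": 120, "n1": 240, "n2": 390},
    "Game B": {"n0": 60, "n1": 30, "n2": 10},
}
cards = ["n0", "n1", "n2"]


def raw_average(fps, card):
    """Plain arithmetic mean of raw fps across games."""
    return mean(game[card] for game in fps.values())


def raw_geomean(fps, card):
    """Geometric mean of raw fps across games (added for comparison)."""
    values = [game[card] for game in fps.values()]
    return prod(values) ** (1 / len(values))


def normalized_average(fps, card):
    """Mean of per-game percentages, with the fastest card per game = 100%."""
    return mean(100 * game[card] / max(game.values()) for game in fps.values())


def index(scores):
    """Rescale scores so that the best card is 100%."""
    best = max(scores.values())
    return {card: 100 * score / best for card, score in scores.items()}


raw = {c: raw_average(fps, c) for c in cards}
geo = {c: raw_geomean(fps, c) for c in cards}
norm = {c: normalized_average(fps, c) for c in cards}
idx = index(norm)

for c in cards:
    print(f"{c}: avg {raw[c]:.0f} fps, geomean {geo[c]:.1f} fps, "
          f"normalized {norm[c]:.1f}%, index {idx[c]:.1f}%")
```

The raw average ranks n2 first, while the normalized average ranks n0 first, which is the reversal described above. Neither summary replaces the raw per-game numbers.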
