Current Generation Hardware Speculation with a Technical Spin [post GDC 2020] [XBSX, PS5]

- Thread starter Proelite

- Status

- Not open for further replies.

It's most likely a standard RDNA2 feature.

Why was VRS not mentioned in the deep dive? If PS5 doesn't have it, then it's going to be noticeably weaker in third-party games.

After RT, VRS is the 2nd biggest feature of RDNA 2.

VRS is basically a tweaked MSAA.
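The MSAA comparison makes sense once you look at what coarse shading does. MSAA runs the pixel shader once per pixel and reuses the result for several coverage samples. VRS goes a step further and runs one invocation for a whole block of pixels (e.g. 2x2), broadcasting the result. The toy count below is a minimal sketch under that assumption. The function names and the "shade the outer quarters at 2x2" policy are hypothetical, not any API's behaviour.

```python
# Toy model of variable rate shading (VRS): at a coarse rate such as 2x2,
# one pixel-shader invocation covers a whole block of pixels and its result
# is broadcast to every pixel in the block. Function names are hypothetical.
from math import ceil


def invocations_for_region(width, height, rate):
    """Pixel-shader invocations needed to cover a width x height region
    at a coarse shading rate of (rate_x, rate_y) pixels per invocation."""
    rate_x, rate_y = rate
    return ceil(width / rate_x) * ceil(height / rate_y)


def frame_invocations(width, height, tile, rate_for_tile):
    """Sum invocations over screen tiles; rate_for_tile(x, y) picks the
    shading rate for the tile whose top-left pixel is (x, y)."""
    total = 0
    for y in range(0, height, tile):
        for x in range(0, width, tile):
            w = min(tile, width - x)
            h = min(tile, height - y)
            total += invocations_for_region(w, h, rate_for_tile(x, y))
    return total


W, H, TILE = 3840, 2160, 16
full_rate = frame_invocations(W, H, TILE, lambda x, y: (1, 1))
# Hypothetical policy: shade the outer left and right quarters at 2x2.
mixed_rate = frame_invocations(
    W, H, TILE,
    lambda x, y: (2, 2) if x < W // 4 or x >= 3 * W // 4 else (1, 1),
)
print(full_rate, mixed_rate, f"{mixed_rate / full_rate:.3f}")
```

Shading half of a 4K frame at 2x2 brings the pixel-shader work down to 62.5% of full rate. This is a real saving, but it is a shading-cost optimisation rather than a new rendering capability.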

It certainly is not the most important feature after RT.

I'm not even sure if RT is the most important feature.

Getting rid of the old vertex shader pipeline is quite a big one; developers can now write nice tessellators and lots of interesting things with mesh shaders.
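Mesh shaders typically work on small clusters of triangles ("meshlets") rather than on individual vertices, so engines preprocess meshes into such clusters. Below is a minimal greedy meshlet builder, written as a sketch. The 64-vertex / 126-triangle limits are illustrative defaults assumed here, not values mandated by DX12 or any console API. The function name is hypothetical.

```python
# Minimal greedy meshlet builder: splits a triangle list into small clusters
# with at most max_verts unique vertices and max_tris triangles, the unit of
# work a mesh-shader workgroup typically processes. Limits are illustrative.


def build_meshlets(indices, max_verts=64, max_tris=126):
    """indices: flat list of vertex indices, 3 per triangle.
    Returns a list of (vertex_list, local_triangles); local_triangles index
    into that meshlet's own vertex_list."""
    if len(indices) % 3:
        raise ValueError("index count must be a multiple of 3")
    meshlets = []
    verts, remap, tris = [], {}, []

    def flush():
        nonlocal verts, remap, tris
        if tris:
            meshlets.append((verts, tris))
        verts, remap, tris = [], {}, []

    for i in range(0, len(indices), 3):
        tri = indices[i:i + 3]
        new = [v for v in dict.fromkeys(tri) if v not in remap]
        # Start a new meshlet if this triangle would overflow either limit.
        if len(verts) + len(new) > max_verts or len(tris) + 1 > max_tris:
            flush()
        for v in tri:
            if v not in remap:
                remap[v] = len(verts)
                verts.append(v)
        tris.append(tuple(remap[v] for v in tri))
    flush()
    return meshlets


# A strip of 200 triangles: each triangle (i, i+1, i+2) adds one new vertex,
# so the vertex limit is hit long before the triangle limit.
strip = [v for i in range(200) for v in (i, i + 1, i + 2)]
meshlets = build_meshlets(strip)
print(len(meshlets), [len(tris) for _, tris in meshlets])
```

Greedy splitting in index order is the simplest choice. Production tools also try to keep meshlets spatially compact so they cull well.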

New texture samplers and other additions are also quite big ones; we will see a lot of interesting takes on shading, shader reuse and so on (perhaps quite silly things, like creating narrow/cone-area lightfields with RT at runtime for far reflections and storing them in textures, etc.).
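Sampler feedback, one of the new sampling features discussed in this thread, records which regions and mip levels of a texture were actually requested during sampling. A streaming system can then load only those. The sketch below is a toy CPU model of a "MinMip"-style feedback map under that assumption. The real feature is written by the GPU sampler and exposed through D3D12, and the class and helper names here are hypothetical.

```python
# Toy CPU model of a "MinMip" feedback map in the spirit of sampler feedback:
# for each coarse region of a texture, remember the most detailed (lowest
# numbered) mip level any sample requested. Names here are hypothetical.
import math


class MinMipFeedback:
    def __init__(self, regions_x, regions_y, mip_count):
        self.regions_x = regions_x
        self.regions_y = regions_y
        self.unused = mip_count  # sentinel meaning "never sampled"
        self.map = [[mip_count] * regions_x for _ in range(regions_y)]

    def record(self, u, v, mip):
        """Record one sample at normalized (u, v) that used mip level `mip`."""
        x = min(int(u * self.regions_x), self.regions_x - 1)
        y = min(int(v * self.regions_y), self.regions_y - 1)
        self.map[y][x] = min(self.map[y][x], mip)

    def regions_to_stream(self):
        """(x, y, finest_mip) for every region that was actually sampled."""
        return [(x, y, m)
                for y, row in enumerate(self.map)
                for x, m in enumerate(row)
                if m != self.unused]


def mip_for_footprint(texels_per_pixel):
    """Simplified LOD estimate: floor(log2(texel footprint)), clamped at 0."""
    return max(0, math.floor(math.log2(max(texels_per_pixel, 1.0))))


fb = MinMipFeedback(regions_x=4, regions_y=4, mip_count=10)
fb.record(0.1, 0.1, mip_for_footprint(8.0))  # distant sample in region (0, 0)
fb.record(0.1, 0.1, mip_for_footprint(2.0))  # closer sample, same region
fb.record(0.9, 0.9, 5)
print(fb.regions_to_stream())
```

Only two of the sixteen regions end up needing data, each at the finest mip actually requested. That is the kind of saving the feature is aimed at.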

Pretty sure it's an RDNA2 feature, so yes, I'm certain of it.

And, BTW, does PS5 support Sampler Feedback?

API limitations have finally started to open up even on the PC side; it will be very interesting to see if there are still some on DX12/Vulkan.

Based on the fact they're advertising it as an RDNA2 part, yes, the hardware should be capable of it. Until proven otherwise, you can assume feature parity between the consoles on the graphics side of things (API limitations aside).

It was interesting to see mentions of barycentric coordinates being accessed on PS4, when GCN/APIs didn't allow it on PC.
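For context, barycentric coordinates are the three weights that express a point inside a triangle as a blend of its vertices. The rasterizer uses them to interpolate attributes, and exposing them to the pixel shader lets developers do custom interpolation. Below is a minimal sketch of the standard edge-function formulation. It is affine (screen-space) only and ignores the perspective correction real hardware applies. The function names are illustrative.

```python
# Screen-space barycentric coordinates via edge functions (signed areas).
# This is the affine version; real hardware also applies perspective
# correction when interpolating attributes. Function names are illustrative.


def edge(p0, p1, q):
    """Twice the signed area of triangle (p0, p1, q)."""
    return (p1[0] - p0[0]) * (q[1] - p0[1]) - (p1[1] - p0[1]) * (q[0] - p0[0])


def barycentric(p, a, b, c):
    """Weights (wa, wb, wc) with p = wa*a + wb*b + wc*c and wa + wb + wc = 1."""
    area = edge(a, b, c)
    if area == 0:
        raise ValueError("degenerate triangle")
    return (edge(b, c, p) / area, edge(c, a, p) / area, edge(a, b, p) / area)


def interpolate(weights, attrs):
    """Blend per-vertex attribute tuples with barycentric weights."""
    return tuple(sum(w * attr[i] for w, attr in zip(weights, attrs))
                 for i in range(len(attrs[0])))


tri = ((0.0, 0.0), (1.0, 0.0), (0.0, 1.0))
w = barycentric((0.25, 0.25), *tri)
colors = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
print(w, interpolate(w, colors))
```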

Silent_Buddha

Legend

Given that both CPUs are based on the same architecture and the 0.1 GHz difference, if any game causes a significant drop on PS5 due to hitting CPU limits, XBSX will suffer a similar drop as well.

It's 0.1 GHz when comparing the XBSX CPU with SMT against the PS5 CPU, whose SMT status is unknown.

It's 0.3 GHz when comparing the XBSX CPU without SMT against the PS5 CPU, whose SMT status is unknown.
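As a quick check of these gaps: the announced clocks are 3.8 GHz (SMT off) and 3.66 GHz (SMT on) for XBSX, and up to 3.5 GHz for PS5. The "0.1 GHz" figure is therefore a rounding of about 0.16 GHz. Because the PS5 number is a ceiling rather than a locked clock, these are the gaps when PS5 is at its maximum. The helper name below is hypothetical.

```python
# Clock gap arithmetic using the announced CPU clocks.
XSX_SMT_ON_GHZ = 3.66   # Xbox Series X, SMT enabled
XSX_SMT_OFF_GHZ = 3.8   # Xbox Series X, SMT disabled
PS5_MAX_GHZ = 3.5       # PS5 runs "up to" 3.5 GHz (variable); SMT status unknown per the post


def clock_gap(xsx_ghz, ps5_ghz=PS5_MAX_GHZ):
    """Absolute gap in GHz and relative gap versus the PS5 ceiling."""
    delta = xsx_ghz - ps5_ghz
    return delta, delta / ps5_ghz


for label, ghz in (("SMT on", XSX_SMT_ON_GHZ), ("SMT off", XSX_SMT_OFF_GHZ)):
    delta, rel = clock_gap(ghz)
    print(f"XBSX {label}: +{delta:.2f} GHz ({rel:.1%}) over PS5's ceiling")
```

That works out to roughly a 4.6% gap with SMT and 8.6% without. Any PS5 downclock would widen both.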

If the PS5 CPU runs at 3.5 GHz with SMT enabled, it's going to consume more power than it would without SMT, which in turn means there's a far larger chance that the PS5 will have to downclock the GPU.

Of course if a developer is pushing the PS5 GPU particularly hard, then the PS5 CPU may not be able to hit 3.5 GHz regardless of SMT or no SMT.

That said, I don't expect this to lead to any large disparity in presentation between the two consoles.

The only practical difference this will make, IMO, is that developers on PS5 have to pay attention to how they use the CPU and GPU, whereas on XBSX they get the same performance from each regardless of how they use it.

Regards,

SB

Given that both CPUs are based on the same architecture and the 0.1 GHz difference, if any game causes a significant drop on PS5 due to hitting CPU limits, XBSX will suffer a similar drop as well.

On the topic of CPU, Alex from DF just posted on ResetEra: "Zen just runs around the Jag that most cross gen games are not going to worry about CPU time". By the time any game hits CPU limits on these consoles, we will probably already have a PS5 Pro on the market.

Considering one is advertised as a best-case "boost" and the other is locked in, we actually don't know the speed difference between the two.

I'm not saying XBSX has a meaningful advantage! It's a qualitative difference between the advantage of SSD speed and that of CPU speed, not a quantitative one where one side is much better off. A CPU speed advantage will affect every game on the platform with no work needed by the devs, whether that's by 10% or 0.1%. Have a game that's maxing out the system? Then a faster CPU makes it run faster.

The SSD speed advantage, OTOH, is likely going to be pegged to XBSX's performance for now. It's a new tech that devs don't yet know how to use or scale ideally, and it's already some two orders of magnitude faster than the 20 MB/s streaming target of PS4. For a dev to make their game better on PS5 thanks to its faster SSD, they'll have to deliberately develop for it, unlike making use of XBSX's faster CPU, which just happens. Maybe down the line, engines will scale naturally based on storage performance just as they do with graphics and CPU performance (we have that now as regards pop-in on streaming assets, at least), but certainly for the time being, PS5's double-speed SSD isn't going to yield massive advantages in cross-platform games. Cross-platform games will target the most sensible storage performance target and not care about optimising for a niche audience.
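The "two orders of magnitude" figure can be checked against the announced raw SSD rates (2.4 GB/s for XBSX, 5.5 GB/s for PS5). This check assumes the PS4 streaming target is 20 MB/s, since only a MB/s reading makes the two-orders claim line up. The helper name is hypothetical.

```python
import math

PS4_STREAMING_MB_S = 20  # PS4 streaming target from the post, read as MB/s
RAW_SSD_MB_S = {"XBSX": 2400, "PS5": 5500}  # announced raw (uncompressed) rates


def speedup(rate_mb_s, baseline_mb_s=PS4_STREAMING_MB_S):
    """Return (ratio, orders of magnitude) relative to the baseline."""
    ratio = rate_mb_s / baseline_mb_s
    return ratio, math.log10(ratio)


for name, rate in RAW_SSD_MB_S.items():
    ratio, orders = speedup(rate)
    print(f"{name}: {ratio:.0f}x the PS4 target (~{orders:.1f} orders of magnitude)")
print(f"PS5 / XBSX raw: {RAW_SSD_MB_S['PS5'] / RAW_SSD_MB_S['XBSX']:.2f}x")
```

Both drives land at just over two orders of magnitude above the old target (120x and 275x), and PS5's raw rate is about 2.3x XBSX's. That is where the "double-speed" shorthand comes from.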

But regardless of streaming, surely when loading the game, loading levels, fast travelling and all of that will simply load at the maximum possible speed on either?

Are you just assuming this, or has there been more info released somewhere?

Very interesting: DirectStorage seems to use a special game format dedicated to SSDs. Great news for XSX and PC.

anexanhume

Veteran

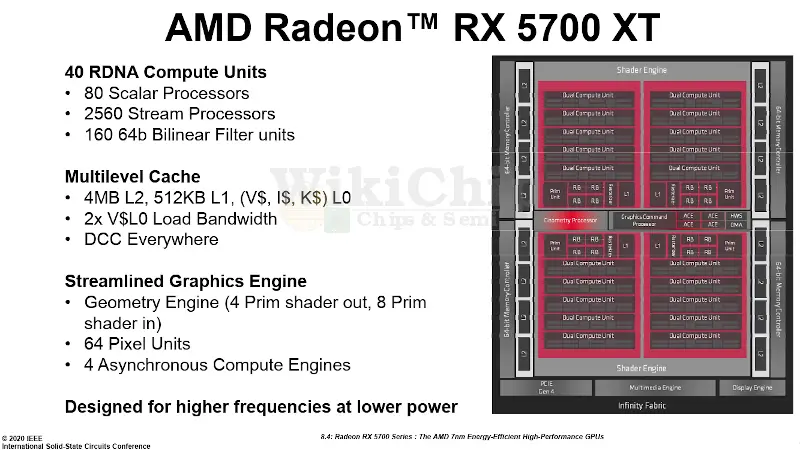

GPU shader engines have had geometry engines, or geometry processors, depending on what AMD has decided to call them. If there's a distinction to be made between the two terms, I haven't seen that communicated.

RDNA has the one block called a geometry processor, although there are elements at the shader array level that would seem to cover parts of what fell under that label in prior generations.

I haven't seen further explanation about what has changed there.

Sony's presentation has something called the Geometry Engine (all caps in the presentation, but it seems to fit Sony's liking for labeling something an Engine of some kind), where there seems to be emphasis that there's a specific collection of programmable features and associated IP that can handle geometry and primitive setup. It's discussed as something separate from the conventional setup process.

This has some implications that are similar to mesh shaders as put forward by DX12 or Nvidia, but only some high-level possibilities were mentioned in the presentation. Primitive shaders are an apparent way to provide synthesis or procedural generation, but only general effects were given, rather than the details needed to know how they slot in with regard to the stages associated with mesh shading in other formulations.

The PS5 presentation seems to describe primitive shaders' most basic formulation as being along the lines of the triangle-sieve culling formulation from the PS4 and GCN, though it seems Sony is committing itself to offering primitive shaders in a form that developers can interact with and program.
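To make "culling triangle sieve" concrete, here is a minimal sketch of the kind of per-triangle tests such a pass runs before the rasterizer: back-face/degenerate, off-screen, and small-primitive rejection. The exact tests Sony or AMD use are not public in this thread. The conventions below (pixel centers at i + 0.5, positive signed area is front-facing, vertices already projected and in front of the camera) are assumptions, and the function name is hypothetical.

```python
# Sketch of per-triangle culling in the spirit of a "triangle sieve" pass:
# reject triangles that cannot produce pixels before they reach the rasterizer.
# Assumes vertices are already projected to pixel coordinates and lie in front
# of the camera, pixel centers sit at (i + 0.5, j + 0.5), and positive signed
# area means front-facing (the winding convention is an assumption).
import math


def cull_triangle(v0, v1, v2, width, height):
    """Return True if the triangle can be discarded."""
    # Back-facing or zero-area (degenerate) triangles.
    area2 = (v1[0] - v0[0]) * (v2[1] - v0[1]) - (v1[1] - v0[1]) * (v2[0] - v0[0])
    if area2 <= 0:
        return True
    xs = (v0[0], v1[0], v2[0])
    ys = (v0[1], v1[1], v2[1])
    min_x, max_x, min_y, max_y = min(xs), max(xs), min(ys), max(ys)
    # Entirely outside the viewport.
    if max_x < 0 or max_y < 0 or min_x > width or min_y > height:
        return True

    # Small-primitive test: if both ends of the bounding box fall between the
    # same pair of adjacent pixel centers on either axis, no center is covered.
    def snap(t):
        return math.floor(t + 0.5)

    return snap(min_x) == snap(max_x) or snap(min_y) == snap(max_y)


cases = {
    "large, front-facing": ((0, 0), (100, 0), (0, 100)),
    "reversed winding": ((0, 0), (0, 100), (100, 0)),
    "sub-pixel": ((10.6, 10.6), (11.4, 10.6), (10.6, 11.4)),
    "off-screen": ((-50, -50), (-10, -50), (-50, -10)),
}
for name, tri in cases.items():
    print(name, "culled" if cull_triangle(*tri, 1920, 1080) else "kept")
```

Doing this in a programmable stage means the fixed-function setup only sees triangles that can actually produce pixels.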

Where on the Venn diagram the functionality overlaps with our understanding of prior forms of what AMD has discussed (compiler-created primitive shaders, future versions of primitive shading, surface shaders) and other formulations of the geometry front end (DX12 amplification and mesh shaders, the Xbox Series X's potentially custom elements for it, and/or unique details of Nvidia's task and mesh shaders) would need more disclosures.

I came across this, so it does indeed appear to be primitive/mesh shader related:

https://fuse.wikichip.org/news/3331/radeon-rx-5700-navi-and-the-rdna-architecture/2/

I’m not sure what kind of foothold they have. I remember reading about their tech and being very intrigued, but it also sounds like Kraken has a huge foothold.

Rich Geldreich is a founder of Binomial, the company behind the Basis Universal Supercompressed GPU Texture Codec. And apparently Microsoft is using this technology in XSX.

https://github.com/BinomialLLC/basis_universal/blob/master/README.md

Deleted member 7537

Guest

No, I didn’t mean that you were saying that. I just wanted to say that I really don’t expect games to hit CPU limits on these consoles anytime soon.

Edit: everyone should take a look at the latest Steam survey to understand why.

Doesn't the PS5 get more impacted by contention, though? I'm completely at a loss as to how that works!

I wasn’t drawing any conclusions, just stating that in terms of hardware this seems like a different balancing of virtually identical silicon...? And so I am interested in how the differences play out. I can certainly imagine that the advantage may be significant.

DavidGraham

Veteran

Assuming it doesn't downclock under load.

PS5 has a 405 MHz GPU clock advantage

Yep.

But regardless of streaming, surely when loading the game, loading levels, fast travelling and all of that will simply load at the maximum possible speed on either?

That said, can the difference be that big?

Yep.

Of course it's possible that on PC everything is just so badly optimized, but so long as you set a SATA SSD as the baseline, going to faster SSDs and NVMe seems to have a relatively small effect on loading times?

Are you just assuming this, or has there been more info released somewhere?

I found something about it where they say the I/O software systems of the last 10 years are obsolete and that they're delivering something new.

anexanhume

Veteran

This is going to get old real fast.

Assuming it doesn't downclock under load.

Like Cerny said, just a 2-3% downclock could reduce power consumption by 10%. That's ~50 MHz and shouldn't be much greater, so it's still pretty much a 10 TF console through and through, with a sizable 350 MHz clock advantage in the worst-case scenario while keeping an impressive 405 MHz advantage most of the time.

Assuming it doesn't downclock under load.
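The numbers in this post can be reproduced from the announced GPU configurations: 36 CUs at up to 2230 MHz for PS5, and 52 CUs at 1825 MHz for XSX. The FP32 rate is CUs × 64 lanes × 2 FLOPs (fused multiply-add) × clock. For power, the sketch assumes the common first-order model P ∝ f·V² with voltage roughly proportional to frequency, so P ∝ f³. That is an approximation, not Sony's actual power curve.

```python
PS5_CUS, PS5_MAX_MHZ = 36, 2230
XSX_CUS, XSX_MHZ = 52, 1825


def tflops(cus, mhz):
    """FP32 TFLOPS: 64 lanes per CU, 2 FLOPs per lane per clock (FMA)."""
    return cus * 64 * 2 * mhz / 1e6


def cubic_power_ratio(freq_ratio):
    """First-order assumption: P ~ f * V^2 with V roughly proportional to f."""
    return freq_ratio ** 3


print(f"PS5 peak: {tflops(PS5_CUS, PS5_MAX_MHZ):.2f} TF, "
      f"XSX: {tflops(XSX_CUS, XSX_MHZ):.2f} TF, "
      f"clock gap: {PS5_MAX_MHZ - XSX_MHZ} MHz")
for drop in (0.02, 0.03):
    mhz = PS5_MAX_MHZ * (1 - drop)
    print(f"-{drop:.0%}: {mhz:.0f} MHz, {tflops(PS5_CUS, mhz):.2f} TF, "
          f"+{mhz - XSX_MHZ:.0f} MHz over XSX, "
          f"~{1 - cubic_power_ratio(1 - drop):.1%} less power (cubic model)")
```

Under these assumptions a 2-3% downclock lands at roughly 2163-2185 MHz, i.e. about 10 TF and a 338-360 MHz lead over XSX. This is in line with the ~50 MHz / ~350 MHz estimate. The cubic model gives roughly 6-9% power savings for that range. Near the top of the voltage/frequency curve voltage tends to rise faster than linearly, so the real saving can be larger.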

DuckThor Evil

Legend

Of course it's possible that on PC everything is just so badly optimized, but so long as you set a SATA SSD as the baseline, going to faster SSDs and NVMe seems to have a relatively small effect on loading times?

That's because SSD speed isn't the bottleneck; the bottleneck is the low CPU utilization when decompressing the data being loaded.
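A simple bottleneck model shows why a faster drive may not help. If reading and decompressing are pipelined, the slower stage sets the pace. The decompressor speed (300 MB/s of compressed input per core), the 3000 MB level size, and the drive speeds are hypothetical round numbers chosen for illustration, not measurements.

```python
def load_time_s(compressed_mb, ssd_mb_s, decomp_mb_s_per_core, cores):
    """Seconds to read and decompress an asset when reading and decompression
    are pipelined, so the slower stage sets the pace (simple bottleneck model).
    Decompressor speed is measured in compressed MB consumed per second."""
    effective_mb_s = min(ssd_mb_s, decomp_mb_s_per_core * cores)
    return compressed_mb / effective_mb_s


LEVEL_MB = 3000        # hypothetical compressed level size
DECOMP_PER_CORE = 300  # hypothetical decompressor throughput per core
for name, ssd in (("SATA SSD", 550), ("NVMe SSD", 3500)):
    for cores in (1, 8):
        t = load_time_s(LEVEL_MB, ssd, DECOMP_PER_CORE, cores)
        print(f"{name}, {cores} core(s): {t:.2f} s")
```

With a single decompression thread both drives take the same 10 s. Only when decompression is spread across cores does the NVMe drive pull ahead, at 1.25 s against about 5.45 s for SATA.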

That's nonsense. PS5 has to divide available memory bandwidth between GPU and CPU, so effective GPU memory bandwidth will be much lower than the theoretical maximum and less deterministic. XBSX GPU will have the fast memory all to itself (unless devs are morons) and CPU will almost always use slower memory.

That's not how the XSX memory setup works. There are ten 32-bit controllers, of which six attach to 2 GB chips and four attach to 1 GB chips. Every controller runs at 14 Gbps. The 10 GB "fast pool" is just the first 1 GB on all the chips, so the 6 GB "slow pool" is not independent of the fast pool: having the CPU use the slow portion still slows down the fast portion.

That's why the total bandwidth is still 560 GB/s, not 560 + 336 GB/s.
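The 560 and 336 GB/s figures follow from channel count × 32 bits × 14 Gbps ÷ 8. The second function is a deliberately idealized contention model, assumed for illustration only. It treats fast-pool traffic as striped evenly over all ten channels and CPU slow-pool traffic as striped evenly over the six larger chips, so the most loaded channels cap the GPU's share. Real memory controllers queue and reorder requests, so treat the output as a rough idealization, not a measurement. The function names are hypothetical.

```python
CHANNELS, BUS_BITS, GBPS_PER_PIN = 10, 32, 14
SLOW_CHANNELS = 6  # only the six 2 GB chips hold the upper 1 GB ("slow pool")


def peak_gb_s(channels):
    """Peak bandwidth in GB/s for a number of 32-bit channels at 14 Gbps."""
    return channels * BUS_BITS * GBPS_PER_PIN / 8


def gpu_fast_pool_gb_s(cpu_slow_gb_s):
    """Idealized model: fast-pool traffic striped evenly over all channels,
    CPU slow-pool traffic striped evenly over the six larger chips. Even
    striping means the busiest channels cap fast-pool throughput."""
    left_on_shared = peak_gb_s(1) - cpu_slow_gb_s / SLOW_CHANNELS
    if left_on_shared < 0:
        raise ValueError("slow-pool demand exceeds its 336 GB/s peak")
    return CHANNELS * left_on_shared


print(peak_gb_s(CHANNELS), peak_gb_s(SLOW_CHANNELS))
for cpu in (0, 24, 48):
    print(f"CPU slow-pool {cpu} GB/s -> GPU fast-pool up to {gpu_fast_pool_gb_s(cpu)} GB/s")
```

In this model, CPU traffic on the slow pool costs the GPU more than its own size. The four 1 GB chips cannot pick up the slack because fast-pool data is interleaved across all ten.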

The full Twitter convo:

Yea DF: get the scoop and start talking
