The roadmap for UE5.3 is pretty interesting. For example, Epic promises significant optimizations for HW-Lumen.

Reflections will also support more than one bounce.

Yeah, that's fairly bad... not sure offhand why it would be happening. I'm guessing there's some pretty screwy Lumen scene representation happening there, perhaps with a malformed SDF from a monolithic object with too many concave portions. It looks like possibly only the screen-space rays are "saving" it when it's not in motion.

I assume you mean "100% automatic/hands-off" rather than "real-time" here. To be clear, I don't think anyone is claiming that any of these new systems, including triangle raytracing, require zero art consideration. It's more that they significantly reduce the workload, to the point that I would expect something like the above artifact could be fixed by a single person pretty easily, and probably without significant "hacks" like invisible light blockers and the like. Clearly something in the Lumen Scene there is screwy, and in my experience it's usually pretty easy to use the editor visualizations to track down what is going on. There's some discussion of various things to think about in this article, for what it's worth: https://docs.unrealengine.com/5.2/en-US/lumen-technical-details-in-unreal-engine/

For the curious, triangle RT generally has other issues, more related to biasing near surfaces and LOD. In cases where other algorithms may break down in quality, it also often breaks down in performance; neither is acceptable for games, so both generally need to be addressed in content as well. It's a good fit for cases like architecture with static planar surfaces and thin walls, but tends to completely break down in cases like foliage, where it both performs terribly and provides a relatively poor approximation of the aggregate geometry.

I don't know whether Jusant is using HW (triangle RT) or SW Lumen, but judging from the pretty strong performance I would guess SW. There's a lot of static geometry, though, so it's definitely possible it is using HW RT on simplified geometry.

Glad optimizations for consoles are still happening. Since consoles are on the lower rung of hardware these days, the savings should scale up to more powerful PC offerings.

Any consideration for hash grids like AMD is using for their "GI 1.0"? It seems like a big rewrite, but ultimately a timesaver for artists if it really does get rid of cached geo representation issues.

I'm not familiar enough with the details of Lumen to comment. I'll just say that, generally, the Lumen guys have experimented with a *lot* of techniques before settling on the current ones, and if something different comes up that is obviously better across the platforms and content that Unreal supports, I don't doubt they would be happy to implement it. Indeed, there have already been quite a lot of non-trivial changes between 5.0 and 5.2+.

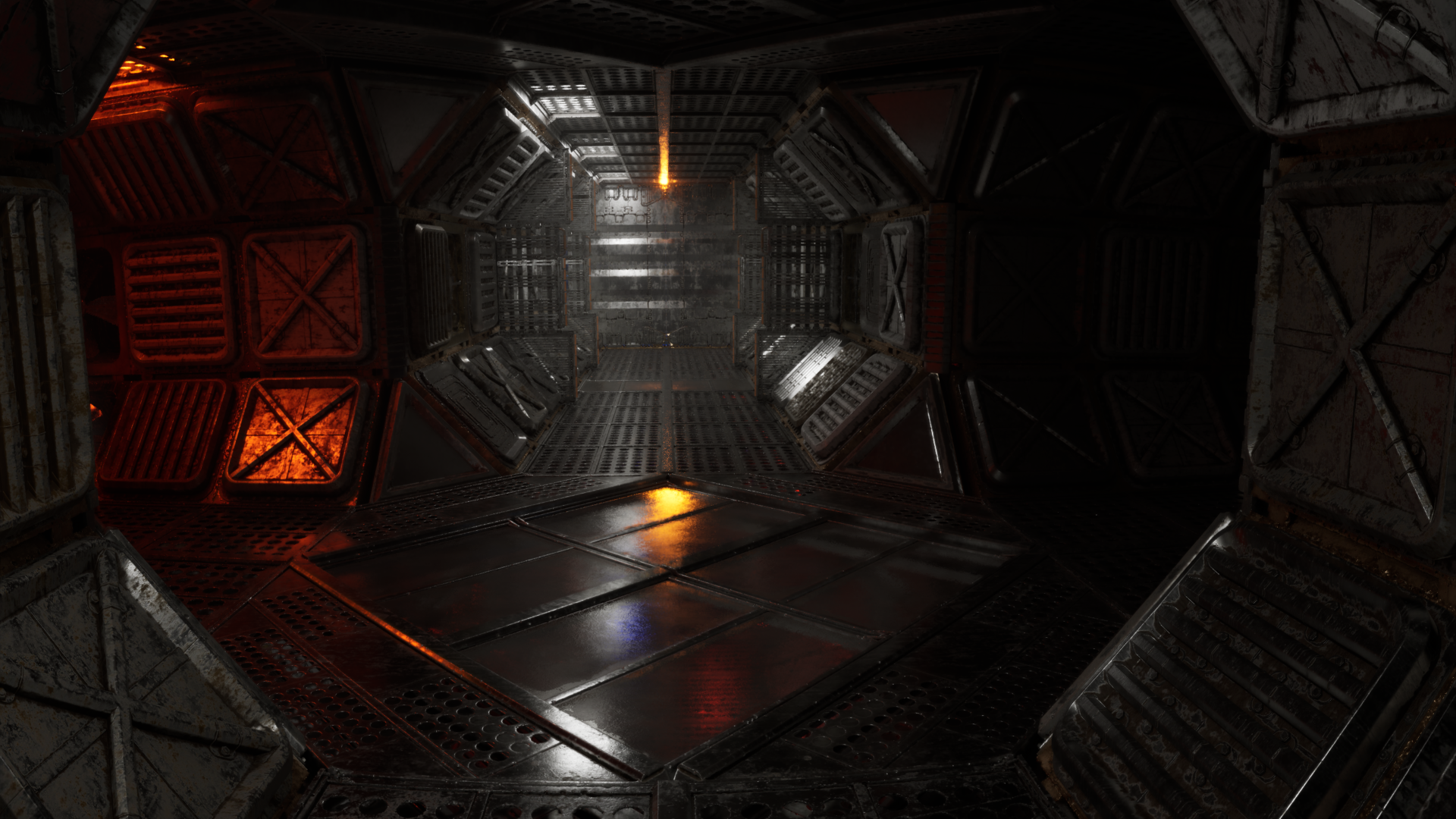

dylserx.itch.io

Corridor is a tech demo / test project of sorts for learning Unreal Engine's new material system, Substrate, using the latest features on the UE5-Main branch.

This is designed for high-end systems only and is fairly heavy due to the ultra-high-poly nature of the environment and the high-density textures, as well as lots of Lumen use.

I'll be updating this as I add more and as more Substrate features get added to the engine.

Controls are standard WASD for movement, F to toggle the flashlight, M to toggle the settings menu, and hold down the middle mouse button to zoom in the camera.

As it is built on UE5-Main, expect crashes from time to time; for instance, right now a 4090 sometimes crashes when changing the screen percentage.

Does anyone know when we will see UE5 AAA games?

For instance, Tekken 8 looked impressive in the real-time reveal trailer.

Now it looks like a cross-gen UE4 game.

What's the current state of UE5?

Shipping a game with VSM, Lumen, and Nanite at 60 fps on current gen is not going to be easy at all.

Because of the cinematic angles, the pre-fight and win sequences look the same in the beta as in the reveal trailer, which never used Nanite or Lumen.

DESORDRE : A Puzzle Game Adventure on Steam

Immerse yourself in the multidimensional universe of Desordre. Solve intricate puzzles, push the boundaries of 4-dimensional thinking. Get ready for a unique experience where reflection takes on a whole new dimension. Created by one-man development studio SHK Interactive using Unreal Engine.

store.steampowered.com

This game recently added RTXDI, along with DLSS 3 and XeSS support, and started using UE 5.2, Lumen and stuff.

Apparently they even went to the effort of adding SER.

Sounds like they are just using the NvRTX branch, which has SER and RTXDI built into it:

Based on UE 5.2.0-release engine. Initial NvRTX 5.2 release.

Engine enhancements:

- Inexact Occlusion

- Sampled Direct Lighting (RTXDI/ReSTIR)

- Sampled Lighting (RTXDI) for Lumen Surface Cache

- Sampled Lighting (RTXDI) for Lumen Reflections

- Ray Traced Volumetric Fog

- Shader Execution Reordering (SER): ray tracing, path tracing, and Lumen reflections

- BVH Visualization (deprecated in this release)

"I'm pleased to announce that DESORDRE now incorporates NVIDIA's Shader Execution Reordering (SER) for players using RTX 4000 series GPUs and daring to push their systems with the "Let My PC Burn" setting. In hardware mode, SER will provide a noticeable boost to the speed of rendering reflections, enhancing performance by a few percentage points. This improvement means a smoother and more responsive gaming experience without significantly altering the overall look of the game."

I may as well drop the RTXDI paragraph here too. Both of these quotes are from the update notes on the linked Steam page.

"I'm excited to share another key feature of this update: the integration of RTXDI (Direct Illumination) into DESORDRE. This advanced technology from Nvidia allows each light source in the game to cast ray-traced shadows, adding a profound layer of depth and visual realism.

With the implementation of RTXDI, every glimmer of light and shadow in the world of DESORDRE is now more accurately represented. This brings about richer contrasts, pronounced depth, and an overall more immersive gaming experience. The shadows beneath objects, the lighting of open spaces, and minute details will stand out more vividly.

Furthermore, thanks to the recently implemented DLSS 3.0 technology, these stunning visual improvements come without sacrificing game performance. Even with the intricate shadows and lighting effects from ray tracing, frame rates remain impressively high for a smooth gaming experience."

It would be interesting to see some actual performance and image quality/difference data. If someone with an Ada GPU has the time and motivation to look at it and report back here, I will gift you the game.

Spent the 10€ to support this guy.

Thanks for that, appreciated. So it does seem quite a bit heavier judging by the overlay numbers, but the visual difference is pretty noticeable as well. Hope we see a few other devs use that UE branch. @pjbliverpool, you might be a little disappointed that your PC seems fireproof.

The game runs very well on my 4090 and 4090 mobile. I get >50 FPS at 3440x1440 with everything on and DLAA on the desktop 4090. RTXDI looks good, too. Like in Cyberpunk, it's putting color into games: https://imgsli.com/MTk1Njcz

It's more stable than Lumen alone, too.

With Frame Generation and DLSS, this game runs at ~120+ FPS.

You need an Xbox-specific UE5 developed by The Coalition; they have coding wizards.

The game looks quite good, although not at the same level as the initial trailer. But at least in some stages where the lighting is better, the visuals are very, very close.