...if people would then more willingly accept that the PS5's I/O system is still one of the best out there, and that at the PS5's release it was the best in class, like the Epic man said...

We know it is. We just don't know what real difference that makes versus the second, third and fourth best in class.

Ratchet & Clank technical analysis *spawn

- Thread starter Inuhanyou

Did PS5 patching make much difference?

Nice but we need comparisons against PS5 40hz mode!

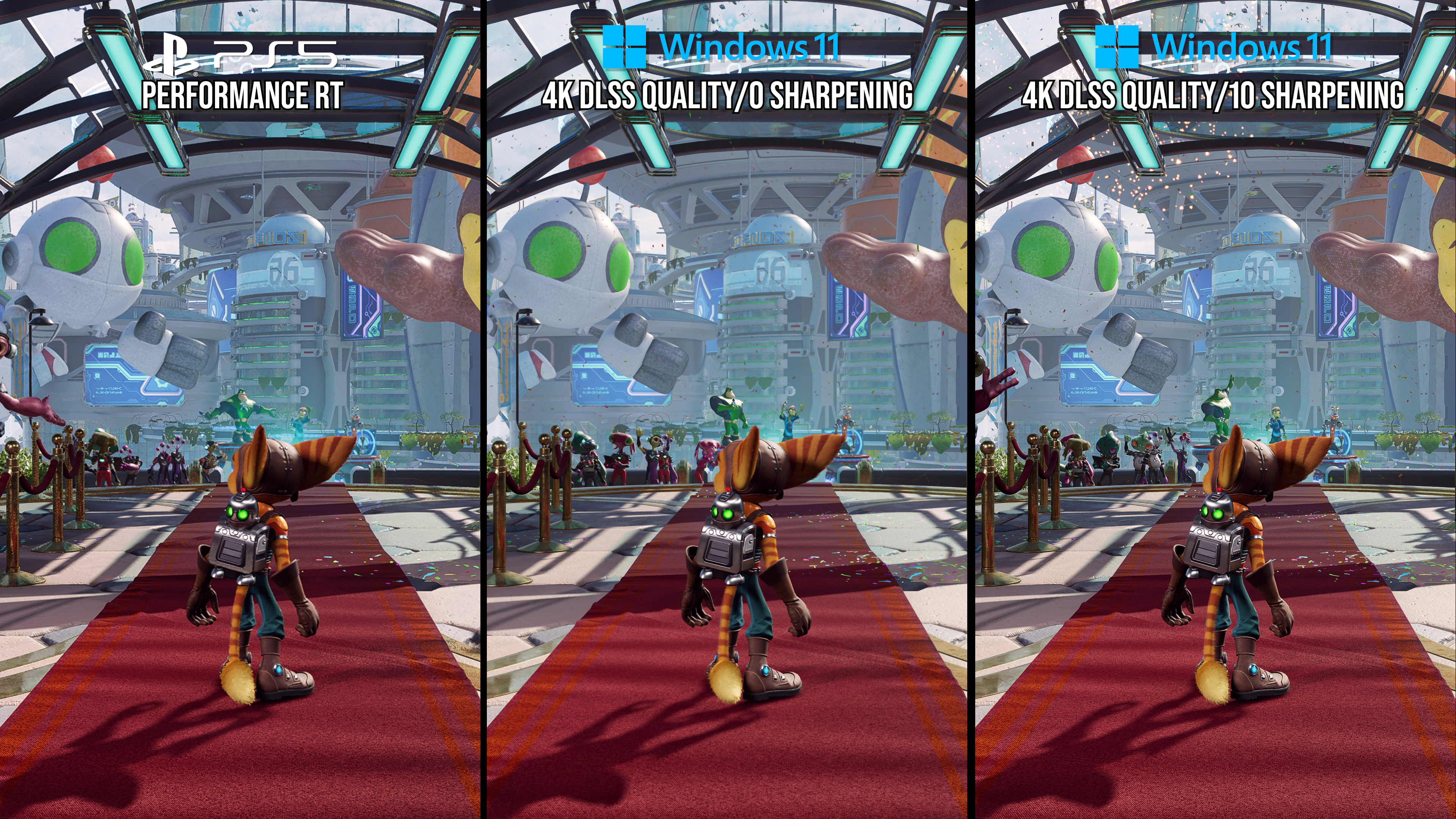

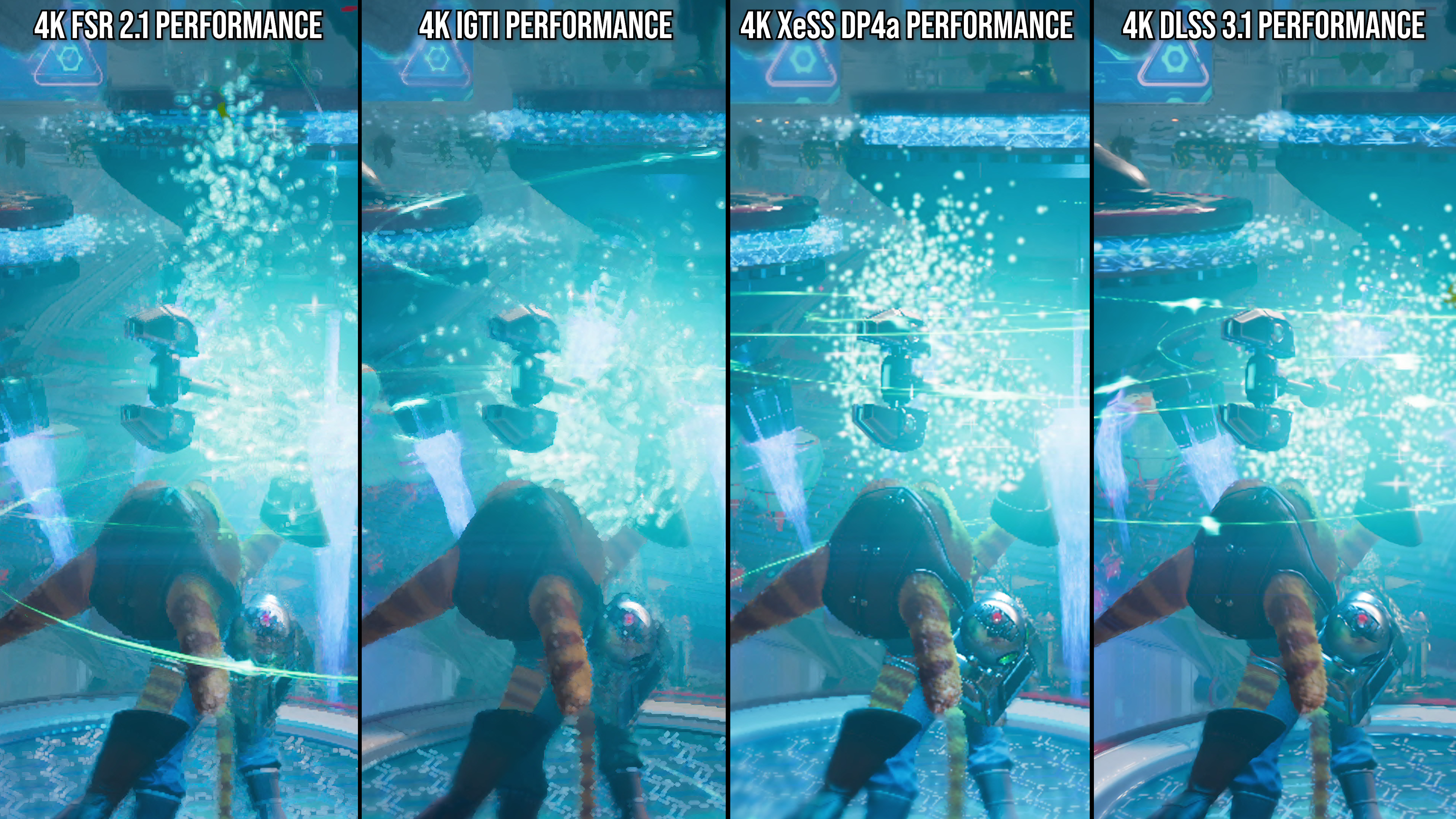

To say the least, DLSS kind of owns every other image reconstruction technique in this game. IGTI decidedly beats FSR 2.1 here too.

@pjbliverpool - my main video coming on Friday is pretty no-nonsense. Within the first few minutes I say it feels unfinished and unpolished. Sony pushed it out the door too early. Nixxes are very talented and know how to do things well, so so many things not being right at launch tells me the timetable was bad.

Overstander

Nice but we need comparisons against PS5 40hz mode!

At least in a chapter when it's about IQ and LoD settings!

I'd be interested to see how that RTX 2070/Ryzen 5 3600 system of yours fares at high settings against the PS5, especially with GPU decompression...

Nice but we need comparisons against PS5 40hz mode!

That'll be in the deep dive. This is not the deep dive. It says in the article:

We received code for this relatively late, so our deep dive review coverage is still work-in-progress, but we have put together a detailed 'let's play' video that shows three members of the Digital Foundry team playing the game on three very different pieces of hardware.

That's it, just a fun look at three specs. Not a deep dive. Not a dive. Just a little paddling about. We don't even get to see real performance on an HDD, to see how much Sony were "lying", as some believed the minimum specs mentioning an HDD proved...

Did PS5 patching make much difference?

They enhanced IQ, performance and RT through several patches.

Overstander

We know it is. We just don't know what real difference that makes versus the second, third and fourth best in class.

Why the sarcastic angle? Was (and is) the internet not full of doubters who call all PS5 I/O a marketing hoax and say that PC "will just do better"? The PS5's storage solution is the most elegant we have, and also the one that taxes development the least, since, as Cerny said, it is all abstracted: devs don't need to code for PS5 I/O specifics. That DirectStorage is here now and somewhat capable is actually good, because it means devs have leverage to achieve similar results on PC (otherwise I was afraid Sony would step on the brakes with PS5 optimisations of its games just to have an easier PC port).

But the devil is in the details, as we say here in Germany: how far ahead of its time was the PS5's storage at release, exactly? What is actually needed to match a game that really tries to use 100% of the PS5's intrinsics? Will RTX 20xx cards really "just be fine" when, on top of rendering the game, they also have to do the GPU decompression?

All these questions still need answering, especially since the PS5 was and is viewed as a mere cheap console, but it is a sophisticated piece of hardware and it has to be respected.

Dictator: Why the 40 fps mode and not the 30 fps one? Also, only Rich is able to record 120 Hz modes (40 fps) at 4K, with a pre-production, professional-grade capture card. I do not own one, like most humans on earth.

It could be the 30 fps mode as well; I think it is similar in IQ and settings to the 40 fps mode. That's not to say you should always use this mode. Just have a chapter with the 30 fps mode when it's about IQ rather than fps.

Why the 40 fps mode and not the 30 fps one? Also, only Rich is able to record 120 Hz modes (40 fps) at 4K, with a pre-production, professional-grade capture card. I do not own one, like most humans on earth.

Because there is not much difference in image fidelity between those modes, IMO.
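For context on why the 40 fps mode is tied to 120 Hz output (and so to a 120 Hz-capable capture card at 4K): for even frame pacing, each rendered frame has to be held for a whole number of display refreshes. The sketch below only does that arithmetic; the helper name `frame_pacing` is made up for illustration.

```python
from fractions import Fraction


def frame_pacing(fps: int, refresh_hz: int) -> tuple[float, Fraction]:
    """Return (frame time in ms, display refreshes per rendered frame)."""
    frame_time_ms = 1000.0 / fps
    refreshes_per_frame = Fraction(refresh_hz, fps)
    return frame_time_ms, refreshes_per_frame


for fps, hz in [(30, 60), (40, 60), (40, 120), (60, 60)]:
    ms, ratio = frame_pacing(fps, hz)
    # A whole number of refreshes per frame means every frame is shown equally long.
    pacing = "even" if ratio.denominator == 1 else "uneven (judder)"
    print(f"{fps} fps @ {hz} Hz: {ms:.1f} ms/frame, {ratio} refreshes/frame, {pacing}")
```

40 fps only paces evenly at 120 Hz (three refreshes per frame), and its 25 ms frame time sits exactly halfway, in frame-time terms, between 30 fps (33.3 ms) and 60 fps (16.7 ms).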

Flappy Pannus

Don't get me wrong, but Spider-Man's texture loading issues with ray tracing enabled never got fixed. I reported them several times but they didn't seem to care (not that there was public exposure like this back then, but I'm glad there is now).

Yeah, they never fixed that issue in the opening cutscene with the cop cars and low-res badges. What's odd is that even when running from an HDD, if you pause the game at that exact spot, the high-res textures immediately pop in and stay there for the remainder of the cutscene. It really seems like a bug that was never fixed, as it never made sense; hopefully this will not be the case in R&C.

You can see from the brief look at Alex's early "PS5 equivalent" PC, a 2070 Super + 3600, that it struggles quite a bit to maintain 60fps during the portal transition scenes. It seems CPU-limited in this scene, but the next portal has it near 95%+ GPU usage. At 1080p with DLSS, and considering that at the medium preset it's likely not using RT while the PS5 is running at a dynamic 4K (and it seems pretty close; I play in that mode rather than RT, which I find too blurry), this may be the biggest performance gap from a 2070 Super to the PS5 that we've seen since TLOU P1. Maybe GPU decompression is playing a part here? I guess we'll see from the CPU vs. GPU comparisons that will likely be in the full review video later.
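The reasoning above (a frame that misses its 60 fps budget while the GPU sits well below full utilization points to something other than the GPU) can be written as a simple rule of thumb. This is a hedged sketch: the 95% threshold and the `FrameSample`/`classify` names are assumptions, and utilization counters from monitoring overlays are only a rough signal, not a profiler.

```python
from dataclasses import dataclass


@dataclass
class FrameSample:
    frame_time_ms: float    # measured time to produce this frame
    gpu_utilization: float  # 0.0-1.0, as reported by a monitoring overlay


def classify(sample: FrameSample, target_fps: float = 60.0,
             gpu_busy_threshold: float = 0.95) -> str:
    """Rough bottleneck guess for one frame (heuristic only)."""
    budget_ms = 1000.0 / target_fps
    if sample.frame_time_ms <= budget_ms:
        return "on target"
    if sample.gpu_utilization >= gpu_busy_threshold:
        return "GPU-bound"
    # The GPU has idle time but the frame is still late: CPU work,
    # decompression, streaming stalls or synchronisation are the usual suspects.
    return "likely not GPU-bound"


print(classify(FrameSample(frame_time_ms=22.0, gpu_utilization=0.70)))  # likely not GPU-bound
print(classify(FrameSample(frame_time_ms=20.0, gpu_utilization=0.97)))  # GPU-bound
```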

The exception might be if DLSS dynamic resolution works like it does in Spider-Man, where it just keeps rendering at a higher internal res until your GPU is constantly in the 95% range. For example, overlooking the city in Spider-Man on my 3060 at 4K DLSS Quality, GPU usage can sit at 75%. Turn on Dynamic 60 and GPU usage jumps to 98%, so the game goes beyond DLSS Quality, either to DLAA at points or by just continuing to raise the internal res; I'm not sure which. *Edit: There are extended sequences at 70% GPU usage in that brief 2070 Super look, so maybe scratch that theory.
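A dynamic-resolution controller of the kind described here, one that keeps raising the internal resolution while the GPU has headroom, can be sketched as a simple step controller. This is an illustration under stated assumptions, not how Spider-Man or R&C actually implement it: real engines typically drive this from GPU frame time rather than a utilization percentage, and the thresholds, step size and the `next_render_scale` name are made up. A scale of 1.0 means native resolution (DLAA-like); DLSS Quality at 4K output corresponds to roughly 0.667 per axis, i.e. 2560x1440.

```python
def next_render_scale(scale: float, gpu_utilization: float,
                      target: float = 0.95, hysteresis: float = 0.05,
                      step: float = 0.05, min_scale: float = 0.5,
                      max_scale: float = 1.0) -> float:
    """One update of a hypothetical utilization-driven resolution controller."""
    if gpu_utilization < target - hysteresis:
        scale += step  # GPU has headroom: render more pixels
    elif gpu_utilization > target:
        scale -= step  # GPU saturated: back off to protect the frame rate
    # Inside the hysteresis band the scale is left unchanged.
    return round(min(max(scale, min_scale), max_scale), 3)


scale = 0.667  # start at DLSS Quality's per-axis scale
for util in (0.75, 0.80, 0.86, 0.92, 0.98):
    scale = next_render_scale(scale, util)
    print(f"GPU {util:.0%} -> render scale {scale}")
```

The hysteresis band keeps the controller from oscillating every frame; without it, a GPU hovering around the target would flip the resolution up and down constantly.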

Regardless, some of the posts here before launch were veering close to "PC victory laps" and the "hype" of the PS5's SSD architecture, which I thought a bit silly at the time. Nixxes are great, but people have to remember that it took Spider-Man many months before some egregious bugs were fixed, and not all of them were. These ports of games tuned explicitly for custom hardware are very difficult, and there are definitely optimizations to be had on a closed system. It's not all smoke and mirrors, and Nixxes are not infallible. DirectStorage may be a good stop-gap solution until GPUs get dedicated hardware blocks for this stuff, perhaps, but it's way too early to really guess at the prime bottlenecks at this point.

Also, it seems another loss for FSR2 in image quality when compared not just to DLSS but even to IGTI (and based on Spider-Man, I don't think the PC's implementation of IGTI is the exact same quality as the PS5 version, to boot). Cripes, I think UE4's temporal upscaling might be able to produce more consistent results. It really sucks that PC may be getting one of the worst reconstruction technologies forced upon it, with no other options, in some games thanks to AMD.

Flappy Pannus

To say the least, DLSS kind of owns every other image reconstruction technique in this game. IGTI decidedly beats FSR 2.1 here too.

Yeah, that's why I don't play in Performance RT mode on my PS5. The sharpening is pretty egregious and leads to a lot of fringing; it just doesn't look good and doesn't mask the clearly lower internal res.

I wonder how much the GPU decompression affects performance.

Why the sarcastic angle?

No sarcasm.

Was and is the internet not full of doubters that call all PS5 I/O a marketing hoax

This is a technical forum for technical discussions. Raise particular points with particular posters in those discussions; don't rant against 'The Internet'.

and that PC "will just do better" - PS5 storage solution is the most elegant we have

It was, when Cerny was talking about it three years ago. Tech moves on, and some day PC will overtake PS5, although that hasn't happened yet, and it probably never will in terms of 'elegance', as it has to wrestle with inherently messy legacy architectures; you never get nicer than a fresh clean-slate design. Everyone here accepts that: PS5 was ahead and is likely still ahead, so you have no argument. What matters for PC gaming is what level of experience players can get from their solutions, and whether the PC's legacy architecture can manoeuvre its way to similar performance through alternative technologies such as GPU decompression.

If it's that important to you to have PS5 win a "best IO" award, take it with pride, but please stop interjecting that opinion into discussions wanting to understand the impact of the IO solutions and how different approaches compare.

The weird thing there is that the GPU clearly isn't at capacity, so those dips could be caused by another bottleneck. I do like the console-equivalent PC spec comparisons, but I fear the 3600X just doesn't cut it. In this case I'd like to see GPU performance completely isolated on the 12900K system for comparison purposes.

The performance drop shown here during the transition is very likely a case of GPU decompression not being turned on. Nixxes said it's only enabled at High settings and up, and Alex is running Medium here. Since decompression is then handled by the CPU, which is very much not up to the task, GPU utilization is low. Since I've not seen any GPU decompression setting, I am wondering... which setting exactly enables it? Or is it only enabled when using the default High preset and up? (That would be disappointing, since I'd want to tweak shadows to Medium, for example, without having to sacrifice GPU decompression.) My best guess is that GPU decompression is enabled with level of detail on High and up.

I'm sure @Dictator's video on Friday will answer these questions.

Flappy Pannus

Oh yeah, forgot about that. Yeah, I guess we'll see. I hope GPU decompression is a separate toggle and not tied to presets.

I'm guessing it would be textures set to High? They use GPU decompression for the larger (higher-resolution) mip levels (maybe 0 and 1) and the CPU for the smaller mip levels. It's not used for other things like models. Not sure why they use the CPU for small textures; maybe there's some balance with latency.
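The guess in this post, combined with the earlier speculation that the GPU path is tied to a High setting, can be written as a routing rule. Everything here is hypothetical: the function, the setting names and the mip cutoff are illustrative, not Nixxes' code. The only real component mentioned is GDeflate, the GPU decompression format DirectStorage 1.1 introduced.

```python
from enum import Enum


class DecompressPath(Enum):
    GPU = "GPU (e.g. GDeflate via DirectStorage)"
    CPU = "CPU"


# Hypothetical: texture settings at which the GPU path would be enabled.
GPU_PATH_SETTINGS = {"high", "very high"}


def decompression_path(asset_kind: str, mip_level: int,
                       texture_setting: str,
                       gpu_mip_cutoff: int = 1) -> DecompressPath:
    """Pick a decompression path for one asset (illustrative guesswork).

    mip_level 0 is the full-resolution mip; higher numbers are smaller.
    """
    if asset_kind != "texture":
        return DecompressPath.CPU  # e.g. model data stays on the CPU
    if texture_setting.lower() not in GPU_PATH_SETTINGS:
        return DecompressPath.CPU  # e.g. Medium: CPU-only fallback
    # Large mips carry most of the bytes, so they gain most from the GPU;
    # small mips might be cheaper to decode on the CPU than to schedule.
    if mip_level <= gpu_mip_cutoff:
        return DecompressPath.GPU
    return DecompressPath.CPU


for mip in range(4):
    print(mip, decompression_path("texture", mip, "High").name)
```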

Flappy Pannus

Yes, it's a shame DF didn't look at the load speed difference between different CPUs as well as different drives.

They did, briefly.

I'm guessing it would be textures set to High? They use GPU decompression for the larger (higher-resolution) mip levels (maybe 0 and 1) and the CPU for the smaller mip levels. It's not used for other things like models. Not sure why they use the CPU for small textures; maybe there's some balance with latency.

Yes, this is exactly why I'd like to see GPU performance isolated on a high-end system when testing this title (not exclusively, but as a pure measure of GPU performance). DirectStorage GPU decompression doesn't decompress everything on the GPU, by design. And it seems that in R&C even some GPU data is still decompressed on the CPU, so CPU load will still be higher than it is on the consoles. There is also the additional BVH and API overhead to consider, all of which hits CPU performance.
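The argument here is additive: whatever decompression work stays on the CPU stacks on top of BVH and API overhead, and the total has to fit the frame budget. A minimal sketch with made-up example numbers; none of these are measurements from R&C, and the category names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class CpuFrameCost:
    """Per-frame CPU time in milliseconds (illustrative categories)."""
    game_logic: float
    cpu_decompression: float  # decompression work left on the CPU
    bvh_management: float     # ray-tracing acceleration-structure upkeep
    api_overhead: float       # driver / API submission cost

    def total(self) -> float:
        return (self.game_logic + self.cpu_decompression
                + self.bvh_management + self.api_overhead)


def fits_budget(cost: CpuFrameCost, target_fps: float = 60.0) -> bool:
    """True if the summed CPU work fits inside one frame at target_fps."""
    return cost.total() <= 1000.0 / target_fps


# Hypothetical numbers, only to show how the terms add up.
quiet = CpuFrameCost(8.0, 1.0, 2.0, 2.0)      # 13 ms: fits the 16.7 ms budget
streaming = CpuFrameCost(8.0, 5.0, 2.0, 2.0)  # 17 ms: misses 60 fps
print(fits_budget(quiet), fits_budget(streaming))  # True False
```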
