My post may have been poorly worded there. I was wishing for a CPU performance test in addition to the drive speed test, as I see the two tests as interrelated. The test we got shows the issue isn't with NVMe speed; the test we're (currently) missing should tell us if it's really a CPU bottleneck. Obviously memory speed and GPU speed (for decompression) would also need to be varied to provide a full picture.


Ratchet & Clank technical analysis *spawn

- Thread starter Inuhanyou

- Start date

davis.anthony

Veteran

It seems they've actually internally capped the loading time for the portal on PC.

That's the only logical reason why there would be no loading difference when increasing drive speed.


It seems they've actually internally capped the loading time for the portal on PC.

That's the only logical reason why there would be no loading difference when increasing drive speed.

That's not inconceivable but I'd say it's more likely a CPU limit. A simple underclock would tell us a lot one way or the other.
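For what it's worth, the result of such an underclock test could be read along these lines. This is only a sketch: the function name, the 15% tolerance and the example timings are made up for illustration and are not measurements from the game. The idea is that if load time grows roughly in proportion to the clock drop, the CPU is the likely limit; if it doesn't move at all, something else (an internal cap, or another bottleneck) is.

```python
def classify_scaling(clock_full_ghz, time_full_s,
                     clock_under_ghz, time_under_s, tolerance=0.15):
    """Classify a load-time result from a stock vs. underclocked CPU run.

    All inputs are hypothetical measurements supplied by the tester.
    """
    clock_ratio = clock_full_ghz / clock_under_ghz  # > 1 when underclocked
    if clock_ratio == 1.0:
        raise ValueError("the two runs must use different CPU clocks")
    time_ratio = time_under_s / time_full_s         # > 1 when loading got slower

    # scaling == 1.0: load time grew in proportion to the clock drop.
    # scaling == 0.0: load time did not change at all.
    scaling = (time_ratio - 1.0) / (clock_ratio - 1.0)

    if scaling >= 1.0 - tolerance:
        return "cpu-bound"
    if scaling <= tolerance:
        return "not cpu-bound (cap or other bottleneck)"
    return "partially cpu-bound"


# Illustrative numbers only: halving the clock doubles the load time.
print(classify_scaling(5.0, 2.0, 2.5, 4.0))  # cpu-bound
# Illustrative numbers only: halving the clock changes nothing.
print(classify_scaling(5.0, 2.0, 2.5, 2.0))  # not cpu-bound (cap or other bottleneck)
```

A single pair of runs is noisy, so in practice one would repeat each run a few times before drawing a conclusion.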

Flappy Pannus

Veteran

Indeed looks like it's a dual GPU/CPU decompression going on. Big textures, GPU. Smaller textures, CPU:

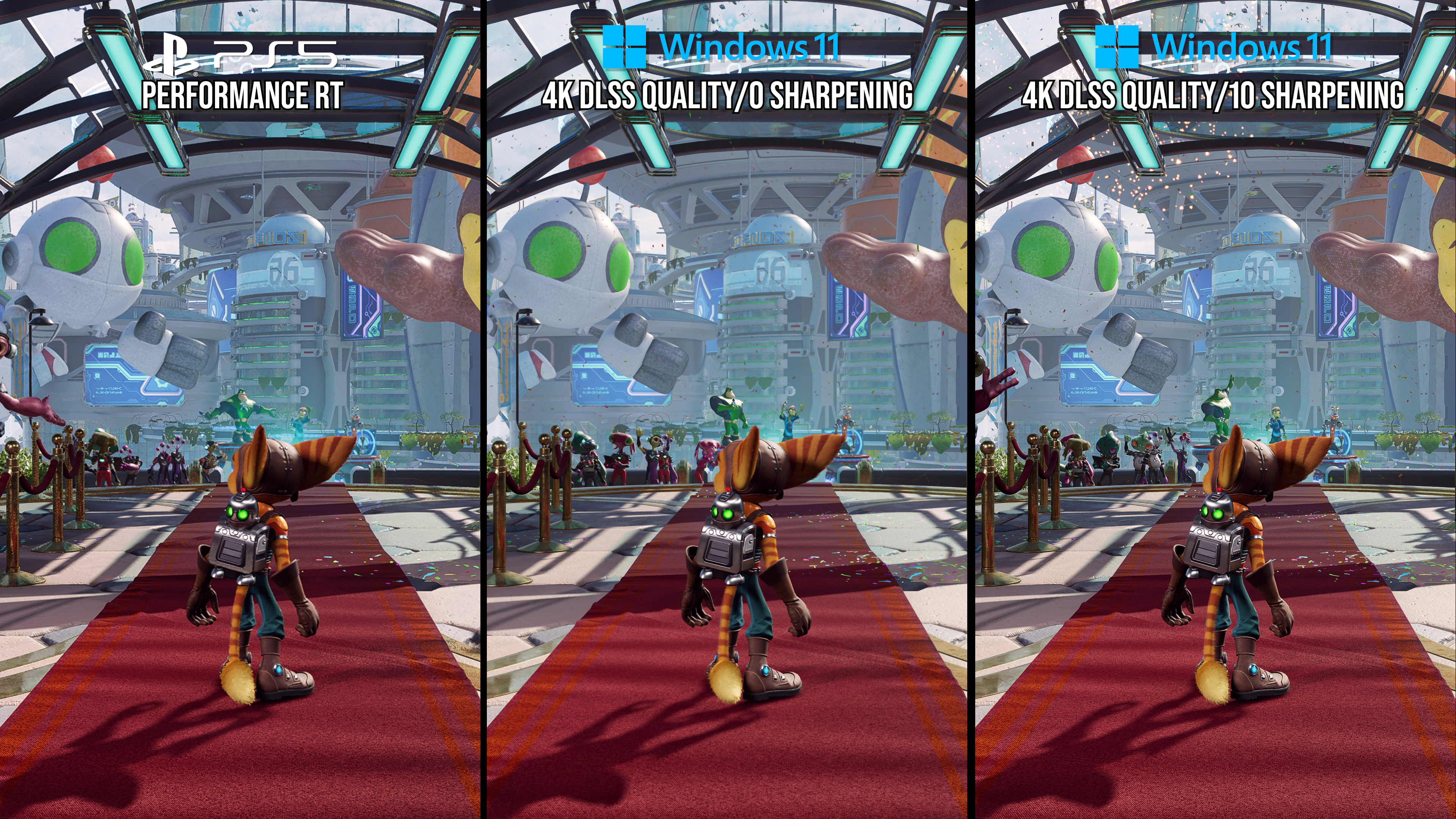

Because there is not much difference in image fidelity between those modes IMO.

Then 60fps should be fine in theory. At the end of the day DF is reviewing the PC port here; they aren't benchmarking PS5 hardware.

The settings were chosen because 60fps is what PC players will accept at the lower end; very few PC players are comfortable playing at frame rates below 60.

The comparison of 60fps on PC and 60fps on PS5 is apt then, since a PC player who can't afford a PC that runs the game at 60fps may be tempted to see what is on offer for less on PS5 at 60fps.

Then one can compare which graphical features differ between PS5 and PC, but once again, those who keep trying to draw a line between PC and PS5 based on a port will be misguided. Multiplatform games are fairer game if one must do this, but ports are a really bad choice for obvious reasons.

Why not Kraken and Oodle? I am a bit surprised; did they rework this for the port to save money?

Because you think most PCs will run this game at a stable 60fps with 4K DLSS max settings like in this comparison? And since when do framerates come into the picture when comparing the best possible quality on console vs PC? On that account, anyway, the best mode is still the 40fps mode (or say the 30fps mode then) on PS5, as it can potentially run at 45-50fps if you have the right TV (and the difference in image fidelity between the 30Hz and 40Hz modes is very small).

davis.anthony

Veteran

Because you think most PCs will run this game at stable 60fps with 4K DLSS max settings like in this comparison?

1080p is still the most common resolution in the Steam hardware survey.

DF will typically test the absolute highest, the absolute lowest, and the most common mainstream setup; 60fps is often the target framerate.

DF isn't a GPU benchmarking site that's just tossing out raw stats, either. They are the very definition of video game tech journalism: discussing and contrasting image fidelity is part of educating and informing their readers about what features do visually and what they cost in performance. While most GPU benchmarking sites are satisfied with using the ultra, high and low presets, DF actually spends some time figuring out what the optimized settings are.


Flappy Pannus

Veteran

It's worth remembering that the 60fps RT mode runs at around 80fps when uncapped, and the 30fps mode runs at 40-50fps on a 120Hz display.

It's really all over the place in that video. There is some speculation that dynamic res is disabled in VRR mode, dunno, but 'around' is doing a lot of heavy lifting here: it can be 90fps, then drop to ~60 when turning the camera.

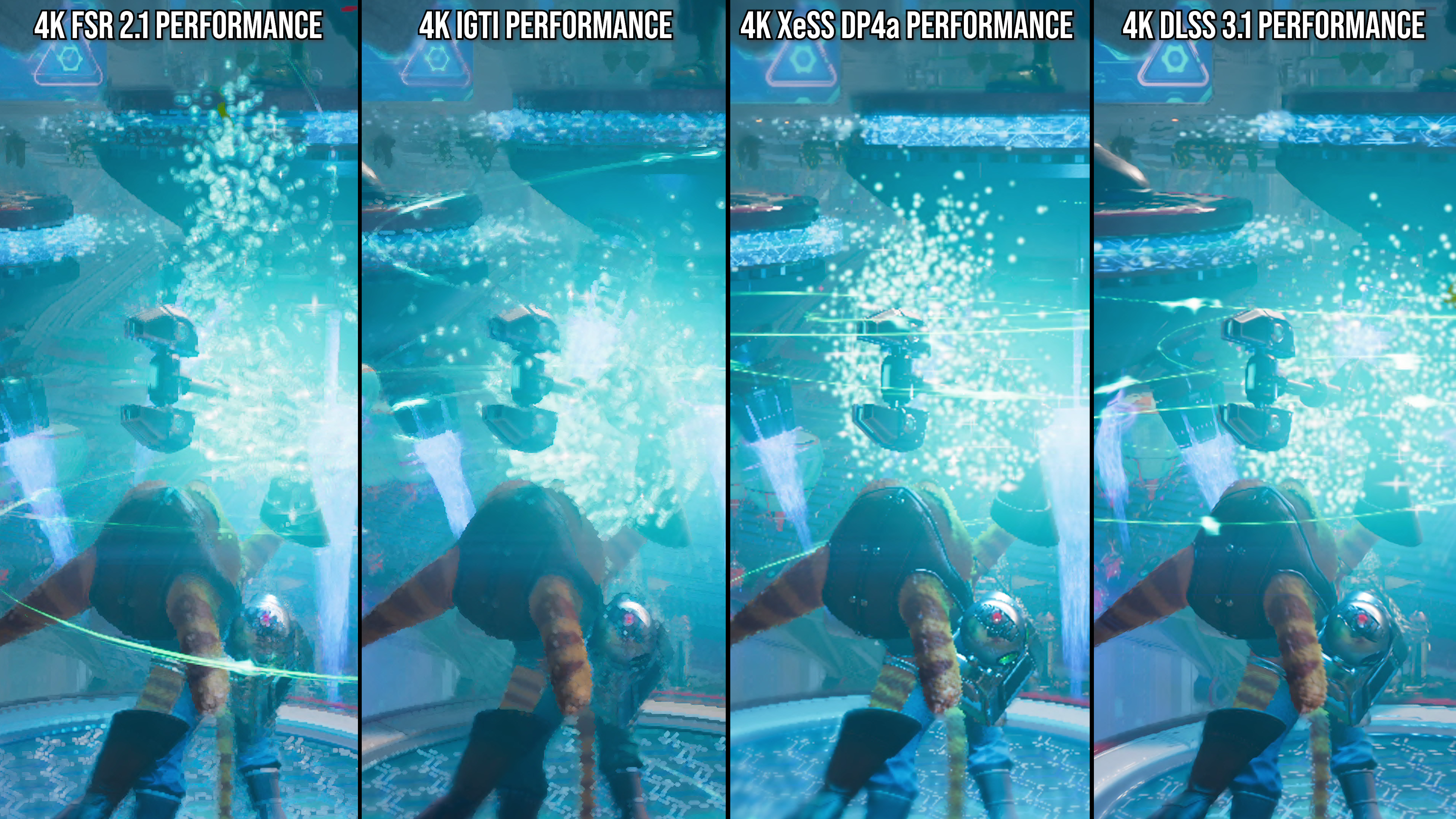

I expected FSR 2.1 to look better in this game, but it's the worst of all. Quality-wise, from highest to lowest, unless I am missing something, it is:

DLSS>XeSS>IGTI>FSR2

To say the least, DLSS kind of owns every image technique in this game. IGTI does decidedly beat FSR 2.1 here too.

@pjbliverpool - my main video coming on Friday is pretty no-nonsense. I say it feels unfinished and unpolished within the first few mins there. Sony pushing it out the door too early. Nixxes are very talented and know how to do good things, so this many things not being right at launch tells me the timetable was bad.

DavidGraham

Veteran

The RTX 2070 Super is decimated due to its 8GB VRAM buffer. In fact, all 8GB GPUs are decimated with max RT settings, as the game is VRAM heavy even at 1080p: it needs around 10GB with RT.

The 2080Ti is truly the minimum GPU to run this game with RT, and it's faster than PS5 even when it's running at much higher-quality raster and RT settings.

Ratchet & Clank: Rift Apart GPU/CPU test | Action / FPS / TPS | TEST GPU

We tested Ratchet & Clank: Rift Apart at the highest graphics settings on graphics cards from the GEFORCE series

gamegpu.com

arandomguy

Veteran

Circling back to the GPU decompression discussion a bit, I'm still wondering if it's a question of memory budget leading to that decision. I know it was mentioned that DirectStorage itself only recommends a 128MB buffer, but how does that translate to GPU decompression? Is there, for example, a fixed buffer requirement that would make decompressing too small a file end up needing more VRAM?

This was kind of my question and caveat with DirectStorage and GPU decompression (and also other things such as Sampler Feedback): they might not alleviate VRAM pressure in the sense many are assuming. They could make memory management more efficient at the higher end but do nothing for (if not raise) the floor. Which also means they might not be a panacea for lower-VRAM GPUs even if they're very beneficial for just the next step up. They might, for example, enable much more memory efficiency even for just 12GB GPUs yet do nothing (if not be even worse) for, say, 8GB GPUs.


I wouldn't rely on that. They do a couple of tests and then just scale the other GPUs on that website.

arandomguy

Veteran

It seems they've actually internally capped the loading time for the portal on PC.

That's the only logical reason why there would be no loading difference when increasing drive speed.

What drives specifically were those? A lot of the discussion seems to be focused on the theoretical sustained sequential large-block read speeds of SSDs, but that's really only one performance characteristic. I'm not sure we can assume that, even with DirectStorage, sequential large-block read speeds and/or high-QD performance will actually show huge differentiation.

It would be interesting to actually profile the data access requests for these DirectStorage games to see if they, for instance, have even really moved on from being mostly 4KB random reads.
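As a rough idea of what such a profile could look like, here is a minimal Python sketch. It assumes you already have a trace of read requests as `(offset_bytes, length_bytes)` pairs in issue order; that trace format, the function name `profile_reads` and the bucket boundaries are my own assumptions for illustration, not anything DirectStorage or the game exposes. It counts how many requests are small versus large and what fraction continue exactly where the previous request ended.

```python
from collections import Counter


def profile_reads(requests):
    """Summarise a read trace given as (offset_bytes, length_bytes) pairs.

    The trace format is hypothetical; adapt it to whatever your capture
    tool actually exports.
    """
    size_buckets = Counter()
    sequential = 0
    total = 0
    prev_end = None

    for offset, length in requests:
        total += 1
        # Bucket by request size (boundaries chosen arbitrarily).
        if length <= 4096:
            size_buckets["<=4KiB"] += 1
        elif length <= 65536:
            size_buckets["4KiB-64KiB"] += 1
        else:
            size_buckets[">64KiB"] += 1
        # A request is "sequential" if it starts where the last one ended.
        if prev_end is not None and offset == prev_end:
            sequential += 1
        prev_end = offset + length

    return {
        "total": total,
        "sizes": dict(size_buckets),
        "sequential_fraction": sequential / total if total else 0.0,
    }


# Made-up trace: two back-to-back 4 KiB reads, one large read, one stray 4 KiB read.
trace = [(0, 4096), (4096, 4096), (1_000_000, 131072), (50_000, 4096)]
print(profile_reads(trace))
```

A trace dominated by the `<=4KiB` bucket with a low sequential fraction would suggest the access pattern hasn't changed much, whatever the drive's headline sequential speed is.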

Here are a few tests I ran with disk counters on my various drives. The total amount of disk reads is very similar to my earlier results for PS5, so the PC version should be broadly comparable from that standpoint. The one exception is the HDD, which only reads about 1/3rd of the amount of data that the SSDs do at 7GB for the whole sequence. All of these tests were run at the highest settings (without RT), so it's possible the game uses a different loading system when it detects an HDD.

The other thing I noticed is that all three SSDs will routinely hit 100% active time yet they never come anywhere close to their bandwidth limits. Though I will note that I had to disable BypassIO to get these readings from the NVMe drives which might be a confound.
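One way to see how a drive can sit at 100% active time and still be far from its bandwidth limit: active time, as I understand it, only says the drive had at least one request in flight, while throughput depends on how many requests are in flight and how big they are. A back-of-the-envelope Python sketch using Little's law (throughput = queue depth × block size / latency) follows. The queue depths, block sizes and latencies are illustrative guesses, not readings from these tests.

```python
def throughput_bytes_per_s(queue_depth, block_bytes, latency_s):
    """Little's law estimate: bytes in flight divided by per-request latency."""
    return queue_depth * block_bytes / latency_s


# Illustrative case 1: one 4 KiB request at a time, 80 microseconds each.
# The drive is never idle, yet it moves only about 51 MB/s.
small = throughput_bytes_per_s(queue_depth=1, block_bytes=4096, latency_s=80e-6)

# Illustrative case 2: 32 outstanding 128 KiB requests, 1 millisecond each.
# Same "always busy" drive, but roughly 4.2 GB/s.
large = throughput_bytes_per_s(queue_depth=32, block_bytes=131072, latency_s=1e-3)

print(f"small/shallow: {small / 1e6:.1f} MB/s")
print(f"large/deep:    {large / 1e9:.2f} GB/s")
```

Both cases would show as fully busy in a simple active-time counter, which is why that counter alone can't tell you whether the drive is the bottleneck.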


Flappy Pannus

Veteran

Here's the rift sequence with CPU/GPU counters and an unlocked framerate. You can see that during the transitions the 4090's GPU usage drops, so it's not a case of the GPU being unable to keep up with the decompression demands; there is some other bottleneck.
