If I understood it correctly, visibility samples are full res and texturing/shading is done at the target rate. With variable rate shading, is base color/texture still sampled at the highest resolution?

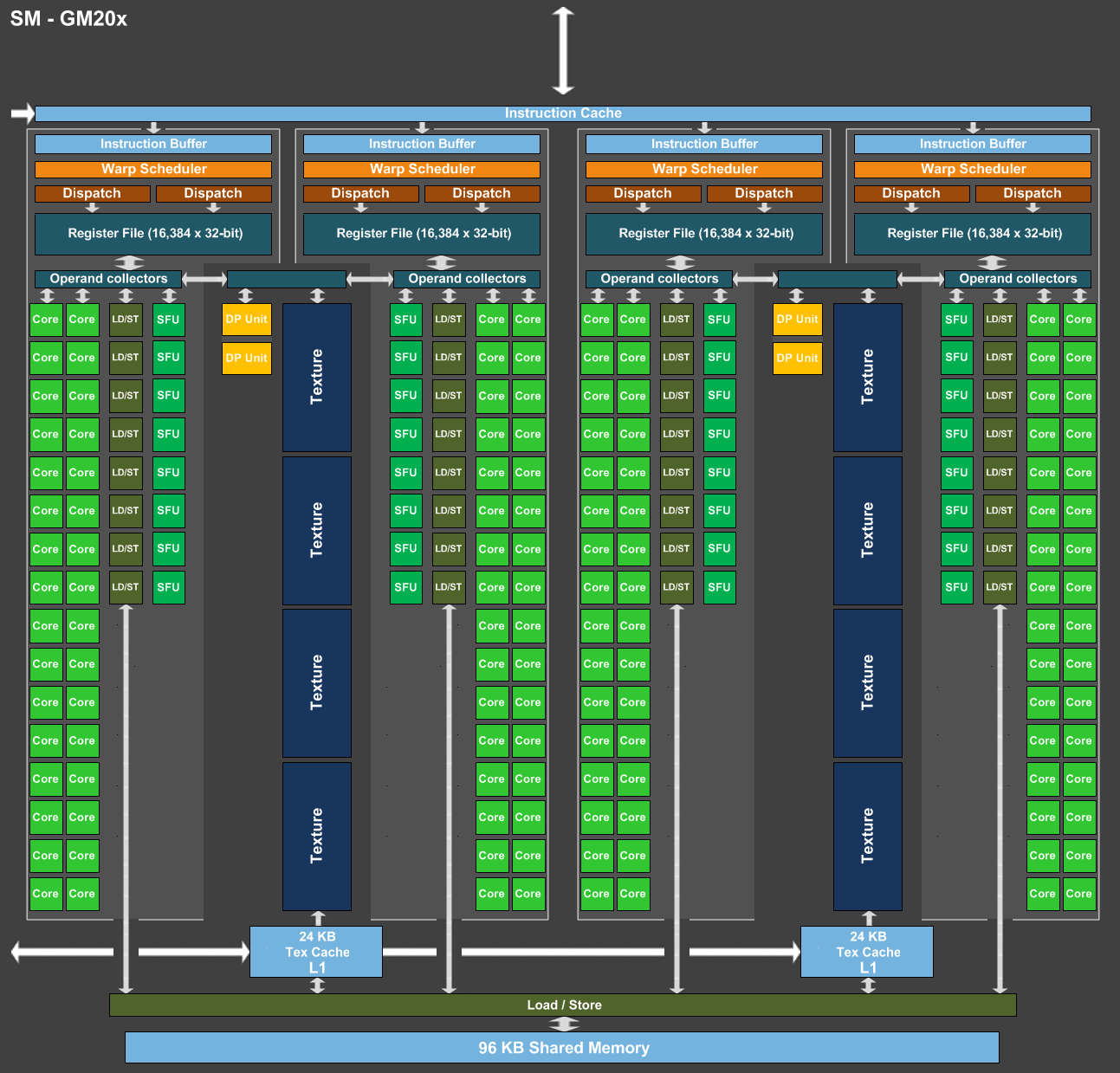

SIMD width is not really indicative of warp size. G80 was 8-wide and took 4 clocks to execute an instruction. A smaller warp would also increase scheduler cost significantly and break years of optimizations tailored for 32-wide warps.
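To put numbers on the G80 example, here is a minimal sketch of the warp-size versus SIMD-width relationship. The function name `issue_clocks` is made up for illustration, and the model ignores pipeline latency and dual issue; it only counts how many passes a warp needs through the lanes.

```python
def issue_clocks(warp_size: int, simd_width: int) -> int:
    """Passes (clocks) needed to push one warp instruction through a SIMD unit.

    Simplified model (an assumption, not vendor documentation): one pass per
    clock, no pipelining effects, no dual issue.
    """
    if warp_size <= 0 or simd_width <= 0:
        raise ValueError("warp_size and simd_width must be positive")
    # Ceiling division: a partially filled last pass still costs a full clock.
    return -(-warp_size // simd_width)


if __name__ == "__main__":
    # 32-thread warp on 8 lanes, as in the G80 example above.
    print(issue_clocks(32, 8))
    # Same warp on a 16-wide and a 32-wide unit, for comparison.
    print(issue_clocks(32, 16))
    print(issue_clocks(32, 32))
```

The warp size stays at 32 in all three cases; only the number of clocks per instruction changes.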

I understand that, but thread divergence is determined by the number of threads in a warp, isn't it, and not by the number of SIMD lanes? If it is the former (as I understood it), I know the scheduling rate would need to increase, but thread divergence should improve a lot, as only 16 threads instead of 32 can diverge with a warp size of 16.
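A rough way to see the effect of warp size on divergence is a toy probability model. This is only a sketch: it assumes every thread takes a branch independently with the same probability `p`, which real workloads do not do (neighbouring threads tend to be coherent), and `divergence_probability` is a made-up name.

```python
def divergence_probability(warp_size: int, p: float) -> float:
    """Chance that a warp diverges on one branch.

    Toy assumption: each thread takes the branch independently with
    probability p. The warp stays converged only if all threads agree.
    """
    if warp_size <= 0:
        raise ValueError("warp_size must be positive")
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    all_taken = p ** warp_size
    none_taken = (1.0 - p) ** warp_size
    return 1.0 - all_taken - none_taken


if __name__ == "__main__":
    for p in (0.01, 0.05, 0.5):
        d16 = divergence_probability(16, p)
        d32 = divergence_probability(32, p)
        print(f"p={p}: warp16={d16:.3f} warp32={d32:.3f}")
```

Under this model a 16-thread warp diverges less often than a 32-thread warp for rare branches, but the gap closes quickly once the branch is taken often.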

FP-group size has been 16 since Fermi, but going forward from Maxwell, Nvidia did not make that readily apparent in their diagrams anymore. You really had to ask about it. So, I don't think they will do away with the economization of control vs. execution width. I'm actually glad they did not increase it.

DavidGraham

Veteran

So does RTX not need DXR to work? I am asking because the ray tracing in the Star Wars demo works on both Pascal and Turing without DXR.

I'm assuming via Nvidia Optix in their drivers? Likely the demo is hand-tuned to Optix, which means it probably won't work on Vega once the next W10 update hits (and AMD adds DXR to drivers).

Nope, it can also run as an acceleration layer for Vulkan RT and Optix.

Unfortunately the screenshots of the upcoming 3dmark RT benchmark look quite unimpressive. Hopefully someone does a proper DXR or Vulkan benchmark.

More on Variable Rate Shading.

https://devblogs.nvidia.com/turing-variable-rate-shading-vrworks/

From coarse shading of 4x4 to 8xSSAA.

Not bad.
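For a feel of what that range means in shader work, here is a back-of-the-envelope sketch. The function names are made up, and the model assumes the whole frame is shaded at a single rate with full coverage; it ignores triangle edges, partial tiles beyond rounding up, and per-region rate maps.

```python
import math


def coarse_invocations(width: int, height: int, block_w: int, block_h: int) -> int:
    """Shader invocations when one shade covers a block_w x block_h pixel block."""
    # Round up so partially filled blocks at the frame edge still get shaded.
    return math.ceil(width / block_w) * math.ceil(height / block_h)


def supersampled_invocations(width: int, height: int, samples: int) -> int:
    """Shader invocations when every pixel is shaded `samples` times."""
    return width * height * samples


if __name__ == "__main__":
    w, h = 1920, 1080
    per_pixel = coarse_invocations(w, h, 1, 1)
    coarse = coarse_invocations(w, h, 4, 4)
    ssaa8 = supersampled_invocations(w, h, 8)
    print("1x1 :", per_pixel)
    print("4x4 :", coarse)
    print("8x  :", ssaa8)
    print("spread between extremes:", ssaa8 // coarse)
```

Visibility is still resolved per sample in both cases; only the number of shading invocations changes in this sketch.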

Pretty cool. Still waiting on that killer app though for VR to really take off.

Isn't the "look" irrelevant as long as it gives numbers to compare across hardware?

The Star Wars Reflections demo is built using DXR. RTX accelerates DXR, but DXR can run on any DX12 GPU using the fallback layer.

We already have a benchmark where looks are irrelevant, it’s called math.

3DMark actually used to be a benchmark in the literal sense by producing visuals that games of the time couldn’t match. It set expectations for what’s possible in the future when using the latest and greatest tech. It hasn’t been that for a long time. Now it just spits out a useless number and doesn’t look good doing it.

I miss the good old days of Nature and Airship.

Aaaah, the sweet memories of running Nature on an ATI 9700 Pro! Bliss!

It will certainly be interesting to see how it finally happens, and whether it needs an additional change to hardware.

We might see something nice in a couple of days though. (Hoping for a Turing/Oculus presentation.)

Indeed.

It would be a lot more interesting if it gave proper timings on what happens within the GPU during the test.

For ray tracing, that means the pure tracing speed, and the speed with different kinds of shaders attached, to see how the GPU handles tracing and shading. I'm quite sure the software path will take a hit in comparison.

The good old demos were gorgeous.

Dunno whether that would qualify as a killer app, but I thought that Superhot VR, in spite of its odd and silly name, was really, really fun:

DavidGraham

Veteran

Despite the 8700K being 30% faster than 2700X @1080p, it can't power the 2080Ti. The progress of CPUs has really fallen off a cliff in the past couple of years. This needs to change for both Intel and AMD.
