> These people are hilarious. What exactly does fair competition in AI look like?

I think it means Minister Bruno gets to take your shit if he feels like it.


DegustatoR

Legend

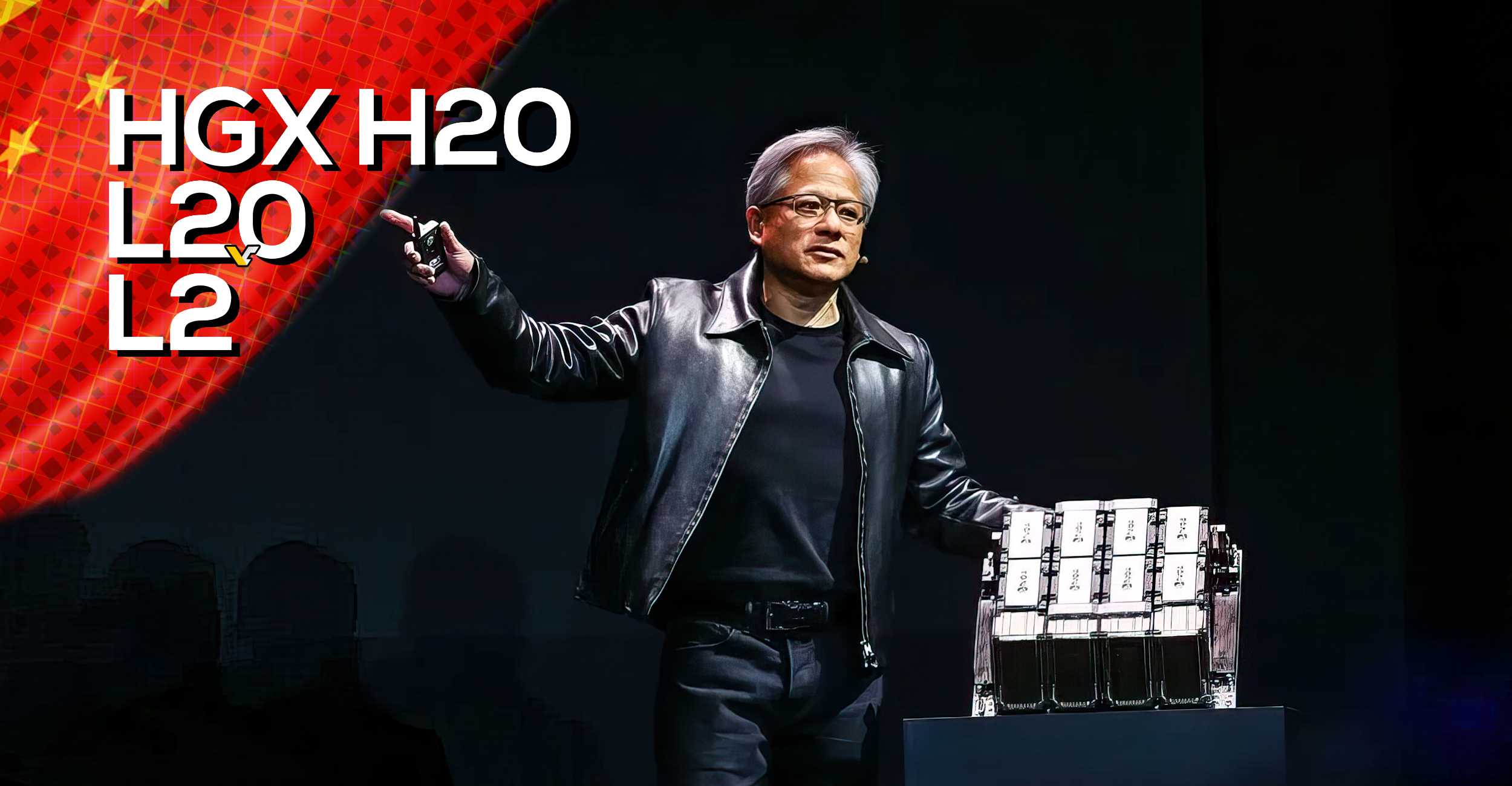

NVIDIA to launch HGX H20, L20 and L2 GPUs for China - VideoCardz.com

NVIDIA already has new data-center GPUs for China. NVIDIA responded quickly to new restrictions on exporting powerful GPUs from the U.S. Recently, NVIDIA ceased the shipment of high-performance GPUs to countries like China. The new U.S. restrictions aim to curb the utilization of powerful...


Deleted member 2197

Guest

Nvidia GPU Used To Decipher Ancient Greco-Roman Scroll

AI helped a student win a $40,000 prize.

An undergraduate student used an Nvidia GeForce GTX 1070 and AI to decipher a word in one of the Herculaneum scrolls to win a $40,000 prize (via Nvidia). Herculaneum was covered in ash by the eruption of Mount Vesuvius, and the over 1,800 Herculaneum scrolls are one of the site's most famous artifacts. The scrolls have been notoriously hard to decipher, but machine learning might be the key.

...

Luke Farritor, an undergrad at the University of Nebraska-Lincoln and Space-X intern, used his old GTX 1070 to train an AI model to detect "crackle patterns," which indicate where an ink character used to be. Eventually, his GTX 1070-trained AI was able to identify the Greek word πορφυρας (or porphyras), which is either the adjective for purple or the noun for purple dye or purple clothes. Deciphering this single word earned Farritor a $40,000 prize.

www.tomshardware.com

That's a pretty awesome application for AI. It's kinda crazy that we're living through the AI revolution now, which I honestly believe will make all previous revolutions, with the possible exception of the agricultural one, pale in comparison.

For people of the generation I think many here belong to, i.e. those who can remember a time before mobile phones, the Internet and Wi-Fi, we are living through by far the most remarkable transition to have happened within a single human lifetime in all of human history.

How privileged are we!

There are likely tons of undiscovered applications for AI but it’s far too early to say it’ll be more transformative than the internet. The internet enabled entirely new markets, professions and hobbies and had a tremendous impact on global society and culture. So far AI is just making existing things easier and/or faster.

nVidia will power Europe's first exaflop supercomputer with 24,000 GH200 superchips: https://nvidianews.nvidia.com/news/...mputers-to-propel-ai-for-scientific-discovery

AI will make labor-intensive tasks way cheaper. Imagine AI creating animations for game characters with solid results that are indistinguishable from real mocap. Speaking of which, I’d love to see cloud AI being used to enhance game worlds in non-latency-sensitive applications, such as:

- Very realistic tree/leaf movement in a game like Forza or GT, where the cloud AI calculates one intensive physics instance and shares it with the clients' hardware, so everyone playing that particular track gets very nice-looking physics, while those who do not have access to the cloud simply use a downgraded, locally calculated version.

- Remaking higher-polygon assets for old games to breathe new life into them. No matter how much you upres a game, upgrade the lighting or boost fps, the low-poly objects will always stick out.

https://videocardz.com/press-release/nvidia-introduces-hopper-h200-gpu-with-141gb-of-hbm3e-memory

They also "teased" Blackwell: more than double the performance of the Hopper H200 GPU, an even higher focus on AI, and a launch in 2024.

https://videocardz.com/newz/nvidia-...erformance-in-gpt-3-175b-large-language-model

https://wccftech.com/nvidia-blackwell-b100-gpus-2x-faster-hopper-h200-2024-launch/

“To create intelligence with generative AI and HPC applications, vast amounts of data must be efficiently processed at high speed using large, fast GPU memory. With NVIDIA H200, the industry’s leading end-to-end AI supercomputing platform just got faster to solve some of the world’s most important challenges.”

— said Ian Buck, vice president of hyperscale and HPC at NVIDIA.


Deleted member 2197

Guest

DegustatoR

Legend

GB202 gets a 384-bit G7 bus after all. If true, 512-bit buses are set to remain a rare thing in gaming GPUs.

512-bit never made any sense when they were already gonna get a massive bandwidth lift from GDDR7. I genuinely don't know how kopite didn't give that one a little more skepticism from the get-go, especially if he didn't actually hear '512-bit' specifically in his information.

DegustatoR

Legend

The lift won't be nearly as massive for Nvidia, since they have already been using G6X for two generations now.

Micron's roadmap shows early G7 launching at 32 Gbps, which is a fairly minor jump over late G6X's 24 Gbps.

This also explains why Blackwell seemingly adds even more L2 compared to Lovelace.

The more interesting part of G7 is the addition of "1.5 step" capacities, which will allow new memory sizes on the same bus widths: 12 GB on 128-bit, 18 GB on 192-bit and 24 GB on 256-bit.
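The capacity figures above follow from simple arithmetic. A minimal sketch, assuming each GDDR chip has a 32-bit-wide interface, one chip per 32 bits of bus (no clamshell mode), and that a "1.5 step" chip means 24 Gbit (3 GB) instead of 16 Gbit (2 GB); the function name `capacity_gb` is hypothetical:

```python
# Sketch: total VRAM from bus width and per-chip density.
# Assumptions (not from the post): each chip has a 32-bit interface,
# one chip per 32 bits of bus (no clamshell), "1.5 step" = 24 Gbit chips.

CHIP_INTERFACE_BITS = 32


def capacity_gb(bus_width_bits: int, chip_density_gbit: int) -> float:
    """Return total capacity in GB for a given bus width and chip density."""
    chips = bus_width_bits // CHIP_INTERFACE_BITS  # number of chips on the bus
    return chips * chip_density_gbit / 8  # 8 bits per byte


for bus in (128, 192, 256):
    print(f"{bus}-bit: 16 Gbit chips -> {capacity_gb(bus, 16):g} GB, "
          f"24 Gbit chips -> {capacity_gb(bus, 24):g} GB")
```

With 24 Gbit chips this gives 12, 18 and 24 GB, versus 8, 12 and 16 GB with 16 Gbit chips on the same buses.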

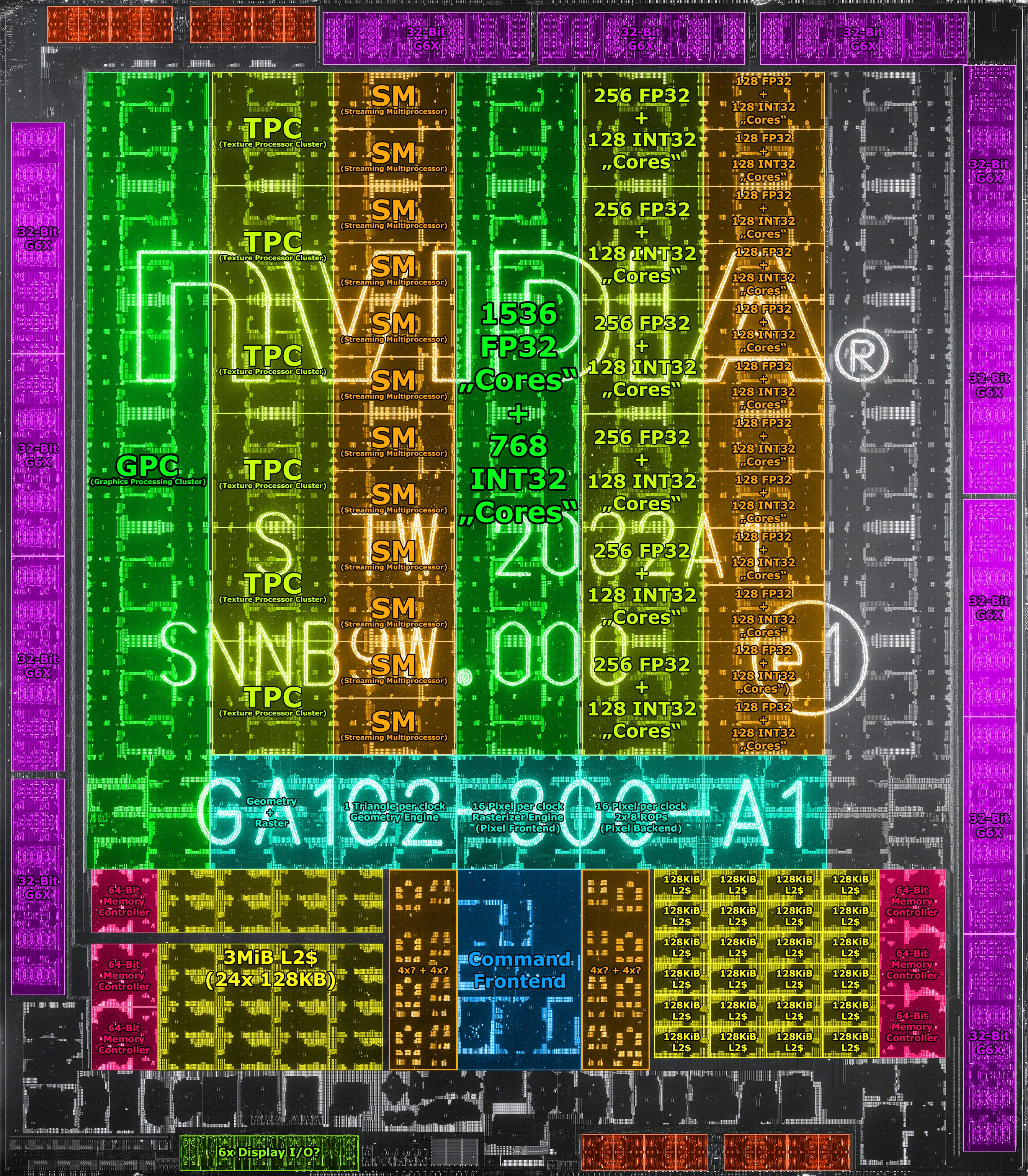

Also, the old 128MB L2 rumor is impossible with 384-bit. AD102 has 16MB of L2 per 64-bit memory controller; with 128MB, that would be 21.333MB per 64-bit MC, which obviously doesn't work.

AD102 has 48 slices of 2MB for a total of 96MB of L2. For Blackwell, I guess they will just increase it to 2.5MB per slice for a total of 120MB of L2.
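The L2 argument can be checked numerically. A small sketch, assuming (as the post does) a 384-bit bus split into six 64-bit memory controllers and a Blackwell part that keeps AD102's 48-slice arrangement; the helper `split_l2` is hypothetical:

```python
# Sketch of the L2 arithmetic: a 384-bit bus has six 64-bit memory
# controllers, and AD102's L2 is 48 slices (i.e. 8 slices per MC).
# Assumption: the Blackwell part keeps the same 48-slice layout.

BUS_WIDTH_BITS = 384
MC_WIDTH_BITS = 64
SLICES = 48

mcs = BUS_WIDTH_BITS // MC_WIDTH_BITS  # 6 memory controllers
slices_per_mc = SLICES // mcs          # 8 slices per controller


def split_l2(total_mb: float) -> tuple[float, float]:
    """Return (MB per 64-bit MC, MB per slice) for a given total L2 size."""
    return total_mb / mcs, total_mb / SLICES


for total in (96, 120, 128):
    per_mc, per_slice = split_l2(total)
    print(f"{total} MB L2 -> {per_mc:.3f} MB per MC, {per_slice:.3f} MB per slice")
```

96 MB and 120 MB split evenly (16/2 and 20/2.5 MB), while 128 MB leaves a non-integer 21.333 MB per controller, which is the mismatch the post points out.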

If we're just talking flagship parts, a 4090 uses 21 Gbps at stock, not 24. So even at the base 32 Gbps of GDDR7, that's over a 50% increase in bandwidth. Not that a mere 33% would be 'fairly minor' in either case.
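The percentages in the reply come from the usual peak-bandwidth formula, bus width times per-pin data rate divided by 8. A sketch using the data rates quoted in the thread on a 384-bit bus (the RTX 4090's width and the rumored GB202 width); `bandwidth_gbs` is a hypothetical helper name:

```python
# Sketch: peak memory bandwidth = bus width (bits) x per-pin rate (Gbps) / 8.
# Data rates are the ones quoted in the thread; 384-bit is the 4090's bus
# and the rumored GB202 bus.


def bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s."""
    return bus_width_bits * gbps_per_pin / 8


stock_4090 = bandwidth_gbs(384, 21)  # RTX 4090 stock G6X
late_g6x = bandwidth_gbs(384, 24)    # fastest G6X mentioned
early_g7 = bandwidth_gbs(384, 32)    # early G7 per Micron's roadmap

print(f"4090 (21 Gbps): {stock_4090:.0f} GB/s")
print(f"24 Gbps G6X:    {late_g6x:.0f} GB/s")
print(f"32 Gbps G7:     {early_g7:.0f} GB/s")
print(f"uplift vs 21 Gbps: {early_g7 / stock_4090 - 1:.1%}")
print(f"uplift vs 24 Gbps: {early_g7 / late_g6x - 1:.1%}")
```

On the same bus the uplift is just the ratio of data rates: 32/21 is about 1.52 and 32/24 is about 1.33, matching the "over 50%" and "33%" figures.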

Does the L2 have to be so linked with the memory controllers in terms of numbers? On AD103, it doesn't seem like they're laid out like they would be.

I think so. In this die shot of GA102, each 64-bit MC is linked to 8 slices of L2 cache. I think it's the same for Ada, but each slice was increased from 128KB to 2MB.

> Ah, I was thinking you were meaning the main GDDR6 memory controllers.

I think the word you're looking for is "PHY".

> This is a quite long talk by Bill Dally about the future of deep learning hardware, including some interesting tidbits on log numbers and sparse matrices.

It's a good talk, but it's always interesting to see how disconnected research is from day-to-day hardware at practically every semiconductor company! In the Q&A, Bill Dally says that NVIDIA GPUs process matrix multiplies in 4x4 chunks and go back to the register file after every chunk, which he says is "in the noise" and "maybe 10% energy". But that's definitely NOT the case on Hopper, where matrix multiplication still isn't systolic but works completely differently (an asynchronous instruction that can read directly from shared memory, etc.), with a minimum shape of 64x8x16 (so they've effectively solved that issue): https://docs.nvidia.com/cuda/parall...tml#asynchronous-warpgroup-level-matrix-shape
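A rough way to see why a larger minimum MMA shape matters: an m×n×k tile performs m·n·k multiply-accumulates while touching m·k + k·n input elements plus m·n accumulators. The sketch below compares the "4x4 chunks" (assumed here to mean 4x4x4) with the 64x8x16 shape quoted above. It is a deliberately simplified model that ignores reuse across tiles, operand caching and everything else real hardware does; `macs_per_element` is a hypothetical name.

```python
# Simplified model: multiply-accumulates performed per operand/accumulator
# element touched, for one m x n x k matrix-multiply tile.
# Higher means more useful work per register-file (or memory) access.


def macs_per_element(m: int, n: int, k: int) -> float:
    macs = m * n * k
    elements = m * k + k * n + m * n  # A tile + B tile + C/D accumulators
    return macs / elements


small = macs_per_element(4, 4, 4)     # assumed shape for the "4x4 chunks"
hopper = macs_per_element(64, 8, 16)  # minimum shape quoted in the post

print(f"4x4x4:   {small:.2f} MACs per element")
print(f"64x8x16: {hopper:.2f} MACs per element")
```

Under this model the 64x8x16 tile does roughly 3.7x more work per element touched (about 4.92 vs 1.33), which is the intuition behind the overhead being amortized away.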

I don't really get his point about glitching with unstructured sparsity, though. That only happens because they are trying to detect 0s and shuffle values around *in the same pipeline stage as the computation*. It doesn't feel that complicated to do it a cycle early and just feed a signal specifying the multiplexer behaviour as an input to your "computation" pipeline stage, which should reduce glitching to nearly the same level as structured sparsity... Maybe I'm missing something (e.g. is it actually the multiplexer glitching he's worried about at very small data sizes like 4/8-bit? Or is the cost of the extra pipeline stage much higher than I'd expect?), but it feels like a fairly standard hardware engineering problem to me. On the other hand, the fact that NVIDIA can do this kind of analysis and realise they need to focus on this kind of thing way before implementation does put them in a league of their own, as I'm skeptical most competitors do that kind of analysis that early...
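The suggestion above can be modelled behaviourally: one stage finds the non-zero weights and produces the multiplexer select signals, and the next stage only consumes those precomputed selects to gather and multiply. A minimal Python sketch, assuming a fixed number of multiplier lanes per cycle; the function names and the `lanes` parameter are hypothetical, and a software model says nothing about glitch power itself, which only shows up in real circuits.

```python
# Behavioural model of splitting unstructured-sparsity handling into two
# pipeline stages: stage 1 computes mux selects, stage 2 just uses them.


def select_stage(weights: list[float], lanes: int) -> list[list[int]]:
    """Stage 1: indices of non-zero weights (the mux select signals),
    grouped into batches of at most `lanes` per cycle."""
    nonzero = [i for i, w in enumerate(weights) if w != 0.0]
    return [nonzero[i:i + lanes] for i in range(0, len(nonzero), lanes)]


def compute_stage(acts: list[float], weights: list[float],
                  selects: list[list[int]]) -> float:
    """Stage 2: selects arrive as ready-made inputs; each 'cycle' gathers
    the selected operands and multiply-accumulates them."""
    acc = 0.0
    for cycle in selects:
        acc += sum(acts[i] * weights[i] for i in cycle)
    return acc


weights = [0.0, 1.5, 0.0, 0.0, -2.0, 0.0, 3.0, 0.0]
acts = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
selects = select_stage(weights, lanes=2)
print(selects)                                # [[1, 4], [6]]
print(compute_stage(acts, weights, selects))  # 14.0
```

The result matches a dense dot product, but the compute stage never inspects values for zeros; all of that decision-making happened a stage earlier.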

If I had to make a guess for Blackwell, I'd say they will include vector scaling and 4-bit log numbers (the patent diagram he showed with the binning is clever), but the talk really didn't sound very optimistic about unstructured sparsity or activation sparsity. From a competitive standpoint, I think NVIDIA is going to face much tougher competition for inference than for training, so focusing on this kind of inference-only improvement makes a lot of sense for them.
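To make "vector scaling and 4-bit log numbers" more concrete, here is a toy quantizer: each vector gets one shared scale factor (its largest magnitude), and each element is stored as a sign bit plus a 3-bit code for a power-of-two fraction of that scale, with one code reserved for zero. This encoding is a hypothetical illustration chosen for simplicity, not NVIDIA's format and not the binning scheme from the patent shown in the talk.

```python
import math

# Hypothetical 4-bit log format: 1 sign bit + 3-bit code.
# Codes 0..6 mean scale * 2**-code; code 7 is reserved for zero.
ZERO_CODE = 7


def quantize(vec: list[float]) -> tuple[float, list[tuple[int, int]]]:
    """Return (per-vector scale, [(sign_bit, code), ...])."""
    scale = max(abs(x) for x in vec) or 1.0  # one shared scale per vector
    out = []
    for x in vec:
        if x == 0.0:
            out.append((0, ZERO_CODE))
            continue
        # nearest power-of-two fraction of the scale, rounded in log domain
        code = round(-math.log2(abs(x) / scale))
        if code >= ZERO_CODE:  # too small to represent: flush to zero
            out.append((0, ZERO_CODE))
        else:
            out.append((int(x < 0), code))
    return scale, out


def dequantize(scale: float, codes: list[tuple[int, int]]) -> list[float]:
    """Map (sign, code) pairs back to real values using the shared scale."""
    return [0.0 if c == ZERO_CODE else (-1.0 if s else 1.0) * scale * 2.0 ** -c
            for s, c in codes]


print(dequantize(*quantize([1.0, -0.5, 0.25, 0.0])))  # [1.0, -0.5, 0.25, 0.0]
```

The per-vector scale is what keeps such a tiny format usable: the 3-bit code only has to cover the dynamic range within one vector, not the whole tensor.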
