- Status

- Not open for further replies.

Hopefully the leaks about a 4070 Super 16GB are true

DavidGraham

Veteran

Deleted member 2197

Guest

Exclusive: Nvidia to make Arm-based PC chips in major new challenge to Intel | Reuters

October 23, 2023

Nvidia has quietly begun designing central processing units (CPUs) that would run Microsoft’s Windows operating system and use technology from Arm Holdings, two people familiar with the matter told Reuters.

The AI chip giant's new pursuit is part of Microsoft's effort to help chip companies build Arm-based processors for Windows PCs. Microsoft's plans take aim at Apple, which has nearly doubled its market share in the three years since releasing its own Arm-based chips in-house for its Mac computers, according to preliminary third-quarter data from research firm IDC.

Advanced Micro Devices also plans to make chips for PCs with Arm technology, according to two people familiar with the matter.

...

Nvidia and AMD could sell PC chips as soon as 2025, one of the people familiar with the matter said. Nvidia and AMD would join Qualcomm, which has been making Arm-based chips for laptops since 2016.

Microsoft granted Qualcomm an exclusivity arrangement to develop Windows-compatible chips until 2024, according to two sources familiar with the matter.

Microsoft has encouraged others to enter the market once that exclusivity deal expires, the two sources told Reuters.

“Microsoft learned from the 90s that they don’t want to be dependent on Intel again, they don’t want to be dependent on a single vendor,” said Jay Goldberg, chief executive of D2D Advisory, a finance and strategy consulting firm. “If Arm really took off in PC (chips), they were never going to let Qualcomm be the sole supplier.”

Would we be able to run existing x86 apps (like games) on these CPUs, and if so, is there likely to be a significant performance penalty?

Deleted member 2197

Guest

> Would we be able to run existing x86 apps (like games) on these CPUs, and if so, is there likely to be a significant performance penalty?

In 2021 Nvidia had two demos of games running on a MediaTek Kompanio 1200 Arm processor and an RTX 3060.

Arm Is RTX ON! World’s Most Widely Used CPU Architecture Meets Real-Time Ray Tracing, DLSS

A pair of new demos running GeForce RTX technologies on the Arm platform unveiled by NVIDIA today show how advanced graphics can be extended to a broader, more power-efficient set of devices. The two demos, shown at this week’s Game Developers Conference, included Wolfenstein: Youngblood from...

blogs.nvidia.com

The demos are made possible by NVIDIA extending support for its software development kits for implementing five key NVIDIA RTX technologies to Arm and Linux.

They include:

- Deep Learning Super Sampling (DLSS), which uses AI to boost frame rates and generate beautiful, sharp images for games

- RTX Direct Illumination (RTXDI), which lets developers add dynamic lighting to their gaming environments

- RTX Global Illumination (RTXGI), which helps recreate the way light bounces around in real-world environments

- NVIDIA Real-Time Denoisers (NRD), a denoising library that’s designed to work with low ray per pixel signals

- RTX Memory Utility (RTXMU), which optimizes the way applications use graphics memory

Deleted member 2197

Guest

Eureka! NVIDIA Research Breakthrough Puts New Spin on Robot Learning | NVIDIA Blog

AI agent uses LLMs to automatically generate reward algorithms to train robots to accomplish complex tasks.

blogs.nvidia.com

A new AI agent developed by NVIDIA Research that can teach robots complex skills has trained a robotic hand to perform rapid pen-spinning tricks — for the first time as well as a human can.

The stunning prestidigitation, showcased in the video above, is one of nearly 30 tasks that robots have learned to expertly accomplish thanks to Eureka, which autonomously writes reward algorithms to train bots.

“Reinforcement learning has enabled impressive wins over the last decade, yet many challenges still exist, such as reward design, which remains a trial-and-error process,” said Anima Anandkumar, senior director of AI research at NVIDIA and an author of the Eureka paper. “Eureka is a first step toward developing new algorithms that integrate generative and reinforcement learning methods to solve hard tasks.”

Eureka-generated reward programs — which enable trial-and-error learning for robots — outperform expert human-written ones on more than 80% of tasks, according to the paper. This leads to an average performance improvement of more than 50% for the bots.

“Eureka is a unique combination of large language models and NVIDIA GPU-accelerated simulation technologies,” said Linxi “Jim” Fan, senior research scientist at NVIDIA, who’s one of the project’s contributors. “We believe that Eureka will enable dexterous robot control and provide a new way to produce physically realistic animations for artists.”

Its breakthrough work is bound to get developers’ minds spinning with possibilities, adding to recent NVIDIA Research advancements like Voyager, an AI agent built with GPT-4 that can autonomously play Minecraft.

> In 2021 Nvidia had two demos of games running on a MediaTek Kompanio 1200 Arm processor and an RTX 3060.
>
> Arm Is RTX ON! World’s Most Widely Used CPU Architecture Meets Real-Time Ray Tracing, DLSS (blogs.nvidia.com)

It's not made clear whether these games were ported natively to ARM or were just using some translation software. Actual CPU performance isn't indicated at all, either.

I don't think there's any question that you can run x86 apps on an ARM platform; the question is whether that's actually a useful alternative to just sticking with native x86, especially for anybody who prioritizes gaming at all.

Plus we're seeing ARM advances slow down (outside Apple's designs), while x86 CPUs are still making consistent progress.

Deleted member 2197

Guest

> It's not made clear whether these games were ported natively to ARM or were just using some translation software. Actual CPU performance isn't indicated at all, either.
>
> I don't think there's any question that you can run x86 apps on an ARM platform; the question is whether that's actually a useful alternative to just sticking with native x86, especially for anybody who prioritizes gaming at all.
>
> Plus we're seeing ARM advances slow down (outside Apple's designs), while x86 CPUs are still making consistent progress.

They did not use any translation software like Luxtorpeda.

The games were ported to 64-bit ARM Linux and run on a MediaTek ARM CPU. The necessary Nvidia SDKs, software and drivers were also ported. Time-stamped below.

neckthrough

Regular

> It's not made clear whether these games were ported natively to ARM or were just using some translation software. Actual CPU performance isn't indicated at all, either.

For gaming I don't think anything other than recompilation to native is going to work. With MS supporting DX natively on ARM the recompilation path doesn't seem too bad -- in theory. We'll see how things work out in practice.

> I don't think there's any question that you can run x86 apps on an ARM platform; the question is whether that's actually a useful alternative to just sticking with native x86, especially for anybody who prioritizes gaming at all.

There has to be a tangible benefit to the gaming audience. In the laptop space it could be improved battery life, thermals and/or form factor vs. an equivalent-performance x86 "enthusiast-gaming" tier machine. But that story doesn't work for desktops. Maybe there's a story for a Grace-Hopper style behemoth with massive CPU-GPU bandwidth, but you'd also need the games to be designed to take advantage of the architecture.

> Plus we're seeing ARM advances slow down (outside Apple's designs), while x86 CPUs are still making consistent progress.

That's just a function of investment. Intel, AMD and Apple have invested in competent and well-paid engineers to work on the designs. Nvidia will have to do the same if they want to succeed.

> Would we be able to run existing x86 apps (like games) on these CPUs, and if so, is there likely to be a significant performance penalty?

Many fast x86 emulators only target user space emulation (think FEX/Rosetta 2/WOW64). Emulating kernel space (ring 0) is forever infeasible ...

neckthrough

Regular

> Many fast x86 emulators only target user space emulation (think FEX/Rosetta 2/WOW64). Emulating kernel space (ring 0) is forever infeasible ...

This is an MS-blessed product. Why would emulating kernel space be needed? Any OS call will run natively.

> This is an MS-blessed product. Why would emulating kernel space be needed? Any OS call will run natively.

Windows Arm-based PCs FAQ - Microsoft Support

Learn about using a Windows Arm-based PC and get answers to common questions about Arm-based PCs.

- Drivers for hardware, games and apps will only work if they're designed for a Windows 11 Arm-based PC. For more info, check with the hardware manufacturer or the organization that developed the driver. Drivers are software programs that communicate with hardware devices—they're commonly used for antivirus and antimalware software, printing or PDF software, assistive technologies, CD and DVD utilities, and virtualization software.

If a driver doesn’t work, the app or hardware that relies on it won’t work either (at least not fully). Peripherals and devices only work if the drivers they depend on are built into Windows 11, or if the hardware developer has released Arm64 drivers for the device.

- Certain games won’t work. Games and apps may not work if they use a version of OpenGL greater than 3.3, or if they rely on "anti-cheat" drivers that haven't been made for Windows 11 Arm-based PCs. Check with your game publisher to see if a game will work.
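
To make the FAQ's rules a bit more concrete, here is a rough Python sketch of how they combine for a single app. The `App` record, its field names and the returned strings are all made up for illustration and are not any Microsoft API. It also assumes, as discussed earlier in the thread, that non-Arm64 user-mode code runs under emulation while drivers never do.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class App:
    """Hypothetical description of an app; not a real Windows data structure."""
    name: str
    arch: str                                   # "arm64", "x64" or "x86"
    opengl_version: Tuple[int, int] = (0, 0)    # highest OpenGL version required, (0, 0) if unused
    driver_arch: Optional[str] = None           # arch of a bundled driver (e.g. anti-cheat), if any


def compatibility(app: App) -> str:
    """Apply the FAQ's rules in order and return a short verdict."""
    # Drivers must be built for Arm64; they are not emulated.
    if app.driver_arch is not None and app.driver_arch != "arm64":
        return "may not work: needs an Arm64 driver"
    # The FAQ flags OpenGL versions greater than 3.3.
    if app.opengl_version > (3, 3):
        return "may not work: OpenGL newer than 3.3"
    # Assumption: anything that is not Arm64 runs through user-mode emulation.
    return "native" if app.arch == "arm64" else "emulated"


if __name__ == "__main__":
    examples = [
        App("ported_game", "arm64"),
        App("old_x64_tool", "x64"),
        App("anticheat_game", "x64", driver_arch="x64"),
        App("gl46_game", "x64", opengl_version=(4, 6)),
    ]
    for app in examples:
        print(app.name, "->", compatibility(app))
```

The driver check comes first on purpose: per the FAQ, a missing Arm64 driver breaks the app regardless of whether the app itself is recompiled or emulated.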

neckthrough

Regular

> Windows Arm-based PCs FAQ - Microsoft Support
>
> Learn about using a Windows Arm-based PC and get answers to common questions about Arm-based PCs. (support.microsoft.com)

Right, drivers will have to be native as well of course. I can't imagine the horrors of emulating a driver, especially with ARM's weaker memory model.

This is true whether you recompile or emulate the app itself and will definitely lead to some growing pains as peripheral vendors update their drivers. This was true of MacOS as well, but Windows's peripheral ecosystem is probably much wider. I suppose generic drivers will work for standard/simple peripherals.

> Right, drivers will have to be native as well of course. I can't imagine the horrors of emulating a driver, especially with ARM's weaker memory model.
>
> This is true whether you recompile or emulate the app itself and will definitely lead to some growing pains as peripheral vendors update their drivers. This was true of MacOS as well, but Windows's peripheral ecosystem is probably much wider. I suppose generic drivers will work for standard/simple peripherals.

x86 CPU vendors potentially have another immediate moat they can use, such as AVX-512, and it remains to be seen if the industry will be able to keep making advancements to transistor technology for the ARM architecture to close that gap ...

Progress in ARM compatibility for x86 applications can easily suffer a sharp setback if x86 vendors release features that are both attractive to the developer community and difficult to emulate. Another thing to keep in mind is technologies like 3D V-cache, which won't necessarily hard-lock ARM CPUs out of emulating x86 applications. But if PC developers unanimously start relying heavily on it to get their intended performance, should ARM vendors adopt similar technology to keep up, at the expense of embedded/mobile software developers, or do they keep catering to the requirements of those developers to avoid them migrating over to other architectures like RISC-V? Implementing technologies like 3D V-cache is less compelling for ARM vendors if they still come out behind the x86 equivalents in performance and developers on smaller systems lose out too due to higher cost/power consumption ...

neckthrough

Regular

> x86 CPU vendors potentially have another immediate moat they can use, such as AVX-512

Yes it's a moat but how wide is it really? I haven't seen a huge enthusiastic adoption.

> and it remains to be seen if the industry will be able to keep making advancements to transistor technology for the ARM architecture to close that gap ...

Not sure how transistor technology is relevant. If x86 can implement vector extensions in a specific technology so can ARM. The hard part is auto-vectorization software to take advantage of those extensions, not the implementation itself.

> Progress in ARM compatibility for x86 applications can easily suffer a sharp setback if x86 vendors release features that are both attractive to the developer community and difficult to emulate.

I don't understand this argument at all. You're saying that in a battle between A and B, B can have a sharp disadvantage if in future A gains a hypothetical amazing new feature that B lacks. Well... yeah???

> Another thing to keep in mind is technologies like 3D V-cache, which won't necessarily hard-lock ARM CPUs out of emulating x86 applications. But if PC developers unanimously start relying heavily on it to get their intended performance, should ARM vendors adopt similar technology to keep up, at the expense of embedded/mobile software developers, or do they keep catering to the requirements of those developers to avoid them migrating over to other architectures like RISC-V? Implementing technologies like 3D V-cache is less compelling for ARM vendors if they still come out behind the x86 equivalents in performance and developers on smaller systems lose out too due to higher cost/power consumption ...

You're pointing out 2 issues here:

(1) A potential divergence between mobile vs. higher-tier client platforms. I don't see a problem with divergence. Just because both use the ARM ISA doesn't mean they have to have any similarities in implementation.

(2) Diminishing returns of ARM's USP (perf/W), as you start building high-end systems that push power envelopes in pursuit of performance. This is a valid point, although both Apple and server-grade systems have shown some promise that with good designs it's possible to maintain an advantage.

> Yes it's a moat but how wide is it really? I haven't seen a huge enthusiastic adoption.

We're starting to see some movement with it in productivity and in high performance/scientific applications. It's a bit presumptuous right now to make this prediction, but after the next generation game consoles release, I can very much see game development pivoting towards usage of the extension as well, since it's the natural successor to AVX/2, which is already seeing widespread proliferation in games ...

> Not sure how transistor technology is relevant. If x86 can implement vector extensions in a specific technology so can ARM. The hard part is auto-vectorization software to take advantage of those extensions, not the implementation itself.

ARM vendors can implement more vector extensions but it's going to come with a power consumption and hardware logic cost. It's possible that we'll reach a plateau in transistor miniaturization technology later this decade, so ARM architecture designers will have to make a hard choice between getting closer to x86 architectures while facing competitive pressure from other architectures (RISC-V), or continuing to optimize for embedded/mobile systems. The way I see it, as we're quickly reaching the wall in transistor technology, doing both simultaneously may not be possible anymore ...

> I don't understand this argument at all. You're saying that in a battle between A and B, B can have a sharp disadvantage if in future A gains a hypothetical amazing new feature that B lacks. Well... yeah???
>
> You're pointing out 2 issues here:
>
> (1) A potential divergence between mobile vs. higher-tier client platforms. I don't see a problem with divergence. Just because both use the ARM ISA doesn't mean they have to have any similarities in implementation.
>
> (2) Diminishing returns of ARM's USP (perf/W), as you start building high-end systems that push power envelopes in pursuit of performance. This is a valid point, although both Apple and server-grade systems have shown some promise that with good designs it's possible to maintain an advantage.

Divergence is the source of nearly all ills in multiplatform software development. More divergence only keeps perpetuating different development practices between the different platforms. Consequently, it's inappropriate to have one unified architecture across the varying spectrum of platforms if they all have very different sets of requirements. As long as these different sets of requirements persist, there'll be strong incentives for the industry to keep multiple architectures in place ...

neckthrough

Regular

> We're starting to see some movement with it in productivity and in high performance/scientific applications. It's a bit presumptuous right now to make this prediction, but after the next generation game consoles release, I can very much see game development pivoting towards usage of the extension as well, since it's the natural successor to AVX/2, which is already seeing widespread proliferation in games ...
>
> ARM vendors can implement more vector extensions but it's going to come with a power consumption and hardware logic cost. It's possible that we'll reach a plateau in transistor miniaturization technology later this decade, so ARM architecture designers will have to make a hard choice between getting closer to x86 architectures while facing competitive pressure from other architectures (RISC-V), or continuing to optimize for embedded/mobile systems. The way I see it, as we're quickly reaching the wall in transistor technology, doing both simultaneously may not be possible anymore ...
>
> Divergence is the source of nearly all ills in multiplatform software development. More divergence only keeps perpetuating different development practices between the different platforms. Consequently, it's inappropriate to have one unified architecture across the varying spectrum of platforms if they all have very different sets of requirements. As long as these different sets of requirements persist, there'll be strong incentives for the industry to keep multiple architectures in place ...

I won't debate your point on AVX. Time will tell.

But I can't comprehend your argument about embedded vs. client. I don't understand why you keep bringing up embedded. They are different markets served by different vendors already. Just because both may use the "ARM" ISA means nothing. They are ALREADY diverged and will continue to be diverged even if client switches to ARM.

Now, the x86-vs-ARM divergence within the client space itself *is* a concern. But that has nothing to do with ARM-embedded vs. ARM-client, which is a bizarre non-issue to bring into this discussion.

> I won't debate your point on AVX. Time will tell.
>
> But I can't comprehend your argument about embedded vs. client. I don't understand why you keep bringing up embedded. They are different markets served by different vendors already. Just because both may use the "ARM" ISA means nothing. They are ALREADY diverged and will continue to be diverged even if client switches to ARM.
>
> Now, the x86-vs-ARM divergence within the client space itself *is* a concern. But that has nothing to do with ARM-embedded vs. ARM-client, which is a bizarre non-issue to bring into this discussion.

The problem with ARM vendors is that they still want to participate in both embedded/mobile AND client when the development communities for them are different. I do not think most of them truly desire to diverge as much as you are led to believe, which is why they maintain binary compatibility as much as possible between the different platforms ...

ARM designs for the client space should have no qualms about introducing many exotic extensions to close the performance gap with x86 processors but they won't do such a thing because they themselves don't want to maintain potentially non-standardized functionality or include tons of dark silicon that might go unused!

Deleted member 2197

Guest

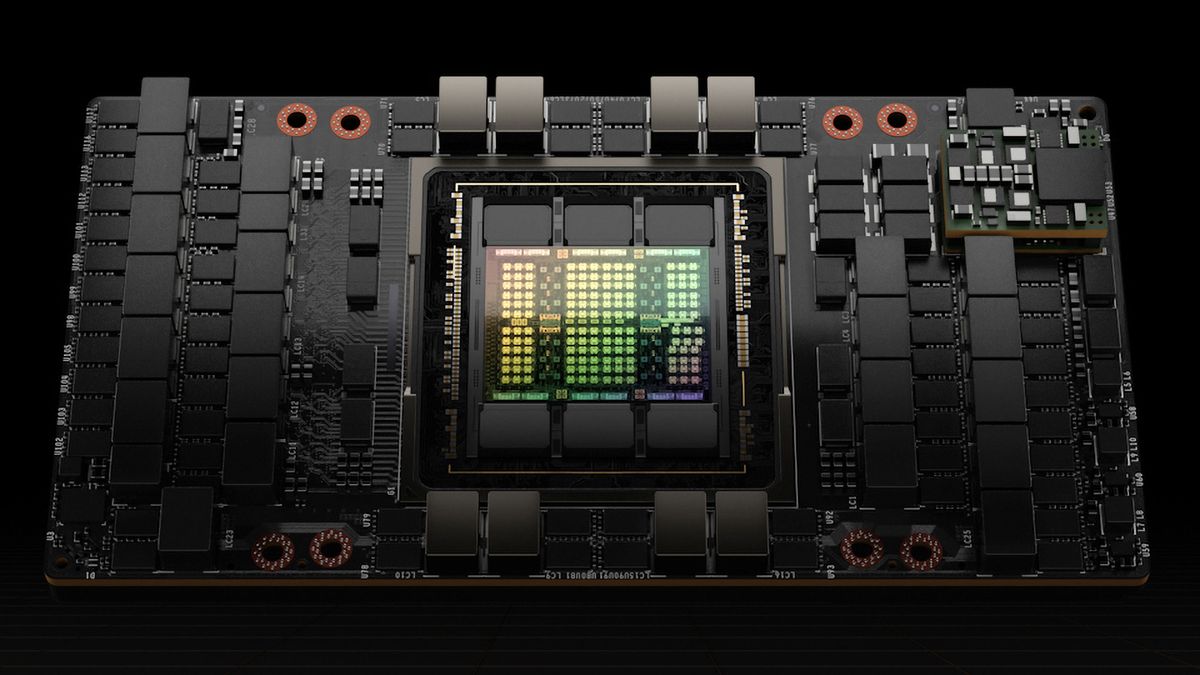

US Govt Speeds Up Export Restrictions for Nvidia's GPUs

Sales of Nvidia GPUs to Chinese entities effective immediately.

"On October 23, 2023, the United States Government informed Nvidia […] that the licensing requirements of the interim final rule [concerning AI and HPC processors] dated October 18, 2023, applicable to products having a 'total processing performance' of 4800 or more and designed or marketed for datacenters, is effective immediately, impacting shipments of the Company's A100, A800, H100, H800, and L40S products," an Nvidia filing with the U.S. Securities and Exchange Commission reads.

These licensing requirements were originally to be effective after a 30-day period, so starting November 16. Instead, the U.S. government decided to speed up their implementation.
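
For anyone wondering how the 4800 figure works in practice, below is a rough Python sketch of the one condition quoted from the filing. It assumes the rule defines "total processing performance" as 2 x MacTOPS x the bit length of the operation; that formula is not stated in the article, so treat it as an assumption. The function names and the example chip figures are invented for illustration and do not describe any real product.

```python
TPP_THRESHOLD = 4800  # threshold quoted in the filing


def total_processing_performance(mac_tops: float, bit_length: int) -> float:
    """Assumed definition: 2 x MacTOPS x bit length of the operation."""
    return 2 * mac_tops * bit_length


def needs_license(tpp: float, datacenter: bool) -> bool:
    """Encodes only the quoted condition: TPP of 4800 or more AND
    designed or marketed for datacenters. Any other clauses of the
    rule are deliberately left out."""
    return datacenter and tpp >= TPP_THRESHOLD


if __name__ == "__main__":
    # Hypothetical chips, not real product figures.
    for name, mac_tops, bits, dc in [
        ("chip_a", 300.0, 8, True),   # 2 * 300 * 8 = 4800
        ("chip_b", 250.0, 8, True),   # 2 * 250 * 8 = 4000
        ("chip_c", 400.0, 8, False),  # above threshold, not a datacenter part
    ]:
        tpp = total_processing_performance(mac_tops, bits)
        print(name, tpp, needs_license(tpp, dc))
```

Whether consumer parts such as the RTX 4090 fall under separate clauses is exactly the open question the article raises, so the sketch does not try to answer it.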

As a result, Nvidia needs to get a license to ship its A100, A800, H100, H800, and L40S cards and modules for AI and HPC computing to China and a number of other countries immediately, which essentially means that they can no longer ship these products to their partners. Yet, it is unclear whether this also applies to shipping the GeForce RTX 4090 and L40 processors to the aforementioned countries. If yes, the best graphics cards for gaming could end up in short supply and get considerably more expensive in China, Saudi Arabia, the United Arab Emirates, and Vietnam.

"Given the strength of demand for the Company's products worldwide, the Company does not anticipate that the accelerated timing of the licensing requirements will have a near-term meaningful impact on its financial results," Nvidia said. They'll just sell AI GPUs previously destined for China to someone else, in other words.
