> You certain? DLSS up from 1080p to 4K looks pretty sharp in this game.

Yes.

https://www.overclock3d.net/reviews/gpu_displays/control_rtx_raytracing_pc_analysis/3

On average, DLSS is slightly sharper, but ...

Control is generally a pretty blurry game. DLSS can certainly help with that: many objects are sharper with the AI upscaling at its highest setting, though a few are also slightly blurrier. However, this only applies from WQHD resolution upward. DLSS with a Full HD target resolution, by contrast, is pretty mushy. With DLSS the game also flickers a little more, which makes the lower render resolution noticeable. So far so good.

For example, where shadows already look frayed, DLSS usually makes the effect significantly worse. And fence-like objects that are not displayed perfectly even without DLSS suddenly look frayed with the feature enabled, or are sometimes not displayed at all. With DLSS, Control shows even more graphics errors than it already does, which does not help image quality. The bottom line is that if the video card renders at the same resolution once with and once without DLSS, the result is different but not better.

....

Nvidia has integrated DLSS as a remedy. In principle this works quite well in Control and brings a big FPS boost. Sometimes the blur is even reduced, although some objects then appear oversharpened and flicker. However, DLSS is quite sensitive to the game's graphics flaws, and the AI upscaling makes them worse. Using ray tracing to make the graphics more realistic, only to then introduce further errors with DLSS, does not add up.

...

ComputerBase received Control from Nvidia for testing. The game was made available under NDA. The only condition was the earliest possible publication date. The manufacturer had no influence on the test report, and there was no obligation to publish.

Leveraging this AI research, we developed a new image processing algorithm that approximated our AI research model and fit within our performance budget. This image processing approach to DLSS is integrated into Control, and it delivers up to 75% faster frame rates.

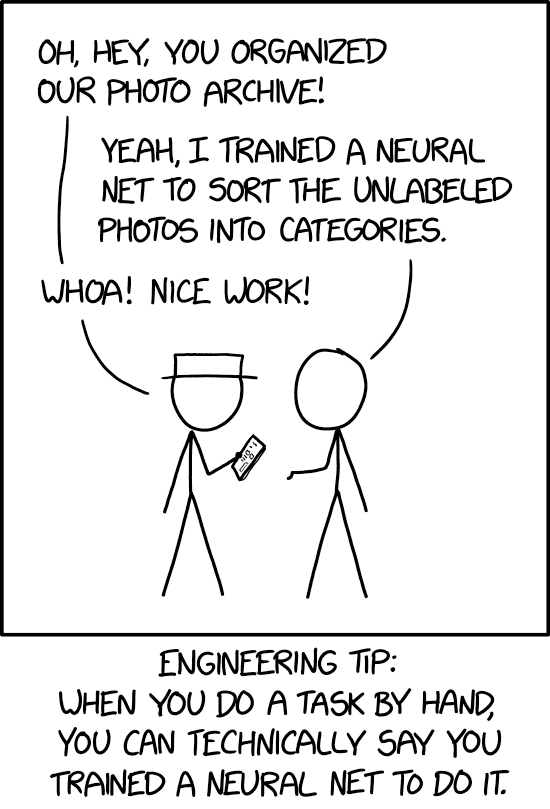

> NVIDIA claims they developed a new algorithm for Control, and intend to use it for future titles.

Most importantly, it's not DLSS but a 'conventional' image-processing algorithm. "Hand engineered algorithms are fast and can do a fair job of approximating AI." Their improved DLSS system is too slow using ML so they're using a compute based solution that approximates the AI results.

> "Hand engineered algorithms are fast and can do a fair job of approximating AI."

So humans learn from machine learning. Sure, makes total sense.

> So humans learn from machine learning. Sure, makes total sense.

They wanted the effect of DLSS but wanted to bypass the NN for speed. Interesting to say the least.

The message behind this twisted marketing way of saying things indirectly is quite interesting, but no surprise

> They wanted the effect of DLSS but wanted to bypass the NN for speed. Interesting to say the least.

Do you still plan to experiment with ML upscaling?

> The message behind this twisted marketing way of saying things indirectly is quite interesting, but no surprise

Yeah that Nvidia article is really focused on promoting "DLSS" and Turing's tensor cores whilst dancing around the fact that Control doesn't seem to use either.

So humans learn from machine learning. Sure, makes total sense.

> Most importantly, it's not DLSS but a 'conventional' image-processing algorithm. "Hand engineered algorithms are fast and can do a fair job of approximating AI."

I think it's more the case that hand engineered algorithms can use more efficient non-linear operators. MLP really puts limits on how efficient image filters can be.

> The MLP craze will die down

Likely we could get there without taking a step back.

> Do you still plan to experiment with ML upscaling?

I'll continue on it

I thought about how I would do it. The idea is to generate a line field from image brightness / hue, and then use it to calculate an elliptic filter kernel for sampling. Should give nice AA at least, and not much work to try.

Currently I'm lost in other work, but if you ever get at it, I might take the challenge for a comparison...
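For anyone who wants to try the line-field idea, here is a rough sketch of how it might look on a still grayscale image. It is only one guess at what was meant: the "line field" is taken to be the edge tangent from a central-difference brightness gradient (hue is ignored), the elliptic kernel is a Gaussian stretched along that tangent, and every name and default here (`edge_tangent`, `elliptic_sample`, `upscale`, the sigma values, the radius) is made up for illustration.

```python
import math

def edge_tangent(img, x, y):
    """Return (tx, ty, strength): unit edge direction and gradient magnitude.

    Uses a clamped central difference on brightness, so values at the image
    border are only approximate.
    """
    h, w = len(img), len(img[0])
    xm, xp = max(x - 1, 0), min(x + 1, w - 1)
    ym, yp = max(y - 1, 0), min(y + 1, h - 1)
    gx = (img[y][xp] - img[y][xm]) * 0.5
    gy = (img[yp][x] - img[ym][x]) * 0.5
    mag = math.hypot(gx, gy)
    if mag < 1e-6:
        return 1.0, 0.0, 0.0  # flat area: direction is arbitrary
    # The edge runs perpendicular to the brightness gradient.
    return -gy / mag, gx / mag, mag

def elliptic_sample(img, sx, sy, radius=2, sigma_along=1.5, sigma_across=0.5):
    """Sample img at the continuous position (sx, sy) with an elliptic Gaussian."""
    h, w = len(img), len(img[0])
    cx = min(max(int(round(sx)), 0), w - 1)
    cy = min(max(int(round(sy)), 0), h - 1)
    tx, ty, strength = edge_tangent(img, cx, cy)
    if strength == 0.0:
        s_t = s_n = 1.0  # no edge: fall back to a round kernel
    else:
        s_t, s_n = sigma_along, sigma_across  # long axis follows the edge
    nx, ny = -ty, tx  # unit normal to the edge
    total = weight_sum = 0.0
    for py in range(max(cy - radius, 0), min(cy + radius, h - 1) + 1):
        for px in range(max(cx - radius, 0), min(cx + radius, w - 1) + 1):
            dx, dy = px - sx, py - sy
            u = dx * tx + dy * ty  # offset along the edge
            v = dx * nx + dy * ny  # offset across the edge
            wgt = math.exp(-0.5 * ((u / s_t) ** 2 + (v / s_n) ** 2))
            total += wgt * img[py][px]
            weight_sum += wgt
    return total / weight_sum

def upscale(img, factor):
    """Upscale a 2D list of brightness values by an integer factor."""
    h, w = len(img), len(img[0])
    out = []
    for oy in range(h * factor):
        sy = (oy + 0.5) / factor - 0.5  # output pixel centre in source coords
        row = []
        for ox in range(w * factor):
            sx = (ox + 0.5) / factor - 0.5
            row.append(elliptic_sample(img, sx, sy))
        out.append(row)
    return out
```

Calling `upscale(image, 2)` on a list of rows of floats returns an image with twice the width and height. Smoothing along the edge and staying narrow across it is what should give the anti-aliasing effect; whether it beats plain bilinear or bicubic filtering would have to be checked on real frames.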

> planning to open their driver pack to see if they have a model I can take a peek at and see if I can replicate it

Uhhh... that hackery skills

> Uhhh... that hackery skills
>
> I'd only do it with C++ on a still image

oh, well, I'd probably start there as well, but I'm curious to see how they implemented their NN.

> Most importantly, it's not DLSS but a 'conventional' image-processing algorithm. "Hand engineered algorithms are fast and can do a fair job of approximating AI." Their improved DLSS system is too slow using ML so they're using a compute based solution that approximates the AI results.

I take the article as them stating that the Image Processing Algorithm is what DLSS currently is. They are taking images from developers processing them, and developing their NN algorithm to run on the GPU. Their AI Research Algorithm is a more advanced form to show what is possible given a longer frame budget... however it's not at the point yet where they can run it in real-time on the tensor cores at the speed they want. Their goal is to get the AI Research algorithm optimized to the point where they can maintain that detail and quality, while fitting within their performance budget to run on the tensor cores.

The plan is to get the AI fast enough to give better results, but Control's implementation isn't ML and is much lower impact, which is by-and-large what games need. They don't need perfect, but good enough at fast enough speeds. So Control is actually a +1 for algorithmic reconstruction methods, with ML contributing to the development of the algorithm.

I take the article as them stating that the Image Processing Algorithm is what DLSS currently is. They are taking images from developers processing them, and developing their NN algorithm to run on the GPU. Their AI Research Algorithm is a more advanced form to show what is possible given a longer frame budget... however it's not at the point yet where they can run it in real-time on the tensor cores at the speed they want. Their goal is to get the AI Research algorithm optimized to the point where they can maintain that detail and quality, while fitting within their performance budget to run on the tensor cores.

Where in the article does it say they aren't running Control's DLSS solution on the tensor cores, or that this isn't using Machine Learning? Machine learning means training the algorithm. This isn't a compute based solution. It's still DLSS.

"Deep Learning: The Future of Super Resolution

Deep learning holds incredible promise for the next chapter of super resolution. Hand engineered algorithms are fast and can do a fair job of approximating AI. But they’re brittle and prone to failure in corner cases."

They show several examples of their approximation breaking. They also end the article by stating that Turing's tensor cores are ready and waiting to be used.

Our next step is optimizing our AI research model to run at higher FPS. Turing’s 110 Tensor teraflops are ready and waiting for this next round of innovation. When it arrives, we’ll deploy the latest enhancements to gamers via our Game Ready Drivers.

You take that as meaning they aren't being used in Control? lol no. They're saying those cores are there and they're capable of the next round of improvements coming to DLSS which is a more optimized version of their AI research model. It's also a way of reassuring people that they won't need a next gen GPU to handle these improvements when they come. Their AI model utilizes deep learning to train their Image Processing algorithm. The goal is to get that high quality of the AI model performant enough so that it can run on the tensor cores.

I disagree for several reasons.

1. All previous DLSS implementations have been rather expensive. Suddenly this one has the same performance as basic upscaling.

2. The various selectable resolutions from which to upscale result in more combinations to train than in previous titles.

3. It's now usable at all performance levels and provides an improvement regardless of how long other parts of the GPU require to process a frame.

4. There would be no need for the article.

A new algorithm could explain away all of what you just wrote though. I mean, they literally state that they need to get it to a performance budget small enough that it can run on the tensor cores. That implies that they are using them... and want to continue using them.