
Nvidia GeForce RTX 50-series product value

- Thread starter Boss


Question: with the RTX 50 series not supporting 32-bit PhysX, some people may want to buy a low-power, quiet older card to act as a dedicated PhysX card. So what's the slowest card you could use for that?

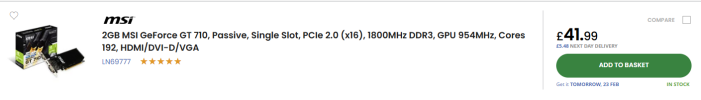

The slowest new card I can find on sale is:

View attachment 13189

Ehhm... it's an interesting question, but I'm not sure buying one new is going to lead to a satisfactory outcome, unlike reusing some older card you happen to have lying around.

But OK, if you check Nvidia's CUDA compatibility list, that model should have compute capability 2.1 or 3.5 (there are supposedly both Fermi and Kepler versions of that card).

And driver support ends with 475.14.

A Pascal-based GT 1010 or GT 1030 shouldn't cost much more, but it supports compute capability up to 6.1 and is still supported by the latest Nvidia drivers. That might be a more sensible expense.

I could use an older card (and I guess it would be the same for a lot of people), but dedicating what was a fairly high-end card (for its time) to just PhysX is a bit much.

I never thought about the driver side of things. Would, for example, an RTX 5070 with the latest driver and a GT 710 with the PhysX-9.13.0604-SystemSoftware-Legacy driver installed be enough to use that combo?

Or if not, a GTX 900 series card or later with the NVIDIA PhysX System Software installed?


DegustatoR

Legend

You would definitely want a card supported by the same driver as your main card, which means anything older than Maxwell is out. If you plan on using the card for more than just the games available now, you'd probably draw the line at Turing, which means something like a GTX 1630 or RTX 3050, I'd say.
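As a rough sketch of that rule of thumb, the snippet below filters candidate cards by CUDA compute capability, treating Maxwell (5.0) as the oldest generation that still shares a driver with a current card and Turing (7.5) as the longer-lived cutoff. The `Card` type, the candidate list and `usable_as_physx_card` are hypothetical illustrations; the compute capabilities come from Nvidia's CUDA GPU list, and the cutoffs encode this post's advice, not an official Nvidia support policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    name: str
    arch: str
    compute_capability: tuple[int, int]  # (major, minor) per Nvidia's CUDA GPU list


# Candidate cards mentioned in this thread (hypothetical shortlist).
CANDIDATES = [
    Card("GT 710 (Fermi variant)", "Fermi", (2, 1)),
    Card("GT 710 (Kepler variant)", "Kepler", (3, 5)),
    Card("GT 1030", "Pascal", (6, 1)),
    Card("GTX 1630", "Turing", (7, 5)),
    Card("RTX 3050", "Ampere", (8, 6)),
]

# Assumed cutoffs from the post above, expressed as compute capability.
MAXWELL = (5, 0)  # oldest generation assumed to share the current driver
TURING = (7, 5)   # stricter cutoff for longer driver support


def usable_as_physx_card(card: Card, oldest: tuple[int, int] = MAXWELL) -> bool:
    """True if the card is at least as new as the chosen cutoff generation."""
    # Tuples compare element-wise, so (6, 1) >= (5, 0) and (3, 5) < (5, 0).
    return card.compute_capability >= oldest


if __name__ == "__main__":
    for label, cutoff in (("Maxwell or newer", MAXWELL), ("Turing or newer", TURING)):
        names = [c.name for c in CANDIDATES if usable_as_physx_card(c, cutoff)]
        print(f"{label}: {', '.join(names)}")
```

Comparing compute capability tuples keeps the check to one line; it works here because compute capability rises with each consumer architecture generation.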


This is one of the best looks at DLSS 4 so far, and my limited personal testing confirms his findings.

Brilliant job by Tim, and it goes to show that Nvidia is literally outdoing native.

The most noticeable flaw I saw in DLSS 4 in Witcher 3 (driver override) was the disocclusion around Geralt. I suspect this is less of a problem at very high FPS, but with a base FPS around 45-60 it's definitely noticeable. It's still better in this respect than any other upscaling solution I tested. Even the old model was better than any other solution available in that game.

Funnily enough, I mostly notice the disocclusion artifacts around Geralt's head, maybe because that's where my eyes rest. If quality were improved in that small area of the screen, it might have an outsized effect on my perception of overall image quality.

Looks like Nvidia was caught selling 5090s with missing ROPs. As if the margins aren’t juicy enough, they’re still cutting corners everywhere. The power connector design is horrible and a serious regression from the 3090 Ti. Now they’re selling incomplete hardware.

I mean, this is just ridiculous.


Would NVIDIA have to build support for a 168-ROP 5090 into their driver for the driver to tell GPU-Z that it has only 168 ROPs?

The driver doesn't have some ROP count list in it to read from. It provides a path for GPU-Z to query the GPU.

Also, how did no AIB partner notice this? It's not hard to detect the issue, and it clearly impacts performance.

Would the BIOS have to be configured differently to allow for the deactivation of functional units? I'm trying to understand what is different (hardware or software) with these cards, so I can understand how something like this could happen. I don't know how this is done. For all I know they could scrape off the ROPs with a belt sander.

BIOS could play a part; we know they got final BIOSes at a really late date too.

If the BIOS is different on these "defective" cards, how could the AIBs not notice?

Is this the worst GPU launch in a long time? It feels like it to me.

From someone who is usually hyped about Nvidia products: this generation is a swindle.

You can't expect this type of performance from 1000€+ GPUs. From 580fps to 7fps in a jiffy.

On Mafia 2 the 4090 beat the 5090 to a pulp.

- Dropped support for 32-bit PhysX
- Missing ROPs
- The worst gen-on-gen increase in a long time
- Fake MSRPs
- Bad availability at launch
- Power connector design flaws
- Reports of GPUs going up in flames
- Poor performance-per-dollar improvements gen on gen

I feel like there are quite a few I’m missing.


Fantastic video. Between the DLSS4 upgrade and the rocky launch Nvidia is making it real easy for people to skip the 50 series.

This is the best analysis of upscaling IQ I’ve seen. Tim’s coverage of FSR 4 will be must-watch TV. I wish he had included more native/DLAA shots, though.

And it's not just some 5090s; some 5070 Tis also have 88 ROPs instead of 96.

That's terrible. From hiding the heat generated in the hottest part of the GPU (AFAIK there is no sensor that reports the internal temperature of the central part of the GPU) to GPUs catching fire, and so on and so forth.

I was just waiting for the RTX 5060 to launch to see how much VRAM it has and so on, just out of curiosity, but now I'm not that hyped.
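For scale, the ROP counts reported in this thread (168 on some 5090s, 88 on some 5070 Tis) can be compared with the full specifications, which per Nvidia's published specs are 176 ROPs for the RTX 5090 and 96 for the RTX 5070 Ti. This minimal sketch only computes what fraction of the ROPs is missing; it is not a performance estimate, since how much a ROP deficit hurts depends on how ROP-bound a given game is. The dictionary and function names are illustrative.

```python
# Full ROP counts per Nvidia's published specs for these SKUs.
SPEC_ROPS = {"RTX 5090": 176, "RTX 5070 Ti": 96}
# ROP counts reported for affected cards in this thread.
REPORTED_ROPS = {"RTX 5090": 168, "RTX 5070 Ti": 88}


def rop_shortfall(spec: int, reported: int) -> tuple[int, float]:
    """Return (missing ROPs, percent of the specified ROPs that are missing)."""
    missing = spec - reported
    return missing, 100.0 * missing / spec


if __name__ == "__main__":
    for model, spec in SPEC_ROPS.items():
        missing, pct = rop_shortfall(spec, REPORTED_ROPS[model])
        print(f"{model}: {missing} of {spec} ROPs missing ({pct:.1f}%)")
```

The same 8-ROP deficit is a larger share of the smaller chip, which is why the 5070 Ti case looks proportionally worse.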

GhostofWar

Regular

I'm seeing 5070 Tis in stock at 3 stores down here in Australia; it looks like about 4 models, all above MSRP. Not sure whether the price or the defects are the reason. There are 5080s in stock as well, and again the ones in stock are massively over MSRP.


Yep, and they're all priced higher than what you could get a 4080 Super for until very recently. After taking off local tax and converting, we're talking $1050-$1150 USD for a 5070 Ti. The 5080s in stock are $1500-$1650 USD. Terrible value, and no one should buy at these price-gouged prices.


GhostofWar

Regular

I'm seeing 5080s costing more than my 4090 did ($2750 AUD). The Australian dollar was around 64 US cents at the time, so that can't be the reason, as it's still around that. There is a Zotac 5090 on PLE for $6600 AUD (and keep in mind Zotac is a budget brand), and the cooler is a pretty budget one. So, uh, yeah, that's quite an interesting price, lol.

ZOTAC Gaming GeForce RTX 5090 Solid OC 32GB GDDR7

The ZOTAC GAMING GeForce RTX 5090 SOLID OC is designed for enthusiasts seeking performance in gaming and creative applications. Equipped with 32 GB of GDDR7 SDRAM and a wide 512-bit bus, this graphics card supports advanced rendering capabilities at resolutions up to 7680 x 4320. The NVIDIA...
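To sanity-check the Australian prices in the last few posts, here is a small conversion sketch. It assumes local shelf prices include Australia's 10% GST (US prices are usually quoted before sales tax, so stripping GST makes the comparison closer to like-for-like) and uses the roughly 0.64 USD per AUD rate mentioned above. The function name is hypothetical, and the US$1,999 figure is Nvidia's announced US MSRP for the RTX 5090.

```python
GST_RATE = 0.10    # Australian GST, included in local shelf prices
AUD_TO_USD = 0.64  # assumed exchange rate, as quoted in the post above
RTX_5090_US_MSRP = 1999  # Nvidia's announced US MSRP for the RTX 5090


def aud_shelf_to_usd_ex_tax(price_aud: float) -> int:
    """Strip GST from an Australian shelf price, then convert to whole US dollars."""
    ex_gst = price_aud / (1 + GST_RATE)
    return round(ex_gst * AUD_TO_USD)


if __name__ == "__main__":
    for label, aud in (("RTX 4090 (poster's purchase)", 2750), ("Zotac RTX 5090 Solid OC", 6600)):
        print(f"{label}: A${aud} -> about US${aud_shelf_to_usd_ex_tax(aud)} before tax")
    markup = aud_shelf_to_usd_ex_tax(6600) / RTX_5090_US_MSRP
    print(f"Zotac 5090 listing vs US MSRP: {markup:.2f}x")
```

Rounding to whole dollars hides floating-point noise from the division; the exchange rate is the main source of error, so treat the results as ballpark figures.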
