I got a score of 4972 ("Fairly High") with those settings on my RTX 2080, using the "Curve" overclock plus 700 MHz on the memory. The benchmark crashed a few times before that, maybe because of the more aggressive overclock I use in every other game, or maybe because of Afterburner's overlay. So I disabled the overlay and went back to the original curve overclock that nVidia's utility lets you discover.

CPU is an i7 6700, non-K.

The demo looks nice enough, though it seemed pretty casual. The antialiasing was top notch, though I noticed a brief bit of aliasing around the rear window of the car at the very beginning of the video. I've seen worse in my games at 4K, even with other forms of AA on top of that, so good job here, imo. I was frequently hovering below 50 fps, but the motion looked very smooth. There was some brief hitching here and there, though.

I installed the game to an SSD (480 GB Mushkin Striker) that isn't the one my OS is on, fwiw. Even so, I think there was a bit of pop-in here and there, though it wasn't distracting.

We can debate spending the transistor budget on tensor cores, but seeing as they're there, I count their use here as a positive, not a negative or "a push" (no benefit either way).

I guess I should compare by running the game with TAA at 3200 x 1800, but I'm already pleased by the lack of aliasing I've seen.

Edit: I got a score of 6178 at 2560 x 1440 using TAA. 3200 x 1800 wasn't available, even though I have that custom resolution enabled and just tested it in Windows. I ran the demo twice with Afterburner's RivaTuner overlay enabled and it didn't crash, so maybe it was my overclock, or DLSS still having a bug or two, that caused the earlier crashes. The pop-in, what there was of it, was mostly shadows, btw.

It's hard to compare the IQ when 2560 x 1440 gives a better framerate, but I'm guessing that DLSS would win even against 3200 x 1800 with TAA. It's sharp, with good clarity and saturation.

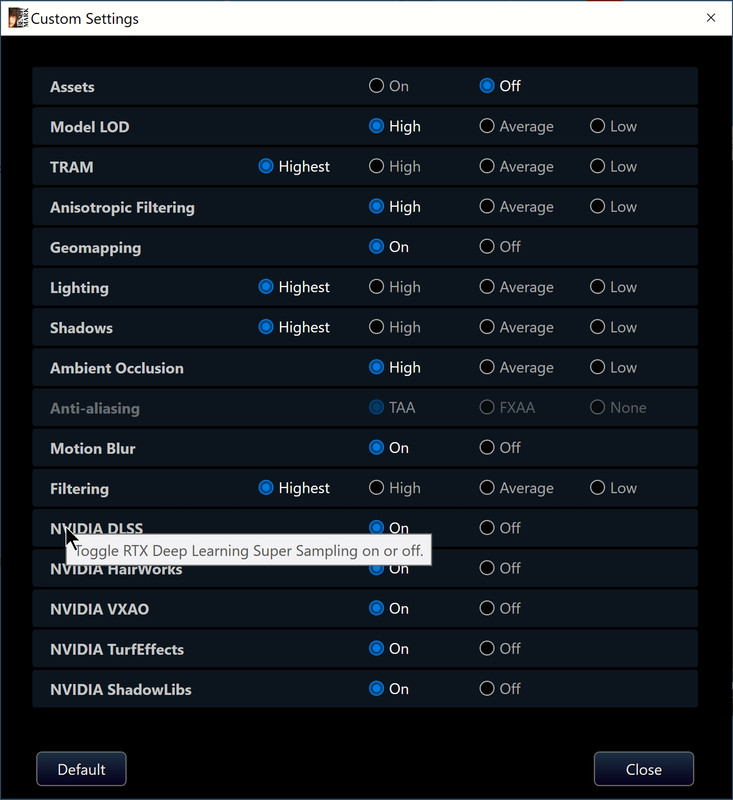

I just noticed the "Assets" setting. I didn't turn that on when I set everything else to the highest setting.

OK, so I tested again with that on and got 6106 at 2560 x 1440 with TAA.