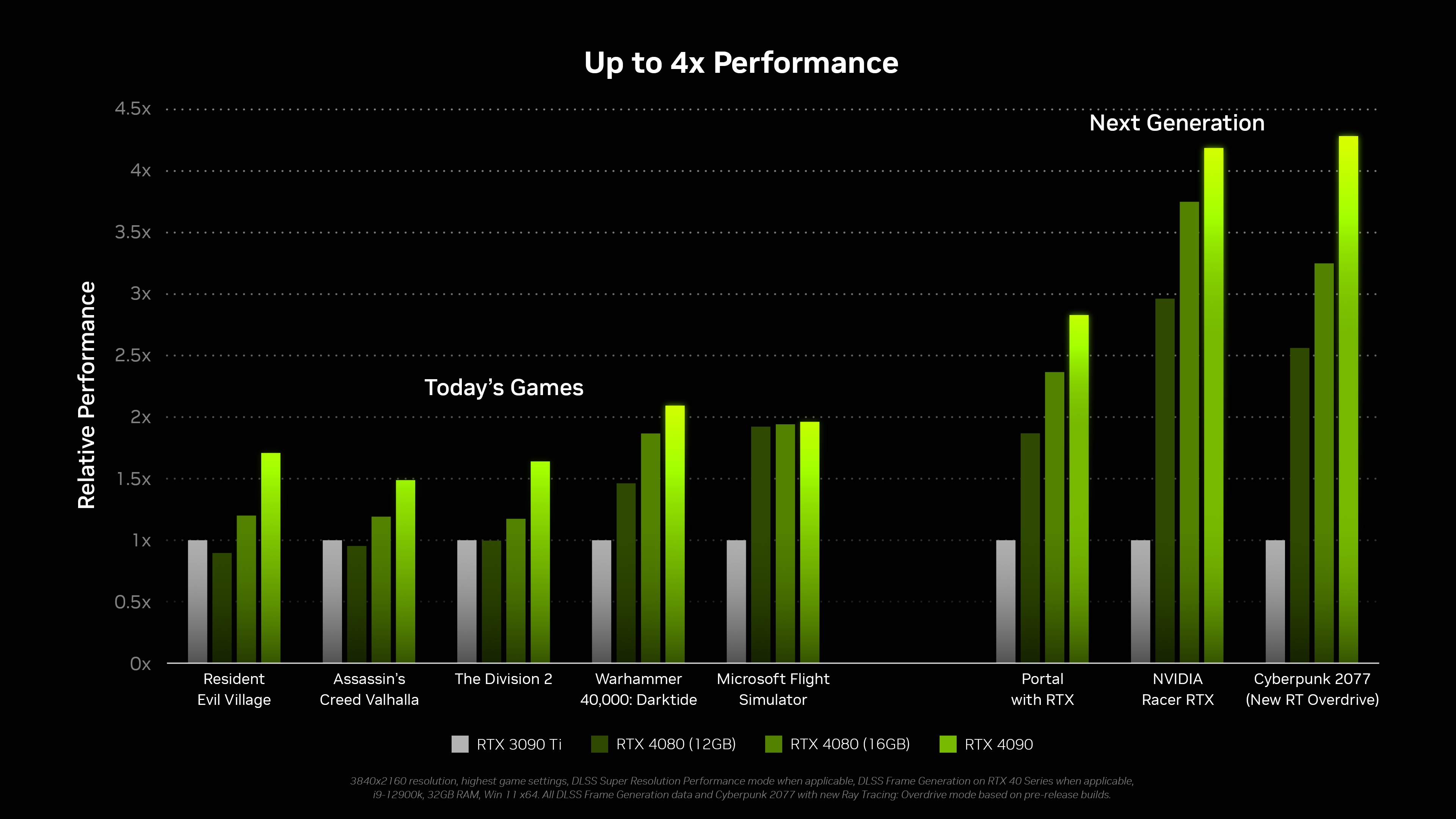

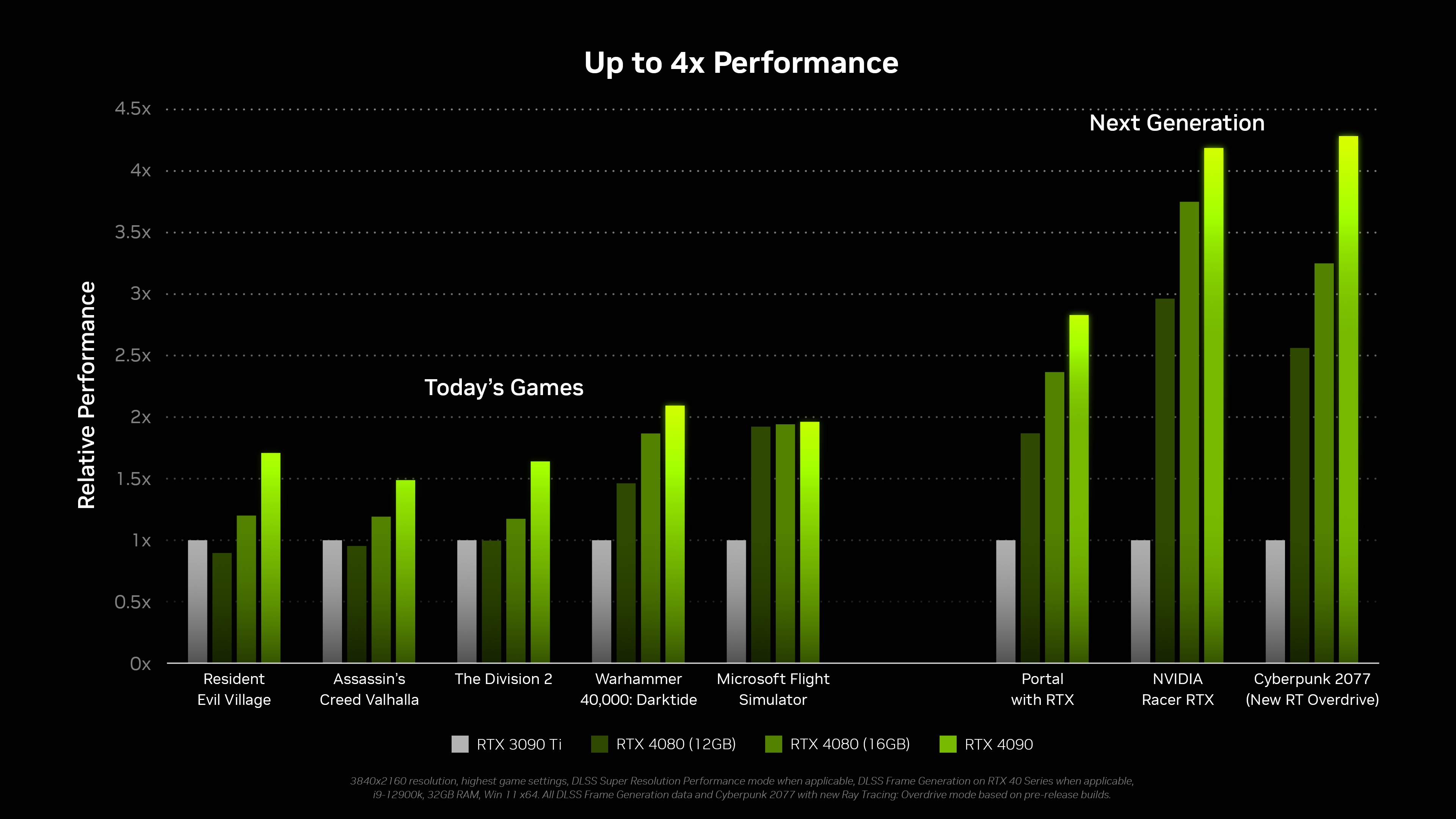

It says "if available". It's available in Cyberpunk, Portal RTX, and that car game. It's not available in Valhalla, REVIII, or The Division 2.

The fine print leaves room for it not to be enabled, TBF.


davis.anthony

Veteran

So I've just found out these things are over 20% more expensive in Europe too. There goes any tiny temptation I may have had to just screw it and get one.

I moved from a 1070 to a 3060 Ti and have zero regrets.

It's twice as fast, and at £400 it's one of the better-value cards at the minute, if not the best, especially for RT performance.


DegustatoR

Legend

GA103 is 320-bit, and it goes into laptops fine with a disabled MC (memory controller).

If AD103 is going into laptops, it's basically limited to 256-bit.

NVAPI - Get Started

Get the latest version, release notes, and other resources for NVIDIA API SDK.

Is SER exclusive to Ada? They mention it like it will be a general scheduler for RT operations, but I couldn't be sure. Is it hardware-based? It says it's an extension of NVAPI.

Rootax

Veteran

Is it only me who watches the two-year-old CP2077 running at 22 fps on the still-unreleased most powerful GPU in the world and thinks that maybe RT is still 10 years away?

Maybe not the fastest engine, TBH.

I hope the 3xxx and 6xxx series will stay and become more affordable, but I doubt it. From the EVGA incident we can conclude they could not sell those GPUs much cheaper without selling at a loss.

These high-end RTX 4000 GPUs aren't something you need right now to be able to enjoy PC gaming. The RTX 3070, 3080, and RX 6800 all offer quite nice value.

Consoles can be sold at some loss initially, but for PC this does not work, because the HW industry gets no cut from the software. That's the price we pay for an open platform.

But even if we can (almost) buy those GPUs at MSRP now, they're still too expensive, and not only because they are already 2 years old.

I'm worried the PC platform is no longer competitive; it only becomes worse with time, and we'll lose more and more gamers to consoles.

And that's just HW issues, which is a pretty new problem. The other, and maybe even bigger, problem is the stagnation of games themselves, which has been going on since 2010, I would say.

Games have become so expensive to produce, requiring large teams, that the creativity of individuals can no longer be utilized. Instead we get recipes and franchises: kinda always the same games, just bigger.

Still, gaming did grow a lot during the PS4 generation. The upswing still held. But I doubt further growth is possible. Consequently the arrow goes down, and we'll face a recession with increasing dissatisfaction and criticism.

The global economic recession will add to this, of course.

The proper reaction to such a situation is to tone down, not up. We need affordable HW to leverage and adapt.

We also need to reduce energy consumption because of rising energy prices and the climate. It really looks bad if we increase power draw and chip sizes and crank everything up to the max just to double down once more.

If we don't, the image of all involved gaming parties will suffer, and it will accelerate the depression even more. At some point, governments will even tax our gaming power. We have no choice.

Some years ago I would have said, 'APU for my gaming? Never ever, dude!' And to me, consoles would be no alternative at all. I'd rather quit gaming. It's no better than cloud.

I wouldn't want it to be an APU configuration either, as that has its downsides too. The PS5, a 5700 XT/3700X (2019 mid-range) setup for 550 euros and up, with the more expensive games, subscriptions, etc., isn't that great a value either, to me.

But now, seeing the other extreme of bigger and bigger PCs at higher and higher costs, then looking at what Apple does with a 15W chip... well, obviously at some point we just took the wrong turn, don't you think?

If I offered you a small box running Windows, with a single chip that can run the same games looking nearly as good as Jensen's demos, for <1000 bucks, what would you choose? Would you still pay >3000 for a gaming PC instead?

Maybe some enthusiasts would. Nothing wrong with that, and it can coexist, ofc. But we need to focus on this sweet spot. IMO, the sweet spot is 5-10 TF, roughly 10% of what a 4090 has.

I don't take those 5-10 TF from current consoles; it is just what I personally need, which coincidentally aligns with console specs. You may argue they run at 30 fps, lack this and that, and you are right. But that's solvable with better software.
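To put the "10% of a 4090" figure in numbers, here is a quick sketch of the usual peak-FP32 arithmetic. It assumes the 4090's public spec of 16,384 shader cores at a 2.52 GHz boost clock and counts one FMA (2 FLOPs) per core per clock; real games never reach this peak.

```python
def fp32_tflops(shader_cores: int, boost_ghz: float) -> float:
    """Peak FP32 TFLOPs: each core retires one FMA (2 FLOPs) per clock."""
    # cores * FLOPs per clock * GHz gives GFLOPs; divide by 1000 for TFLOPs
    return shader_cores * 2 * boost_ghz / 1000.0

# Assumed public spec: 16,384 cores at a 2.52 GHz boost clock.
RTX_4090_TF = fp32_tflops(16384, 2.52)

def share_of_4090(tflops: float) -> float:
    """Fraction of the 4090's peak FP32 throughput."""
    return tflops / RTX_4090_TF

if __name__ == "__main__":
    for tf in (5.0, 10.0):
        print(f"{tf:4.1f} TF = {share_of_4090(tf):.1%} of a 4090 ({RTX_4090_TF:.1f} TF)")
```

On those assumptions a 4090 peaks at about 82.6 TF, so the 5-10 TF sweet spot works out to roughly 6-12% of it.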

Rembrandt has 3.5 TF. That's now. AFAIK (hard to estimate), Intel is already working on an APU with a GPU twice as powerful, probably one or two years away. That's plenty. DDR5 has okayish bandwidth, and after DDR6... dGPUs will be niche.

And that's actually a pessimistic prediction, assuming the PC space keeps ignoring the M1's advantage of putting the whole fucking computer into a single chip, which is what we really should do.

We will see. But I bet in a few years I will read in your signature that you're proudly presenting your new, slick and modern single-chip system.

NVAPI - Get Started

Get the latest version, release notes, and other resources for NVIDIA API SDK.

developer.nvidia.com

Is SER exclusive to Ada? They mention it like it will be a general scheduler for RT operations, but I couldn't be sure. Is it hardware-based? It says it's an extension of NVAPI.

It would be hardware-based, but you have to leverage it through the API, similar to the two new hardware accelerators included in the RT cores, which require particular SDKs to leverage.
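A toy model of what reordering buys may help here. This is not NVAPI and not how the hardware actually does it; it only assumes that rays are processed in 32-thread warps and that a warp whose rays hit surfaces with different hit shaders has to run each of those shaders in turn. Sorting rays by a hypothetical hit-shader ID stands in for SER's reordering step.

```python
from typing import List

WARP_SIZE = 32  # threads scheduled together as one SIMT unit on NVIDIA GPUs

def shaders_per_warp(hit_shader_ids: List[int], warp_size: int = WARP_SIZE) -> List[int]:
    """Distinct hit shaders each warp must execute (1 = fully coherent warp)."""
    return [
        len(set(hit_shader_ids[i:i + warp_size]))
        for i in range(0, len(hit_shader_ids), warp_size)
    ]

def reorder_by_shader(hit_shader_ids: List[int]) -> List[int]:
    """Toy stand-in for SER: regroup rays so those calling the same shader share a warp."""
    return sorted(hit_shader_ids)

if __name__ == "__main__":
    # 128 rays whose hits alternate between 4 hypothetical materials/shaders.
    rays = [i % 4 for i in range(128)]
    print("before:", shaders_per_warp(rays))
    print("after: ", shaders_per_warp(reorder_by_shader(rays)))
```

In this toy case every warp goes from 4 divergent shaders to 1; the real gain depends on how incoherent the rays are and on the cost of the reordering itself.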

What I meant in my head when writing that was: that's less bandwidth than the 3070 Ti, a card with less than half the TFLOPs. I hope you will believe me when I say that I know 504 is not "less than half" of 608.

The 3070 Ti is a 256-bit, 19 Gbps G6X card with 608 GB/s of bandwidth.

The 4080/12 will be a 192-bit, 21 Gbps G6X card with 504 GB/s of bandwidth.

That is a regression, but not "less than half", for a 40 TF card.
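The bandwidth figures above come from the standard formula: bus width times per-pin data rate, divided by 8 bits per byte. A small sketch that also divides by the TFLOPs figures quoted in this thread (21 and 40) to get bandwidth per unit of compute:

```python
def gddr_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) * per-pin rate (Gbit/s) / 8."""
    return bus_width_bits * data_rate_gbps / 8

# (bus width in bits, per-pin G6X data rate in Gbps, FP32 TFLOPs as quoted in the thread)
CARDS = {
    "3070 Ti": (256, 19, 21),
    "4080 12GB": (192, 21, 40),
}

if __name__ == "__main__":
    for name, (bus, rate, tflops) in CARDS.items():
        bw = gddr_bandwidth_gbs(bus, rate)
        print(f"{name}: {bw:.0f} GB/s total, {bw / tflops:.1f} GB/s per TFLOP")
```

Total bandwidth drops by about 17% (608 to 504 GB/s), while bandwidth per TFLOP drops from roughly 29 to 12.6 GB/s, so it is the per-TFLOP figure, not the total, that falls below half.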

Still, there will certainly be some cases where the big L2 won't help; we've seen this on RDNA2 already.

From the EVGA incident we can conclude they could not sell those GPUs much cheaper without selling at a loss.

The EVGA 'incident' has more behind it than an evil Nvidia. I know it's not what you want to hear, but have a peek at the EVGA topic here.

Consoles can be sold at some loss initially, but for PC this does not work, because the HW industry gets no cut from the software. That's the price we pay for an open platform.

But even if we can (almost) buy those GPUs at MSRP now, they're still too expensive, and not only because they are already 2 years old.

I'm worried the PC platform is no longer competitive; it only becomes worse with time, and we'll lose more and more gamers to consoles.

Gaming PCs have always been more expensive, at least initially. It also depends on what kind of system you want; not everyone is looking at 9 times the consoles' performance and capabilities either.

Gamers moving to consoles is not what is happening today, and there's no evidence it will happen in the near-term future either. Heck, it seems to be the other way around.

And that's just HW issues, which is a pretty new problem. The other, and maybe even bigger, problem is the stagnation of games themselves, which has been going on since 2010, I would say.

Games have become so expensive to produce, requiring large teams, that the creativity of individuals can no longer be utilized. Instead we get recipes and franchises: kinda always the same games, just bigger.

Still, gaming did grow a lot during the PS4 generation. The upswing still held. But I doubt further growth is possible. Consequently the arrow goes down, and we'll face a recession with increasing dissatisfaction and criticism.

The global economic recession will add to this, of course.

The proper reaction to such a situation is to tone down, not up. We need affordable HW to leverage and adapt.

We also need to reduce energy consumption because of rising energy prices and the climate. It really looks bad if we increase power draw and chip sizes and crank everything up to the max just to double down once more.

If we don't, the image of all involved gaming parties will suffer, and it will accelerate the depression even more. At some point, governments will even tax our gaming power. We have no choice.

Well, that stagnation is also true of the console platforms. Besides, if the PC as a gaming platform is so bad, then so would be Microsoft's, since basically everything on Xbox is also on PC, and Sony is finally moving onto the PC platform as well.

Recessions and other problems affect all markets and platforms.

Some years ago I would have said, 'APU for my gaming? Never ever, dude!' And to me, consoles would be no alternative at all. I'd rather quit gaming. It's no better than cloud.

But now, seeing the other extreme of bigger and bigger PCs at higher and higher costs, then looking at what Apple does with a 15W chip... well, obviously at some point we just took the wrong turn, don't you think?

If I offered you a small box running Windows, with a single chip that can run the same games looking nearly as good as Jensen's demos, for <1000 bucks, what would you choose? Would you still pay >3000 for a gaming PC instead?

Maybe some enthusiasts would. Nothing wrong with that, and it can coexist, ofc. But we need to focus on this sweet spot. IMO, the sweet spot is 5-10 TF, roughly 10% of what a 4090 has.

I don't take those 5-10 TF from current consoles; it is just what I personally need, which coincidentally aligns with console specs. You may argue they run at 30 fps, lack this and that, and you are right. But that's solvable with better software.

Rembrandt has 3.5 TF. That's now. AFAIK (hard to estimate), Intel is already working on an APU with a GPU twice as powerful, probably one or two years away. That's plenty. DDR5 has okayish bandwidth, and after DDR6... dGPUs will be niche.

And that's actually a pessimistic prediction, assuming the PC space keeps ignoring the M1's advantage of putting the whole fucking computer into a single chip, which is what we really should do.

We will see. But I bet in a few years I will read in your signature that you're proudly presenting your new, slick and modern single-chip system.

APUs can coexist, especially in laptops and handhelds, where you can't upgrade and where the performance requirements aren't as high. For a desktop/stationary system it makes less sense. Not yet, at least.

As for bigger and bigger PCs at higher and higher costs, the consoles are basically in the same boat. They run on the same x86 hardware, sharing the same architectures in a smaller APU configuration. Consoles are seeing smaller generational leaps and diminishing returns, drawing more watts than ever and being more expensive to own than ever.

PC and console gaming are essentially in the same boat and will probably travel the same roads going forward (or meet the same fate).

dGPUs becoming niche and PC gamers moving to shiny, powerful APUs within a few years seems very quick.

DegustatoR

Legend

The 3070 Ti is pretty much exactly half the TFLOPs of the 4080/12, though: 21 vs 40.

What I meant in my head when writing that was: that's less bandwidth than the 3070 Ti, a card with less than half the TFLOPs. I hope you will believe me when I say that I know 504 is not "less than half" of 608.

A bit more thinking about how bad the 4080 12GB looks in the stack. The SM ratio between the cut-down AD102 4090 (128 SMs) and the AD104 4080 12GB (60 SMs) is absolutely wild: 46.9% (rounded up). Previous gens for perspective, scaling each flagship's SM count by the same ratio:

3090 82SM -> 38.4, 3060 Ti has 38

2080Ti 68SM -> 31.9, 2060 has 30, 2060S 34

1080Ti 28SM -> 13.1, 1060 6GB has 10, 1070 15

980Ti 22SM -> 10.3, 960 has 8, 970 13
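The list above is easy to reproduce: multiply each previous flagship's SM count by 60/128. This sketch uses only the SM counts given in the post.

```python
AD104_4080_12GB_SMS = 60
AD102_4090_SMS = 128
RATIO = AD104_4080_12GB_SMS / AD102_4090_SMS  # 0.46875, i.e. 46.9%

# Previous flagship: (SM count, {lower-tier card: SM count}), as listed in the post.
PREVIOUS_GENS = {
    "3090": (82, {"3060 Ti": 38}),
    "2080 Ti": (68, {"2060": 30, "2060S": 34}),
    "1080 Ti": (28, {"1060 6GB": 10, "1070": 15}),
    "980 Ti": (22, {"960": 8, "970": 13}),
}

def scaled_sms(flagship_sms: int, ratio: float = RATIO) -> float:
    """SM count a card would have at the 4080 12GB : 4090 ratio to its flagship."""
    return flagship_sms * ratio

if __name__ == "__main__":
    for flagship, (sms, lower_tier) in PREVIOUS_GENS.items():
        others = ", ".join(f"{card} has {n}" for card, n in lower_tier.items())
        print(f"{flagship} {sms} SM -> {scaled_sms(sms):.1f}; {others}")
```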

So saying it should be a 4070 is actually generous; compared to every other gen's ratio it's more like a 4060 Ti, which is partially reaffirmed by the 192-bit bus. The 4070 is probably going to have 52 or maybe 54 SMs if they have a 4070 Ti, which would make the 4070 more like an x60 non-Ti vs. the x80 Ti/x90 in previous gens, purely by ratio. Yes, AD102 is a big leap over GA102, but you could park a bus in that gap. Business wants money etc., but $900 for a 4080 12GB, which by their own slides is slower than a 3090 Ti in raster, making it maybe 15-20% faster than a 3080 for 30% ($200) more, 2 years later? Take a bow, 104, you have peaked.
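The value argument can be checked the same way. This sketch assumes the 3080's $699 launch MSRP and the announced $899 for the 4080 12GB (the post rounds to $900), and takes the post's own 15-20% speedup estimate as input; it is arithmetic on estimates, not a benchmark.

```python
def perf_per_dollar_change(old_price: float, new_price: float, speedup: float) -> float:
    """Fractional change in performance per dollar (negative = worse value)."""
    price_ratio = new_price / old_price
    return speedup / price_ratio - 1.0

RTX_3080_MSRP = 699       # assumed launch MSRP, USD
RTX_4080_12GB_MSRP = 899  # assumed announced price, USD

if __name__ == "__main__":
    print(f"price increase: {RTX_4080_12GB_MSRP / RTX_3080_MSRP - 1:.1%}")
    for speedup in (1.15, 1.20):  # the post's estimated raster uplift over a 3080
        change = perf_per_dollar_change(RTX_3080_MSRP, RTX_4080_12GB_MSRP, speedup)
        print(f"{speedup:.2f}x faster -> perf per dollar {change:+.1%}")
```

On those inputs the price rises about 29%, and performance per dollar falls by roughly 7-11%.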

I'm also confused by their "191 RT TFLOPs" claim for the 4090 (and the "200 RT TFLOPs" they gave in the presentation). 2.52 GHz * 512 tensor FP16 ops per SM per clock * 128 SMs = 165 "RT TFLOPs", so for some reason there's a 15-20% modifier applied. They said SER was an "up to 2-3x increase in RT and 25% in overall game performance", so that's not it either.
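The same derivation, written out. The 2.52 GHz clock, 512 FP16 tensor ops per SM per clock, and 128 SMs are the post's own inputs; the sketch only redoes the multiplication and compares the result with the two claimed figures.

```python
def peak_tflops(clock_ghz: float, ops_per_sm_per_clock: int, sm_count: int) -> float:
    """Peak throughput: GHz * ops/SM/clock * SMs gives GFLOPs; /1000 gives TFLOPs."""
    return clock_ghz * ops_per_sm_per_clock * sm_count / 1000.0

DERIVED = peak_tflops(2.52, 512, 128)

if __name__ == "__main__":
    print(f"derived: {DERIVED:.1f} TFLOPs")
    for claimed in (191, 200):  # the two claimed "RT TFLOPs" figures
        print(f"claimed {claimed}: {claimed / DERIVED - 1:+.1%} over derived")
```

That puts the claimed figures about 16% and 21% above the derived 165.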

If I was buying Ada, the only card I would consider is the 4090. The 4080 12GB is a joke both in name and performance. I'm not drawing any final conclusions until we see third-party benchmarks in realistic scenarios without the DLSS performance mode nonsense.

Even DF's teaser benchmarks were for 4K DLSS performance. They never did that for the 3090, so why do it for the 4090? Silly.

It's also available in Flight Sim & Warhammer in those.

It says "if available". It's available in Cyberpunk, Portal RTX, and that car game. It's not available in Valhalla, REVIII, or The Division 2.

DegustatoR

Legend

NDA.

Even DF's teaser benchmarks were for 4K DLSS performance. They never did that for the 3090, so why do it for the 4090? Silly.

DF had a day-one preview of the 3080:

If I was buying Ada, the only card I would consider is the 4090. The 4080 12GB is a joke both in name and performance. I'm not drawing any final conclusions until we see third-party benchmarks in realistic scenarios without the DLSS performance mode nonsense.

Even DF's teaser benchmarks were for 4K DLSS performance. They never did that for the 3090, so why do it for the 4090? Silly.

Yeah, so basically the disclosed info so far is that the 4090 is 50% faster than the 3090 in CP2077 Overdrive edition @ 1080p. Have we seen a single 4K benchmark?

DF had a day-one preview of the 3080:

I was referring to the use of DLSS performance, which is a pointless setting on these cards.

I did not depict NV as evil at all. I just said they likely could not reduce prices. For my point it does not matter whether 'they' stands for EVGA or NV.

The EVGA 'incident' has more behind it than an evil Nvidia. I know it's not what you want to hear, but have a peek at the EVGA topic here.

Gamers moving to consoles is not what is happening today, and there's no evidence it will happen in the near-term future either. Heck, it seems to be the other way around.

I guess neither of us can prove our assumption. But it does not matter either. I just see many people quitting games. Often it goes PC -> try console -> quit completely.

PC is big, but C64 was big too.

Obviously, because we have the same games on both platforms.

Well, that stagnation is also true of the console platforms.

You still seem to think I'm trying to downplay PC in favour of consoles?

Then i just can't help it. I really tried to be clear about that more than once.

Yep. But you should know me well enough: I always think and talk about the future. And I made this clear as well.

Not yet, at least.

Still - what has more impact on gaming right now? Steam Deck or RTX?

I guess it's like this: devs try to make sure on their own that their game scales down to the Steam Deck. NV tries to pay some selected devs so they implement high-fidelity ray tracing.

But I can't prove that either, just a guess.

No: 400 -> 500. That's 25% more after 7(?) years. Across Pascal -> Turing -> Ampere -> Ada, launch prices for the x80 went from 600 to 1200, so 100% over about the same time. That's well above inflation.

As for bigger and bigger PCs at higher and higher costs, the consoles are basically in the same boat.

Do you even care about money? Or do you just agree to disagree?

I give up.
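The percentages in that exchange, plus an annualized version so both price series can be compared per year. This is a sketch: the prices are the post's round numbers, and using 7 years for both series follows the post's "7(?)" and "about the same time"; the exact span is an assumption (change `YEARS` to taste).

```python
def pct_increase(old: float, new: float) -> float:
    """Total percentage increase from old to new."""
    return (new / old - 1.0) * 100.0

def annual_growth(old: float, new: float, years: float) -> float:
    """Compound annual growth rate (CAGR) as a fraction."""
    return (new / old) ** (1.0 / years) - 1.0

SERIES = {  # (old price, new price) in USD, as given in the post
    "console": (400, 500),
    "x80 GPU": (600, 1200),
}
YEARS = 7  # assumed span for both series

if __name__ == "__main__":
    for name, (old, new) in SERIES.items():
        print(f"{name}: +{pct_increase(old, new):.0f}% total, "
              f"{annual_growth(old, new, YEARS):.1%} per year over {YEARS} years")
```

On these assumptions that is about 3.2% per year for the console versus about 10.4% per year for the x80 GPU.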

I did not depict NV as evil at all. I just said they likely could not reduce prices. For my point it does not matter whether 'they' stands for EVGA or NV.

I just think the whole EVGA incident has more behind it; it's not a good indicator of a healthy or unhealthy PC market.

I guess neither of us can prove our assumption. But it does not matter either. I just see many people quitting games. Often it goes PC -> try console -> quit completely.

PC is big, but C64 was big too.

I've seen it both ways: some shift to console, some to PC. Quitting completely also happens; however, wasn't the total gaming market actually growing, or is that counting mobile gaming?

Obviously, because we have the same games on both platforms.

You still seem to think I'm trying to downplay PC in favour of consoles?

Then i just can't help it. I really tried to be clear about that more than once.

Look, I think both share the same problems. A look at MS is probably a look into the future, with Game Pass, xCloud, and other services: play anywhere, on any system, on whatever hardware you want, with great graphics scaling.

Yep. But you should know me well enough: I always think and talk about the future. And I made this clear as well.

Still - what has more impact on gaming right now? Steam Deck or RTX?

I guess it's like this: devs try to make sure on their own that their game scales down to the Steam Deck. NV tries to pay some selected devs so they implement high-fidelity ray tracing.

But I can't prove that either, just a guess.

Maybe. Though I think we're hitting a wall in normal raster, and it's not a bad idea to push new ideas. RTX as in Lovelace? It's not making much of an impact because it's merely a 'paper launch': the product is only in the higher-end range, new and expensive. RTX encompasses Turing and Ampere too, and those should be good enough for most PC gamers (I hope). Someone on an RTX 2070S, a 3060, or better can tag along fine and enjoy console-level experiences or better. These aren't that far out of reach for most, and when the time comes they can upgrade to a 5060 etc. if needed.

No: 400 -> 500. That's 25% more after 7(?) years. Across Pascal -> Turing -> Ampere -> Ada, launch prices for the x80 went from 600 to 1200, so 100% over about the same time. That's well above inflation.

Do you even care about money? Or do you just agree to disagree?

I give up.

I was talking performance, not price. I just do not think Ada is good value at the moment when looking at deals on RTX 3000 GPUs. It's too early to judge; we will have to see what happens next year.

DavidGraham

Veteran

NVIDIA answered some questions in a new Q&A post on their website.

Q: How does the RTX 40 Series perform compared to the 30 series if DLSS frame generation isn't turned on?

This chart has DLSS turned on when supported, but there are some games like Division 2 and Assassin's Creed Valhalla in the chart that don’t have DLSS, so you can see performance compared to our fastest RTX 30 Series GPU without DLSS.

Q: Can someone explain these performance claims in how they relate to gaming? 2-4x faster seems unprecedented. Usually from generation to generation GPU's see a 30-50% increase in performance. Is the claim being made that these cards will at *minimum* double the performance for gaming?

The RTX 4090 achieves up to 2-4x performance through a combination of software and hardware enhancements. We’ve upgraded all three RTX processors - Shader Cores, RT Cores and Tensor Cores. Combined with our new DLSS 3 AI frame generation technology the RTX 4090 delivers up to 2x performance in the latest games and creative applications vs. RTX 3090 Ti. When looking at next generation content that puts a higher workload on the GPU we see up to 4x performance gains. These are not minimum performance gains - they are the gains you can expect to see on the more computationally intensive games and applications.

I think that seals it: the RTX 4090 is about 60% to 70% faster than the 3090 Ti in rasterization. 2X should be realized in heavy ray tracing titles or with the assistance of DLSS 3, and 4X is achievable only through very heavy ray tracing + DLSS 3.

NVIDIA even put out some performance figures for professional applications; the 4090 is about 60% to 70% faster than the 3090 Ti in those apps too.

The question now is: with the crazy tripling of the transistor budget, where did all of this budget go? Towards RT cores and Tensor cores? The 4090 probably needs more power and more clocks to push towards 2X the rasterization that was expected from it. Either that or the architecture is not as scalable as it used to be.


GeForce RTX 40 Series Community Q&A: You Asked, We Answered

NVIDIA Product Managers answer your GeForce RTX 40 Series, NVIDIA DLSS 3, NVIDIA Remix and NVIDIA Studio questions!

www.nvidia.com


Creativity Redefined: New GeForce RTX 40 Series GPUs and NVIDIA Studio Updates Accelerate AI Revolution

Next-generation ray tracing and Tensor Cores plus dual AV1 NVIDIA encoders power new tools for 3D, video production, live streaming, game modding and more.

blogs.nvidia.com


DegustatoR

Legend

It's closer to 2.5X when comparing the 3090 Ti to the 4090. And yes, it likely went into h/w changes and caches.

The question now is: with the crazy tripling of the transistor budget, where did all of this budget go? Towards RT cores and Tensor cores?

We still need to wait for proper benchmarks though before claiming any % of performance improvement.
