- Status

- Not open for further replies.

davis.anthony

Veteran

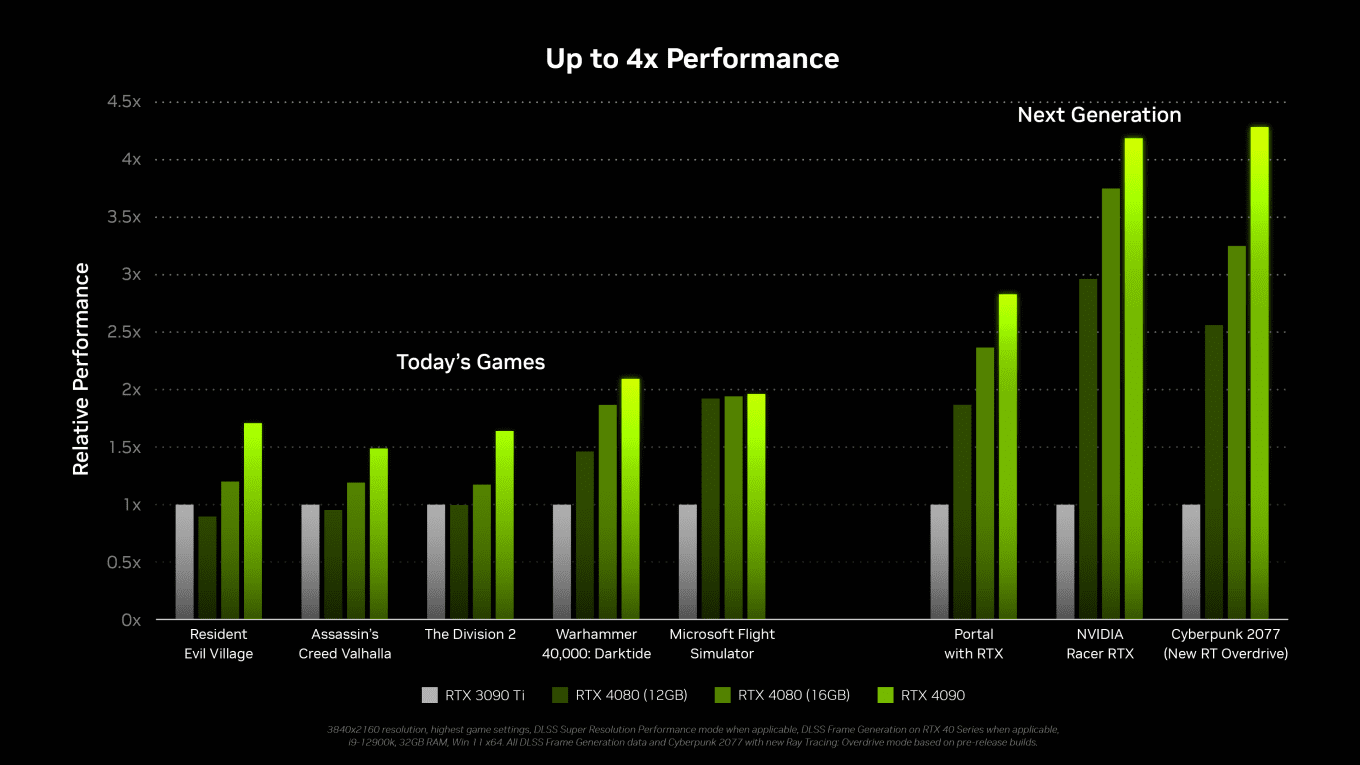

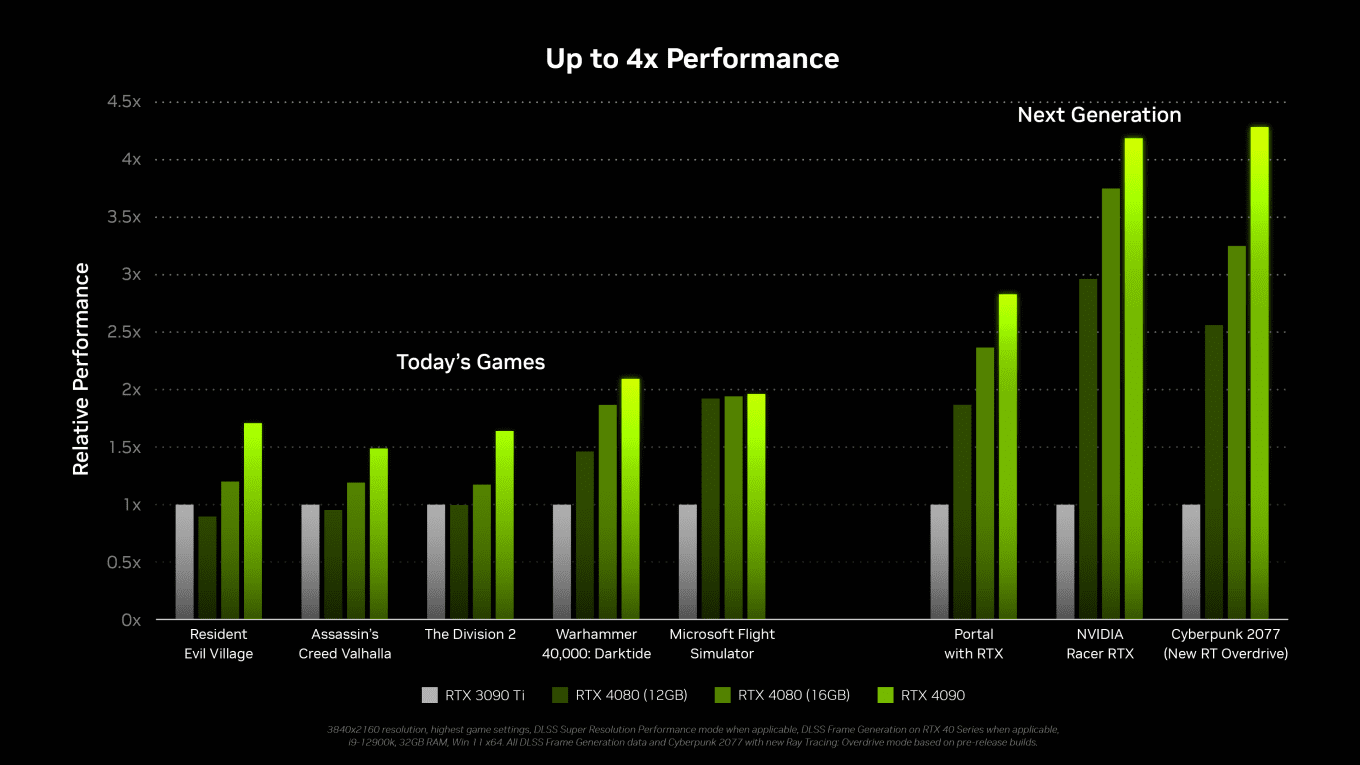

Talking about Spider-Man: In the new DLSS 3 video the 4090 gets over 110 FPS in 4K with Raytracing. A 3090TI is around 65. With over 100 FPS the 4090 should be CPU limited.

Without knowing tested settings you can't compare the two.

Jensen Confirms: NVLink Support in Ada Lovelace is Gone

Wait, so he said on the call that Ada has Gen5 but it only has Gen4? Just clarifying.

It has a Gen5 power connector, but the GPU only supports Gen4. The Gen5 connector is part of the ATX 3.0 spec too.

Ok, but he's speaking about Gen5 bandwidth, not power, in relation to why NVLink was removed.

New info about the FE: https://www.techpowerup.com/299096/...-cooler-pcb-design-new-power-spike-management

Flappy Pannus

Veteran

I do not choose my platform - gamers do.

Let's put the old misunderstandings to rest, please. It really was just that - all the time.

My prediction is that the PC arrives at APUs in general. Steam Deck, Rembrandt etc. are the first signs, but it will come to desktop as well.

NV looks like they are already preparing for this. Their consumer focus seems to be much more on cloud gaming and content creation. At least that's how it looks to me.

But that's just me, and we shall see.

I think it's too early, and conditions right now are too unusual, to make the argument that Nvidia is doing this as some sort of prep for an Intel/AMD x86 APU-dominated future. Nvidia is stuffing their AI tech into 'consumer' GPUs because that's where the bulk of their R&D has been going, and it's been going there because the datacenter is where they've made steady, massive increases in revenue over the years. It only makes sense that they leverage that expertise. The writing has also been on the wall for a while that we're running into physical process limits; brute force just can't be done every 2 years, and we see that right now with the near non-existent improvement in memory bandwidth. Got to work smarter. Whether DLSS3 and techniques like it are really the solution, it's too early to say.

But I certainly have been hoping for x86 APUs to step into this vacuum of the low/low-mid tier for a while now, without success. It seemed we were definitely on that path with Hades Canyon/Kaby Lake G; while a trial balloon, it signified to me that this would eventually be the low/midrange gaming PC market - a multi-package CPU with a GPU + a decent amount of HBM. Obviously, that didn't pan out. The big bottleneck is always memory cost. HBM was hoped to be the savior for this area but it's never been cost effective, and the ~32GB of GDDR6 you'd need in a modern APU system is certainly not cost effective either, never mind that it might be detrimental to CPU performance due to latency issues.

The Steam Deck certainly is the most successful PC APU implementation yet, but it sidesteps the big problem with desktop-class APUs: memory bandwidth. It's just LPDDR5. That's fine for a 720p display, not so for 4K. So we really haven't advanced in this area that much; laptops still use discrete GPUs with GDDR6, after all.

So you can't just look at the Steam Deck and think "What if we just scaled that up" - that memory bandwidth wall is very, very tall, and so far it has proved insurmountable to climb cost-effectively for the class of APUs desktop gamers want, unless it's subsidized by the closed console model.
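To put rough numbers on that bandwidth wall, here is a minimal sketch of the usual peak-bandwidth arithmetic (bus width in bytes times transfer rate). The three configurations are commonly cited spec figures that I'm assuming for illustration; they are not from this thread, and real-world throughput will be lower than these theoretical peaks.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_mt_s: float) -> float:
    """Theoretical peak bandwidth in GB/s: bytes per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_mt_s / 1000  # MB/s -> GB/s


# Assumed, commonly cited configurations (illustrative, not taken from the thread).
configs = {
    "Steam Deck (128-bit LPDDR5-5500)": (128, 5500),
    "Dual-channel DDR5-4800 desktop": (128, 4800),
    "RTX 3070 (256-bit GDDR6 @ 14 Gbps)": (256, 14000),
}

bandwidths = {name: peak_bandwidth_gb_s(*cfg) for name, cfg in configs.items()}

for name, gb_s in bandwidths.items():
    print(f"{name}: {gb_s:.1f} GB/s")
```

Under these assumptions the shared system memory of an APU sits at roughly a fifth of what a midrange discrete card gets from GDDR6, and the APU's CPU cores have to share it too.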


I'm not negative - I'm sad.

PC is my personal platform of choice, and until there is some open alternative, it will remain that.

There's absolutely no reason to be sad at all. These high-end RTX 4000 GPUs aren't something you need right now to be able to enjoy PC gaming. The RTX 3070, 3080 and RX 6800 all offer quite nice value. These Ada GPUs will come down in price sometime.

No, sadly not.

I want a Rembrandt laptop, because that's almost a Series X/S. I'm sure that's enough specs for 60 fps next-gen games. It's the first proper APU to play games.

Now go and try to find a laptop with a 6800U.

There are a few, but ALL of them also have a dGPU, e.g. a 3060M.

Why should I pay for a dGPU which is only slightly more powerful than the built-in iGPU?

This is just stupid. And this is what makes me sad about the PC platform. They fail to offer attractive products in general. It's not just NV which feels completely out of this world.

Wouldn't know why you'd be so sad about it, really. I've had a 5800H/3070M combo for 1.5 years now. It's generally faster than the PS5 and is very close to a 3060 Ti dGPU system. In comparison to an equivalent desktop setup, it doesn't draw much power either, probably below what a PS5 does as well. I wouldn't want it to be an APU configuration either, as that has its downsides too. The PS5, a 5700 XT/3700X (2019 mid-range) setup for 550 euros and up, with the more expensive games, subscriptions etc., isn't that great a value either, to me.

People blame NV for being greedy, or the current crisis, the economy, Putin, whatever.

But those are not the main reasons GPUs are too expensive.

The main reason is: People fail to lower their expectations.

They cannot accept that tech progress at some point has to slow down, that Moore's Law has an end.

We are all guilty. Devs were lazy and based their progress on the next-generation HW improvements. Gamers took the bait of an ever-increasing realism promise, e.g. path traced games.

And now we see it does not work, and point fingers at everybody else. Just not at ourselves, the true origin of the failure.

So it will take a whole lot of time until we seriously realize: the APU is the saviour of gaming.

But after that, it will be all fine.

The same applies to humanity as a whole, btw. If we fail to replace envy with simple needs, we will all die.

NV is greedy just like any other company that hunts for profit.

It's that tech is generally moving in another direction, towards ray tracing and AI/ML, while still improving in normal rasterization. Again, this is the same 'problem' in the console space: the smallest leap ever in raw raster, but they missed the boat on RT and AI. Not that good of a proposition either. All the while things are getting more expensive.

Wouldn't know why an APU would be the saviour of all this. It's not really welcomed in the PC space as a replacement for everything to begin with, but rather as a good addition to it.

Flappy Pannus

Veteran

I think it has to do with optical flow frame generation

Artifacts appear on exactly one frame, the frame which was generated by DLSS3.

You can see for yourself. In YouTube, you can move the video one frame forward or backward (keys , and . on your keyboard). Timecode 1:33

I noticed that as well.

Before this announcement, my hope for the rumored 'DLSS3' was that it would have a reduced cost (to the point where the performance cost over its native starting res would be barely detectable), and some way to improve quality - for example, a way to mitigate the low resolution of RT reflections with it enabled - and also handle edge cases like how it can break down with lower-resolution buffers and the like.

I certainly did not expect we'd get a new mode that could potentially add more artifacts. We'll see with more detailed analysis and without YouTube compression, but damn, those really stood out to me too.

Looks like today was not only the architecture day for the press but they are free to release certain information:

www.hardwareluxx.de

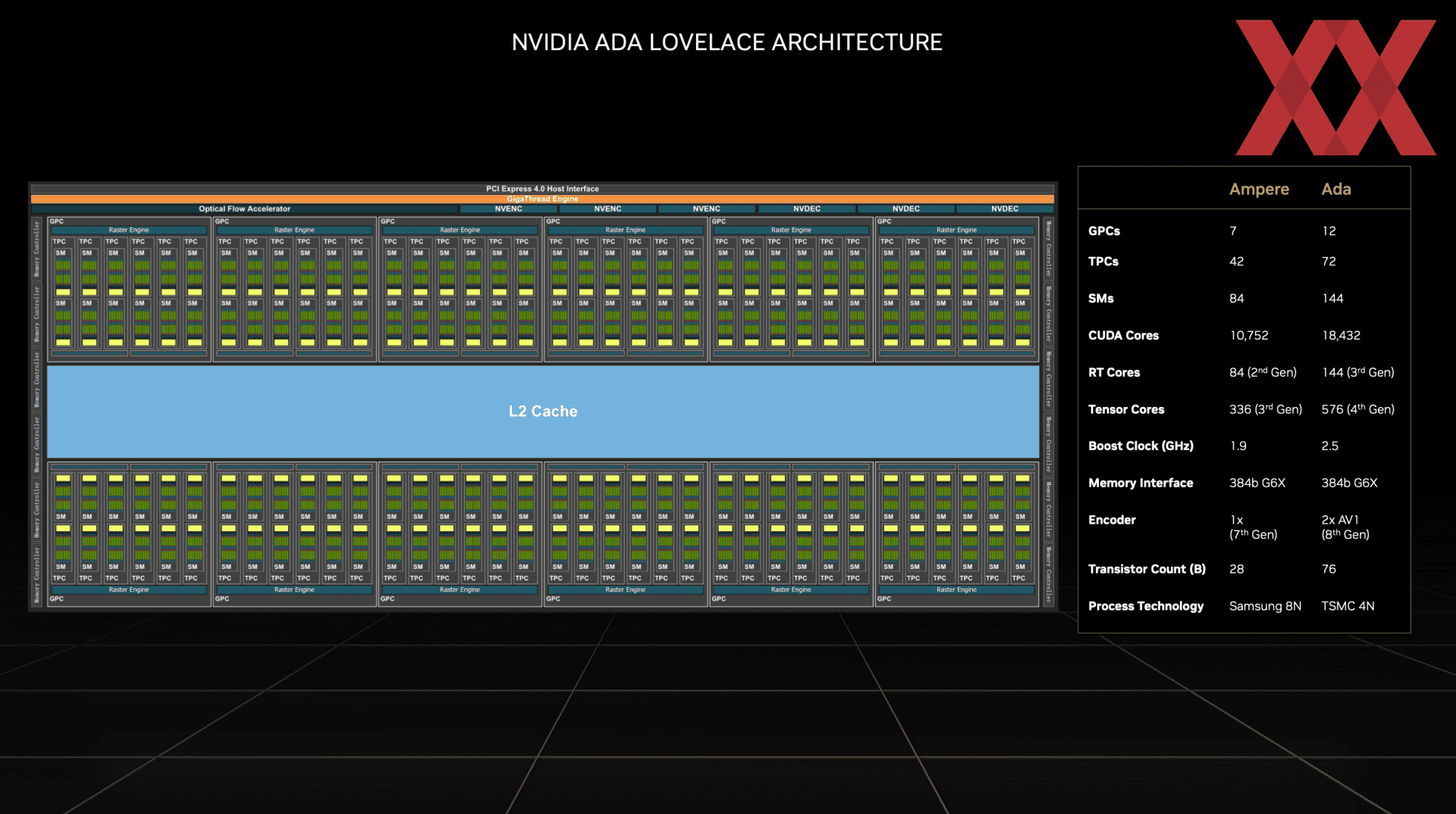

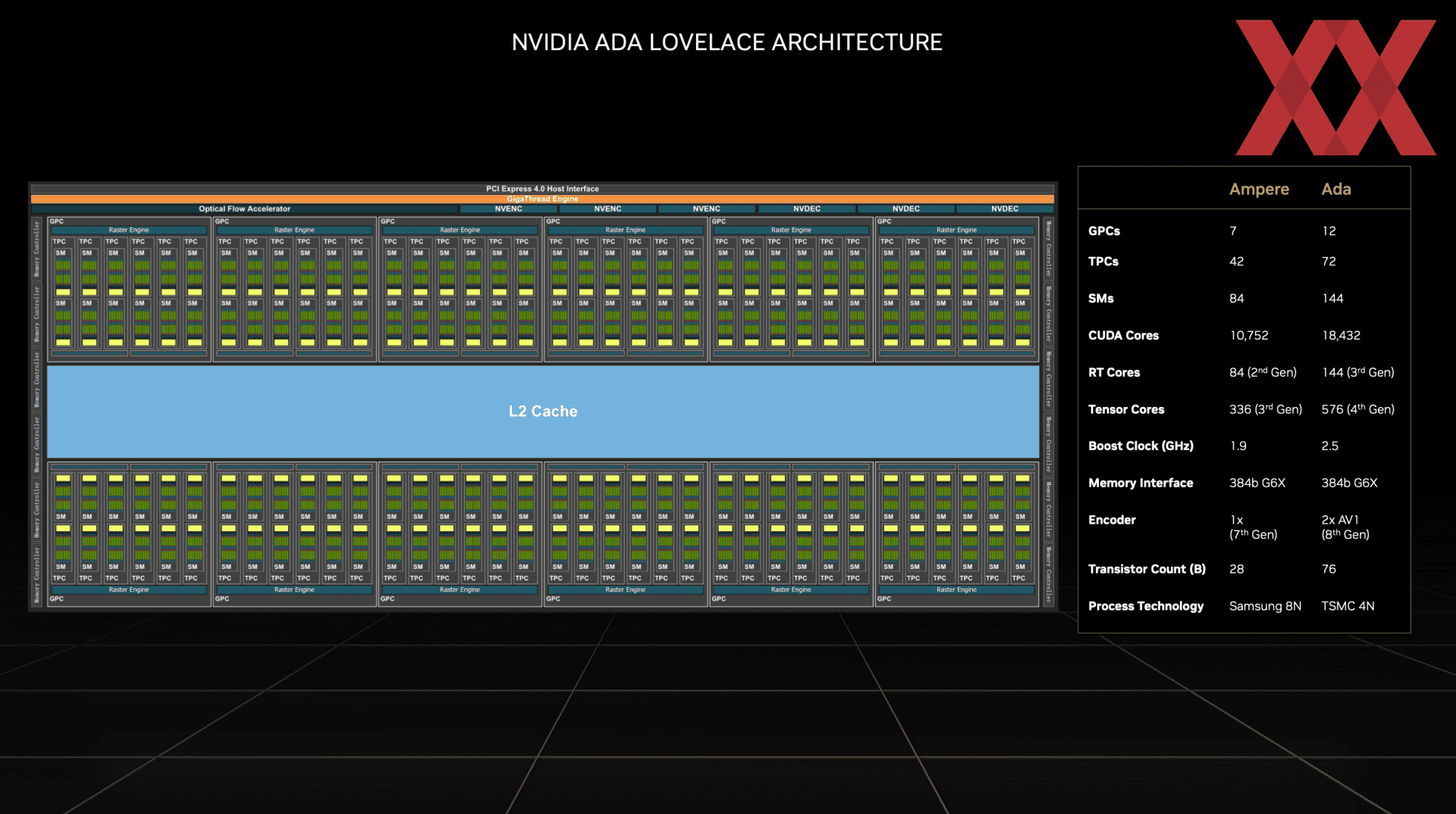

12 rasterizers, a huge L2 cache (which NVIDIA doesn't seem to care about at all), 2.5x higher geometry performance than the 3090...

NVIDIA presents the first models of the GeForce RTX 40 series (Update) - Hardwareluxx

davis.anthony

Veteran

I was hoping for a DLSS that operates within the ray tracing itself... like how Insomniac used checkerboarding on the reflections in Ratchet & Clank, but using DLSS instead for a bigger quality increase.

arandomguy

Veteran

They could have used wider buses on 104 and 103 chips.

Product stack, market segmentation, costs, VRAM capacity (as in relatively too high from a business standpoint) and mobile considerations.

davis.anthony

Veteran

There's a massive gap in shaders between the 4080 and 4090 (9,728 vs 16,384), so there's a nice gap for a future 4080 Ti.

Could have 100 SMs?
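For reference, the shader counts above map onto SM counts as follows. This is a quick sketch that assumes 128 FP32 shader cores per Ada SM (from Nvidia's published specs, not stated in this thread); the 100-SM part is purely the hypothetical floated above, not an announced product.

```python
CORES_PER_SM = 128  # assumption: FP32 shader (CUDA) cores per Ada SM


def sm_count(shader_cores: int) -> int:
    """Convert a shader core count into a number of SMs."""
    sms, remainder = divmod(shader_cores, CORES_PER_SM)
    if remainder:
        raise ValueError(f"{shader_cores} is not a multiple of {CORES_PER_SM}")
    return sms


rtx_4080_sms = sm_count(9728)
rtx_4090_sms = sm_count(16384)

# Hypothetical 100-SM card sitting between the two.
hypothetical_sms = 100
hypothetical_cores = hypothetical_sms * CORES_PER_SM

print(f"4080: {rtx_4080_sms} SMs, 4090: {rtx_4090_sms} SMs")
print(f"Hypothetical {hypothetical_sms}-SM card: {hypothetical_cores} shader cores")
```

So under that assumption the 4080 has 76 SMs and the 4090 has 128, and a 100-SM card would land at 12,800 shaders, a little under the midpoint between the two.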

DegustatoR

Legend

There is a huge gap between AD103 and AD102 so none of these are exactly a big issue.

The only issue which would be valid is their aim at making the chips as small as possible - to the point where a wider bus may be problematic to implement (without going the MCM route at least).

So I've just found out these things are over 20% more expensive in Europe too. There goes any tiny temptation I may have had to just screw it and get one.

Around 1500 euros for the 4080 and 2 grand for the 4090. But add another hundred or two for custom cards; these are Nvidia's suggested prices for now in Europe.

I'm much more worried about the artifacts. Here's a 4-frame sequence from Digital Foundry's (NV controlled) preview.

Can you guess which frames are scaled and which are generated?

It looks like they've refurbished the 3Dfx T-Buffer Motion Blur effect.

arandomguy

Veteran

There is a huge gap between AD103 and AD102 so none of these are exactly a big issue.

The only issue which would be valid is their aim at making the chips as small as possible - to the point where a wider bus may be problematic to implement (without going the MCM route at least).

If AD103 is going into laptops it's basically limited to 256-bit. Also, sometimes these specs need to play to buyer expectations. If AD102 has no memory advantages over AD103, then they need a way to sell it as such.

They're partially segmenting by VRAM. Nvidia has considerations when designing for creation-type workloads, and VRAM capacity segmentation is a much more significant factor even at 12GB and higher capacities.

As an aside, even for gaming both sides seem like they are going to heavily segment in this area by keeping mainstream at <12GB.

DRAM prices were likely at a much higher point in the cycle when these were planned out as well, whereas now, due to a combination of macro factors, DRAM prices have likely crashed much lower than I'd guess most people predicted.
