Ideas for new ways NVidia could use tensor cores:

- Frame doubler, using AI-driven interpolation. Many games use a G-buffer, often with associated motion vectors. I presume that, in general, the G-buffer is complete less than halfway through the time it takes to render a frame. This would let an inference engine take two frames' G-buffers, motion vectors (if available) and render targets and interpolate a third frame (frame 3 in the list below) while the fourth frame is still being shaded, turning 60fps rendering into 120fps display:

  - 8.33ms: frame 1, inferenced G-buffer, motion vectors and render target

  - 16.67ms: frame 2, real G-buffer, motion vectors and render target

  - 25.00ms: frame 3, inferenced G-buffer, motion vectors and render target, generated while frame 4 is being shaded

  - 33.33ms: frame 4, real G-buffer, motion vectors and render target

- Ray-generation optimiser. Generating the best rays to trace seems to be a black art, and it's unclear to me how developers tune their algorithms to do it. My guess is that AI inferencing, fed with the denoiser input and some ray-generation statistics, could steer the next frame's ray generation towards a higher ray count per pixel where quality matters most. This would enable faster stabilisation for algorithms such as ambient occlusion or reflections, where disocclusion and rapid panning cause highly visible artefacts due to a low ray count per pixel.
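For the frame doubler, here is a rough, non-AI stand-in for the interpolation step: classical motion-compensated interpolation, which synthesises the frame halfway between two rendered frames by stepping half a motion vector back into the earlier frame and half forward into the later one, then blending. This is a swapped-in technique, not the inference approach proposed above. The function names, the grayscale list-of-lists image format and the motion-vector convention (per-pixel displacement in pixels from frame A to frame B, read at the output pixel) are all assumptions for illustration.

```python
import math
from typing import List, Tuple

Image = List[List[float]]                      # grayscale frame, indexed [y][x]
MotionField = List[List[Tuple[float, float]]]  # assumed per-pixel (dx, dy) from A to B, in pixels


def sample_nearest(img: Image, x: float, y: float) -> float:
    """Nearest-neighbour lookup with clamp-to-edge addressing."""
    h, w = len(img), len(img[0])
    xi = min(max(int(math.floor(x + 0.5)), 0), w - 1)
    yi = min(max(int(math.floor(y + 0.5)), 0), h - 1)
    return img[yi][xi]


def interpolate_midpoint(frame_a: Image, frame_b: Image, motion: MotionField) -> Image:
    """Synthesise the frame halfway in time between frame_a and frame_b.

    Simplification: the motion vector is read at the output pixel, which
    assumes a smooth motion field. Disoccluded pixels (visible in only one
    of the two frames) are handled badly by this scheme.
    """
    h, w = len(frame_a), len(frame_a[0])
    out: Image = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = motion[y][x]
            # Half a step back into A, half a step forward into B.
            a = sample_nearest(frame_a, x - 0.5 * dx, y - 0.5 * dy)
            b = sample_nearest(frame_b, x + 0.5 * dx, y + 0.5 * dy)
            out[y][x] = 0.5 * (a + b)  # equal-weight blend
    return out


if __name__ == "__main__":
    # A one-row "scene": a bright pixel moves from x=2 to x=6 between frames.
    frame_a = [[0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0]]
    frame_b = [[0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0]]
    motion = [[(4.0, 0.0)] * 8]
    print(interpolate_midpoint(frame_a, frame_b, motion))
```

In the example the bright pixel lands at x=4, halfway along its path. The weak spot is exactly where the post hopes inference would help: pixels that are disoccluded between the two frames have no valid sample in one of them, so a fixed warp-and-blend produces ghosting there.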
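The ray-generation optimiser boils down to redistributing a fixed ray budget towards the pixels that need it most. The sketch below replaces the proposed AI inference with a hand-written, hypothetical importance map (denoiser-input variance, boosted where a pixel was disoccluded), then splits the budget in proportion to it. `importance_map`, `allocate_rays` and `disocclusion_boost` are illustrative names, not any real API; a trained network would take the place of `importance_map`.

```python
import math
from typing import List, Sequence


def importance_map(variance: Sequence[float], disoccluded: Sequence[bool],
                   disocclusion_boost: float = 4.0) -> List[float]:
    """Hypothetical stand-in for an inferred importance map: per-pixel
    variance from the denoiser input, scaled up where the pixel has no
    usable history (disoccluded this frame). Assumes variance >= 0."""
    return [v * (disocclusion_boost if d else 1.0)
            for v, d in zip(variance, disoccluded)]


def allocate_rays(importance: Sequence[float], total_rays: int,
                  min_rays: int = 1) -> List[int]:
    """Split a fixed ray budget across pixels in proportion to importance.

    Every pixel gets at least min_rays; the remaining budget is shared
    proportionally and rounded with the largest-remainder method, so the
    counts sum to exactly total_rays.
    """
    n = len(importance)
    if n == 0:
        return []
    if total_rays < n * min_rays:
        raise ValueError("budget too small for the per-pixel minimum")
    spare = total_rays - n * min_rays
    weight_sum = sum(importance)
    if weight_sum > 0:
        shares = [spare * w / weight_sum for w in importance]
    else:
        shares = [spare / n] * n  # no signal anywhere: spread evenly
    counts = [min_rays + math.floor(s) for s in shares]
    leftover = total_rays - sum(counts)
    # Give the rays lost to rounding to the pixels with the largest remainders.
    by_remainder = sorted(range(n), key=lambda i: shares[i] - math.floor(shares[i]),
                          reverse=True)
    for i in by_remainder[:leftover]:
        counts[i] += 1
    return counts


if __name__ == "__main__":
    weights = importance_map([0.1, 0.1, 0.1, 0.1], [False, False, True, False])
    print(allocate_rays(weights, total_rays=16))
```

Keeping a per-pixel minimum means no pixel is starved entirely, while the disoccluded pixel receives the largest share, which is the "faster stabilisation" effect the idea is after.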

I'm going to guess that these ideas would use a lot of memory. I get the sense that the next generation is going to be more generous with memory, so that shouldn't be a problem.
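To put a rough number on "a lot of memory" for the frame doubler, here is a back-of-envelope estimate for holding three full frames at 4K (frames 2 and 4 real, frame 3 inferred, each with G-buffer, motion vectors and render target, as in the timeline above). The surface list and formats are assumptions chosen for illustration (four RGBA16F G-buffer targets, 32-bit depth, RG16F motion vectors, RGBA16F lit colour: 48 bytes per pixel); real engines vary widely, and this ignores whatever the inference network itself needs.

```python
from typing import Dict

WIDTH, HEIGHT = 3840, 2160  # 4K output resolution

# Bytes per pixel for each surface (hypothetical layout).
SURFACES: Dict[str, int] = {
    "gbuffer_rt0_rgba16f": 8,
    "gbuffer_rt1_rgba16f": 8,
    "gbuffer_rt2_rgba16f": 8,
    "gbuffer_rt3_rgba16f": 8,
    "depth_d32f": 4,
    "motion_vectors_rg16f": 4,
    "lit_colour_rgba16f": 8,
}

FRAMES_HELD = 3  # two real frames plus one inferred frame


def frame_bytes(width: int, height: int, surfaces: Dict[str, int]) -> int:
    """Memory for one frame's worth of full-resolution surfaces."""
    return width * height * sum(surfaces.values())


per_frame = frame_bytes(WIDTH, HEIGHT, SURFACES)
total = per_frame * FRAMES_HELD
print(f"per frame: {per_frame / 2**20:.1f} MiB")  # 379.7 MiB
print(f"total:     {total / 2**30:.2f} GiB")      # 1.11 GiB
```

Roughly a gigabyte on top of a game's existing footprint under these assumptions, which is why more generous memory on the next generation would matter.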

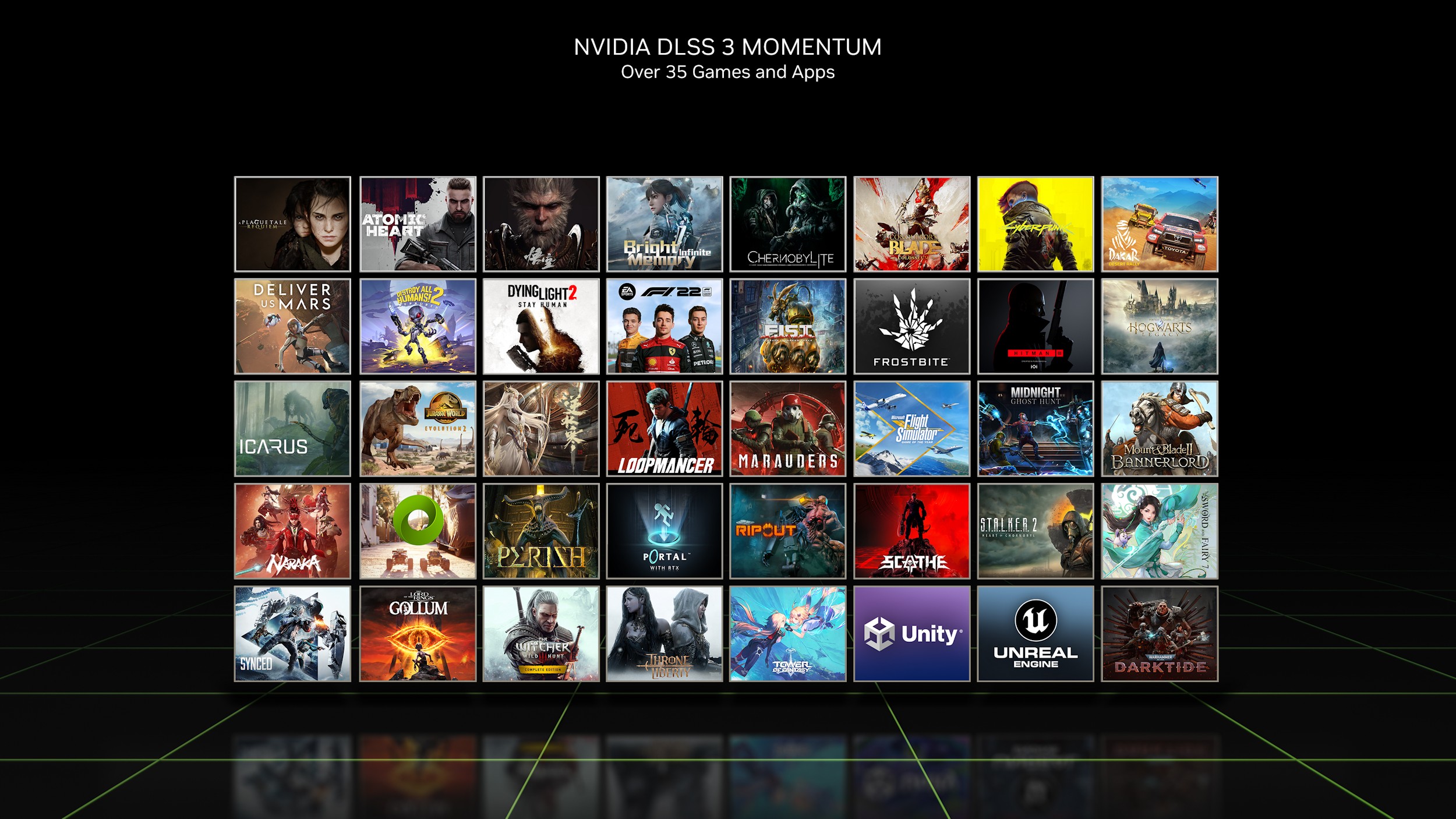

I'm expecting these kinds of ideas to make use of the DLL-driven plug-in architecture we've seen with DLSS, exposed by developers as new options in graphics settings menus.

How much of this kind of stuff can be back-ported to Turing or Ampere? What would make these features uniquely available on Ada?