anexanhume

Veteran

Would a wider bus reduce the disproportionate bandwidth contention, though?

Running at 60 fps doubles bandwidth use compared to 30 fps, while the physical bandwidth stays constant.

That being said, the CPU is hitting memory twice as hard, gutting the bandwidth available to the GPU.

*correct me if wrong*

Say the CPU normally takes up 20 GB/s at 30 fps, and the bandwidth available to the GPU is then, say, 120 GB/s.

But at 60 fps the CPU is going to take 40 GB/s, so the bandwidth available to the GPU will now be < 100 GB/s.
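To make the arithmetic above concrete, here is a rough sketch in Python. The function name and the `penalty` factor are hypothetical, not taken from any document: `penalty` just stands in for how many GB/s the GPU loses per extra GB/s of CPU traffic, with 1.0 meaning a one-for-one trade and anything above 1.0 standing for the "disproportionate" contention being asked about. The 1.5 used below is an arbitrary illustration, not a measured figure.

```python
def gpu_bandwidth_after(gpu_before, cpu_before, cpu_after, penalty=1.0):
    """Estimate GPU bandwidth (GB/s) after CPU traffic changes.

    penalty is a hypothetical factor: GB/s lost by the GPU for each
    additional GB/s the CPU consumes. 1.0 is a plain one-for-one trade.
    """
    extra_cpu = cpu_after - cpu_before
    return gpu_before - extra_cpu * penalty


# Numbers from the post: CPU 20 GB/s and GPU 120 GB/s at 30 fps,
# CPU rising to 40 GB/s at 60 fps.
one_for_one = gpu_bandwidth_after(120.0, 20.0, 40.0)          # 100.0
with_penalty = gpu_bandwidth_after(120.0, 20.0, 40.0, 1.5)    # 90.0 (arbitrary penalty)

print(one_for_one, with_penalty)
```

With a one-for-one trade the GPU lands at exactly 100 GB/s; any penalty above 1.0 pushes it under 100, which is the "< 100" case in the post.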

If the goal is high framerate support for next gen, will bus width be a factor?

edit:

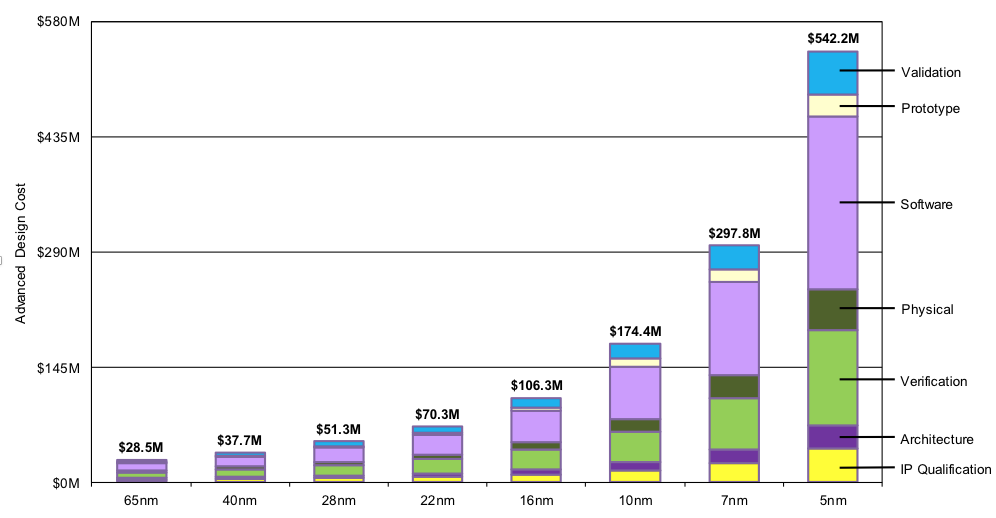

wrt this old document

Do you have the source of this document? I’ve never seen that graphic before.