A 4060 would be somewhere in RTX 3080 range, which is 2020 high-end, close to enthusiast-level hardware.

It's expected that the next gen is faster than the previous gen. What's new is that the price now goes up linearly with performance. Power draw goes up the same way, so you pay even more.

Prices of electricity, fuel and food are going up too, so people aim to pay less for their hobby, not more.

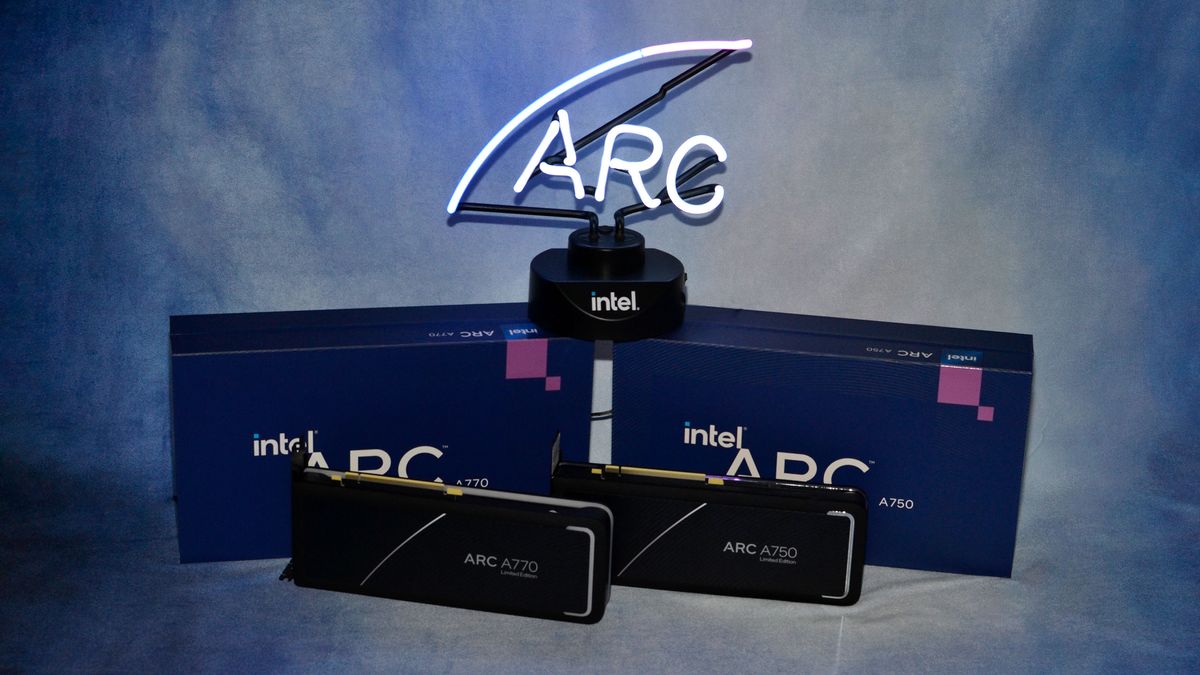

NV ignores this completely. Intel reacts as desired, but people are quite unsure about the risk of investing in a newcomer.

Which means there is no really good offer for many of them. After years of chip shortages and moon prices, there is still no offer.

That's the situation. And it really is easy to understand why people are disappointed and feel abandoned by an industry they have supported with their money over many years.

Regarding RT and ML, I feel like the resistance has settled. Initially many disliked it, but to me it seems they have changed their minds.

I don't think it's about the technology, or whether people think it's needed or not. It really is just about the pricing.

The arguments I read here are inconsistent and bent into shape as needed.

If I say RT is too expensive, I hear I can get a 3060 for a good, small price. (The same price I spent on x80 models some years before.)

The same people claim that a 6700 is 'too slow' for proper raytracing, that consoles can barely do it at all, etc.

Tech journalists think RT is not relevant at the midrange level of an A770. (Quite a statement!)

That's confusing, but the final conclusion is that RT is a high-end feature, midrange is not good enough, and entry level is still not even a topic.

At the same time, Jensen himself confirms GPU prices won't go down anymore, so they will only go up further, while he trumpets the newest ML and RT features for those who can pay a premium.

Connecting all those dots, I really ask how any of you can seriously wonder why anti-RT echo chambers form, or why people like me dare to doubt the shiny new god you declare to be the future.

Because if this is the only future, then mainstream gaming is literally declared to die out rather quickly. Am I wrong? It does not matter, because that's what many people think and experience.

Now you may respond with 'RT is optional. You do not need to have it.'

But at the same time, you demand and predict that games should and will finally be built entirely on top of RT, and that the dark past of affordable gaming should be buried ASAP.

Which means: some day soon I will have to have it, but by then it will be even less affordable. So I will have to quit, even if I don't want to.

And that's not all.

We have had the same problems with the games themselves for years, and they are growing. Prices go up from 60 to 70 to 80 bucks, while people are more and more disappointed with the content: always the same recipes and franchises, released years before they're finished, remakes of a better past, always-online requirements for single-player games, MTX and loot boxes, etc., you name it. Political things aside.

It feels like the gaming industry is at an all-time low in creativity, and devs do not enjoy making games. They have to crunch, get the lowest pay, suffer harassment at work, management only cares about money, etc.

That's the current public impression, as we hear it. And no blindfold is big enough to let us ignore this.

I think we have to figure out what people want, not what we want. We have to make better offers.

Regarding HW, Intel shows the way. It's the first time RT feels 'in reach' for real.