> Is there a large jump in wafer pricing when moving from N6 to N5?

Yep, N6 is 7"nm" family, N5 is 5"nm" family.

Intel ARC GPUs, Xe Architecture for dGPUs [2018-2022]

- Thread starter: DavidGraham

- Tags: intel

- Status: Not open for further replies.

Bondrewd

Veteran

> Is there a large jump in wafer pricing when moving from N6 to N5?

Yea.

Flappy Pannus

Veteran

There will be an 8GB and a 16GB version. If the 16GB version was $329, then it doesn't make sense to use the "Starting at" framing when that's the top tier in that class. They would more likely have said "Starting at sub $300" for the 8GB version if the 16GB was $329.

Confirmed.

Arstechnica said: After announcing a $329 price for its A770 GPU earlier this week, Intel clarified that the company would launch three A700 series products on October 12: The aforementioned Arc A770 for $329, which sports 8GB of GDDR6 memory

So what I expected. However I'm only 'correct' in the most pedantic technical way:

Arstechnica said: an additional Arc A770 Limited Edition for $349, which jumps up to 16GB of GDDR6 at slightly higher memory bandwidth and otherwise sports identical specs

That's an extremely meagre upsell for 8 more gigs, and you get a bit more bandwidth too. The 8GB model really doesn't make much sense by comparison, so this is clearly the model Intel wants to put out there as the basis for reviews imo.

Arstechnica said: and the slightly weaker A750 Limited Edition for $289. If you missed the memo on that sub-$300 GPU when it was previously announced, the A750 LE is essentially a binned version of the A770's chipset with 87.5 percent of the shading units and ray tracing (RT) units turned on, along with an ever-so-slightly downclocked boost clock (2.05 GHz, compared to 2.1 GHz on both A770 models).

Full specs below. 512 GB/sec for under $300 is pretty awesome. Hell they're even maintaining that with the A5, although no price details yet.

Hope to eventually dispose of the 'if the drivers don't suck' disclaimer sometime in the near future though, but alas.

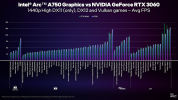

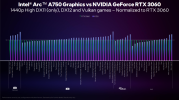

As for performance, with Intel's own slide comparing the A750 against a 3060, it's pretty simple to extrapolate to the A770, considering its small uplift in specs over the A750 in the chart. So the A770 LE will definitely not compete with the 3060 Ti anytime soon, and will likely still fall below the 3060 in DX11 titles. So give and take: it's not going to significantly shake up the market at this point, but if they can truly offer it at the MSRP and get the drivers in order, it has a chance. Pretty significant 'ifs' though.
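For anyone who wants the extrapolation spelled out, here is a rough sketch using only the two figures from the Ars Technica excerpt above (87.5 percent of the units, 2.05 GHz vs 2.1 GHz boost). The assumption that throughput scales linearly with units times clock is mine, not Intel's, and it says nothing about measured game performance or drivers.

```python
# Figures quoted in the Ars Technica excerpt; the linear scaling model
# (units x clock) is an illustrative assumption, not a benchmark.
A750_UNIT_FRACTION = 0.875  # share of A770 shading/RT units enabled on the A750
A750_BOOST_GHZ = 2.05
A770_BOOST_GHZ = 2.10


def relative_throughput(unit_fraction: float, clock_ghz: float, ref_clock_ghz: float) -> float:
    """Theoretical shader throughput relative to the reference part."""
    return unit_fraction * clock_ghz / ref_clock_ghz


a750_vs_a770 = relative_throughput(A750_UNIT_FRACTION, A750_BOOST_GHZ, A770_BOOST_GHZ)
a770_uplift_pct = (1 / a750_vs_a770 - 1) * 100

print(f"A750 relative to A770: {a750_vs_a770:.3f}")
print(f"A770 theoretical uplift over A750: {a770_uplift_pct:.1f}%")
```

On paper that works out to roughly a 17% gap between the two cards, which is the "small uplift" being extrapolated from.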


> an additional Arc A770 Limited Edition for $349, which jumps up to 16GB of GDDR6 at slightly higher memory bandwidth and otherwise sports identical specs

This is like Christmas. A 20TF GPU with 16GB and all the bells and whistles for $350 in 2022. Hard to believe.

Soon I shall see how brand loyal (aka stupid) gamers actually are...

> I never care, but iirc it's ahead of even RTX4xxx because of AV1:

RTX 40 supports AV1 encoding and decoding in hardware, just like Arc, and RDNA3 has been confirmed (Linux patches) to support both too.

> RTX 40 supports AV1 encoding and decoding in hardware, just like Arc, and RDNA3 has been confirmed (Linux patches) to support both too.

Oh, sorry.

But who cares about 'Screamers'

(typo intended, this time)

> Oh, sorry.
>
> But who cares about 'Screamers'
>
> (typo intended, this time)

It's good for future-proofing, creators, etc.

TechPowerUp's site implies the A770 in its 8GB variant should be around 20% faster than my current 2080 Ti. The 16GB version has double the memory and an additional 50 GB/s or so of bandwidth. TDP of just 225 watts?

Intel Arc A770 Specs

Intel DG2-512, 2400 MHz, 4096 Cores, 256 TMUs, 128 ROPs, 16384 MB GDDR6, 2000 MHz, 256 bit
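As a sanity check on the "20TF" and bandwidth figures in this thread, here is a quick back-of-the-envelope sketch built from the spec line above (4096 cores, 2400 MHz, 256 bit). The two-FLOPs-per-core-per-clock factor and the per-pin GDDR6 data rates (16 and 17.5 Gbps) are my assumptions and are not stated in the thread; these are paper numbers, not measured performance.

```python
# Values taken from the TechPowerUp spec line above.
SHADER_CORES = 4096
GPU_CLOCK_MHZ = 2400
BUS_WIDTH_BITS = 256

# Assumptions, not stated in the thread:
FLOPS_PER_CORE_PER_CLOCK = 2   # one fused multiply-add counted as two FP32 ops
DATA_RATE_GBPS_8GB = 16.0      # assumed per-pin GDDR6 data rate, 8GB model
DATA_RATE_GBPS_16GB = 17.5     # assumed per-pin GDDR6 data rate, 16GB model


def fp32_tflops(cores: int, clock_mhz: float, flops_per_clock: int) -> float:
    """Peak theoretical FP32 throughput in TFLOPS."""
    return cores * clock_mhz * 1e6 * flops_per_clock / 1e12


def bandwidth_gb_per_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak theoretical memory bandwidth in GB/s (bits per transfer / 8 x rate)."""
    return bus_width_bits * data_rate_gbps / 8


tflops = fp32_tflops(SHADER_CORES, GPU_CLOCK_MHZ, FLOPS_PER_CORE_PER_CLOCK)
bw_8gb = bandwidth_gb_per_s(BUS_WIDTH_BITS, DATA_RATE_GBPS_8GB)
bw_16gb = bandwidth_gb_per_s(BUS_WIDTH_BITS, DATA_RATE_GBPS_16GB)

print(f"Peak FP32: {tflops:.2f} TFLOPS")
print(f"Bandwidth: {bw_8gb:.0f} GB/s (8GB) vs {bw_16gb:.0f} GB/s (16GB), "
      f"difference {bw_16gb - bw_8gb:.0f} GB/s")
```

Under those assumptions the peak lands just under 20 TFLOPS, and the bandwidth gap between the two A770 models comes out close to the "50 GB/s or so" mentioned above.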

Spec-wise you're getting 3080 performance in raw raster with 16GB of VRAM, but with what kind of RT/ML performance? Seems a very good price, extremely good lol.

> Might get one just because. See if it's a replacement for the 2080 Ti, which I can give to a nephew.

It's probably going to be something like a 2070. In games that run well on it at least.


It would be a substantial downgrade. It's probably going to be something like a 2070. In games that run well on it at least.

Then Intel truly has to improve their drivers/optimization.

> Then Intel truly has to improve their drivers/optimization.

They are all about comparing it to a 3060, and a 2070 is similar to that.

> They are all about comparing it to a 3060, and a 2070 is similar to that.

They're also comparing the A750 to the 3060, and it's beating it on average in DX12/Vulkan.

> Might get one just because. See if it's a replacement for the 2080 Ti, which I can give to a nephew.

There's virtually no chance any of these GPUs is competing with a 2080 Ti. Their best SKUs are on the level of the 3060/2070.

> They're also comparing the A750 to the 3060, and it's beating it on average in DX12/Vulkan.

Huh, what?

DavidGraham

Veteran

> This is like Christmas. A 20TF GPU with 16GB and all the bells and whistles for $350 in 2022. Hard to believe.
>
> Soon I shall see how brand loyal (aka stupid) gamers actually are...

The only reason to buy this GPU is for novelty's sake. Other than that, you are buying subpar DX11 performance, emulated DX10/DX9/DX8 games with all the bugs and performance issues associated with emulation, and nonexistent support for anything older than DX8.

OpenGL games are a toss-up, and so are driver features. Bugs and artifacts are rampant, and worst of all, the card has an unreliable history and future: the fate of this whole Intel endeavor is under threat of ending, and then your card could be nothing more than a paperweight.
