DegustatoR

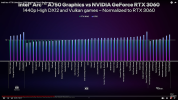

It costs the same and it's close in DX12/RT, but it will probably lose in other APIs, and that's from Intel's own benchmarks. I admit I didn't actually calculate the difference to see whether it's really faster on average, but if it isn't, it's close.

Doesn't look like a very attractive price/perf to me.