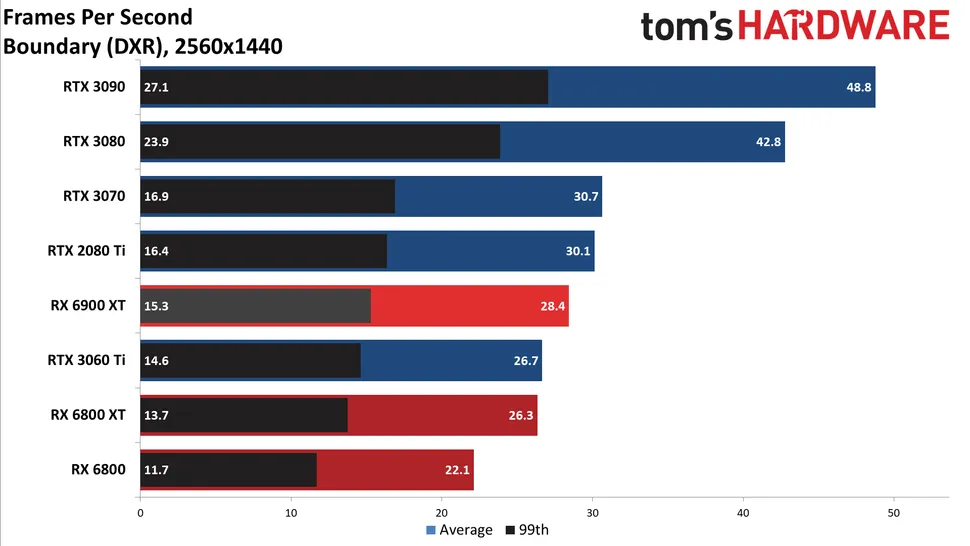

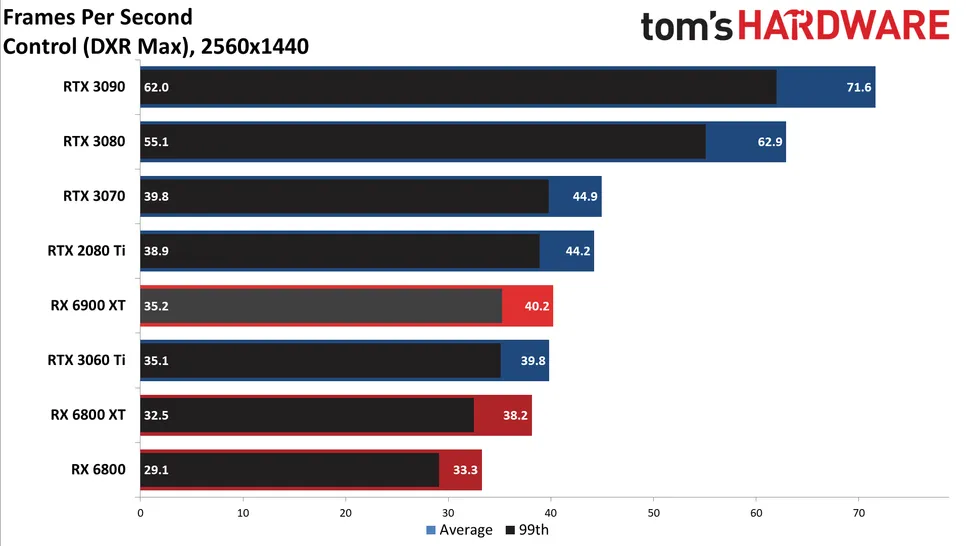

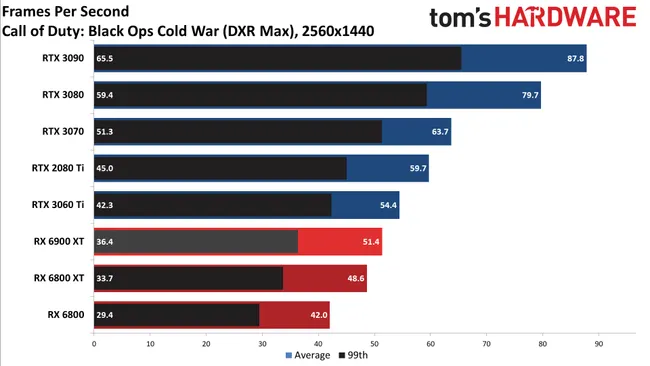

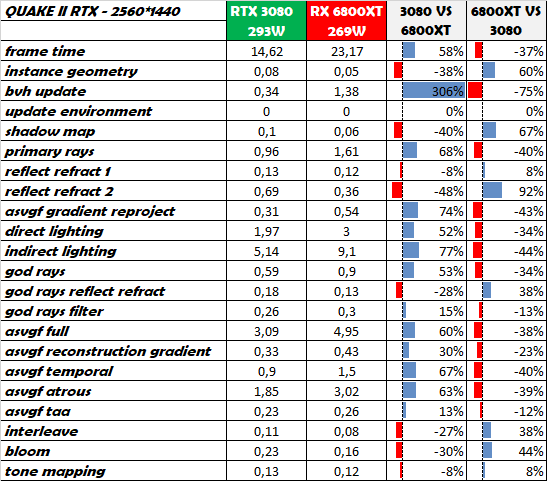

It's worth remembering that AMD, with its "slow" compute-SIMD approach, has equalled NVidia's dedicated MIMD hardware in Turing. With Ampere, NVidia gained 40% over Turing in games, but it seems likely there are no major gains to be had from "better MIMD" in Lovelace.

Are you drawing that conclusion based on mixed workloads where RT is just one consideration? For reference, the 3080 and 2080 Ti have the same number of RT “cores”, yet the former is 85% faster in OptiX.