No need for me to pick any poison; I'm going to play it on PC.

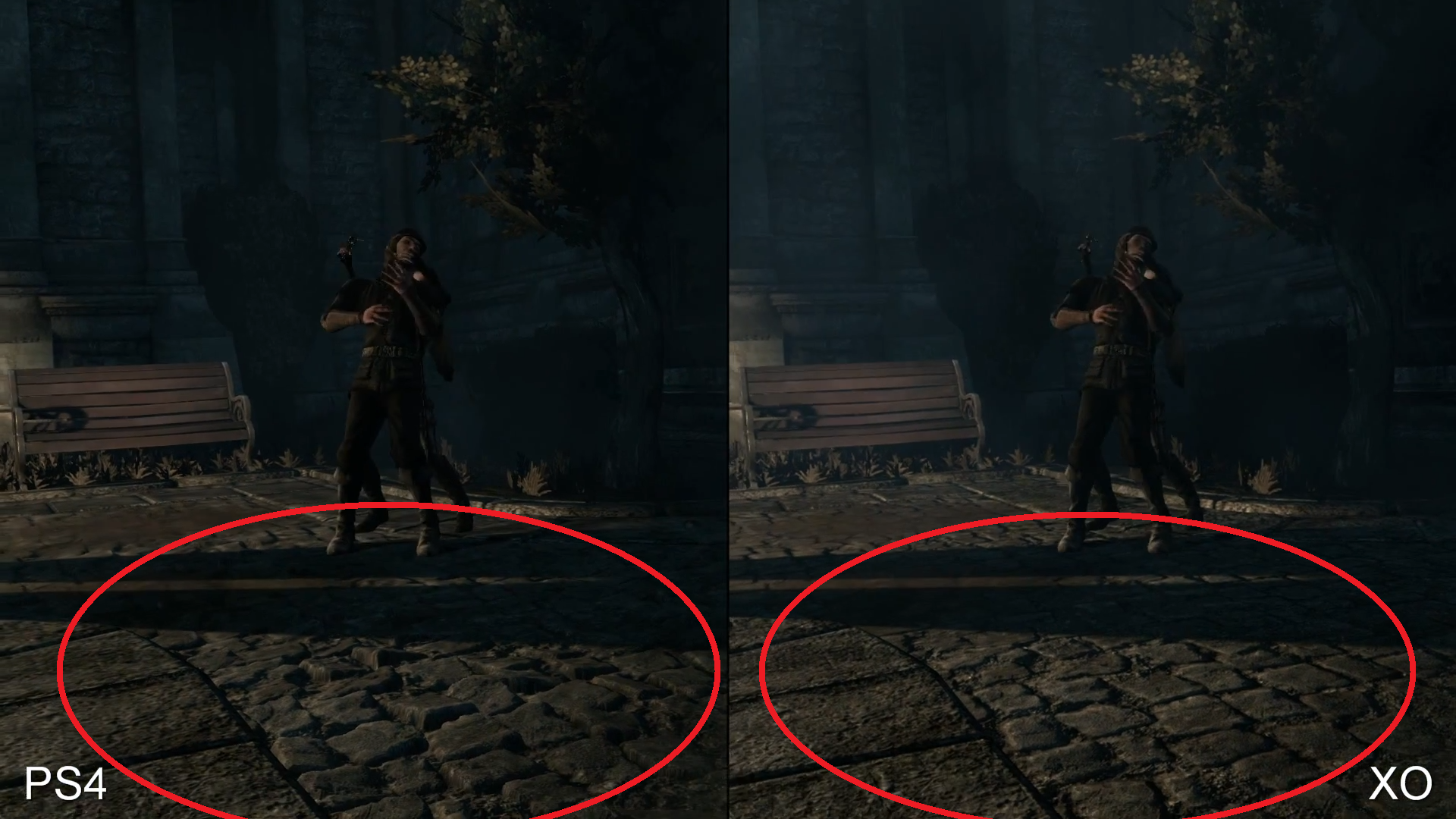

But depending on the circumstances, AF can definitely be more important than resolution. Thief's color palette suggests that aliasing won't be as much of a detriment as poor texture filtering: as a thief you'll be hugging walls and other surfaces, so you'll see a lot of textures at glancing angles, and texture detail becomes important. Specular aliasing will suck regardless of resolution until new software techniques are employed. Both console versions seem to have a crappy frame rate, so whether it's bad or badder, it's still bad. Either way, I'd expect good AF to be very important to this particular game.

It's an interesting test case because AF is handled by the texture samplers, so sacrificing some resolution (fewer pixels to sample for) can let a machine afford stronger AF. Which is better? It'll depend on the game.
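
To put rough numbers on that tradeoff, here's a back-of-the-envelope sketch. It assumes a deliberately crude worst-case model that is mine, not anything measured from Thief: every pixel samples one texture layer and takes the full N probes at Nx AF. Real hardware applies AF adaptively, so actual costs are lower and vary by scene. The function names and the resolutions compared are just illustrative.

```python
def worst_case_probes(width, height, af_level):
    """Upper bound on texture probes per frame under the crude model:
    one texture layer, every pixel at the maximum AF ratio."""
    return width * height * max(1, af_level)


def max_af_within_budget(width, height, budget, levels=(1, 2, 4, 8, 16)):
    """Highest AF level from `levels` whose worst-case probe count fits
    in `budget`, or None if even the lowest level does not fit."""
    fitting = [n for n in levels if worst_case_probes(width, height, n) <= budget]
    return max(fitting) if fitting else None


# Take 1080p at 4x AF as the (hypothetical) sampler budget.
budget = worst_case_probes(1920, 1080, 4)

for name, (w, h) in {"1080p": (1920, 1080),
                     "900p": (1600, 900),
                     "720p": (1280, 720)}.items():
    print(name, max_af_within_budget(w, h, budget))
```

Under that model, dropping from 1080p to 720p leaves room to go from 4x to 8x AF on the same sampler budget, while 900p doesn't quite free up enough for the next step. It says nothing about which one looks better, which is the part that depends on the game.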