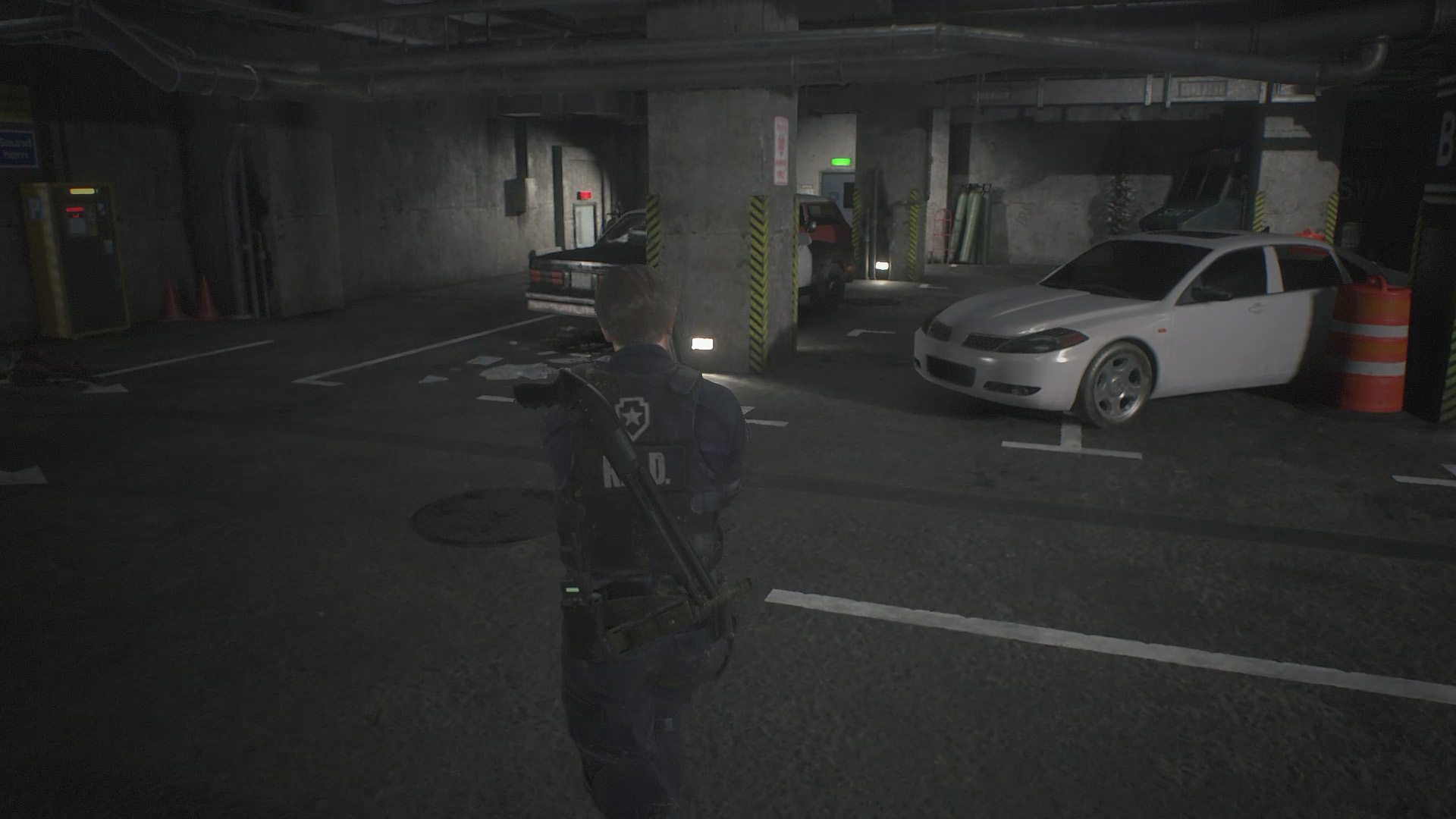

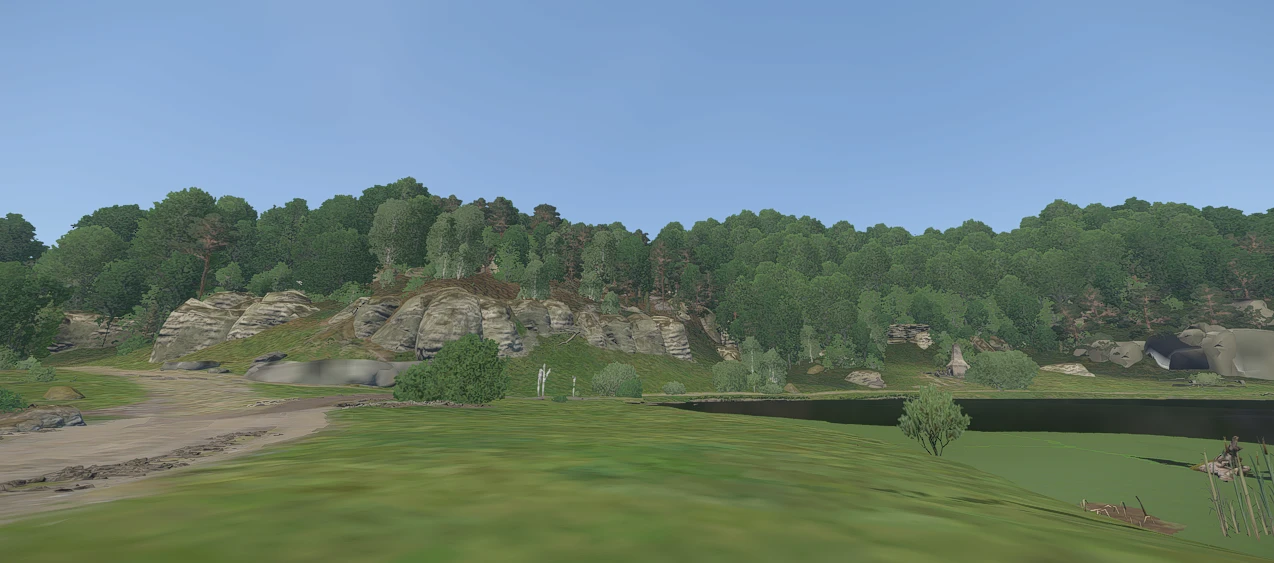

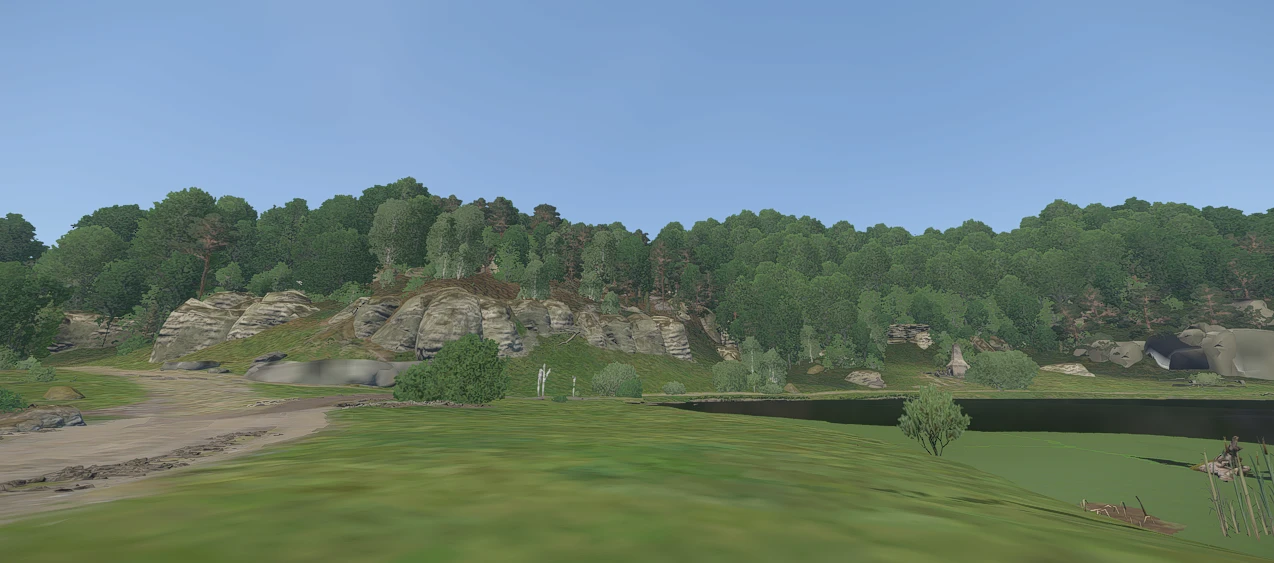

A setting like this could be the answer to games' optimisation problems, letting ANYONE play a modern game in toaster mode (or everybody's mode). This is a cool mod for Kingdom Come Deliverance 2, if you don't mind the game looking half-finished.

www.nexusmods.com

Ultra Low Graphics Mode

This mod sets the graphics extremely low, allowing you to maximize the game's performance at the cost of graphical fidelity. This lets you run the game at acceptable frame rates on systems with much lower specifications than the game's minimum requirements.

The resolution is forced to HD (1280x720) and VSync is turned off. If you want to change the resolution, you can do so at the top of the mod file, using Notepad. By changing various values in the mod file (or simply by deleting individual lines from it) you can easily bring the look of the game back up, closer to the normal "Low" settings. If you want the game to retain its original look, try the Very Low Graphics Mode instead.
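If you'd rather script those tweaks than edit by hand in Notepad, here is a rough Python sketch of the "change a value or delete a line" idea. It is only an illustration: it assumes the mod file is plain text with one `name = value` setting per line, and the setting names in the sample (`r_Width`, `r_Height`, `r_VSync`, `e_ViewDistRatio`) are placeholders, not confirmed contents of the actual mod file. The `edit_cfg` helper is hypothetical too.

```python
import re

# Assumed line shape: "name = value" (not confirmed for the real mod file).
SETTING = re.compile(r"^\s*(\w+)\s*=\s*(\S+)")

def edit_cfg(text, overrides=None, remove=()):
    """Return text with some settings changed and others deleted."""
    overrides = {k.lower(): v for k, v in (overrides or {}).items()}
    remove = {name.lower() for name in remove}
    out = []
    for line in text.splitlines():
        m = SETTING.match(line)
        name = m.group(1).lower() if m else None
        if name in remove:
            continue  # deleting a line hands that setting back to the game
        if name in overrides:
            line = f"{m.group(1)} = {overrides[name]}"
        out.append(line)
    return "\n".join(out)

# Hypothetical excerpt; the setting names are placeholders for illustration.
sample = """-- resolution block at the top of the file
r_Width = 1280
r_Height = 720
r_VSync = 0
e_ViewDistRatio = 10"""

edited = edit_cfg(
    sample,
    overrides={"r_Width": 1920, "r_Height": 1080},
    remove=["e_ViewDistRatio"],
)
print(edited)
```

Lines that don't look like a setting (comments, blank lines) pass through unchanged, so the rest of the file stays as the mod author wrote it. Back up the original file before trying anything like this.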

The mod doesn't interfere with or change DLSS/FSR settings, although I'd suggest leaving those off - the base resolution is already set very low and these technologies typically do not help much at such low fidelity/resolution anyway.
