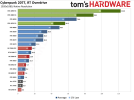

Edit: Also, I think the general discourse tends to undersell ray tracing. Rich's comparisons mainly focused on rasterization, I believe (Cyberpunk in performance mode, Immortals of Aveum, TLOU Part II, A Plague Tale: Requiem, Alan Wake 2). I think Frontiers of Pandora was the only game in his test suite with RT. If you enable RT, the performance gap grows to quite a bit more than the 100% advantage in favor of the 4070S. You're probably looking at a 120-150% uplift on average, and if the PS5 could handle path tracing, it'd be 200%. So I guess it's not all bad on that front, but even on a 4070S, ray tracing isn't that amazing. Current games are still largely built around traditional rendering, so RT isn't always impressive outside the usual suspects like Cyberpunk and Alan Wake 2.