Reminds me of this. It was the only time in my life my dad lost it laughing; he didn't like these shows, but when the TV exploded he lost it. I think we called it a technical adjustment. Because of its heavy-handed nature, the hammer will always be known in my house as the technical adjuster.

Digital Foundry Article Technical Discussion [2021]

- Thread starter: BRiT

- Status: Not open for further replies.

They have the better "anti aliasing"

Yeah, how can you romanticize something that made a high-pitched squeal when it was on (though maybe now my tinnitus-damaged ears couldn't hear it), plus the heat and weight?

https://www.provideocoalition.com/the_crt_replacement_is_here-_finally/

I don't know if there's anything that CRTs are better at than other screen types. It's certainly not the blacks; maybe viewing angle?

The "beam" of light that hits the dot-pitch mask in a CRT monitor still bleeds through the edges of the tiny holes, where it mixes with the neighboring pixels. So you always have a "natural AA" filter on a CRT monitor. That doesn't happen with an LCD (or whatever) screen. Every pixel is sharp and separated from the other pixels.
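To make the "natural AA" idea concrete, here is a minimal sketch that treats the beam bleed as a small Gaussian blur applied to one row of pixels. The Gaussian shape, the `sigma` value and the function names are assumptions chosen for illustration, not measurements of any real CRT.

```python
import math

def gaussian_kernel(sigma: float, radius: int) -> list[float]:
    """Normalized 1D Gaussian weights; stands in for the beam spreading into neighbors."""
    weights = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_row(row: list[float], kernel: list[float]) -> list[float]:
    """Convolve one row of pixel intensities with the kernel, clamping at the borders."""
    radius = len(kernel) // 2
    out = []
    for i in range(len(row)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), len(row) - 1)  # clamp index to the row
            acc += w * row[j]
        out.append(acc)
    return out

# A hard black-to-white edge, as a fixed-pixel panel would show it.
hard_edge = [0.0] * 4 + [1.0] * 4
# The same edge after the assumed beam bleed: the step becomes a short ramp.
soft_edge = blur_row(hard_edge, gaussian_kernel(sigma=0.8, radius=2))
print([round(v, 2) for v in soft_edge])
```

The pixels on either side of the step end up with in-between values, which is the same smoothing an anti-aliasing filter aims for, at the cost of the overall sharpness mentioned in the thread.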

A big problem CRTs always had was sharpness. LCDs have the sharpness (but not in motion) but are missing the color mixing at the pixel edges.

I really don't miss my 120Hz CRT.

Another reason the good old CRTs might look better: they were much smaller than today's displays. My 19" CRT was gigantic for a computer monitor at that time (I had a 14" CRT before). But now, with a 27" monitor (or 65" TV), the picture is a lot bigger and much sharper, so you can identify artifacts and aliasing much more easily.

Riddlewire

Regular

...PC connects to TVs just as nicely and comfortably as any console

Except for the BGR subpixel alignment of many TVs.

Although I suspect that was more of a problem in the days of 720p displays.

So the link was www.anecdotal.com

I am so annoyed I threw out our Sony Trinitron monitor.

It was so fun doing A/B tests with people who thought flat panels had good I.Q.

Putting the same movie on, side by side...even OLED looked FUBAR next to a proper CRT.

They always left with their head down.

(same thing with their "3D headsets" vs 7.1 THX sound btw...)

I am amazed (even though I should not be at my age) how fast people forget things and get used to lesser quality.

Our displays today are lacking in the same way that 3D sound is in games today.

People are in awe of Atmos sound...but it cannot hold a candle to the EAX 5.0 sound of the old days.

Today we have a current generation that thinks they are getting the best of tech today....and telling them otherwise tends to anger them..go figure.

It is really simple...image quality was lowered in order to get another form factor.

It is not a secret.

But it can (very amusingly) anger people...

lol

John Linneman and Rich Leadbetter take a look at Doom Eternal's next-gen patch - we've already checked out the PC game and came away impressed, so how does everything scale onto the PS5 and Xbox Series consoles? We've got three machines and eight modes in total to test - let's do it.

Silent_Buddha

Legend

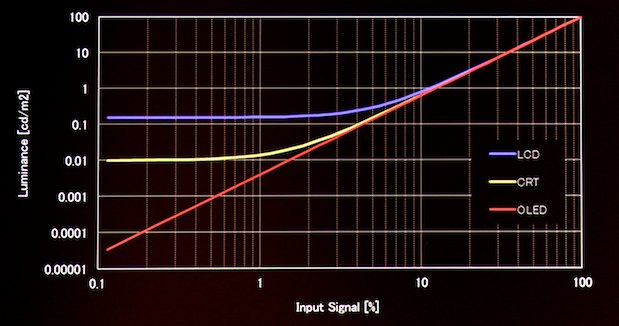

Eg. true black is not present on LCD, IPS or OLED displays (despite what false PR says)

Um, no. While true for LCD due to the need for a backlight, OLEDs have self-emissive pixels (well, actually the subpixels that make up each pixel). So when an OLED pixel is sent a signal for black, it turns off and it's actually a black pixel.

um mate, i still remember CRTs

Black was not black

https://www.google.com/amp/s/www.expertreviews.co.uk/tvs-entertainment/tvs/24899/the-tv-for-tv-producers-hands-on-with-sonys-oled-reference-monitors?amp

Yup, while CRTs have deeper blacks than LCDs, in a perfectly dark room, if you turned on a CRT and sent it a black screen, your room would no longer be perfectly dark. The black screen (well really dark grey

The best memories I have of gaming on CRTs are headaches and eye pain after long sessions.

If you gamed on console, then you'd unfortunately have been limited to either 50 Hz (PAL) or 60 Hz (NTSC) screen refresh rates. Depending on how sensitive you were to the screen strobing that slowly, this could cause headaches.

Luckily higher end desktop PC CRT displays could refresh the screen significantly faster than that. I found that for me, at 72 Hz and higher refresh, the chance of eye strain was greatly reduced compared to 60 Hz screen refresh. For consoles hooked up to a CRT TV, I'd just limit how long I used it and/or only use it in a very bright room.

For this reason, most ergonomic office CRT monitors were 72 Hz or higher.
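For a rough sense of scale, the refresh rates mentioned above translate into the following time between screen refreshes. This is plain arithmetic (period = 1000 ms / rate); the helper name is just for illustration.

```python
def refresh_period_ms(hz: float) -> float:
    """Time between two screen refreshes, in milliseconds."""
    return 1000.0 / hz

# PAL, NTSC, the 72 Hz office baseline, and a 120 Hz CRT for comparison.
for hz in (50, 60, 72, 120):
    print(f"{hz:>3} Hz -> {refresh_period_ms(hz):.2f} ms per refresh")
```

Going from 60 Hz to 72 Hz shortens each refresh interval by a little under 3 ms, and PAL's 50 Hz is about 3 ms longer than NTSC's 60 Hz.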

I can't even imagine how painful it would have been for me to use a PAL TV for anything. (/shudder). At least even in the EU, I believe PC displays were 60 Hz minimum, but I could be wrong about that.

Regards,

SB

Full DigitalFoundry Article @ https://www.eurogamer.net/articles/...-60fps-on-xbox-series-x-s-thanks-to-fps-boost

Dark Souls 3 now runs at 60fps on Xbox Series X/S thanks to FPS Boost

And we've tested it.

Dark Souls 3 has just received a new lease of life on Xbox Series consoles, thanks to the transformative powers of FPS Boost. The From Software classic now targets 60 frames per second on the new wave of Microsoft consoles, replacing the somewhat wobbly 30fps of the Xbox One rendition of the game. We had early access to the FPS Boosted rendition of the game and it certainly does the job, delivering nigh-on flawless performance on Xbox Series consoles.

This is a much-requested upgrade for Xbox Series X and S owners, bringing the game closer into line with its performance profile on PS5. Dark Souls 3 released on last-gen systems in 2016 with a firm 30fps cap, but many months later, a PlayStation 4 Pro patch removed the limit, offering frame-rates up to 60fps. Unfortunately, the value of the upgrade was questionable at the time, owing to a highly variable level of performance on Sony's enhanced machine. However, in the long run, it worked out - PlayStation 5's backwards compatibility horsepower locks the game to 1080p60.

Unfortunately, From Software never went back to the Xbox One version of Dark Souls 3 to add One X support, meaning that 900p30 has been the upper limit of performance on Xbox consoles since the game's original launch. Not only that, in common with From's other 30fps console offerings, inconsistent frame-pacing added a certain degree of judder to the game, something baked into the engine that extra back-compat horsepower could not address. Today's FPS Boost upgrade overrides the frame-rate cap to give Xbox Series X and S the same smooth 60fps experience as PS5, with no judder, hitches or stuttering.

...
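As a side note on the frame-pacing point in the excerpt: a game can average 30fps and still judder if frames are not shown for equal lengths of time. The sketch below uses made-up frame times on a 60 Hz display (one refresh, then three refreshes, repeated) purely to illustrate the idea; it is not captured data from Dark Souls 3.

```python
from statistics import mean, pstdev

def summarize(frame_times_ms: list[float]) -> dict[str, float]:
    """Average frame rate plus the spread of frame times (a simple judder proxy)."""
    avg = mean(frame_times_ms)
    return {"avg_fps": 1000.0 / avg, "jitter_ms": pstdev(frame_times_ms)}

VSYNC_MS = 1000.0 / 60.0  # one refresh on a 60 Hz display

# Evenly paced 30fps: every frame is held for exactly two refreshes.
even = [2 * VSYNC_MS] * 60
# Badly paced 30fps (hypothetical pattern): frames alternate between one and three refreshes.
uneven = [VSYNC_MS, 3 * VSYNC_MS] * 30

print(summarize(even))
print(summarize(uneven))
```

Both sequences report the same average frame rate, but the second has a frame-time spread of a full refresh interval, which is the kind of unevenness that reads as judder.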

So, now we can choose if we want the game to be 900p@60 + AF (Xbox Series consoles) or 1080p@60 on PS5.

From Software should really have released at least a res-update for PS4 Pro. This way it is a little bit hard to decide ^^

ChuckeRearmed

Regular

Were the animations or physics tied to framerate in that game on Xbox?

It's nice they have another way to do it, as it may be applicable for other titles.

But given that they had to work with From even a little, they should've just done a proper patch improving resolution and adding 60fps.

The amount of effort MS put into this title could've easily funded a patch.

Shame fps boost didn't have a 120fps mode for this, would've made the 900p more palatable.

Then you have things like Fallout 76 (1P), which is still getting content yet hasn't received a patch and relies on FPS Boost.

snc

Veteran

Usually there are different speedrunning categories for a game depending on fps.

Yeah, better than nothing I guess. Great news, as many people still do speedruns and generally enjoy Souls games. But I fully agree, no proper patch is a real shame.

DF written article @ https://www.eurogamer.net/articles/...of-duty-warzone-ps5-120hz-back-compat-evolved

Call of Duty: Warzone at 120Hz - has PS5 back-compat evolved?

And how does it stack up against Series X?

The recently released 120Hz update for Call of Duty: Warzone for PlayStation 5 users is a welcome boost - it doubles performance over the prior version of the game, doing so with no noticeable impact to image quality. More importantly, its release may well signify that Sony is beefing up its backwards compatible support for its latest console, bringing it closer into line with the kind of features available on Xbox Series consoles, when running code designed for last-gen machines.

So, in this piece, I'll be considering backwards compatibility features in general for both Microsoft and Sony machines, while at the same time looking at Warzone specifically. But to begin with, there are a couple of caveats and clarifications required for the game's 120Hz support on PlayStation 5. First of all, Activision's patch notes suggest that an HDMI 2.1 screen is required to achieve 120Hz gaming - but this is not the case. If you have an HDMI 2.0 display (or indeed capture card), you can still achieve 120fps - however, the image will be internally downscaled from native resolution to 1080p.
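A back-of-the-envelope check shows why a 4K signal at 120Hz does not fit over HDMI 2.0 while 1080p at 120Hz does. The sketch assumes 8-bit RGB (24 bits per pixel), counts active pixels only (no blanking intervals), and uses HDMI 2.0's 18 Gbit/s link rate with 8b/10b encoding, which leaves roughly 14.4 Gbit/s for video data. It says nothing about how the PS5 picks its output mode.

```python
HDMI_2_0_RAW_GBPS = 18.0
# 8b/10b encoding: 8 data bits are carried in every 10 bits on the wire.
HDMI_2_0_DATA_GBPS = HDMI_2_0_RAW_GBPS * 8 / 10

def active_video_gbps(width: int, height: int, hz: int, bits_per_pixel: int = 24) -> float:
    """Uncompressed data rate for the visible pixels only, in Gbit/s."""
    return width * height * hz * bits_per_pixel / 1e9

def fits_hdmi_2_0(width: int, height: int, hz: int) -> bool:
    """True if the active-pixel data rate fits within the assumed HDMI 2.0 budget."""
    return active_video_gbps(width, height, hz) <= HDMI_2_0_DATA_GBPS

for name, (w, h, hz) in {
    "4K60": (3840, 2160, 60),
    "4K120": (3840, 2160, 120),
    "1080p120": (1920, 1080, 120),
}.items():
    print(f"{name}: {active_video_gbps(w, h, hz):.2f} Gbit/s, fits: {fits_hdmi_2_0(w, h, hz)}")
```

Real links also carry blanking, so actual requirements are somewhat higher than these figures, but the conclusion for these three modes is the same.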

...

How they compare brings us onto a different topic - how back-compat works on each console. Microsoft has been open about this. With its SDK, games can query the hardware to find out exactly what system it is running on. From there, added modes and features can be enabled - like higher resolutions, 120Hz support or even different graphics settings, as seen in Cyberpunk 2077, for example. Sony has not been forthcoming on how this all works on PS5, but having now seen developer documentation, it does now seem that it works in the same way. There's an 'sceKernelisProspero' system call developers can use that returns 1 if running on PS5, 0 if it is not (Prospero is Sony's codename for PS5).
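The pattern described here (ask the platform which hardware you are on, then switch on extra modes) can be sketched as follows. Everything in this block is hypothetical: `RenderSettings`, `choose_settings` and the frame-rate values are invented for illustration, and the integer flag merely stands in for the 1/0 result the article attributes to the system call. It is not SDK code.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RenderSettings:
    target_fps: int
    enable_120hz_output: bool

def choose_settings(platform_flag: int) -> RenderSettings:
    """Pick settings from a 1/0 platform flag (1 = newer console, 0 = older console).

    The flag is a stand-in for whatever the real platform query returns;
    the chosen values are illustrative only.
    """
    if platform_flag == 1:
        # Newer hardware detected: opt in to the higher-refresh mode.
        return RenderSettings(target_fps=120, enable_120hz_output=True)
    # Otherwise keep the behavior the game originally shipped with.
    return RenderSettings(target_fps=60, enable_120hz_output=False)

print(choose_settings(1))
print(choose_settings(0))
```

The point of the design is that one last-gen build can carry both code paths and decide at runtime, which matches the article's observation that Warzone is still a PS4 app.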

So, if that is the case, why have we typically seen less ambitious back-compat support on PS5 compared to Series X? It's not 100 per cent clear, but the most likely explanation is that PS5 adds CPU and GPU horsepower from the new hardware and also benefits from faster storage. However, system level limitations from PlayStation 4 Pro remain in effect - and fundamentally, this suggests that even with back-compat offering so much more over PS4 Pro, there's likely no scope for overcoming the Pro's relatively limited 5.5GB of useable memory. Meanwhile, on the Xbox side, Series X will be able to address at least the same minimum 9GB pool of RAM as Xbox One X. Things may be changing on the Sony side, however. 120Hz output is not supported at the system level on PS4 - but clearly it is happening with Warzone on PS5, which we can confirm is still very much a PS4 app.

Deleted member 11852

Guest

Then you have things like Fallout 76 (1P), which is still getting content yet hasn't received a patch and relies on FPS Boost.

And enabling FPS boost can impact visual quality elsewhere. I don't play 76 but I still play Fallout 4 and started a new play through using FPS boost on my Series X. This hits 60fps but drops the resolution down to 1080p and reduces some other graphical settings too. But if you disable FPS boost and use the 60fps mod, you get 4K/60 with all the usual bells and whistles.
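To put that resolution drop into numbers, here is a quick pixel-count comparison. It assumes "4K" means 3840x2160 and "1080p" means 1920x1080; the helper name is only for illustration.

```python
def pixel_count(width: int, height: int) -> int:
    """Number of pixels in one frame."""
    return width * height

pixels_4k = pixel_count(3840, 2160)
pixels_1080p = pixel_count(1920, 1080)
ratio = pixels_4k / pixels_1080p

print(f"4K: {pixels_4k:,} px, 1080p: {pixels_1080p:,} px, ratio: {ratio:.0f}x")
```

So under those assumptions the FPS Boost version renders a quarter of the pixels per frame compared with the 4K/60 mod setup.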

I think the Xbox platform team are amazing, including FPS Boost.

But first-party titles relying on it, especially when XSX has to drop to the XO versions of games, is crazy.

Plus XSS has to run at a much lower resolution than necessary.

Not asking for more than default resolution and fps boost at the minimum.

Great video and interview. Lots of good stuff.

-VRS tier-2 is great and reduces the need for full frame DRS for an overall more consistent experience.

-Raytraced reflections were used to realize the original artist vision that wasn’t fully possible with SSR and cube maps. The end result sticks to the original design goals.

-They built a completely raytraced renderer for Doom Eternal! This was to ensure RT compatibility with their unified lighting system but it was obviously too slow. End result was that RT has access to all assets and effects including particle systems.

-They would prefer to roll their own upscaling solution but integrating DLSS was a good experience and it produced good results. I could be wrong but I got the feeling the engine team didn’t make this decision and it came down from above.

-Developers have experience with offline RT and are stoked to have access to usable hardware RT performance to bring some of those concepts to real-time.

Silent_Buddha

Legend

-They would prefer to roll their own upscaling solution but integrating DLSS was a good experience and it produced good results. I could be wrong but I got the feeling the engine team didn’t make this decision and it came down from above.

Yeah, I get the feeling that they only did DLSS because they "had" to. They would have preferred a solution that worked on all platforms and all GPUs. This would be consistent with their past behavior (using Vulkan instead of DX to allow easier implementation on other platforms such as Linux).

Maybe we'll see them roll their own upscaling solution for their next project?

Regards,

SB
