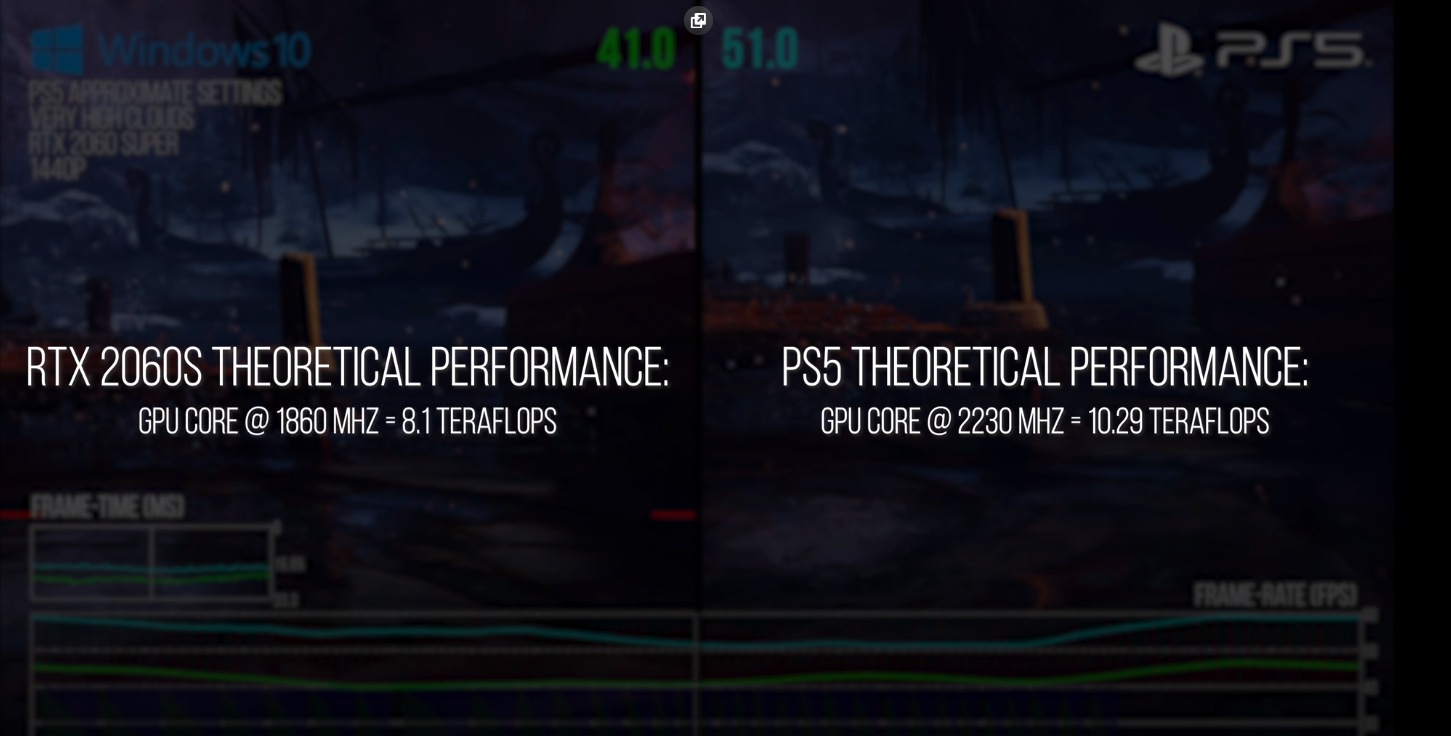

In other words, the PS5 and XSX can perform better than the 5700XT, which only leads the 5700 by 15%.

It seems the problem is not the peak performance of the XSX. It is that the PS5 has fewer bottlenecks for rasterization and 120fps. When RT is enabled, the XSX seems to have the same or several percent better performance.

Why would being faster than the 5700XT mean the XSX is reaching its peak performance? Based on its specs it should be easily exceeding the 5700XT's performance. The PS5 should also be faster, so I don't think this really tells us anything. To me the PS5 looks to be performing exactly as it should vs the 5700XT, which means the XSX is underperforming if it's slower than the PS5.

Expected results given the PC benchmarks. Turing will not age well.

Turing seems to have the feature set to age reasonably, unlike Kepler for example, which deliberately scaled back GPGPU capabilities right before a console generation that featured very strong GPGPU capabilities for the time. Naturally it will get slower compared with the consoles over time due to increasingly less driver and developer optimisation, and increasingly more console optimisation. But that will stand for any architecture. On a feature set level it seems very well prepared though, especially given its stronger RT capabilities, which should go a long way to mitigating the usual optimisation issues.

The game will use the Pro and XOX version on next gen, how is that meaningful?

It should be self-evident that using the same settings across platforms as a basis for comparison is the most meaningful way to do things. If the suggestion is that the comparison doesn't make sense because the next gen consoles aren't using their full potential on account of using similar settings to the previous generation, then I don't think that makes sense. It's the very use of those previous-gen-like settings (they aren't identical) that allows the new consoles to hit 60fps at relatively high resolutions. And at the same time, the PC GPUs they are being compared to are also not able to stretch their legs using 'last gen settings'. With the exception of the 5700, the PC GPUs stand to gain as much or even more (if RT is used) from true next gen settings that leverage the full capabilities of DX12U. In fact the argument can be made that leaving RT unused unfairly penalises Turing in such a comparison. The reality is that, as a design, Turing's peak performance can only be reached when both RT and DLSS are fully utilised, because the architecture dedicates die space to these features that goes idle if they're not used. Much like not taking advantage of CBR on the PS4P.

I am guessing the 399 dollar PS5 is outperforming a 1200+ dollar PC here?

Would it be relevant for historical purposes if we get to know the price of the PC which was used?

I'm not sure that it would be relevant. Said PC was available 2 years before these consoles launched so what value would you place on having next gen console level performance 2 years before those next gen consoles are launched? Using more modern components it should be possible to get a console equivalent experience for around $1000 at the moment.

To be honest though, I do find these "but that PC cost 3x as much as the console" arguments quite tiresome because they ignore so many other factors, not the least of which is that no-one buying a whole new PC at the start of a new console generation is doing so because it offers a good value proposition. It's a bit like telling the guy who just bought a BMW M8 that he made the wrong choice because it doesn't go 5x faster than a Ford Focus.

You still cannot get the PS5's impressive storage I/O on the PC no matter how much you spend.

True, but I think you can buy the hardware which will allow it. You'll just be constrained by software until DirectStorage comes along.

Because the software created by developers may not necessarily expose it. Nvidia's higher geometry throughput has never materialized into anything outside of their over-tessellated GameWorks effects. It's not certain to me that their RT advantage will either.

I thought Nvidia's strong front end performance was considered to be a driving factor behind their performance lead over the past few generations? At least until things started to become more compute focused during the previous console generation. I'm not going to start digging through old reviews, but I'm sure I've read this on numerous occasions over the years. Ultimately it will come down to how much the consoles push RT usage. If it's used very sparingly then Nvidia's advantage may well be nullified outside of Nvidia-sponsored titles. But if the consoles push their own RT capabilities to the limits then this should allow Turing and Ampere to stretch their legs in relation to RDNA2.

Along with flagging the differences between PS5 settings and PC settings.
