
Current Generation Games Analysis Technical Discussion [2022] [XBSX|S, PS5, PC]

- Thread starter BRiT


Interesting, it isn't all that crazy much data being transferred after all.

It's definitely more than I've seen in any last gen games. But it's also a bit lower than I thought given that these are multiple minute runs.

I obviously can't test burst reads though so keep that in mind. The averages are probably misleading in this regard.

Great job. What you should do is compare with multiplatform games.

On a different note, I just got an extra drive in my PS5 and did a bit of testing via the drive's SMART data and CrystalDiskInfo. I haven't seen anyone do this and figured it would be interesting to see how much data is flowing through, since it's impossible to check on the console.

Here is where I started out.

I first tried R&C: loading into the main menu reads ~2GB from the disk. I then booted back in and started a new game, and the total reads by the first checkpoint were ~18GB, including the main menu and intro sequence. I then reloaded the checkpoint (bypassing the intro), which added another ~5GB. Since we already know that booting to the menu reads ~2GB, loading the checkpoint accounts for ~3GB, leaving somewhere in the region of 13GB streamed in during the intro.
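The subtraction above is easy to lose track of, so here it is as a few lines of Python. The numbers are the approximate GB figures from the post; the variable names are just labels for this sketch.

```python
# Approximate cumulative host reads (GB) reported in the post above,
# taken from the drive's SMART counter via CrystalDiskInfo.
menu_boot_gb = 2          # booting into the main menu
new_game_total_gb = 18    # menu + intro sequence + first checkpoint
checkpoint_reload_gb = 5  # boot to menu again, then load the checkpoint

# Loading the checkpoint costs the reload total minus the menu boot.
checkpoint_gb = checkpoint_reload_gb - menu_boot_gb

# Whatever is left of the new-game total was streamed during the intro.
intro_gb = new_game_total_gb - menu_boot_gb - checkpoint_gb

print(f"checkpoint load: ~{checkpoint_gb} GB, intro streaming: ~{intro_gb} GB")
# prints: checkpoint load: ~3 GB, intro streaming: ~13 GB
```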

I also did a bit of testing with Demon's Souls but not as granular. The long and short of it is that spawning into three different worlds read ~15GB from the drive. I then created a savegame at the start of World 1-1 and played until the Phalanx boss (which took me 30 minutes) and the drive had added another 100GB of reads to the counter. My reasoning was that this should give us an idea about the game's streaming rate.
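To turn SMART counters like these into a rate, you take the difference between two readings and divide by the elapsed time. This sketch assumes an NVMe drive (the PS5's M.2 expansion slot takes NVMe SSDs), whose SMART/Health log reports "Data Units Read" in units of 1,000 × 512 bytes; the helper names are hypothetical. Applied to the Demon's Souls run (~100GB in ~30 minutes) it only yields an average, which says nothing about burst reads.

```python
# NVMe SMART / Health log: one "data unit" = 1,000 x 512-byte blocks.
NVME_DATA_UNIT_BYTES = 1000 * 512


def data_units_to_gb(units: int) -> float:
    """Convert a raw NVMe 'Data Units Read' delta to decimal gigabytes."""
    return units * NVME_DATA_UNIT_BYTES / 1e9


def average_read_rate_mb_s(read_gb: float, minutes: float) -> float:
    """Average read rate in decimal MB/s over the elapsed interval."""
    return read_gb * 1000 / (minutes * 60)


# Demon's Souls run from the post: ~100 GB read in ~30 minutes of play.
rate = average_read_rate_mb_s(100, 30)
# This is an average only; short bursts far above it are invisible here.
print(f"average streaming rate: ~{rate:.1f} MB/s")
```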

I would test R&C some more but I haven't found time to start playing it yet. But I hope someone finds this interesting.

Good use of the SSD. It would be worthwhile to compare against a last-generation game that has been upgraded to PS5 to get an idea of the streaming differences between generations.

It's definitely more than I've seen in any last gen games. But it's also a bit lower than I thought given that these are multiple minute runs.

I obviously can't test burst reads though so keep that in mind. The averages are probably misleading in this regard.

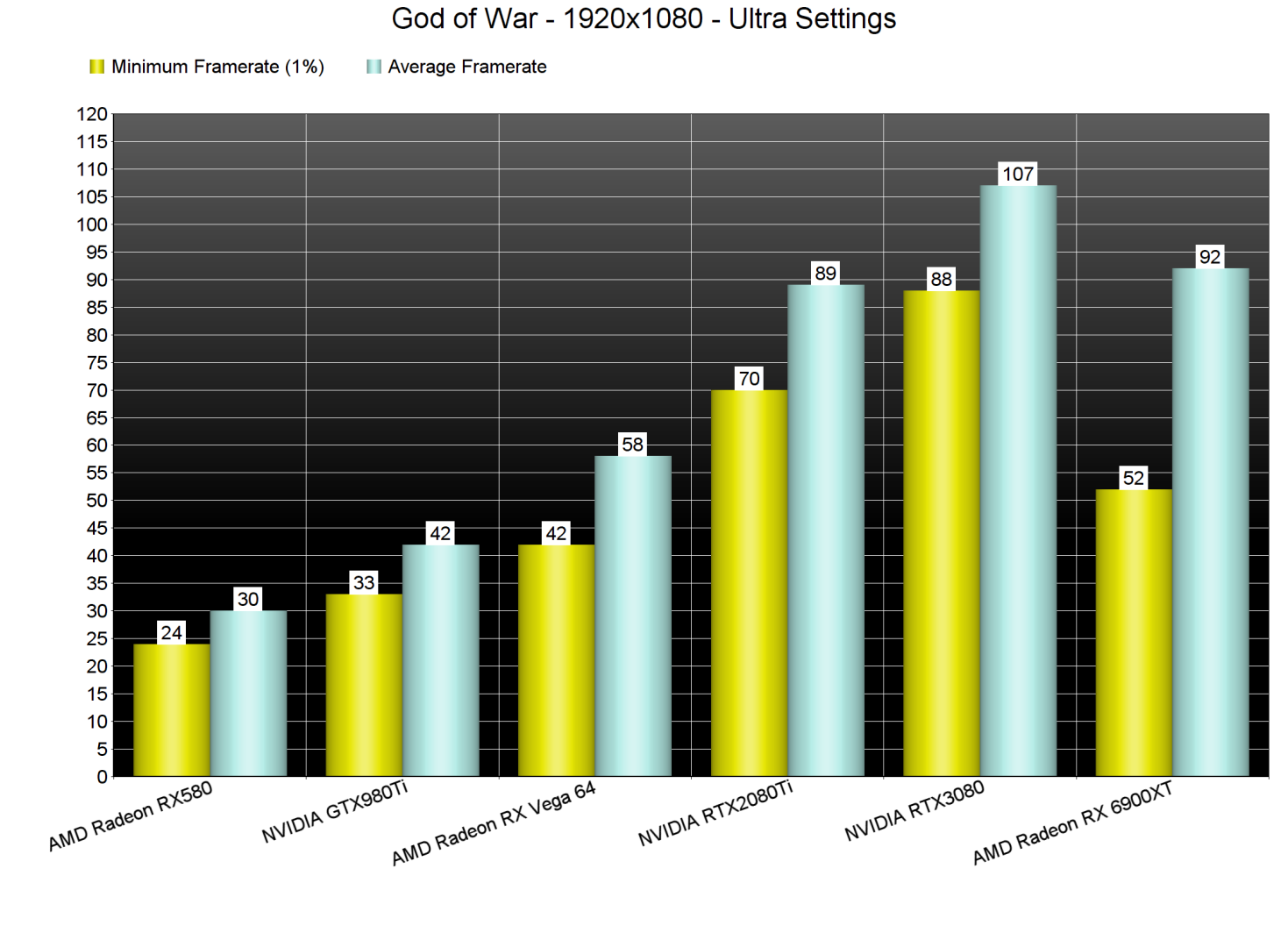

God of War seems to have a lot of DX11 CPU overhead with AMD drivers.

https://www.dsogaming.com/pc-performance-analyses/god-of-war-pc-performance-analysis


Although the average framerate of the AMD Radeon RX 6900XT was around 90fps, there were some drops to low 50s. This is mainly due to AMD’s awful DX11 drivers. As we’ve reported multiple times, there is an additional driver overhead on AMD’s hardware in all DX11 games. AMD hasn’t done anything to address this issue, and we don’t expect the red team to ever resolve this. You can easily reproduce this DX11 overhead issue on AMD’s GPUs. All you have to do is turn Kratos (in the scene we used for our benchmarks) and look at the road that leads to your house.

Now while this sounds awful for AMD users, we have at least some good news. By dropping the settings to High or Original, we were able to get a constant 60fps experience on the RX6900XT, even in 4K. Our guess is that the LOD, which basically hits more the CPU/memory, is the culprit behind these DX11 performance issues on AMD’s hardware. As such, we suggest using the High settings on AMD’s hardware (which are still higher than the Original/PS4 settings).


Deleted member 11852

Guest

He's one man =P It's a lot of work imo. It's really a full-time job and I'm not sure it's his full-time job; more of a hobby/side business, I think.

NXGamer is Michael Thompson, who is a game dev. I believe he worked at EA and Ubisoft - I don't know where he is now.

Not sure how his 2070 (pseudo 2070s) results here are so much lower than what I measure on a real 2070 Super at 1512p original settings.

My 2070 Super gets something like 2x the framerate at that moment. I use FCAT for my PC testing, though...

Could this be partly because NXG is running with hard vsync on, i.e. no adaptive vsync? My understanding is that in most console titles, if a frame misses the target frame time (say 16.6ms), adaptive vsync kicks in and you get tearing instead, so the actual frame time might only be 17ms and the average framerate will barely be impacted.

But in his PC test it looks as though any frame that misses 16.6ms drops right down to 33.3ms, so that frame registers as 30fps. So while the actual unlocked framerate might be something like 57fps, the hard vsync makes it artificially appear much lower.
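The difference between the two presentation modes can be shown with a toy frame-time model. This is a simplified sketch, assuming plain double-buffered vsync for the "hard" case (a late frame waits for the next refresh) and an idealised adaptive vsync that presents late frames immediately; triple buffering and real frame pacing behave differently. The 17.5ms render time is a hypothetical value chosen to match the "~57fps unlocked" example.

```python
import math

REFRESH_MS = 1000 / 60  # one 60 Hz refresh interval (~16.7 ms)


def hard_vsync_ms(render_ms: float) -> float:
    """Double-buffered vsync: a frame can only be shown on a refresh boundary,
    so a late frame waits for the next one (17.5 ms -> ~33.3 ms)."""
    return math.ceil(render_ms / REFRESH_MS) * REFRESH_MS


def adaptive_vsync_ms(render_ms: float) -> float:
    """Idealised adaptive vsync: on-time frames still wait for the refresh,
    but late frames are presented immediately (with tearing)."""
    return max(render_ms, REFRESH_MS)


def average_fps(frame_times_ms) -> float:
    return 1000 * len(frame_times_ms) / sum(frame_times_ms)


# Hypothetical run: every frame renders in 17.5 ms (~57 fps unlocked).
render_times = [17.5] * 60
hard = average_fps([hard_vsync_ms(t) for t in render_times])
adaptive = average_fps([adaptive_vsync_ms(t) for t in render_times])
print(f"hard vsync: {hard:.1f} fps, adaptive vsync: {adaptive:.1f} fps")
```

A frame that misses the refresh by only 0.8ms costs a whole extra interval under hard vsync, which is why the average can roughly halve.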

Perhaps the rest can be made up by the CPU and GPU difference but it still seems like a lot.

NXGamer is Michael Thompson, who is a game dev. I believe he worked at EA and Ubisoft - I don't know where he is now.

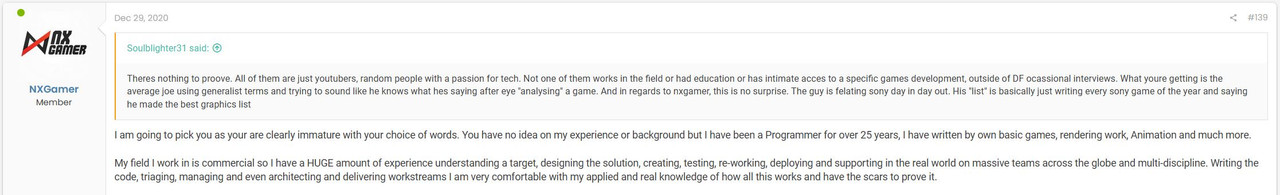

He's not and never was a dev. I remember his rant over here, because each and every one of his articles gets picked apart, and he usually tries to mock people or, if someone with a technical edge comes along, just stops responding.

He's not and never was a dev

You mean Game Developer?

Yes, by his own admission there. He says he has written his own basic games, meaning some small indie projects for himself? I vaguely remember other instances when people were having issues with his articles (the guy literally NEVER puts out something where people don't find numerous mistakes) where he said he works in networking, I think? But it's just a fuzzy memory; I'm not sure.

snc

Veteran

You posted a screenshot of his post where he wrote he has been a programmer for 25 years ;d

Yes, in other fields. The guy is so arrogant that he would have screamed it from the highest skyscraper if he had worked at EA or Ubisoft or whatever. You can also tell he never worked in this field from the fact that he's been called out on his lack of training and knowledge for at least half a decade by now.

snc

Veteran

Still, 25 years of software programming and some experience creating smaller games (rendering, animation) doesn't sound bad for a YouTube content creator. To be fair, the only channel that can exceed this is More Cherno, as this guy is a former DICE developer and is creating his own game engine, though he posts very rarely.

Yes, in other fields. The guy is so arrogant that he would have screamed it from the highest skyscraper if he had worked at EA or Ubisoft or whatever. You can also tell he never worked in this field from the fact that he's been called out on his lack of training and knowledge for at least half a decade by now.


DavidGraham

Veteran

NVIDIA doesn't suffer an overhead under DX11, only AMD does. NVIDIA suffers greatly under DX12, though.

My guess is that the heart of the problem is Nvidia's high driver overhead coupled with a relatively slow CPU particularly unsuited to handling that. But it's almost like there's more to it than that, because his PC consistently performs badly.

That example is just HUB being dumb. Battlefield V is an incredibly CPU-limited game, especially with large numbers of players, and the user is testing the battle royale mode in two different playthroughs with probably two different player counts. The 1660 Ti is operating at 50% capacity because the 3770K CPU is being hammered by the larger player count, while the R9 390 is operating at 100% because the CPU is relatively spared.

It is interesting to see just how differently a particular setup can represent the particular components that are in it, though.
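The CPU-bound argument about the 1660 Ti can be put as a crude bottleneck model. This is a simplification, assuming CPU and GPU work are fully pipelined so the slower side sets the frame time; the millisecond figures are hypothetical, not measurements from that video.

```python
def frame_time_ms(cpu_ms: float, gpu_ms: float) -> float:
    """With CPU and GPU work pipelined, the slower of the two sets the pace."""
    return max(cpu_ms, gpu_ms)


def gpu_busy_fraction(cpu_ms: float, gpu_ms: float) -> float:
    """Share of each frame the GPU spends working instead of waiting on the CPU."""
    return gpu_ms / frame_time_ms(cpu_ms, gpu_ms)


# Hypothetical numbers: a big player count pushes CPU time to 20 ms per frame
# while the GPU only needs 10 ms, so the GPU sits idle half the time.
print(gpu_busy_fraction(cpu_ms=20, gpu_ms=10))  # 0.5
print(gpu_busy_fraction(cpu_ms=8, gpu_ms=12))   # 1.0 (GPU-bound)
```

In this model a faster GPU does nothing for the first case; only reducing CPU time per frame raises the framerate.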

Still, 25 years of software programming and some experience creating smaller games (rendering, animation) doesn't sound bad for a YouTube content creator. To be fair, the only channel that can exceed this is More Cherno, as this guy is a former DICE developer and is creating his own game engine, though he posts very rarely.

What counts is being able to correctly recognize the reason for an observation on a consistent basis. I don't care if someone had been an engineer on Unreal Engine for the last 25 years; if they were consistently wrong (not saying that's absolutely true of NXG) with their technical analysis/setups, they shouldn't be on YouTube.

Plus, it's simple: you can have YouTubers with relatively limited technical knowledge producing similar videos with little problem. It's all hypothesizing vs. making declarative statements. "Could this be", "I wonder if the cause is" or "maybe it's" are simple ways to avoid making false statements. We do it here all the time.



Deleted member 11852

Guest

He's not and never was a dev. I remember his rant over here, because each and every one of his articles gets picked apart, and he usually tries to mock people or, if someone with a technical edge comes along, just stops responding.

Possibly there are two Michael Thompsons in the game industry. One certainly has EA and Ubisoft game credits, but I am absolutely certain NXGamer has described himself as a dev in some of his YouTube videos.

I'm neither on Team Pro-NXGamer nor Team NXGamer-Must-Die, so I don't really care; I'm just chipping in what I've seen stated.

snc

Veteran

Yeah, but I don't know of one channel that constantly makes mistakes, and I'm happy that I can check results across a few (NXGamer, DF, VGTech).

What counts is being able to correctly recognize the reason for an observation on a consistent basis. I don't care if someone had been an engineer on Unreal Engine for the last 25 years; if they were consistently wrong (not saying that's absolutely true of NXG) with their technical analysis/setups, they shouldn't be on YouTube.

Plus, it's simple: you can have YouTubers with relatively limited technical knowledge producing similar videos with little problem. It's all hypothesizing vs. making declarative statements. "Could this be", "I wonder if the cause is" or "maybe it's" are simple ways to avoid making false statements. We do it here all the time.

NVIDIA doesn't suffer an overhead under DX11, only AMD does. NVIDIA suffers greatly under DX12, though.

I think there's a bit more to it than that, though I may be wrong. It was a few years ago that I watched / read something on this.

My understanding is that Nvidia's DX11 uses software scheduling and the driver can spread draw call processing over many threads. So that can lead to higher GPU utilisation vs AMD (less likely to be bottlenecked by a single thread), but it can also lead to a greater total CPU workload. In the case of a highly threaded, CPU intensive game, perhaps that could become a factor.

I'm also wondering how first-gen Zen's inter-CCX communication might impact Nvidia's driver, assuming the above is correct and still true.

Edit: this might explain what I'm trying to get at

Possibly there are two Michael Thompsons in the game industry. One certainly has EA and Ubisoft game credits, but I am absolutely certain NXGamer has described himself as a dev in some of his YouTube videos.

I'm neither on Team Pro-NXGamer nor Team NXGamer-Must-Die, so I don't really care; I'm just chipping in what I've seen stated.

Likely different people; it's rare to go from engineer to artist or vice versa. Even if you're a game dev, even if you're an engineer, and even if you work on stuff like the engine, it's easy to be completely unqualified to discuss graphics; it's pretty niche. I know multiple software engineers in games who know very little about graphics. Touting that you're "an engineer" so you must be an expert is not something I'd expect somebody who actually knows the field to say.

Silent_Buddha

Legend

I think there's a bit more to it than that, though I may be wrong. It was a few years ago that I watched / read something on this.

My understanding is that Nvidia's DX11 uses software scheduling and the driver can spread draw call processing over many threads. So that can lead to higher GPU utilisation vs AMD (less likely to be bottlenecked by a single thread), but it can also lead to a greater total CPU workload. In the case of a highly threaded, CPU intensive game, perhaps that could become a factor.

I'm also wondering how first-gen Zen's inter-CCX communication might impact Nvidia's driver, assuming the above is correct and still true.

Edit: this might explain what I'm trying to get at

Yeah, NV's DX11 drivers are more CPU-heavy but use the CPU better, as their multithreaded drivers spread the load across multiple cores much better than AMD's do. In older titles, or titles that only really stress a few cores, this leads to significantly better CPU usage than AMD, assuming your cores aren't occupied by other tasks.

That last point is where NV drivers stumble at times for me. It's rare that my machine isn't multi-tasking "something", so I rarely play a game without something else using the CPU. Back when I originally bought the 1070, I immediately noticed that, depending on the game, performance would be less consistent on the 1070 than on the R9 290 it was replacing. Looking at CPU usage in like-for-like scenarios, it was easy to see that this happened almost 100% of the time when my CPU was being used at or near capacity. In the same situation the R9 290 would have much more consistent performance, with little impact from the CPU being at or near capacity.

Of course, the flip side is that when the CPU isn't at or near capacity, the NV DX11 driver makes much better use of CPU resources. So in a situation where primarily one CPU thread is being pushed to capacity, the NV driver does significantly better than the AMD driver, assuming nothing other than the game is pushing the other cores to capacity.
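A deliberately crude toy model can make this trade-off concrete. Everything in it is an assumption for illustration: the 30% extra CPU work for the multithreaded path (`overhead`), the half-core share when no cores are idle (`contended_share`), and the per-frame costs are made-up numbers, not measurements of either vendor's driver. It also assumes the game's render thread always keeps its own core.

```python
def single_thread_driver_ms(game_ms: float, driver_ms: float) -> float:
    """Driver work runs serially on the game's render thread
    (assumed here to keep its own core regardless of other load)."""
    return game_ms + driver_ms


def multi_thread_driver_ms(game_ms: float, driver_ms: float, idle_cores: int,
                           overhead: float = 1.3,
                           contended_share: float = 0.5) -> float:
    """Driver work is farmed out to worker threads running alongside the game
    thread. Total driver work grows by `overhead`; it is split across idle
    cores, or squeezed into `contended_share` of a core when none are idle."""
    effective_cores = idle_cores if idle_cores > 0 else contended_share
    return max(game_ms, driver_ms * overhead / effective_cores)


game, driver = 10.0, 8.0  # hypothetical per-frame CPU costs in ms
print(single_thread_driver_ms(game, driver))               # 18.0
print(multi_thread_driver_ms(game, driver, idle_cores=3))  # 10.0: driver work hidden
print(multi_thread_driver_ms(game, driver, idle_cores=0))  # ~20.8: worse than 18.0
```

With spare cores the multithreaded path wins comfortably; once background tasks eat those cores, its extra total work makes it the slower option, which matches the behaviour described above.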

Regards,

SB

